Error Correcting Romaji-Kana Conversion for Japanese Language Education

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Man'yogana.Pdf (574.0Kb)

The origin of man'yōgana. John R. Bentley, Northern Illinois University. Bulletin of the School of Oriental and African Studies, Volume 64, Issue 01, February 2001, pp. 59–73. DOI: 10.1017/S0041977X01000040. Published online: 18 April 2001. Link to this article: http://journals.cambridge.org/abstract_S0041977X01000040

1. Introduction. The origin of man'yōgana, the phonetic writing system used by the Japanese, who originally had no script, is shrouded in mystery and myth. There is even a tradition that prior to the importation of Chinese script, the Japanese had a native script of their own, known as jindai moji (神代文字, age of the gods script). Christopher Seeley (1991: 3) suggests that by the late thirteenth century, Shaku nihongi, a compilation of various earlier commentaries on Nihon shoki (Japan's first official historical record, 720), circulated the idea that Yamato had written script from the age of the gods, a mythical period when the deity Susanoo was believed by the Japanese court to have composed Japan's first poem, and the Sun goddess declared her son would rule the land below.

Introduction to Kanbun: W4019x (Fall 2004) Mondays and Wednesdays 11-12:15, Kress Room, Starr Library (Entrance on 200 Level)

David Lurie (212-854-5034, [email protected]). Office Hours: Tuesdays 2-4, 500A Kent Hall. This class is intended to build proficiency in reading the variety of classical Japanese written styles subsumed under the broad term kanbun [漢文]. More specifically, it aims to foster familiarity with kundoku [訓読], a collection of techniques for reading and writing classical Japanese in texts largely or entirely composed of Chinese characters. Because it is impossible to achieve fluent reading ability using these techniques in a mere semester, this class is intended as an introduction. Students will gain familiarity with a toolbox of reading strategies as they are exposed to a variety of premodern written styles and genres, laying groundwork for more thorough competency in specific areas relevant to their research. This is not a class in Classical Chinese: those who desire facility with Chinese classical texts are urged to study Classical Chinese itself. (On the other hand, some prior familiarity with Classical Chinese will make much of this class easier.) The prerequisite for this class is Introduction to Classical Japanese (W4007); because our focus is the use of Classical Japanese as a tool to understand character-based texts, it is assumed that students will already have control of basic Classical Japanese grammar. Students with concerns about their competence should discuss them with me immediately. Goals of the course: 1) Acquire basic familiarity with kundoku techniques of reading, with a focus on the classes of special characters (unread characters, twice-read characters, negations, etc.) that form the bulk of traditional Japanese kanbun pedagogy.

Consonant Characters and Inherent Vowels

Global Design: Characters, Language, and More. Richard Ishida, W3C Internationalization Activity Lead. Copyright © 2005 W3C (MIT, ERCIM, Keio).

Getting more information: W3C Internationalization Activity, http://www.w3.org/International/

Outline: Character encoding: What's that all about? Characters: What do I need to do? Characters: Using escapes. Language: Two types of declaration. Language: The new language tag values. Text size. Navigating to localized pages.

Character encoding: What's that all about? The Enigma (photo by David Blaikie). Berber, 4,000 BC. Tifinagh: http://www.dailymotion.com/video/x1rh6m_tifinagh_creation

Character set: ⴰ ⴱ ⴲ ⴳ ⴴ ⴵ ⴶ ⴷ ⴸ ⴹ ⴺ ⴻ ⴼ ⴽ ⴾ ⴿ ⵀ ⵁ ⵂ ⵃ ⵄ ⵅ ⵆ ⵇ ⵈ ⵉ ⵊ ⵋ ⵌ ⵍ ⵎ ⵏ ⵐ ⵑ ⵒ ⵓ ⵔ ⵕ ⵖ ⵗ ⵘ ⵙ ⵚ ⵛ ⵜ ⵝ ⵞ ⵟ ⵠ ⵢ ⵣ ⵤ ⵥ ⵯ

Coded character set: [figure: grid of characters with columns 0 to 3 and rows 0 to F; one cell is labelled 33 (hexadecimal), 52 (decimal)]

Code pages: ASCII. Code pages: ISO 8859-1 (Latin 1), Western Europe, ç (E7). Code pages: ISO 8859-7, Greek, η (E7).

Double-byte characters: Standard, Country, No.
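The two code-page slides above make the point that one byte value, E7, stands for different characters in different code pages. A minimal Python sketch of that idea follows; the variable names are illustrative and are not taken from the slides.

```python
# One byte, two code pages: the same value 0xE7 decodes to different characters.
raw = bytes([0xE7])

latin1_char = raw.decode("iso-8859-1")  # Western European code page
greek_char = raw.decode("iso-8859-7")   # Greek code page

print(f"0xE7 in ISO 8859-1: {latin1_char} (U+{ord(latin1_char):04X})")
print(f"0xE7 in ISO 8859-7: {greek_char} (U+{ord(greek_char):04X})")
```

Without knowing which code page was used to write the byte, a reader cannot tell which character was intended, which is why an encoding declaration has to travel with the text.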

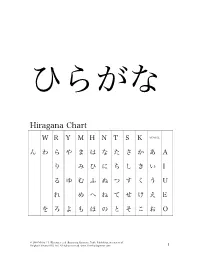

Hiragana Chart

ひらがな Hiragana Chart

| | W | R | Y | M | H | N | T | S | K | VOWEL | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| ん | わ | ら | や | ま | は | な | た | さ | か | あ | A |
| | | り | | み | ひ | に | ち | し | き | い | I |
| | | る | ゆ | む | ふ | ぬ | つ | す | く | う | U |
| | | れ | | め | へ | ね | て | せ | け | え | E |
| | を | ろ | よ | も | ほ | の | と | そ | こ | お | O |

© 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com.

Beginning Japanese, 1-1 Hiragana Activity Book. 名前 (Name): ________________________ 日付 (Date): ___月 ___日

一、 Practice: あいうえお かきくけこ がぎぐげご

O E U I A: お え う い あ あ お え う い あ お う あ え い あ お え う い お う い あ お え あ

KO KE KU KI KA: こ け く き か か こ け く き か こ け く く き か か こ き き か こ こ け か け く く き き こ け か

GO GE GU GI GA: ご げ ぐ ぎ が が ご げ ぐ ぎ が ご ご げ ぐ ぐ ぎ ぎ が が ご げ ぎ が ご ご げ が げ ぐ ぐ ぎ ぎ ご げ が

二、 Fill in each blank with the correct HIRAGANA. SE N SE I KI A RA NA MA E 1.

How to Type in Japanese 1

How to type in Japanese 1. Step 1: Switch to Japanese input mode. Open Office Word, WordPad, or Notepad to test typing in Japanese. With the cursor placed in a new document, you will notice a language bar somewhere on your screen. Click the "PT Português" button and select "JP Japonês (Japão)". This changes the appearance of the language bar. * If a long bar appears, as in the figure below, right-click its leftmost part and uncheck the "Legendas" (text labels) option. You can also click the "_" in the upper-right corner of the language bar, and the bar will dock in the lower-right corner of the screen (minimize). © 2017 Fundação Japão em São Paulo. Step 2: Change the language bar to display in Japanese. If you cannot read Japanese, you can switch the language bar display to English. Click ツール (Tools) and then the プロパティ (Properties) option. Option: Change the language bar to display in English. This window is entirely in Japanese, but do not worry: the next time you open it, it will be in English. There is a language selection menu under "全般" (General); choose "英語" (English) and click "OK". Step 3: Typing in Japanese. Make sure you have selected Japanese in the language bar. Then select "hiragana", as the arrow indicates. Step 4: Typing in Japanese with Roman letters. Once you are in the correct input mode in the document, let's type a practice word.
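Typing Japanese with Roman letters, as the last step above describes, depends on the input method converting romaji into kana as you type. The sketch below illustrates one simple way such a conversion could work, using greedy longest-match lookup; the table, the function name `romaji_to_hiragana`, and the doubled-consonant rule are illustrative assumptions, not the behaviour of the Windows input method or of any particular tool.

```python
# Illustrative subset of a romaji-to-hiragana table (not a complete IME table).
VOWELS = "aiueo"
ROWS = {
    "": "あいうえお", "k": "かきくけこ", "g": "がぎぐげご",
    "s": "さしすせそ", "t": "たちつてと", "n": "なにぬねの",
    "h": "はひふへほ", "m": "まみむめも", "r": "らりるれろ",
}
TABLE = {c + v: kana for c, row in ROWS.items() for v, kana in zip(VOWELS, row)}
# Hepburn spellings and the remaining basic kana.
TABLE.update({
    "shi": "し", "chi": "ち", "tsu": "つ", "fu": "ふ",
    "ya": "や", "yu": "ゆ", "yo": "よ", "wa": "わ", "wo": "を", "n": "ん",
})


def romaji_to_hiragana(text: str) -> str:
    """Convert romaji to hiragana by greedy longest-match lookup."""
    text = text.lower()
    out = []
    i = 0
    while i < len(text):
        ch = text[i]
        # A doubled consonant other than "n" is written with a small tsu.
        if (i + 1 < len(text) and ch == text[i + 1]
                and ch.isalpha() and ch not in "aiueon"):
            out.append("っ")
            i += 1
            continue
        # Try the longest table key first (keys are at most 3 letters long).
        for length in (3, 2, 1):
            chunk = text[i:i + length]
            if chunk in TABLE:
                out.append(TABLE[chunk])
                i += length
                break
        else:
            out.append(ch)  # Pass unknown characters through unchanged.
            i += 1
    return "".join(out)


for word in ("sensei", "namae", "kitte", "nihongo"):
    print(word, "->", romaji_to_hiragana(word))
```

Trying the longest key first lets "ni" win over a bare "n", so "kani" becomes かに rather than かんい. This sketch does not cover small-kana combinations such as "kya", or cases that need an apostrophe to disambiguate, such as "hon'ya".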

Handy Katakana Workbook.Pdf

First Edition. HANDY KATAKANA WORKBOOK. An Introduction to Japanese Writing: KANA. This is a supplement for beginning level Japanese language instruction. Y. M. Shimazu, Ed.D.

TABLE OF CONTENTS
Introduction
Acknowledgements
STUDY SHEET #1: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #1, PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #2, MORE PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
WORKSHEET #3, ADDITIONAL PRACTICE: A, I, U, E, O, KA, KI, KU, KE, KO, GA, GI, GU, GE, GO, N
STUDY SHEET #2: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #4, PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #5, MORE PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
WORKSHEET #6, ADDITIONAL PRACTICE: SA, SHI, SU, SE, SO, ZA, JI, ZU, ZE, ZO, TA, CHI, TSU, TE, TO, DA, DE, DO
STUDY SHEET #3: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #7, PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #8, MORE PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
WORKSHEET #9, ADDITIONAL PRACTICE: NA, NI, NU, NE, NO, HA, HI, FU, HE, HO, BA, BI, BU, BE, BO, PA, PI, PU, PE, PO
STUDY SHEET #4: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #10, PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #11, MORE PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
WORKSHEET #12, ADDITIONAL PRACTICE: MA, MI, MU, ME, MO, YA, YU, YO
STUDY SHEET #5

KANA Response Live Organization Administration Tool Guide

This is the most recent version of this document provided by KANA Software, Inc. to Genesys, for the version of the KANA software products licensed for use with the Genesys eServices (Multimedia) products. KANA Response Live Organization Administration. KANA Response Live Version 10 R2, February 2008. All contents of this documentation are the property of KANA Software, Inc. (“KANA”) (and if relevant its third party licensors) and protected by United States and international copyright laws. All Rights Reserved. © 2008 KANA Software, Inc. Terms of Use: This software and documentation are provided solely pursuant to the terms of a license agreement between the user and KANA (the “Agreement”) and any use in violation of, or not pursuant to any such Agreement shall be deemed copyright infringement and a violation of KANA's rights in the software and documentation and the user consents to KANA's obtaining of injunctive relief precluding any further such use. KANA assumes no responsibility for any damage that may occur either directly or indirectly, or any consequential damages that may result from the use of this documentation or any KANA software product except as expressly provided in the Agreement, any use hereunder is on an as-is basis, without warranty of any kind, including without limitation the warranties of merchantability, fitness for a particular purpose, and non-infringement. Use, duplication, or disclosure by licensee of any materials provided by KANA is subject to restrictions as set forth in the Agreement. Information contained in this document is subject to change without notice and does not represent a commitment on the part of KANA.

Writing As Aesthetic in Modern and Contemporary Japanese-Language Literature

At the Intersection of Script and Literature: Writing as Aesthetic in Modern and Contemporary Japanese-language Literature. Christopher J Lowy. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, University of Washington, 2021. Reading Committee: Edward Mack (Chair), Davinder Bhowmik, Zev Handel, Jeffrey Todd Knight. Program Authorized to Offer Degree: Asian Languages and Literature. © Copyright 2021 Christopher J Lowy. Chair of the Supervisory Committee: Edward Mack, Department of Asian Languages and Literature.

Abstract. This dissertation examines the dynamic relationship between written language and literary fiction in modern and contemporary Japanese-language literature. I analyze how script and narration come together to function as a site of expression, and how they connect to questions of visuality, textuality, and materiality. Informed by work from the field of textual humanities, my project brings together new philological approaches to visual aspects of text in literature written in the Japanese script. Because research in English on the visual textuality of Japanese-language literature is scant, my work serves as a fundamental first step in creating a new area of critical interest by establishing key terms and a general theoretical framework from which to approach the topic. Chapter One establishes the scope of my project and the vocabulary necessary for an analysis of script relative to narrative content; Chapter Two looks at one author’s relationship with written language; and Chapters Three and Four apply the concepts explored in Chapter One to a variety of modern and contemporary literary texts where script plays a central role.

Legacy Character Sets & Encodings

Legacy & Not-So-Legacy Character Sets & Encodings. Ken Lunde, CJKV Type Development, Adobe Systems Incorporated. ftp://ftp.oreilly.com/pub/examples/nutshell/cjkv/unicode/iuc15-tb1-slides.pdf. 15th International Unicode Conference. Copyright © 1999 Adobe Systems Incorporated.

Tutorial Overview
• What is a character set? What is an encoding?
• How are character sets and encodings different?
• Legacy character sets.
• Non-legacy character sets.
• Legacy encodings.
• How does Unicode fit in?
• Code conversion issues.
• Disclaimer: The focus of this tutorial is primarily on Asian (CJKV) issues, which tend to be complex from a character set and encoding standpoint.

Terminology & Abbreviations
• GB (China): Stands for “Guo Biao” (国标 guóbiāo). Short for “Guojia Biaozhun” (国家标准 guójiā biāozhǔn). Means “National Standard.”
• GB/T (China): “T” stands for “Tui” (推 tuī). Short for “Tuijian” (推荐 tuījiàn). “T” means “Recommended.”
• CNS (Taiwan): 中國國家標準 (zhōngguó guójiā biāozhǔn) in Chinese. Abbreviation for “Chinese National Standard.”
• GCCS (Hong Kong): Abbreviation for “Government Chinese Character Set.”
• JIS (Japan): 日本工業規格 (nihon kōgyō kikaku) in Japanese. Abbreviation for “Japanese Industrial Standard.” Symbol: 〄
• KS (Korea): 한국 공업 규격 (韓國工業規格 hangug gongeob gyugyeog) in Korean. Abbreviation for “Korean Standard.” Symbol: ㉿. Designation change from “C” to “X” on August 20, 1997.
• TCVN (Vietnam): Tiêu Chuẩn Việt Nam in Vietnamese. Means “Vietnamese Standard.”
• CJKV: Chinese, Japanese, Korean, and Vietnamese.

What Is A Character Set?
• A collection of characters that are intended to be used together to create meaningful text.
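The overview above asks how character sets and encodings differ. One way to see the distinction is to take the same characters and serialize them under several encodings. The following Python sketch does that for the two-character string 日本; the choice of sample text and of these four codecs is an illustrative assumption and is not taken from the tutorial.

```python
# The same two characters, serialized under four different encodings.
text = "日本"
codecs_to_try = ("shift_jis", "euc_jp", "iso2022_jp", "utf-8")

encoded = {name: text.encode(name) for name in codecs_to_try}

for name, data in encoded.items():
    # Show the raw bytes in hexadecimal alongside their length.
    print(f"{name:12} {len(data):2} bytes  {data.hex(' ')}")
    # Decoding with the matching codec recovers the original characters.
    assert data.decode(name) == text
```

The characters are identical in every row; only the byte sequences differ. Decoding bytes with a codec other than the one that produced them is what leads to garbled text.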

Digital Text

Digital text: The joys of character sets

Contents. Storing text: general problems; legacy character encodings; Unicode; markup languages. Using text: processing and display; programming languages.

A little bit about writing systems

Overview: [diagram of script families, with the Phoenician, Sogdian, Brahmi and Sinitic scripts as ancestors; scripts shown: Latin, Cyrillic, Greek, Armenian, Georgian, Hebrew, Arabic, Ethiopic, Thaana, Mongolian, Devanagari, Tibetan, Gujarati, Bengali, Gurumukhi, Oriya, Telugu, Kannada, Malayalam, Tamil, Sinhala, Burmese, Khmer, Thai, Lao, Chinese, Japanese, Korean]

The easy ones. Latin is the alphabet and writing system used in the West and some other places. Greek and Cyrillic (Russian) are very similar; they just use different characters. Armenian and Georgian are also relatively similar.

More difficult. Hebrew is written from right-to-left, but numbers go left-to-right... Arabic has the same rules, but also requires variant selection depending on context and ligature forming.

The far east. Chinese uses two 'alphabets': hanzi ideographs and zhuyin syllables. Japanese mixes four alphabets: kanji ideographs, katakana and hiragana syllables, and romaji (Latin) letters and numbers. Korean uses hangul syllable blocks, combined from jamo components. Vietnamese uses Latin letters...

The Indic languages. Based on syllabic alphabets. Require complex ligature forming. Letters are not written in logical order, but require a strange 'circular' ordering. In addition, a single line consists of separate

Characteristics of Developmental Dyslexia in Japanese Kana: From

Ogawa et al., J Psychol Abnorm Child 2014, 3:3. Journal of Psychological Abnormalities in Children. DOI: 10.4172/2329-9525.1000126. ISSN: 2329-9525. Research Article, Open Access.

Characteristics of Developmental Dyslexia in Japanese Kana: from the Viewpoint of the Japanese Feature. Shino Ogawa1*, Miwa Fukushima-Murata2, Namiko Kubo-Kawai3, Tomoko Asai4, Hiroko Taniai5 and Nobuo Masataka6. 1 Graduate School of Medicine, Kyoto University, Kyoto, Japan. 2 Research Center for Advanced Science and Technology, the University of Tokyo, Tokyo, Japan. 3 Faculty of Psychology, Aichi Shukutoku University, Aichi, Japan. 4 Nagoya City Child Welfare Center, Aichi, Japan. 5 Department of Pediatrics, Nagoya Central Care Center for Disabled Children, Aichi, Japan. 6 Section of Cognition and Learning, Primate Research Institute, Kyoto University, Aichi, Japan.

Abstract. This study identified the individual differences in the effects of Japanese Dyslexia. The participants consisted of 12 Japanese children who had difficulties in reading and writing Japanese and were suspected of having developmental disorders. A test battery was created on the basis of the characteristics of the Japanese language to examine Kana’s orthography-to-phonology mapping and target four cognitive skills: analysis of phonological structure, letter-to-sound conversion, visual information processing, and eye–hand coordination. An examination of the individual ability levels for these four elements revealed that reading and writing difficulties are not caused by a single disability, but by a combination of factors.

'Positional Effect' on Consonant Gemination in Japanese Haruo

日本語促音の「位置効果」について (On the ‘positional effect’ on consonant gemination in Japanese). Haruo KUBOZONO*, Hajime TAKEYASU** and Mikio GIRIKO*. *National Institute for Japanese Language and Linguistics, **Mie University.

Japanese loanwords from English display many interesting phenomena concerning the occurrence or non-occurrence of sokuon, or geminate obstruents. One of them concerns the so-called ‘positional effect’, by which gemination tends to be restricted to word-final position. One typical example is the English word picnic [piknik], which yields a geminate consonant only in the original second syllable when borrowed into Japanese: /pi.ku.nik.ku/, */pik.ku.ni.ku/, */pik.ku.nik.ku/. Other examples are given in (1) and (2). Words in (1) have a geminate obstruent, whereas those in (2) do not.

(1) cap → kyap.pu, dock → dok.ku, mix → mik.ku.su, sax → sak.ku.su, box → bok.ku.su, fax → fak.ku.su

(2) captain → kya.pu.ten, doctor → do.ku.taa, mixer → mi.ki.saa, saxophone → sa.ki.so.fon, boxer → bo.ku.saa, facsimile → fa.ku.si.mi.ri

The primary question we would like to address in this talk is where this positional effect comes from. There are at least two possibilities. One hypothesis is that the phonological position itself is a pivotal factor to which native speakers of Japanese are sensitive. According to this hypothesis, native Japanese speakers hear a geminate consonant in word-final position, but not in other positions, for purely structural/positional reasons. Alternatively, it is possible that phonetic properties in the input English words differ between word-medial and final syllables and that native speakers of Japanese display the positional effect in question by reacting to these phonetic properties sensitively.