Image Stitching

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Open Source Software Migration Training Module, “User Level” (Modul Pelatihan Migrasi Open Source Software “Level Pengguna”)

Open Source Software Migration Training Module, “User Level”. Updated: July 2010. By: Hasan B. Usman. ICT group: Open Source Software. Skill level: √ Beginner, Intermediate, Advanced. Book type: √ Reference, √ Tutorial, Exercises. Supporting materials: √ OSS Linux CD/DVD, √ OSS video tutorials, √ other relevant modules. URL: http://www.igos.web.id, http://www.igos.or.id Email: [email protected] PREFACE Adopting open source software can also be understood as part of a migration process that is not visibly a move, because migration fundamentally aims to strengthen the use of legal software by software users. Migration is work of widely varying complexity: it can be easy, and it can also become difficult. Without expertise in the field, the migration process becomes harder. To make the migration process easier for you, this guidebook was compiled as a reference for end users, and we hope it will help you. Warm regards, Hasan B. Usman, Head of the Migration Team ([email protected]) OSS Migration Training Module for the User Level, updated July 2010, http://www.igos.or.id, email: [email protected] SUMMARY This information technology training material, which uses open source software, is one reference to support the migration process at the user level. The topics covered are an introduction to OSS, Linux installation, the Linux desktop, the OpenOffice office applications, internet applications, multimedia and graphics applications, and accessing files over a network. COURSE OBJECTIVE 1.1. -

Hardware and Software for Panoramic Photography

ROVANIEMI UNIVERSITY OF APPLIED SCIENCES SCHOOL OF TECHNOLOGY Degree Programme in Information Technology Thesis HARDWARE AND SOFTWARE FOR PANORAMIC PHOTOGRAPHY Julia Benzar 2012 Supervisor: Veikko Keränen Approved _______2012__________ The thesis can be borrowed. School of Technology Abstract of Thesis Degree Programme in Information Technology Author: Julia Benzar Year: 2012 Subject of thesis: Hardware and Software for Panoramic Photography Number of pages: 48 In this thesis, panoramic photography was chosen as the topic of study. The primary goal of the investigation was to understand the phenomenon of panoramic photography and the secondary goal was to establish guidelines for its workflow. The aim was to reveal what hardware and what software is required for panoramic photographs. The methodology was to explore the existing material on the hardware and software used for producing panoramic images. Later, the best available hardware and different software were chosen to take the images and to test the process of stitching the images together. The experiment material was the result of the practical work, such as the overall process and the experience gained from it, the practical usage of hardware and software, as well as the images taken for stitching panoramas. The main research material was the final result of stitching panoramas. The main results of the practical project work were conclusion statements about which hardware and software are best among the options tested. The results of the work can also suggest a workflow for creating panoramic images using the described hardware and software. The choice of hardware and software was limited, so there is room for further experiments. -

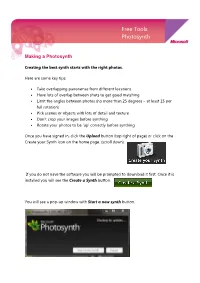

Free Tools Photosynth

Free Tools Photosynth Making a Photosynth Creating the best synth starts with the right photos. Here are some key tips: • Take overlapping panoramas from different locations • Have lots of overlap between shots to get good matching • Limit the angles between photos (no more than 25 degrees – at least 15 per full rotation) • Pick scenes or objects with lots of detail and texture • Don’t crop your images before synthing • Rotate your photos to be ‘up’ correctly before synthing Once you have signed in, click the Upload button (top right of page) or click on the Create your Synth icon on the home page (scroll down). If you do not have the software, you will be prompted to download it first. Once it is installed, you will see the Create a Synth button. You will see a pop-up window with a Start a new synth button. Give your synth a name, tags (descriptive words) and a description. Click Add Photos, browse to your files and add them. Then click on the Synth button at the bottom of the page. Photosynth will do the rest for you. Making a Panorama Many photosynths consist of photos shot from a single location. Our friends in Microsoft Research have developed a free, world-class panoramic image stitcher called Microsoft Image Composite Editor (ICE for short). ICE takes a set of overlapping photographs of a scene shot from a single camera location, and creates a single high-resolution image. Photosynth now has support for uploading, exploring and viewing ICE panoramas alongside normal synths. Here’s how to create a panorama in ICE and upload it to Photosynth: 1. -
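
The Photosynth tip about limiting the angle between photos (no more than 25 degrees, at least 15 per full rotation) is simple arithmetic: 360 / 25 = 14.4, rounded up to 15 shots. A minimal Python sketch of that calculation follows; the function name is hypothetical and not part of any Photosynth or ICE tool.

```python
import math


def min_shots_per_rotation(max_angle_deg: float) -> int:
    """Fewest evenly spaced shots that keep the step between shots at or below max_angle_deg.

    Hypothetical helper for illustration; it only encodes the 360-degree arithmetic.
    """
    if max_angle_deg <= 0:
        raise ValueError("max_angle_deg must be positive")
    # Round up: a fractional shot count means one more photo is needed.
    return math.ceil(360 / max_angle_deg)


if __name__ == "__main__":
    print(min_shots_per_rotation(25))  # 360 / 25 = 14.4, rounded up
```

Shooting more photos than this minimum only increases overlap, which the same list of tips recommends.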

Mobilizing (Ourselves) for a Critical Digital Archaeology Adam Rabinowitz University of Texas at Austin, [email protected]

University of Wisconsin Milwaukee UWM Digital Commons Mobilizing the Past Art History 10-21-2016 5.2. Response: Mobilizing (Ourselves) for a Critical Digital Archaeology Adam Rabinowitz University of Texas at Austin, [email protected] Follow this and additional works at: https://dc.uwm.edu/arthist_mobilizingthepast Part of the Classical Archaeology and Art History Commons Recommended Citation Rabinowitz, Adam. “Response: Mobilizing (Ourselves) for a Critical Digital Archaeology.” In Mobilizing the Past for a Digital Future: The Potential of Digital Archaeology, edited by Erin Walcek Averett, Jody Michael Gordon, and Derek B. Counts, 493-518. Grand Forks, ND: The Digital Press at the University of North Dakota, 2016. This Book is brought to you for free and open access by UWM Digital Commons. It has been accepted for inclusion in Mobilizing the Past by an authorized administrator of UWM Digital Commons. For more information, please contact [email protected]. MOBILIZING THE PAST FOR A DIGITAL FUTURE MOBILIZING the PAST for a DIGITAL FUTURE The Potential of Digital Archaeology Edited by Erin Walcek Averett Jody Michael Gordon Derek B. Counts The Digital Press @ The University of North Dakota Grand Forks Creative Commons License This work is licensed under a Creative Commons By Attribution 4.0 International License. 2016 The Digital Press @ The University of North Dakota Book Design: Daniel Coslett and William Caraher Cover Design: Daniel Coslett Library of Congress Control Number: 2016917316 The Digital Press at the University of North Dakota, Grand Forks, North Dakota ISBN-13: 978-062790137 ISBN-10: 062790137 Version 1.1 (updated November 5, 2016) Table of Contents Preface & Acknowledgments v How to Use This Book xi Abbreviations xiii Introduction Mobile Computing in Archaeology: Exploring and Interpreting Current Practices 1 Jody Michael Gordon, Erin Walcek Averett, and Derek B. -

Linux: How and Why (Linux: Come e Perché)

Linux: How and Why. ©2007 mcz, 12 July 2008. Contents: [...] 21.1 Analog television; 21.2 Digital television (terrestrial or satellite); [...] 23.1 Photo retouching; 23.2 3D graphics; 23.3 Vector drawing and CAD; 23.4 Color filters and color calibration; [...] 24.1 Miscellaneous; 24.2 Browsing directories and files; 24.3 Copying CDs; 24.4 Editing text; 24.5 RPM; [...] 25.1 Mounting a disk or a USB stick; 25.2 Finding a file on the system; 25.3 Viewing the contents of a file; 25.4 Aliases; [...] 36.1 A first look; 36.2 The Konqueror menus; 36.3 Configuration; 36.4 Some example views; 36.5 Menu services (Service Menu); [...] 40.1. -

Engineering Law and Ethics

ENSC 406: Software, Computer and Internet Ethics. Bob Gill, P.Eng., FEC, smIEEE. May 15th, 2017. Topics Covered: What is Open Source Software?; A One-Slide History of Open Source Software; The Open Source Development Model; Why Companies Use (and Don’t Use) Open Source Software; Open Source Licensing Strategies; Open Source Licenses and “Copyleft”; Open Source Issues in Corporate Transactions; Relevant Cases and Disputes; Open Source vs. Freeware vs. Shareware; Site Licensing; Software Maintenance; Computer and Internet Ethics. What is Open Source Software? Open Source software is software licensed under an agreement that conforms to the Open Source Definition: Access to Source Code; Freedom to Redistribute; Freedom to Modify; Non-Discriminatory Licensing (licensee/product); Integrity of Authorship; Redistribution in accordance with the Open Source License Agreement. Any developer/licensor can draft an agreement that conforms to the OSD, though most licensors use existing agreements: GNU General Public License (“GPL”); GNU Lesser General Public License (“LGPL”, formerly the Library GPL); Mozilla Public License; Berkeley Software Distribution license (“BSD”); Apache Software License; MIT – X11 License. See the complete list at www.opensource.org/licenses. Examples of Open Source Software: Linux (operating system kernel – substitutes for proprietary UNIX); Apache Web Server (web server for UNIX systems); MySQL (Structured Query Language – competes with Oracle); Cloudscape, Eclipse (IBM contributions); OpenOffice (Microsoft Office Alternate); SciLab, -

Los Gatos - Saratoga Camera Club Newsletter Vol

Los Gatos - Saratoga Camera Club Newsletter Vol. 31 Issue 8 August 2009 2009 Calendar August 3 Competition: Color, PJ, and Travel - Slides and Digital Images. Color, PJ, and Monochrome - Prints. 17 NO MEETING (Board Meeting Only: 7PM) ©Julie Kitzenberger September 7 NO MEETING 21 Program: TBD and Post Card Judging JULIE KITZENBERGER PHOTOGRAPHY Fine Art Landscapes - Travel - Events [email protected] 408-348-4199 http://photo.net/photos/JulieKitzenberger ©Julie Kitzenberger A Solo Show: “Captured Landscapes and Abstracts – with a Touch of Us” July 28 – August 23, 2009 Reception/Meet the Photographer: Saturday, August 1, 2009, 5 – 8 pm AEGIS GALLERY OF FINE ART www.Aegisgallery.com/ Gallery hours: 14531 Big Basin Way at 4th Street Sun, Tue, Wed: 11 AM – 7 PM Saratoga, CA 95070 Thu, Fri, Sat: 11 AM – 9 PM (408) 867-0171 Closed Mondays LOS GATOS/SARATOGA CAMERA CLUB EXHIBIT 2009 Theme: Through the Lens: Photographic Moments Sign Ups Begin July 6 It’s time to begin planning for our Club’s seventh annual photography exhibit, which is scheduled to run November 12 through January 7, 2010. This exclusive LG/SCC show provides Club members the opportunity to exhibit their work in the “Art in the Council Chambers” program sponsored by the Los Gatos Arts Commission and held in the Los Gatos Council Chambers. SIGN-UPS: Beginning at the July 6 meeting, Club sign-ups will run until September 1. At that time the final number of images per person (up to 2 each) will be determined. All exhibit photos must be matted and framed under glass (or plexi). See Club website for complete exhibit timelines and hanging information. -

Computer-Based Software Information Sheet

Computer-Based Software Information Sheet. Columns: Name; Curricular Use. 7-Zip: 7-Zip is an open source file archiver. Adobe Reader: View, create, manipulate, print and manage files in PDF format. Audacity: This software looks and acts like a tape recorder. Just hit record and talk, sing, play and export to mp3 for a finished product. CamStudio; Chemistry Add-in for Word; ComicLife; Enchanting; FET; Fonts4Teachers. Gimp: GIMP is the GNU Image Manipulation Program. It is a freely distributed piece of software for such tasks as photo retouching, image composition and image authoring. Gimp Tutorials. Google Earth; Google SketchUp; Graph; HoverCamFlex; Image Resizer; IrfanView; iTunes; Kodu Game Lab; Komposer; MDS Calculator; Microsoft Mathematics; Microsoft Office. Mp4Cam2Avi: This software converts Mp4 video files from a camera to Avi video files. MuseScore. Paint.NET: Paint.NET is free image editing and photo manipulation software. It features an intuitive and innovative user interface with support for layers, unlimited undo, special effects, and a wide variety of useful and powerful tools. Paint.NET Tutorials. Photo Story: Microsoft Photo Story is a free application that allows users to create a visual story (show and tell presentation) from their digital photos. Photosynth. Pivot Stickfigure Animator: Pivot makes it easy to create stick-figure animations. You can build your own stick figures and load your own backgrounds. The animations can be saved as animated gifs to be used on web pages. Scratch; Songsmith; SMART Education Software (Notebook); SMART Ideas; StoryBoardPC; VirtualDub; Windows Movie Maker; WinScp; Zoomit. -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

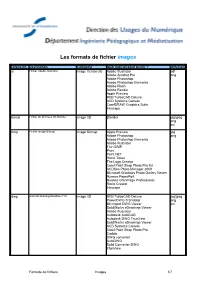

Image File Formats (Les formats de fichier images)

Image file formats. Columns: Extension; Description; Category; Software that opens it; Distribution formats. ai: Adobe Illustrator file; vector image; opens with Adobe Illustrator, Adobe Acrobat Pro, Adobe Photoshop, Adobe Photoshop Elements, Adobe Flash, Adobe Reader, Apple Preview, IMSI TurboCAD Deluxe, ACD Systems Canvas, CorelDRAW Graphics Suite, Inkscape; distribution formats: pdf, png. blend: Blender 3D data file; 3D image; opens with Blender; distribution formats: jpg/jpeg, png, avi. bmp: bitmap image file; bitmap image; opens with Apple Preview, Adobe Photoshop, Adobe Photoshop Elements, Adobe Illustrator, The GIMP, Paint, Paint.NET, Roxio Toast, The Logo Creator, Corel Paint Shop Photo Pro X3, ACDSee Photo Manager 2009, Microsoft Windows Photo Gallery Viewer, Nuance PaperPort, Nuance OmniPage Professional, Roxio Creator, Inkscape; distribution formats: jpg, png. dwg: AutoCAD Drawing Database File; 3D image; opens with IMSI TurboCAD Deluxe, PowerDWG Translator, Microspot DWG Viewer, SolidWorks eDrawings Viewer, Adobe Illustrator, Autodesk AutoCAD, Autodesk DWG TrueView, ACD Systems Canvas, Corel Paint Shop Photo Pro, Caddie DWG converter, AutoDWG, Solid Converter DWG, IrfanView; distribution formats: jpg/jpeg, png, avi. dxf: Drawing Exchange Format File; 3D image; opens with TurboCAD Deluxe 16, PowerCADD PowerDWG translator, Microspot DWG Viewer, NeoOffice Draw, DataViz MacLink Plus, Autodesk AutoCAD, IMSI TurboCAD Deluxe, SolidWorks eDrawings Viewer, Corel Paint Shop Photo Pro, ACD Systems Canvas, DWG converter, DWG2Image Converter, OpenOffice.org Draw, Adobe Illustrator; distribution formats: jpg/jpeg, png, avi. -

Single Snapshot System for the Fast 3D Modeling Using Dynamic Time Warping

SINGLE SNAPSHOT SYSTEM FOR THE FAST 3D MODELING USING DYNAMIC TIME WARPING Luis Ruiz, Xavier Mateo, Ciro Gràcia and Xavier Binefa Department of Information and Communication Technologies, Universitat Pompeu Fabra, Barcelona, Spain Keywords: 3D Reconstruction, Mesh Zippering, Non-overlapping, Dynamic Time Warping. Abstract: In this work we explore the automatic 3D modeling of a person using images acquired from a range camera. Using only one range camera and two mirrors, the objective is to obtain a full 3D model with one single snapshot. The combination of the camera and the two mirrors gives us three non-overlapping meshes, making it impossible to use common zippering algorithms based on overlapping meshes. Therefore, the Dynamic Time Warping algorithm is used to find the best matching between the boundaries of the meshes. Experimental results and error evaluations are given to show the robustness and efficiency of our method. 1 INTRODUCTION Nowadays we can find on the market low-cost range cameras which allow the direct retrieval of depth information from a scene. This depth information can be used in combination with the visual information from another sensor in order to recreate the observed scene in a 3D environment with high realism. The increasing use of this kind of camera has attracted interest from different research fields like computer vision, computer graphics, archeology, industrial prototyping, etc. [...] aspects needed for the correct understanding of the presented system, which is explained in Section 4. Experimental results of the presented system are explained in Section 5, and finally conclusions and future work are discussed in Section 6. 2 STATE OF THE ART The 3D modeling of common objects is a long-time -
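
The abstract above names Dynamic Time Warping as the matching step but gives no details of the authors' formulation. For orientation only, here is a minimal Python sketch of the textbook DTW recurrence on two 1D sequences. The function name, the absolute-difference cost, and the toy inputs are assumptions for illustration; the paper matches mesh boundaries, which this sketch does not attempt.

```python
def dtw_distance(a, b):
    """Textbook dynamic time warping cost between two numeric sequences.

    Illustrative assumption: local cost is the absolute difference |a[i] - b[j]|.
    This is not the boundary-matching cost used in the paper.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = cheapest alignment of the first i items of a with the first j items of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the best of: advance in a only, advance in b only, advance in both.
            cost[i][j] = local + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


if __name__ == "__main__":
    # The second sequence repeats one sample; warping absorbs the repeat at no cost.
    print(dtw_distance([1, 2, 3], [1, 2, 2, 3]))
```

The table is filled in O(n·m) time; recovering the actual point-to-point correspondence would additionally require backtracking through the table, which is omitted here.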

An Amateur's Guide to Observing and Imaging the Heavens

An Amateur’s Guide to Observing and Imaging the Heavens An Amateur’s Guide to Observing and Imaging the Heavens is a highly comprehensive guidebook that bridges the gap between ‘beginners and hobbyists’ books and the many specialised and subject-specific texts for more advanced amateur astronomers. Written by an experienced astronomer and educator, the book is a one-stop reference providing extensive information and advice about observing and imaging equipment, with detailed examples showing how best to use them. In addition to providing in-depth knowledge about every type of astronomical telescope and highlighting the strengths and weaknesses of each, the book offers advice on making visual observations of the Sun, Moon, planets, stars and galaxies. All types of modern astronomical imaging are covered, with step-by-step details given on the use of DSLRs and webcams for solar, lunar and planetary imaging and the use of DSLRs and cooled CCD cameras for deep-sky imaging. Ian Morison spent his professional career as a radio astronomer at the Jodrell Bank Observatory. The International Astronomical Union has recognised his work by naming an asteroid in his honour. He is patron of the Macclesfield Astronomical Society, which he also helped found, and a council member and past president of the Society for Popular Astronomy, United Kingdom. In 2007 he was appointed professor of astronomy at Gresham College, the oldest chair of astronomy in the world. He is the author of numerous articles for the astronomical press and of a university astronomy textbook, and writes a monthly online sky guide and audio podcast for the Jodrell Bank Observatory.