Soft Evolution." Software Takes Command

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


MODUL PELATIHAN MIGRASI OPEN SOURCE SOFTWARE “Level Pengguna” (Open Source Software Migration Training Module, “User Level”)

Open Source Software Migration Training Module, “User Level”. Updated: July 2010. By: Hasan B. Usman L. ICT group: Open Source Software. Skill level: √ Beginner, Intermediate, Advanced. Book type: √ Reference, √ Tutorial, Exercises. Supporting materials: √ OSS Linux CD/DVD, √ OSS video tutorials, √ other relevant modules. URL: http://www.igos.web.id, http://www.igos.or.id. Email: [email protected]

FOREWORD. Adopting open source software can also be understood as part of a migration process that is not visibly a move, because at its core migration aims to strengthen the use of legal software by software users. Migration is work whose complexity varies widely: it can be easy, and it can also be difficult. Without expertise in the field, the migration process becomes harder. To make the migration process easier for you, this guidebook was compiled as a reference for end users, and we hope it will help you. Warm regards, Hasan B. Usman, Head of the Migration Team ([email protected]). Modul Pelatihan Migrasi OSS Untuk Level Pengguna, updated July 2010, http://www.igos.or.id, email: [email protected]

SUMMARY. This information technology training material, which uses open source software, is a reference to support the migration process at the user level. The topics covered are an introduction to OSS, Linux installation, the Linux desktop, the OpenOffice office suite, internet applications, multimedia and graphics applications, and accessing files over a network.

COURSE OBJECTIVE 1.1.

Engineering Law and Ethics

ENSC 406: Software, Computer and Internet Ethics. Bob Gill, P.Eng., FEC, smIEEE. May 15th 2017.

Topics Covered: What is Open Source Software?, A One-Slide History of Open Source Software, The Open Source Development Model, Why Companies Use (and Don’t Use) Open Source Software, Open Source Licensing Strategies, Open Source Licenses and “Copyleft”, Open Source Issues in Corporate Transactions, Relevant Cases and Disputes, Open source vs. Freeware vs. Shareware, Site Licensing, Software Maintenance, Computer and Internet Ethics.

What is Open Source Software? Open Source software is software licensed under an agreement that conforms to the Open Source Definition (OSD): Access to Source Code, Freedom to Redistribute, Freedom to Modify, Non-Discriminatory Licensing (licensee/product), Integrity of Authorship, Redistribution in accordance with the Open Source License Agreement.

Any developer/licensor can draft an agreement that conforms to the OSD, though most licensors use existing agreements: GNU General Public License (“GPL”), GNU Lesser/Library General Public License (“LGPL”), Mozilla Public License, Berkeley Software Distribution license (“BSD”), Apache Software License, MIT/X11 License. See complete list at www.opensource.org/licenses

Examples of Open Source Software: Linux (operating system kernel, substitutes for proprietary UNIX), Apache Web Server (web server for UNIX systems), MySQL (Structured Query Language, competes with Oracle), Cloudscape, Eclipse (IBM contributions), OpenOffice (Microsoft Office alternative), SciLab,

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

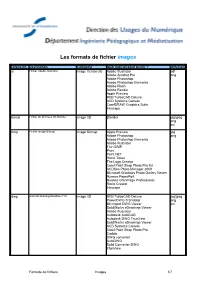

Les Formats De Fichier Images (Image File Formats)

Image file formats

| Extension | Description | Category | Software that opens it | Distribution formats |
|---|---|---|---|---|
| ai | Adobe Illustrator file | Vector image | Adobe Illustrator, Adobe Acrobat Pro, Adobe Photoshop, Adobe Photoshop Elements, Adobe Flash, Adobe Reader, Apple Preview, IMSI TurboCAD Deluxe, ACD Systems Canvas, CorelDRAW Graphics Suite, Inkscape | pdf, png |
| blend | Blender 3D data file | 3D image | Blender | jpg/jpeg, png, avi |
| bmp | Bitmap image file | Bitmap image | Apple Preview, Adobe Photoshop, Adobe Photoshop Elements, Adobe Illustrator, The GIMP, Paint, Paint.NET, Roxio Toast, The Logo Creator, Corel Paint Shop Photo Pro X3, ACDSee Photo Manager 2009, Microsoft Windows Photo Gallery Viewer, Nuance PaperPort, Nuance OmniPage Professional, Roxio Creator, Inkscape | jpg, png |
| dwg | AutoCAD Drawing Database File | 3D image | IMSI TurboCAD Deluxe, PowerDWG Translator, Microspot DWG Viewer, SolidWorks eDrawings Viewer, Adobe Illustrator, Autodesk AutoCAD, Autodesk DWG TrueView, ACD Systems Canvas, Corel Paint Shop Photo Pro, Caddie, DWG converter, AutoDWG, Solid Converter DWG, IrfanView | jpg/jpeg, png, avi |
| dxf | Drawing Exchange Format File | 3D image | TurboCAD Deluxe 16, PowerCADD, PowerDWG translator, Microspot DWG Viewer, NeoOffice Draw, DataViz MacLink Plus, Autodesk AutoCAD, IMSI TurboCAD Deluxe, SolidWorks eDrawings Viewer, Corel Paint Shop Photo Pro, ACD Systems Canvas, DWG converter, DWG2Image Converter, OpenOffice.org Draw, Adobe Illustrator | jpg/jpeg, png, avi |

Can Free Software Replace Proprietary Software for Graphic Production? Investigating to Which Extent Free Software Can Be Used for Book and Magazine Production

Can free software replace proprietary software for graphic production? Investigating to which extent free software can be used for book and magazine production. 2013-04-25. Staffan Melin, D89, [email protected]. Master's thesis in Media Technology, School of Computer Science and Communication at the Royal Institute of Technology, Stockholm, Sweden. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License.

Abstract: Free and open source software is widely used. At the same time there are several areas where it is not. One of these is graphic production, where the applications from Adobe (InDesign, Photoshop and Illustrator) dominate. In this thesis I start by describing a workflow for graphic production. Next I research the field of free software and put together a set of tools that fit into this workflow. The choices are made on the basis of functionality and how they work together. I then go on to apply these tools to two real world scenarios: production of a book and a magazine. The results show that free software can be used for graphic production without any loss of quality and with only minor problems compared to the proprietary tools. A look into future development shows that the bulk of these problems are being taken care of by the open source community.

Keywords: free software, open source, proprietary software, graphic production, graphic design, FLOSS, FOSS, layout, libre, Scribus, Inkscape, GIMP, GNOME Color Manager.

Sammanfattning (summary, translated from Swedish): Free and open source software is used in many areas. At the same time there are many areas where it is not used. One of these is graphic production, where the programs from Adobe (InDesign, Photoshop and Illustrator) dominate.

Grafika Rastrowa I Wektorowa (Raster and Vector Graphics)

RASTER AND VECTOR GRAPHICS. Computer graphics can, very roughly, be divided into two kinds: 1) raster graphics, also called bitmap, pixel, or point graphics; 2) vector graphics, also called object graphics.

Raster graphics: the image is built from a rectangular grid of points (pixels). Scaling bitmap drawings usually causes a loss of quality. This kind of graphics is used most widely in digital photography. Popular formats: BMP, JPG, TIFF, PNG, GIF, PCX, RAW. Well-known graphics editors: Paint, Photoshop, GIMP.

Vector graphics: uses graphic objects called primitives, such as points, lines, and curves described by mathematical parameters. Its main advantage is that images can be resized losslessly, without distortion. Popular formats: SVG, CDR, EPS, WMF (clip art). Well-known graphics editors: Corel Draw, Sodipodi, Inkscape, Adobe Illustrator, 3DS.

LIST OF BITMAP GRAPHICS PROGRAMS

Free: CinePaint, DigiKam, GIMP, GimPhoto, GIMPshop, GNU Paint, GrafX2, GraphicsMagick, ImageJ, ImageMagick, KolourPaint, Krita, LiveQuartz, MyPaint, Pencil, Pinta, Pixen, Rawstudio, RawTherapee, Seashore, Shotwell, Tile Studio, Tux Paint, UFRaw, XPaint, ArtRage Starter Edition, Artweaver, Brush Strokes Image Editor, Chasys Draw IES, FastStone Image Viewer, Fatpaint, Fotografix, IrfanView, Paint.NET, Picasa, Picnik, Pixia, Project Dogwaffle, TwistedBrush Open Studio, XnView

Paid: Ability Photopaint, ACD Canvas, Adobe Fireworks, Adobe Photoshop, Adobe Photoshop Lightroom, Adobe Photoshop Elements,

MODUL PELATIHAN MIGRASI OPEN SOURCE SOFTWARE “Level Administrator” (Open Source Software Migration Training Module, “Administrator Level”)

Open Source Software Migration Training Module, “Administrator Level”. Updated: July 2010. By: the Implementation Team (Team Pelaksana). ICT group: Open Source Software. Skill level: √ Beginner, √ Intermediate, Advanced. Book type: √ Reference, √ Tutorial, Exercises. Supporting materials: √ OSS Linux CD/DVD, √ OSS video tutorials, √ other relevant modules. URL: http://www.igos.web.id, http://www.igos.or.id. Email: [email protected]

FOREWORD. Adopting open source software can also be understood as part of a migration process that is not visibly a move, because at its core migration aims to strengthen the use of legal software by software users. Migration is work whose complexity varies widely: it can be easy, and it can also be difficult. Without expertise in the field, the migration process becomes harder. To make the migration process easier for you, this training module was compiled as a reference for the administrator level, and we hope it will help you. Warm regards, Hasan B. Usman, Head of the Migration Team. Modul Pelatihan Migrasi OSS Untuk Level Administrator, updated July 2010, http://www.igos.or.id, email: [email protected]

SUMMARY. This information technology training material, which uses open source software, is a reference to support the technical side of the migration process at the administrator level. The topics covered are migration strategy, an introduction to Linux, OSS desktop applications, OpenOffice, basic Linux usage, Linux installation, and Linux system and network administration.

COURSE OBJECTIVE. Description: for the desktop and OpenOffice material, this training module uses the Ubuntu 10.04 Lucid distribution, but it can also be used with earlier Ubuntu releases and with other distributions such as IGN or Fedora.

9 Free Photoshop Alternatives for Powerful Editing

Cover story: 9 FREE PHOTOSHOP ALTERNATIVES FOR POWERFUL EDITING. Adobe Photoshop is a dominant player among industry professionals, but there are some alternatives available which can be useful when you only need an intermediate level of editing and photo touch-ups. By Raj Kumar Maurya.

The most important thing is that these Photoshop alternatives are free, whereas Photoshop comes with a hefty fee, something that individuals and small businesses may not be able to afford. With these free Photoshop alternatives you will get a simpler interface and variety.

GIMP
GIMP stands for ‘GNU Image Manipulation Program’. It is an old and well-known tool for photo editing. With a widely supported community and compatibility across various operating systems, GIMP is one of the best free alternatives to Photoshop. Although it does not offer quite as many features as Photoshop, it has all the basic features and some advanced ones.
• Supported Platforms: Windows, Mac OS X, Linux, BSD, Unix, SkyOS
• Key Features: Layers, Selection editing, HDR, Re-touching, Noise removal, Lens and Colour correction, Sharpening, Plugin support, etc.

[...] functionality of this tool can be extended by installing plugins.
• Supported Platforms: Windows 7 or Later
• Key Features: Selection editing, Layers, Histogram, Retouching, Resizing, Noise removal, Sharpening, Colour correction, Plugin support.
• Supported File Formats: RAW files, BMP, JPEG, GIF, TIFF, PNG.

Seashore
Seashore is an open-source image editing tool for Mac users. This tool is based on GIMP technology and offers major editing features like gradients, textures, and anti-aliasing for both text and brush strokes.
• Supported Platforms: Mac OS X
• Key Features: Layers, Alpha channel support, gradients, transparency, Anti-aliased brushes and

Migration from Windows to Linux for a Small Engineering Firm "A&G Associates"

Rochester Institute of Technology, RIT Scholar Works, Theses, 2004. Migration from Windows to Linux for a small engineering firm "A&G Associates". Trimbak Vohra. Follow this and additional works at: https://scholarworks.rit.edu/theses

Recommended Citation: Vohra, Trimbak, "Migration from Windows to Linux for a small engineering firm "A&G Associates"" (2004). Thesis. Rochester Institute of Technology. Accessed from

This Thesis is brought to you for free and open access by RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected].

Migration from Windows to Linux for a Small Engineering Firm "A&G Associates", by Trimbak Vohra. Thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Information Technology. Rochester Institute of Technology, B. Thomas Golisano College of Computing and Information Sciences. Date: December 2, 2004.

Thesis Approval Form. Rochester Institute of Technology, B. Thomas Golisano College of Computing and Information Sciences, Master of Science in Information Technology. Student Name: Trimbak Vohra. Thesis Title: Migration from Windows to Linux for a Small Engineering Firm "A&G Associates". Thesis Committee: Luther Troell, Ph.D., Chair; Prof. George Barido, Committee Member; Mr. Thomas Oxford, Committee Member.

Thesis Reproduction Permission Form. Rochester Institute of Technology, B. Thomas Golisano College of Computing and Information Sciences, Master of Science in Information Technology. Migration from Windows to Linux for a Small Engineering Firm "A&G Associates". I, Trimbak Vohra, hereby grant permission to the Wallace Library of the Rochester Institute of Technology to reproduce my thesis in whole or in part.

Tux with Shades Linux in Hollywood

Tux with Shades: Linux in Hollywood. FOSDEM, Brussels, February 23rd, 2008. Gabrielle Pantera and Robin Rowe. www.LinuxMovies.org, www.CinePaint.org. Title images: Beverly Hills sculpture “Sisyphus”; DreamWorks Transformer “Optimus Prime”.

A Short Time Ago, in a Galaxy Not Far Away... In August 1991 Linus Torvalds posts on Usenet: "I’m doing a (free) operating system (just a hobby, won’t be big and professional like gnu) for 386 (486) AT clones."

By 2001 Linux is #1
• Dominates feature animation and visual effects
• All big studios rely on Linux
• Better, faster, cheaper
• Millions of lines of IRIX code ported
• The first film produced on Linux won eleven Academy Awards including Best Picture
• The year was 1998
• Can you name the movie?

Digital Domain, Titanic: First Linux renderfarm... on DEC Alpha...

DreamWorks SKG: Linux on artist desktops... team of 100 Linux developers

Industrial Light & Magic, Star Wars Episode II: Conversion to Linux during production...

Pixar, Finding Nemo: George Lucas pays for divorce, introduces Steve Jobs to Linux...

Disney: Disney the last of the majors to convert to Linux...

Double Negative, Captain Corelli's Mandolin: Maya Stuka and Pixar RenderMan with deliberate motion blur...

Flash Film Works, Collateral Damage: Only one helicopter here is real... which one?

Hammerhead, Blue Crush: Ok, the girls are real, but some waves aren’t...

Rhythm & Hues, Scooby-Doo: CinePaint for retouching...

Enblend Combining Multiple Images with Enblend Version 4.0-753B534c819d, 1 August 2010

Enblend: Combining Multiple Images with Enblend, version 4.0-753b534c819d, 1 August 2010. Andrew Mihal.

This manual is for Enblend (version 4.0-753b534c819d, 1 August 2010), a tool for compositing images in such a way that the seam between the images is invisible, or at least very difficult to see. Copyright © 2004-2009 Andrew Mihal. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts and no Back-Cover Texts. A copy of the license is included in the section entitled "GNU Free Documentation License".

Table of Contents
List of Tables
List of Figures
1 Overview
2 Workflow
3 Invocation
3.1 Response Files
3.1.1 Response File Format
3.1.2 Syntactic Comments
3.1.3 Globbing Algorithms
3.2 Common Options
3.3 Extended Options
3.4 Mask Generation Options
4 Understanding Masks
4.1 Masks in Input Files

Tabla De Aplicaciones Equivalentes Windows / GNU Linux Orientada Al Usuario En General O Promedio (Table of Equivalent Windows / GNU Linux Applications, Aimed at the General or Average User)

Table of equivalent Windows / GNU Linux applications, aimed at the general or average user.

Image

| Category | GNU/Linux applications | Windows equivalents |
|---|---|---|
| Image viewer | Nomacs (http://www.nomacs.org/), Viewnior (http://siyanpanayotov.com/project/viewnior/), Eye of GNOME (http://www.gnome.org/projects/eog/), Gwenview (http://gwenview.sourceforge.net/), XnView (http://www.xnview.com/) | ACDSee, etc. |
| Photo albums | digiKam (http://www.digikam.org/), F-Spot (http://f-spot.org/Main_Page), gThumb (http://live.gnome.org/gthumb/), Shotwell (http://www.yorba.org/shotwell/) | Picasa, CyberLink PhotoDirector, etc. |
| Image metadata editor | FotoTagger (http://sourceforge.net/projects/fototagger/), ExifTool (http://www.sno.phy.queensu.ca/~phil/exiftool/) | PhotoME |
| Vector graphics editor | Inkscape (http://www.inkscape.org/), Skencil (http://www.skencil.org/), SK1 (http://sk1project.org/), Xara Xtreme (http://www.xaraxtreme.org/), Alchemy (http://al.chemy.org/gallery/), Libre Office Draw (https://es.libreoffice.org/descubre/draw/) | Adobe Illustrator, Corel Draw |
| 3D graphics | Blender (http://www.blender.org/), Natron (https://natron.fr/), K-3D (http://www.k-3d.org/), Wings 3D (http://www.wings3d.com/), Art of Illusion (http://www.artofillusion.org/), Jahshaka (http://www.jahshaka.com/) | 3D Studio Max, After Effects |
| Digital painting | KolourPaint (http://kolourpaint.sourceforge.net/), Pinta (http://pinta-project.com/), TuxPaint (http://tuxpaint.org/) | MS Paint |
| Professional digital painting | Krita (https://krita.org/), Pencil (http://www.pencil-animation.org/) | Corel PaintShopPro |