NUMA-Aware Hypervisor and Impact on Brocade* 5600 vRouter

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

VX97 User's Manual ASUS CONTACT INFORMATION ASUSTeK COMPUTER INC

VX97 Pentium Motherboard USER'S MANUAL USER'S NOTICE No part of this manual, including the products and software described in it, may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into any language in any form or by any means, except documentation kept by the purchaser for backup purposes, without the express written permission of ASUSTeK COMPUTER INC. (“ASUS”). ASUS PROVIDES THIS MANUAL “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE IMPLIED WARRANTIES OR CONDITIONS OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. IN NO EVENT SHALL ASUS, ITS DIRECTORS, OFFICERS, EMPLOYEES OR AGENTS BE LIABLE FOR ANY INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES (INCLUDING DAMAGES FOR LOSS OF PROFITS, LOSS OF BUSINESS, LOSS OF USE OR DATA, INTERRUPTION OF BUSINESS AND THE LIKE), EVEN IF ASUS HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES ARISING FROM ANY DEFECT OR ERROR IN THIS MANUAL OR PRODUCT. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. • Intel, LANDesk, and Pentium are registered trademarks of Intel Corporation. • IBM and OS/2 are registered trademarks of International Business Machines. • Symbios is a registered trademark of Symbios Logic Corporation. • Windows and MS-DOS are registered trademarks of Microsoft Corporation. • Sound Blaster AWE32 and SB16 are trademarks of Creative Technology Ltd. • Adobe and Acrobat are registered trademarks of Adobe Systems Incorporated.

Lista Sockets.Xlsx

| Socket | Number of pins | Release date | Compatible processors |
|---|---|---|---|
| Socket 0 | 168 | 1989 | 486 DX |
| Socket 1 | 169 | N/A | 486 DX, 486 DX2, 486 SX, 486 SX2 |
| Socket 2 | 238 | N/A | 486 DX, 486 DX2, 486 SX, 486 SX2, Pentium Overdrive |
| Socket 3 | 237 | N/A | 486 DX, 486 DX2, 486 DX4, 486 SX, 486 SX2, Pentium Overdrive, 5x86 |
| Socket 4 | 273 | March 1993 | Pentium-60 and Pentium-66 |
| Socket 5 | 320 | March 1994 | Pentium-75 to Pentium-120 |
| Socket 6 | 235 | never released | 486 DX, 486 DX2, 486 DX4, 486 SX, 486 SX2, Pentium Overdrive, 5x86 |
| Socket 463 | 463 | 1994 | Nx586 |
| Socket 7 | 321 | June 1995 | Pentium-75 to Pentium-200, Pentium MMX, K5, K6, 6x86, 6x86MX, MII |
| Slot 1 / SC242 | 242 | May 1997 | Pentium II, Pentium III (cartridge), Celeron SEPP (cartridge) |
| Socket Super 7 | 321 | May 1998 | K6-2, K6-III |
| Socket 370 | 370 | August 1998 | Celeron (Socket 370), Pentium III FC-PGA, Cyrix III, C3 |
| Slot A | 242 | June 1999 | Athlon (cartridge) |
| Socket 462 / Socket A | 453 | June 2000 | Athlon (Socket 462), Athlon XP, Athlon MP, Duron, Sempron (Socket 462) |
| Socket 423 / PGA423 | 423 | November 2000 | Pentium 4 (Socket 423) |
| Socket 478 / mPGA478B | 478 | August 2001 | Pentium 4 (Socket 478), Celeron (Socket 478), Celeron D (Socket 478), Pentium 4 Extreme Edition (Socket 478) |
| Socket 754 | 754 | September 2003 | Athlon 64 (Socket 754), Sempron (Socket 754) |
| Socket 940 | 940 | September 2003 | Athlon 64 FX (Socket 940) |
| Socket 939 | 939 | June 2004 | Athlon 64 (Socket 939), Athlon 64 FX (Socket 939), Athlon 64 X2 (Socket 939), Sempron (Socket 939) |
| LGA775 / Socket T | 775 | August … | Pentium 4 (LGA775), Pentium 4 Extreme Edition (LGA775), Pentium D, Pentium Extreme Edition, Celeron D (LGA 775) |

Socket E Slot Per

Sockets and Slots for CPUs: Socket 1, Socket 2, Socket 3, Socket 4, Socket 5, Socket 6, Socket 7 and Super Socket 7, Socket 8, Slot 1 (SC242), Slot 2 (SC330), Socket 370 (PGA-370), Slot A, Socket A (Socket 462), Socket 423, Socket 478, Socket 479, Socket 775 (LGA775), Socket 603, Socket 604, PAC418, PAC611, Socket 754, Socket 939, Socket 940, Socket AM2 (Socket M2), Socket 771 (LGA771), Socket F (Socket 1207), Socket S1. Starting with the 486 processors, Intel designed and introduced CPU sockets that, besides accepting different processor models, also allowed quick and easy replacement or upgrade. The new socket is called ZIF (Zero Insertion Force) because inserting the CPU requires no force, unlike LIF (Low Insertion Force) sockets, which need slight pressure to insert the chip and dedicated tools to remove it. The model of ZIF socket installed on the motherboard is usually marked on the socket itself. Different socket types accept different processor families. If you know which socket is mounted on the motherboard, you can tell roughly which processors it can host. The qualification is necessary because knowing exactly which processors a motherboard can take requires more than the socket type: other factors also matter, such as voltages, the FSB, and the CPUs supported by the BIOS. When preparing to upgrade the CPU, it is therefore best to follow the compatibility information provided by the motherboard manufacturer.

Intel® Server Board S2600 Family BIOS Setup User Guide

Intel® Server Board S2600 Family BIOS Setup User Guide. For the Intel® Server Board S2600 family supporting the Intel® Xeon processor Scalable family and 2nd Generation Intel® Xeon processor Scalable family. Rev 1.1, November 2019. Intel® Server Products and Solutions. Intel® Server Board BIOS Setup Specification. Document Revision History: October 2017, revision 1.0: First release based on Intel® Server Board S2600 Family BIOS Setup Utility Specification. November 2019, revision 1.1: Update based on Intel Xeon Processor Scalable Family Refresh BIOS Setup Specification 1_13. Disclaimers: Intel technologies’ features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at Intel.com, or from the OEM or retailer. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade.

Quad-Core Intel® Xeon® Processor 5400 Series

Quad-Core Intel® Xeon® Processor 5400 Series Datasheet, August 2008, 318589-005. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The Quad-Core Intel® Xeon® Processor 5400 Series may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. 64-bit computing on Intel architecture requires a computer system with a processor, chipset, BIOS, operating system, device drivers and applications enabled for Intel® 64 architecture. Processors will not operate (including 32-bit operation) without an Intel® 64 architecture-enabled BIOS. Performance will vary depending on your hardware and software configurations. Consult with your system vendor for more information.

Intel Xeon Processor 7200 Series and 7300 Series and Dual-Core Intel® Xeon® Processor 7200 Series

Intel® Xeon® Processor 7200 Series and 7300 Series Datasheet, September 2008. Notice: The Intel® Xeon® Processor 7200 Series and 7300 Series may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Document Number: 318080-002. INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. Contact your local Intel sales office or your distributor to obtain the latest specifications and before placing your product order.

AP1600R Dual Intel® Xeon™ 1U Rackmount Server

AP1600R Dual Intel® Xeon™ 1U Rackmount Server User Guide. Disclaimer/Copyrights: No part of this manual, including the products and software described in it, may be reproduced, transmitted, transcribed, stored in a retrieval system, or translated into any language in any form or by any means, except documentation kept by the purchaser for backup purposes, without the express written permission of ASUSTeK COMPUTER INC. (“ASUS”). ASUS PROVIDES THIS MANUAL “AS IS” WITHOUT WARRANTY OF ANY KIND, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE IMPLIED WARRANTIES OR CONDITIONS OF MERCHANTABILITY OR FITNESS FOR A PARTICULAR PURPOSE. IN NO EVENT SHALL ASUS, ITS DIRECTORS, OFFICERS, EMPLOYEES OR AGENTS BE LIABLE FOR ANY INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES (INCLUDING DAMAGES FOR LOSS OF PROFITS, LOSS OF BUSINESS, LOSS OF USE OR DATA, INTERRUPTION OF BUSINESS AND THE LIKE), EVEN IF ASUS HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES ARISING FROM ANY DEFECT OR ERROR IN THIS MANUAL OR PRODUCT. Product warranty or service will not be extended if: (1) the product is repaired, modified or altered, unless such repair, modification or alteration is authorized in writing by ASUS; or (2) the serial number of the product is defaced or missing. Products and corporate names appearing in this manual may or may not be registered trademarks or copyrights of their respective companies, and are used only for identification or explanation and to the owners’ benefit, without intent to infringe. The product name and revision number are both printed on the product itself. Manual revisions are released for each product design represented by the digit before and after the period of the manual revision number.

Typy Gniazd I Procesorów

SOCKET AND PROCESSOR TYPES. AMD sockets: • Socket 5 - AMD K5. • Socket 7 - AMD K6. • Super Socket 7 - AMD K6-2, AMD K6-III. • Socket 462 (also called Socket A) - AMD Athlon, Duron, Athlon XP, Athlon XP-M, Athlon MP, and Sempron. • Socket 463 (also called Socket NexGen) - NexGen Nx586. • Socket 563 - AMD Athlon XP-M (µ-PGA Socket). • Socket 754 - AMD Athlon 64, Sempron, Turion 64. Supports single-channel DDR-SDRAM memory. • Socket 939 - AMD Athlon 64, Athlon 64 FX, Athlon 64 X2, Sempron, Turion 64, Opteron (100 series). Supports dual-channel DDR-SDRAM memory. • Socket 940 - AMD Opteron (100, 200, 800 series), Athlon 64 FX. Supports dual-channel DDR-SDRAM memory. • Socket 1207 (also called Socket F) - AMD Opteron (200, 800 series). Replaced Socket 940. Supports dual-channel DDR2-SDRAM. • Socket AM2 - AMD Athlon 64 FX, Athlon 64 X2, Sempron, Turion 64, Opteron (100 series). Supports dual-channel DDR2-SDRAM. Has 940 pins. • Socket AM2+ - AMD Athlon X2, Athlon X3, Athlon X4, Phenom X2, Phenom X3, Phenom X4, Sempron, Phenom II. Supports dual-channel DDR2-SDRAM, as well as dual-channel DDR3-SDRAM and HyperTransport 3 with lower power consumption. Has 940 pins. • Socket AM3 - socket for AMD processors, featuring support for dual-channel DDR3-SDRAM and HyperTransport 3. Phenom II X2, Phenom X3, Phenom X4, Phenom X6, Athlon II X2, Athlon X3, Athlon X4, Sempron. • Socket FM1 - socket for AMD Vision processors from the new APU series, manufactured on a 32-nanometer process.

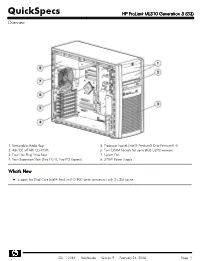

HP ProLiant ML310 Generation 3 (G3) Overview

QuickSpecs HP ProLiant ML310 Generation 3 (G3) Overview. 1. Removable Media Bays. 2. 48x IDE (ATAPI) CD-ROM. 3. Four Hot-Plug Drive Bays. 4. Four Expansion Slots (Two PCI-X, Two PCI Express). 5. Processor Socket (Intel® Pentium® D or Pentium® 4). 6. Four DIMM Sockets for up to 8GB DDR2 memory. 7. System Fan. 8. 370W Power Supply. What's New: Support for Dual Core Intel® Pentium® D 900 Series processors with 2 x 2M cache. DA - 12364, Worldwide, Version 9, February 24, 2006. At A Glance: Supports a single Intel® Pentium® D 800 or 900 Series, Pentium® 4 600 Series, or Celeron D® 300 Series processor. Intel E7230 Chipset - 800MHz front side bus. PC2-4200 533MHz unbuffered DDR2 SDRAM with advanced ECC capabilities and optional interleaving (expandable to 8 GB). Embedded NC320i PCI Express 10/100/1000T Gigabit network adapter. Four Expansion Slots: Two PCI-X 64-bit/100MHz, Two PCI Express. Supports four hot plug SCSI, SAS, or SATA hard drives for up to 2TB internal storage. SCSI models support U320 SCSI drives. Serial models support SAS and SATA hard drives. Single Channel U320 SCSI Adapter in PCI-X slot (SCSI models) or four Channel embedded SATA Controller (SATA models). Integrated Lights-Out 2 (iLO 2) management controller. Four USB 2.0 ports - Two front, two rear. ROM Based Setup Utility (RBSU) and PXE support. Protected by HP Services and a worldwide network of resellers and service providers. One year, Next Business Day, on-site limited global warranty.

User's Manual

SUPER ® X6DHP-8G USER’S MANUAL, Revision 1.0a. The information in this User’s Manual has been carefully reviewed and is believed to be accurate. The vendor assumes no responsibility for any inaccuracies that may be contained in this document, makes no commitment to update or to keep current the information in this manual, or to notify any person or organization of the updates. Please Note: For the most up-to-date version of this manual, please see our web site at www.supermicro.com. SUPERMICRO COMPUTER reserves the right to make changes to the product described in this manual at any time and without notice. This product, including software, if any, and documentation may not, in whole or in part, be copied, photocopied, reproduced, translated or reduced to any medium or machine without prior written consent. IN NO EVENT WILL SUPERMICRO COMPUTER BE LIABLE FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES ARISING FROM THE USE OR INABILITY TO USE THIS PRODUCT OR DOCUMENTATION, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. IN PARTICULAR, THE VENDOR SHALL NOT HAVE LIABILITY FOR ANY HARDWARE, SOFTWARE, OR DATA STORED OR USED WITH THE PRODUCT, INCLUDING THE COSTS OF REPAIRING, REPLACING, INTEGRATING, INSTALLING OR RECOVERING SUCH HARDWARE, SOFTWARE, OR DATA. Any disputes arising between manufacturer and customer shall be governed by the laws of Santa Clara County in the State of California, USA. The State of California, County of Santa Clara shall be the exclusive venue for the resolution of any such disputes. Supermicro's total liability for all claims will not exceed the price paid for the hardware product.

Technical Reference Guide HP Compaq 8100 Elite Series Business Desktop Computers

Technical Reference Guide, HP Compaq 8100 Elite Series Business Desktop Computers. Document Part Number: 601198-001, February 2010. This document provides information on the design, architecture, function, and capabilities of the HP Compaq 8100 Elite Series Business Desktop Computers. This information may be used by engineers, technicians, administrators, or anyone needing detailed information on the products covered. © Copyright 2010 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. Microsoft, MS-DOS, Windows, Windows NT, Windows XP, Windows Vista, and Windows 7 are trademarks of Microsoft Corporation in the U.S. and other countries. Intel, Intel Core 2 Duo, Intel Core 2 Quad, Pentium Dual-Core, Intel Inside, and Celeron are trademarks of Intel Corporation in the U.S. and other countries. Adobe, Acrobat, and Acrobat Reader are trademarks or registered trademarks of Adobe Systems Incorporated. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein. This document contains proprietary information that is protected by copyright. No part of this document may be photocopied, reproduced, or translated to another language without the prior written consent of Hewlett-Packard Company. First Edition (February 2010). Contents: 1 Introduction; 1.1 About this Guide; 1.1.1 Online Viewing; 1.1.2 Hardcopy.

Optimizing Memory Performance of Intel Xeon E7 V2-Based Servers

Front cover: Optimizing Memory Performance of Intel Xeon E7 v2-based Servers. Introduces the architecture of the X6 servers that use these processors. Explains the testing methodology that is used to measure memory performance. Analyzes the effects the different settings and features have on performance. Provides best practices to maximize memory performance. Authors: Charles Stephan, Alicia Boozer. Abstract: This paper examines the architecture and memory performance of Lenovo System x and Flex System X6 platforms that are based on the Intel® Xeon® E7-8800, E7-4800, and E7-2800 v2 processors. These platforms can provide significant performance gains over predecessor products. The performance analysis in this paper covers memory latency, bandwidth, and application performance. In addition, the paper describes performance issues that are related to CPU frequency, memory speed, and population of memory DIMMs. Finally, the paper examines optimal memory configurations and best practices for the Lenovo X6 platforms. At Lenovo Press, we bring together experts to produce technical publications around topics of importance to you, providing information and best practices for using Lenovo products and solutions to solve IT challenges. For more information about our most recent publications, see this website: http://lenovopress.com Contents: Introduction; System architecture; Memory performance; Balancing memory population on X6 platforms; Best practices; Conclusion; Authors; Notices; Trademarks. Introduction: The Intel Xeon E7 v2 series of EX processors has up to 15 cores and 30 threads per processor socket. Based on 22-nanometer manufacturing technology, these processors are designed to enable systems to scale 1 - 8 processor sockets natively.