A Course on: PC-Based Seismic Networks

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Prozessorenliste (Processor List)

Columns: Compartment, Designation, Stepping, Clock frequency, Note

- 1A: Intel C4001 7347 0.74 MHz ROM; Intel P4002-1 0488D RAM; Intel D4002-2 X1191128 RAM; Intel P4003 F8956 Shift Register; Intel P4004 5199W Processor; Intel P1101A 7485 MOS SRAM
- 1B: Intel D8008 I1220098; Intel P4040 5869W
- 1C: Intel D3001 I3320035; Intel D3002 I3370036
- 1D: Intel P8259A-2 L8360894 10 MHz PIC; Intel P8254-2 L0421586 10 MHz PIT
- 1E: Intel P8080A 2194B 2 MHz; Intel P8086-2 L4151756B 5 MHz
- 1F: Intel P8088 L5500345 5 MHz; Intel C8087 L5380043 math coprocessor
- 1G: Intel P8031 L61100346 12 MHz; Intel P8085 L5500055 3 MHz
- 1H: Intel 8237A L0311554 5 MHz DMA controller; Intel P8274 L7440107 MPSC; Intel D8742 U2420277 12 MHz 8 Bit SMC
- 2A: AMD N80186, N80186 91939DFE7 10 MHz, 12 MHz 335BCWD; AMD 80286, Intel 80188 918KPSC L5322539
- 2B: Intel 80286, 80286 L8500401 6, 12 MHz L9080860
- 2C: Intel 80287 L9190444 10 MHz math coprocessor
- 2D: Intel 80286 L2140286 6 MHz; Harris 80286 F3360 16 MHz
- 2E: Intel NG80386SX L1121576 16 MHz
- 2F: Intel A82596SX SZ649 20 MHz
- 2G: Intel 80486SX SX676 25 MHz
- 2H: Intel A80501 SX835 60 MHz FDIV Bug
- 3A: Intel Pentium II SL357 400 MHz Deschutes
- 3B: Intel 80386DX SX366 33 MHz; IIT 3C87 KY9220 40 MHz math coprocessor
- 3C: IT´s ST 80486DX4 100 MHz
- 3D: UMC GREEN CPU U5S 9439K 33 MHz Not for US Sale
- 3E: Intel 80386DX/DXL SX218 25 MHz; IIT 3C87 ID9143.8 33 MHz math coprocessor
- 3F: M27128AFI 88723S EPROM
- 3G: Intel Pentium 4 SL79L 3.0 GHz Prescott; Intel Pentium 4 SL6RZ 2.4 GHz Northwood
- 3H: Intel Celeron SL2WM 300 MHz Mendocino
- 4A: Intel Pentium II SL357 400 MHz Deschutes
- 4B: Intel i80960 L8373305B0 33 MHz RISC
- 4C: Intel i80860XR SX438 40 MHz RISC
- 4D: Intel -

Microprocessor Evaluations for Safety-Critical, Real-Time Applications: Authority for Expenditure No. 43 Phase 3 Report

DOT/FAA/AR-08/55. Microprocessor Evaluations for Safety-Critical, Real-Time Applications: Authority for Expenditure No. 43 Phase 3 Report. Air Traffic Organization Operations Planning, Office of Aviation Research and Development, Washington, DC 20591. February 2009. Final Report.

This document is available to the U.S. public through the National Technical Information Services (NTIS), Springfield, Virginia 22161. U.S. Department of Transportation, Federal Aviation Administration.

NOTICE: This document is disseminated under the sponsorship of the U.S. Department of Transportation in the interest of information exchange. The United States Government assumes no liability for the contents or use thereof. The United States Government does not endorse products or manufacturers. Trade or manufacturer's names appear herein solely because they are considered essential to the objective of this report. This document does not constitute FAA certification policy. Consult your local FAA aircraft certification office as to its use. This report is available at the Federal Aviation Administration William J. Hughes Technical Center’s Full-Text Technical Reports page: actlibrary.act.faa.gov in Adobe Acrobat portable document format (PDF).

Technical Report Documentation Page. 1. Report No.: DOT/FAA/AR-08/55. 2. Government Accession No. 3. Recipient's Catalog No. 4. Title and Subtitle: MICROPROCESSOR EVALUATIONS FOR SAFETY-CRITICAL, REAL-TIME APPLICATIONS: AUTHORITY FOR EXPENDITURE NO. 43 PHASE 3 REPORT. 5. Report Date: February 2009. 6. Performing Organization Code. 7. Author(s): Rabi N. Mahapatra, Praveen Bhojwani, Jason Lee, and Yoonjin Kim. 8. Performing Organization Report No.: TAMU-CS-AVSI-72005. 9. Performing Organization Name and Address. 10. Work Unit No. -

Osnovi Računarstva (Fundamentals of Computing)

FUNDAMENTALS OF COMPUTING - laboratory exercises - Literature: Scott Mueller, Upgrading and Repairing PCs (Nadogradnja i popravka PC-ja)

Forerunners of modern computers. Abacus: similar to today's counting frame; a device with moving parts; the moving parts are not interconnected; the user performs all operations.

Calculators: the first calculating machine was built in 1623 by Wilhelm Schickard, Germany. Pascaline: in 1642 Blaise Pascal began constructing the first calculating machine that could add and subtract. The machine was finished after three years of work and was named the Pascaline. By 1652, fifty machines had been produced. Users found it complicated to use, so production was discontinued.

In 1820, Charles Xavier Thomas built the first mechanical calculator. The calculator could add, subtract, multiply, and divide.

ANALYTICAL ENGINE: The English mathematician Charles Babbage automated the process of long calculations. He built the first automatic mechanical digital computer, i.e., a computer that operates on the basis of a program (1822). Data were entered using punched cards. The machine was meant to work with fifty-digit numbers and to have memory capacity for 1000 such numbers.

Electronic computers: 4 generations. First generation: built from vacuum tubes, without keyboard, monitor, or memory; large, slow, expensive.

ENIAC (Electronic Numerical Integrator and Computer), 1946: the first successful general-purpose electronic computer. 30 tons, 18,000 vacuum tubes. It was able to perform 100,000 calculations per second.

EDVAC: This machine was meant to have only one tenth of the components that made up ENIAC, and 100 times more memory. It was completed in 1949. -

MicroComputer Journal, January/February 1995

MicroComputer Journal: The print forum for the MicroComputer professional and semi-professional. January/February 1995. Cover lines: [...] With A Network; Use Your PC As A Multiple Metering Device; Video Monitors: A Guided Tour; An Alternative To DOS And Windows. U.S. $4.95, Canada $5.

68HC11 Controller & Languages. Program it in C or Assembly or FORTH or Basic. $99. Low Cost Development Package: Controller + Languages + Manuals on disk included! Package Price Breakthrough!

The NMIT-0020 Single Board Computer is perfect for dedicated controller with convenient interfaces for LCD displays and keypad. Intelligent LCD's up to 2 lines by 80 characters and matrix keypads up to 4x5 can be used. The processor is the popular F68HC11 with many features, including SCI and SPI serial channels, 8-bit 8-ch. A/D, 20 available I/O lines, Watch Dog Timer, 1/2K EEPROM and Max-FORTH w/Floating Point Package embedded in 12K internal ROM. SBC expands F68HC11 providing 3 28-pin JEDEC sockets for 8-32K RAMs, ROMs, EPROMs, EEPROMs, etc. RS-232 conversion supplied. Requires external regulated supply: 5V at -30 mA. Based on NMIX-0020 board, so, many features may be added as desired.... -

Toshiba - 20 Years of Leadership in Mobile Computing

Toshiba - 20 years of leadership in mobile computing

- T3200SX: "The office computer the size of a briefcase". Dimensions: 99 x 370 x 395 mm; Weight: 7.9 kg; Display: built-in VGA graphics, plasma display resolution of 640x480; Processor: Intel 80386SX, 16 MHz.
- T6600 C: "It can change to fit my style of working". Dimensions: 119 x 320 x 390 mm; Weight: 7.8 kg; Display: 10.4" active matrix TFT color display, 256 colors; Processor: Intel 486DX2, 66 MHz; HDD: 510 MB expandable to 1 GB.
- Tecra 750DVD: "DVD is the medium of the future". Dimensions: 54 x 240 x 303 mm; Weight: 3.8 kg; Processor: Intel Pentium® with MMX™ technology, 233 MHz int./66 MHz ext.; HDD: 4.77 GB; built-in DVD-ROM drive; Display: 13.3" TFT colour display.
- Portégé 4000: "Designed for a new era of mobile working". Dimensions: 31/34 x 270 x 248 mm; Weight: 1.7 – 1.9 kg; Processor: Mobile Intel Pentium III 750 MHz; HDD: 20 GB; Display: 12.1" TFT poly-silicon active matrix.
- Portégé R200: "The ultimate thin and secure ultra-portable". Dimensions: 286 x 229 x 9.9/19.8 mm; Weight: 1.2 kg; Processor: Intel Pentium M processor Ultra Low Voltage 753; Display: 12" XGA TFT polysilicon.

Timeline, 1985 onward:

- 1986: Introduction of the first portable PC featuring an internal hard disk
- 1990: First notebook PC with longer-lasting NiMH battery
- 1992: Introduction of the first portable PC featuring an Intel
- 1994: Introduction of the first notebook PC featuring an Intel Pentium
- 1995: First notebook PC incorporating a CD-ROM
- 2000: First notebook PC incorporating DVD/CD-RW multi-drive
- 2002: First full-performance convertible-type -

Számítógépes Architektúrák II (Computer Architectures II)

Computer Architectures II. Komló Csaba. MEDIA INFORMATICS PUBLICATIONS. Eger, 2013.

Hungarian adaptation of modern information technology degree programmes, TÁMOP-4.1.2-A/1-11/1-2011-0021. Reviewed by: University of West Hungary (Nyugat-magyarországi Egyetem), Regional Pedagogical Service and Research Centre. Responsible publisher: dr. Kis-Tóth Lajos. Produced at the printing house of Eszterházy Károly College, Eger. Manager: Kérészy László. Technical editor: Nagy Sándorné.

Contents

- 1. Introduction
- 1.1. Objectives, competences, and course completion requirements
- 1.1.1. Objective
- 1.1.2. Competences
- 1.1.3. Course completion requirements
- 1.2. Course content
- 2. Basic concepts of informatics
- 2.1. Objectives and competences
- 2.2. Course material
- 2.2.1. Mechanization of calculations
- 2.2.2. The birth of the computer
- 2.2.3. Von Neumann principles
- 2.2.4. -

20 Years of Emerging Technology: What's Next?

Vol. 20 | No. 1 | 2013. 20th Anniversary. 20 years of emerging technology: What’s next? The National Security Agency’s review of emerging technologies. Globe at a Glance | Pointers | Spinouts

GUEST Editor’s column, Deborah Frincke, PhD

It is my distinct privilege to introduce the 20th anniversary issue of The Next Wave (TNW). The timing is striking for me personally because I finished my own PhD and began my first faculty job some 20 years ago. I, along with other newly minted PhDs that year, dreamed of technological changes in so many areas: faster computers, making the user experience safer and friendlier, embedding our beloved technical devices in our personal lives . . . the list goes on. Many of those dreams have been realized or exceeded, and you’ll read about a few of them in this issue.

The world of 1993 believed 60 gigaflops was blazingly fast and thought individual users would never have access to such speeds, or the need to use them.

[...] L-Gopher, gave way to Internet browsers Mosaic and Netscape. Fast forward to 2013, where we are pleased, though not entirely amazed, to learn that we can control our tiny smartphone screens through our gaze, where wall displays are reasonable choices for operational environments, and where tangible user interfaces (i.e., those allowing users to interact with their information through their physical environment) are part of our commodity gaming systems.

Nowadays we expect intuitive design and responsiveness, and we protest if the haptic screen on our pedometer-powered video games is not up to snuff. User experiences are less bound by the traditional keyboard and mouse and the one-size-fits-all -

Vi Xử Lý (Microprocessors), Nguyễn Hoàng Dũng, DHBKHN

© DHBK 2005. CuuDuongThanCong.com https://fb.com/tailieudientucntt

Course contents

- 1. General introduction to microprocessor systems
- 2. The Intel 8088/8086 microprocessor
- 3. Assembly language programming for the 8086
- 4. Organizing data input/output
- 5. Interrupts and interrupt handling
- 6. Direct memory access (DMA)
- 7. Microprocessors in practice

Chapter 7: Microprocessors in practice

- 7.1 General purpose microprocessors
- 7.1.1 Intel 80x86
- 7.1.2 Development trends
- 7.2 Microcontrollers
- 7.2.1 Microchip and Motorola microcontrollers
- 7.2.2 The 8051 microcontroller family
- 7.2.3 The AVR microcontroller family
- 7.2.4 PSOC
- 7.2.5 Development trends
- 7.3 Digital signal processors
- 7.3.1 Texas Instruments
- 7.3.2 Motorola
- 7.3.3 Philips
- 7.3.4 Development trends

7.1.1 Intel microprocessors -

Mikroprocesory Intel 8086 – 80486 (Intel 8086 – 80486 Microprocessors)

Michal Brandejs, Mikroprocesory Intel 8086 – 80486. Copyright © Michal Brandejs, 1991, 2010, Faculty of Informatics, Masaryk University, Brno.

The following are trademarks of Intel Corporation and may only be used to identify Intel products: Intel, Intel287, Intel386, Intel387, Intel486, Intel487, Pentium.

This text was published by Grada in 1991. After the publishing contract expired, the author released the text electronically as a further edition on 1 September 2010. The text may be distributed only free of charge and with attribution to the author and this copyright notice. The text may be quoted freely, provided the source is cited as follows: Brandejs, M. Mikroprocesory Intel 8086 – 80486 [online]. Brno : Fakulta informatiky, Masarykova univerzita, 2010. Dostupný z WWW: http://www.fi.muni.cz/usr/brandejs/Brandejs_Mikroprocesory_Intel_8086_80486_2010.pdf

Contents

- 1 The Intel processor family
- 2 Intel 8086
- 2.1 Data types
- 2.2 Memory addressing on the 8086
- 2.3 Registers of the 8086
- 2.4 Stack
- 2.5 Interrupts
- 2.6 Controlling I/O devices
- 2.7 Initial processor state
- 2.8 Addressing techniques
- 2.8.1 Register
- 2.8.2 Immediate operand
- 2.8.3 Direct address
- 2.8.4 Indirect address
- 2.8.5 Based address
- 2.8.6 Indexed address
- 2.8.7 Combined address: base+index
- 2.8.8 Combined address: direct+base+index
- 2.8.9 Changing the segment register
- 2.9 Instruction set of the 8086
- 2.9.1 The MOV instruction
- 2.9.2 Arithmetic instructions
- 2.9.3 Logical instructions -

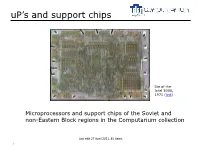

uP's and Support Chips

uP’s and support chips. Die of the Intel 8008, 1971. Microprocessors and support chips of the Soviet and non-Eastern Bloc regions in the Computarium collection. Last edit 27 April 2021, 45 items.

Sections: ► Soviet and Eastern Bloc ► Western and other regions (= non-Eastern Bloc), up to the 486 period.

Soviet and Eastern Bloc

Soviet series (labels are in Cyrillic!):

- 580: i8080 compatible
- 1801: PDP-11 compatible
- 1804: AMD 2903 compatible
- 1810: i8086 compatible
- 1816: i8048 compatible
- 1821: i8085 compatible
- 1858: Z80 compatible

See here: Soviet_integrated_circuit_designation; history of computing in the Soviet Union; conversion table from Cyrillic to Latin alphabet.

KR580VM80A. Manufacturer: Electropribor, Rodon. Country: CCCP. Model: KR580VM80A. Year: 1979. Type: 8 bit µP. Speed: 2.5 MHz. Clone of: i8080A. Function: microprocessor. Clone of the Intel 8080A microprocessor which was introduced in 1974 in the USA. Gift Carlo MULLESCH, COMPUTARIUM. http://www.cpushack.com/soviet-cpus.html#2 Database 1360, 1382

KR580IK57. Manufacturer: not stated. Country: CCCP. Model: KR580IK57. Year: 1989. Type: DMA controller. Speed: not stated. Clone of: i8257. Function: support. Clone of the Intel 8257 programmable DMA controller for the 8080 uP family. Gift Carlo MULLESCH. http://www.cpushack.com/soviet-cpus.html#2 Database 1361 https://datasheetspdf.com/pdf/702169/IntelCorporation/8257/1

KR580VN59. Manufacturer: Kvantor. Country: CCCP (Ukraine). Model: KR580VN59. Year: 1989. Type: interrupt controller. Speed: not stated. Clone of: i8259. Function: support. Clone of the Intel 8259 priority interrupt controller for the 8080 uP family. Gift Carlo MULLESCH. http://www.cpushack.com/soviet-cpus.html#2 Database 1362 https://datasheetspdf.com/pdf/702169/IntelCorporation/8257/1

KR580VG75. Manufacturer: -

Welcher Typ ist der Co...? (Which Type Is the Co...?) Type Identification of CPU and Coprocessor in MS-DOS PCs

Which type is the co...? Type identification of CPU and coprocessor in MS-DOS PCs.

The goal of probably every software developer is to satisfy the users of their program completely. One of the many criteria the programmer must take to heart is making optimal use of the CPU (and coprocessor) performance in the user's PC, because that is what brings speed. Because of the many different processor types, a good program must therefore find out for itself, before it starts, which "chief calculator" is about to execute it. How it does that is what you can read here.

At intervals of only a few years, Intel brought out ever newer generations of the 80x86 CPU series, each with a noticeable performance gain over its predecessors. Added to this was a steady increase in clock frequencies, from originally 5 MHz for the 8086 up to almost 50 MHz today. The instruction sets were also extended continually, and in the transition from the 80286 to the 80386 the architecture even changed from 16 to 32 bits. Every conceivable hardware configuration, plus the coprocessors, can be found among PC users today, which is why the software developer faces the difficult question: "Which CPU and which coprocessor should I tailor my program to?"

The programmer can, of course, restrict the program to the power offered by the 8086. Such software then gives away a large part of the potential performance on 386/486 machines. Alternatively, the programmer can exploit the special capabilities of the modern high-performance chips and accept that the software will not run on millions of 8086 or 80286 computers still in existence. The best solution, however, is ultimately for a program to make optimal use of the hardware resources. -

Számítógépes Konfigurációk (Computer Configurations)

COMPUTER CONFIGURATIONS. Antal Péter – Bóta László. MEDIA INFORMATICS PUBLICATIONS. Eger, 2011.

Reviewed by: CleverBoard Interaktív Eszközöket és Megoldásokat Forgalmazó és Szolgáltató Kft. The chapter "A könyvkereskedelem története" (The History of the Book Trade) was written by: Makai János. The project is implemented with the support of the European Union and co-financed by the European Social Fund. Responsible publisher: dr. Kis-Tóth Lajos. Produced at the printing house of Eszterházy Károly College, Eger. Manager: Kérészy László. Technical editor: Nagy Sándorné.

Development of an informatics curriculum based on the principle of course sharing (OCW) and of SCORM-compatible learning material for the librarian-informatics BA and MA in a linear programme structure, TÁMOP-4.1.2-08/1/A-2009-0005.

Contents

- 1. Introduction
- 1.1 Objective
- 1.2 Course content
- 1.3 Concise outline of the course
- 1.4 Competences and requirements
- 1.5 Study advice and notes
- 2. Computers in everyday life