Chapter 6 : Memory System

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Secondary Memory

Secondary memory is also known as external memory and is non-volatile. It is slower than main memory and is used to store data and information permanently. The CPU does not access secondary memory directly; instead, it is accessed through input-output routines. The contents of secondary memory are first transferred to main memory, where the CPU can then access them. Examples include hard disks, CD-ROMs, and DVDs.

Electronic data is a sequence of bits. This data can reside in either:
• Primary storage: main memory (RAM); relatively small, fast access, expensive (cost per MB), volatile (contents are lost when the power goes off)
• Secondary storage: disks and tape; large amounts of data, slower access, cheap (cost per MB), persistent (contents remain even when the power is off)

Data storage has expanded from text and numeric files to include digital music files, photographic files, video files, and much more. These new types of files require secondary storage devices with much greater capacity than floppy disks.

Primary storage (also called main memory or internal memory), often referred to simply as memory, is the only storage directly accessible to the CPU. Primary memory can be divided into volatile and non-volatile memories. Primary storage has three main functions:
1. It stores all or part of the program that is being executed.
2. It holds the data that are being used by the program.
3. It stores the operating system programs that manage the operation of the computer.

Limitations of primary storage:
1. Limited capacity, because the cost per bit of storage is high.
2. Volatility: data stored in it is lost when the electric power is turned off or interrupted.

Computer Organization and Architecture Designing for Performance Ninth Edition

William Stallings. Boston, Columbus, Indianapolis, New York, San Francisco, Upper Saddle River, Amsterdam, Cape Town, Dubai, London, Madrid, Milan, Munich, Paris, Montréal, Toronto, Delhi, Mexico City, São Paulo, Sydney, Hong Kong, Seoul, Singapore, Taipei, Tokyo.

Editorial Director: Marcia Horton; Executive Editor: Tracy Dunkelberger; Associate Editor: Carole Snyder; Director of Marketing: Patrice Jones; Marketing Manager: Yez Alayan; Marketing Coordinator: Kathryn Ferranti; Marketing Assistant: Emma Snider; Director of Production: Vince O’Brien; Managing Editor: Jeff Holcomb; Production Project Manager: Kayla Smith-Tarbox; Production Editor: Pat Brown; Manufacturing Buyer: Pat Brown; Creative Director: Jayne Conte; Designer: Bruce Kenselaar; Manager, Visual Research: Karen Sanatar; Manager, Rights and Permissions: Mike Joyce; Text Permission Coordinator: Jen Roach; Cover Art: Charles Bowman/Robert Harding; Lead Media Project Manager: Daniel Sandin; Full-Service Project Management: Shiny Rajesh/Integra Software Services Pvt. Ltd.; Composition: Integra Software Services Pvt. Ltd.; Printer/Binder: Edward Brothers; Cover Printer: Lehigh-Phoenix Color/Hagerstown; Text Font: Times Ten-Roman.

Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and electronically reproduced by permission of Pearson Education, Inc., Upper Saddle River, New Jersey. Figure 17.11: reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text. Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America.

Embedded DRAM

Raviprasad Kuloor, Semiconductor Research and Development Centre, Bangalore, IBM Systems and Technology Group.

Topics: Introduction to memory; DRAM basics and bitcell array; eDRAM operational details (case study); Noise concerns; Wordline driver (WLDRV) and level translators (LT); Challenges in eDRAM; Understanding a timing diagram (an example); References.

Acknowledgement:
• John Barth, IBM SRDC, for most of the slide content
• Madabusi Govindarajan
• Subramanian S. Iyer
• Many others

Memory classification revisited.

Motivation for a memory hierarchy. Infinite memory: the processor is attached to a memory store that is infinitely fast and infinitely large. Cycles per instruction (CPI) is the number of processor clock cycles required per instruction; in this case CPI = CPI[∞ cache]. Finite memory speed: the processor is attached to a memory store of finite speed and infinite size, so CPI = CPI[∞ cache] + FCP, where FCP is the finite cache penalty.

Locality of reference, spatial and temporal. Temporal: if you access something now, you will need it again soon (e.g., loops). Spatial: if you accessed something, you will also need its neighbor (e.g., arrays). Exploit this to divide memory into a hierarchy. [Figure: processor, register, L1 and L2 levels with hit and miss paths, labeled fast and slow.]

Cache size impacts cycles-per-instruction: the access rate reduces, so slower memory is sufficient. For a 5GHz -

CSCI 120 Introduction to Computation Bits... and Pieces (Draft)

Saad Mneimneh, Visiting Professor, Hunter College of CUNY.

1 Yes No Yes No... I am a Bit

You may recall from the previous lecture that the use of electromechanical relays, and in subsequent years diodes and transistors, made it possible to construct more advanced computers, e.g. ENIAC. This is attributed to the fact that these devices could function as on/off switches. On one hand, they create the ability to encode logic into the circuits of the computer. This means that the computer can perform different tasks under different conditions, i.e. the notion of a program. For instance, one could encode the logic if A OR B then C. On the other hand, these devices allow the engineers to worry less about the values that could possibly arise in the system: the switch is either on or off. It cannot be anything in between. This means that any errors due to fluctuation in voltage levels are greatly reduced. It would be enough to simply distinguish between high voltage and low voltage. This brings us to the question of analog versus digital.

In simple terms, a digital system encodes information using a number of devices that have discrete states (e.g. on/off switches). An analog system encodes information using a device that has continuous states (e.g. a measurement in an electric circuit).

To build an intuition for digital versus analog, consider the problem of encoding a number using buckets of water. One possibility is to use two kinds of buckets, full and empty.

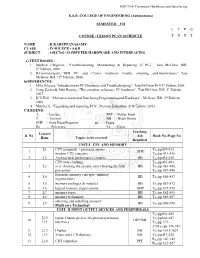

Computer Hardware and Interfacing KSR

16EC764 / Computer Hardware and Interfacing. K.S.R. COLLEGE OF ENGINEERING (Autonomous). SEMESTER – VII. COURSE / LESSON PLAN SCHEDULE. L T P C: 3 0 0 3.

NAME: K.KARUPPANASAMY
CLASS: IV-B.E ECE - A&B
SUBJECT: 16EC764 / COMPUTER HARDWARE AND INTERFACING

a) TEXT BOOKS:
1. Stephen J. Bigelow, “Troubleshooting, Maintaining & Repairing of PCs”, Tata McGraw Hill, 5th Edition, 2008.
2. B. Govindarajulu, “IBM PC and Clones hardware trouble shooting and maintenance”, Tata McGraw Hill, 12th Edition, 2008.

b) REFERENCES:
1. Mike Meyers, “Introduction to PC Hardware and Troubleshooting”, Tata McGraw Hill, 1st Edition, 2005.
2. Craig Zacker & John Rourke, “The complete reference: PC hardware”, Tata McGraw Hill, 1st Edition, 2007.
3. D.V. Hall, “Microprocessors and Interfacing: Programming and Hardware”, McGraw Hill, 2nd Edition, 2006.
4. Mueller S., “Upgrading and repairing PCs”, Pearson Education, 21st Edition, 2013.

c) LEGEND: L - Lecture; PPT - Power Point; T - Tutorial; BB - Black Board; OHP - Over Head Projector; pp - Pages; Rx - Reference; Ex - Extra

UNIT-I CPU AND MEMORY

| S. No | Lecture Hour | Topics to be covered | Teaching Aid Required | Book No./Page No |
|---|---|---|---|---|
| 1 | L1 | CPU essentials - processor modes, modern CPU concepts | OHP | TX1/pp 429-431, TX1/pp 431-436 |
| 2 | L2 | Architectural performance features | BB | TX1/pp 436-439 |
| 3 | L3 | CPU over clocking, over clocking the system, over clocking the Intel processors | BB | TX1/pp 481-483, TX1/pp 483-486, TX1/pp 487-490 |
| 4 | L4 | Essential memory concepts - memory organizations | BB | TX1/pp 856-857 |
| 5 | L5 | memory packages & modules | BB | TX1/pp 857-872 |

6 L6 logical memory -

Memory Systems

Patterson & Hennessy, Chapter 5.

Memory Hierarchy: CPU registers; primary memory, made up of cache (MC) and main memory (MM); secondary memory on disk (MD); and archival secondary memory (MA).

Memory content: MC ⊆ MM ⊆ MD ⊆ MA

Memory parameters:
• Access time: increases with distance from the CPU
• Cost/bit: decreases with distance from the CPU
• Capacity: increases with distance from the CPU

Other factors:
• Energy consumption
• Reliability
• Size/density

§ 5.1 Introduction

Memory Technology:
• Static RAM (SRAM): 0.5ns – 2.5ns, $2000 – $5000 per GB
• Dynamic RAM (DRAM): 50ns – 70ns, $20 – $75 per GB
• Magnetic disk: 5ms – 20ms, $0.20 – $2 per GB

Ideal memory: the access time of SRAM with the capacity and cost/GB of disk.

[Figure: levels in the memory hierarchy, Level 1 through Level n, showing increasing distance from the CPU in access time and the size of the memory at each level.]

Locality of Reference: Programs access a small proportion of their address space at any time. If an item is referenced:
- temporal locality: it will tend to be referenced again soon
- spatial locality: nearby items will tend to be referenced soon

Why does code have locality? Our initial focus: two levels (upper, lower).
- block: minimum unit of data transferred
- hit: data requested is in the upper level
- miss: data requested is not in the upper level

Taking Advantage of Locality: Use a memory hierarchy. Store everything on disk. Copy recently accessed (and nearby) items from disk to smaller DRAM memory (main memory). Copy more recently accessed (and nearby) items from DRAM to smaller/faster SRAM memory.
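The block, hit, and miss definitions in the excerpt above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the function name `count_hits`, the block size, the number of blocks in the upper level, and the least-recently-used replacement rule are all choices made for illustration and do not come from the slides.

```python
from collections import OrderedDict

def count_hits(addresses, block_size=4, num_blocks=2):
    """Count hits and misses for an upper level that holds `num_blocks` blocks.

    Assumption: the least recently used block is replaced on a miss.
    """
    upper = OrderedDict()  # block number -> None, ordered oldest to newest
    hits = misses = 0
    for addr in addresses:
        block = addr // block_size  # a block is the minimum unit transferred
        if block in upper:
            hits += 1               # hit: requested data is in the upper level
            upper.move_to_end(block)
        else:
            misses += 1             # miss: copy the whole block from the lower level
            upper[block] = None
            if len(upper) > num_blocks:
                upper.popitem(last=False)  # drop the least recently used block
    return hits, misses

# Neighboring addresses share blocks (spatial locality).
sequential = list(range(16))
# Four far-apart addresses revisited in a cycle; none share a block.
scattered = [0, 40, 80, 120] * 4

print(count_hits(sequential))  # (12, 4)
print(count_hits(scattered))   # (0, 16)
```

The sequential pattern misses once per block and then hits on the neighbors, while the scattered pattern never finds its data in this deliberately tiny upper level.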

BES Scientific and Facility Highlights/Accomplishments FY 1997

Thirteen Years of Basic Energy Sciences Accomplishments. Provided below are vignettes of some significant Basic Energy Sciences (BES) program accomplishments from FY 1997 through FY 2009. These brief accounts appear in the BES sections of the President’s FY 1999 through FY 2011 Budget Requests to Congress, respectively. The selected program highlights are representative of the broad range of studies supported in the BES program.

Selected FY 2009 Scientific Highlights/Accomplishments: Materials Sciences and Engineering Subprogram.

Encoding Information at Sub-Atomic Scales. Using state-of-the-art nanoscience instruments and novel techniques, scientists have set a record for the smallest writing, forming letters with features that are one third of a billionth of a meter, or 0.3 nanometers. This sub-atomic writing was achieved by using a scanning tunnelling microscope (STM) to precisely position carbon monoxide molecules into a desired pattern on a copper surface. The electrons that move around on the copper surface act as waves that interfere with the carbon monoxide molecules and with each other, forming an interference pattern that depends on the positions of the molecules. By altering the arrangement of the molecules, specific electron interference patterns are created, thereby encoding information for later retrieval. In addition, several data sets can be stored in a single molecular arrangement by using multiple electron energies, one of the variables possible with the STM. The same STM technology can then be used to read the data that has been stored. Because the information is stored in the electron interference pattern, rather than in the individual carbon monoxide molecules or surface copper atoms, the storage density is not limited by the size of an atom.

Random Access Memory (Ram)

www.studymafia.org. A Seminar Report on RANDOM ACCESS MEMORY (RAM). Submitted in partial fulfillment of the requirement for the award of the degree of Bachelor of Technology in Computer Science. SUBMITTED TO: www.studymafia.org. SUBMITTED BY: www.studymafia.org.

Acknowledgement. I would like to thank respected Mr…….. and Mr. …….. for giving me such a wonderful opportunity to expand my knowledge of my own branch and for giving me guidelines to present a seminar report. It helped me a lot to realize what we study for. Secondly, I would like to thank my parents, who patiently helped me as I went through my work and helped me to modify and eliminate some of the irrelevant or unnecessary material. Thirdly, I would like to thank my friends, who helped me to make my work more organized and well-stacked till the end. Next, I would thank Microsoft for developing such a wonderful tool as MS Word. It helped my work a lot to remain error-free. Last but clearly not the least, I would thank the Almighty for giving me the strength to complete my report on time.

Preface. I have made this report on the topic RANDOM ACCESS MEMORY (RAM), and I have tried my best to elucidate all the details relevant to the topic. In the beginning, I have tried to give a general view of the topic. My efforts and the wholehearted cooperation of each and everyone have ended on a successful note. I express my sincere gratitude to ………….. who assisted me throughout the preparation of this topic.

National Institute of Standards and Technolog....Xml

G:\COMP\SCIENCE\NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOG....XML

NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY ACT [[ACT OF MARCH 3, 1901, Chapter 872 of the 56th Congress]] [As Amended Through P.L. 116–283, Enacted January 1, 2021]

[Currency: This publication is a compilation of the text of chapter 872 of the 56th Congress. It was last amended by the public law listed in the As Amended Through note above and below at the bottom of each page of the pdf version and reflects current law through the date of the enactment of the public law listed at https://www.govinfo.gov/app/collection/comps/]

[Note: While this publication does not represent an official version of any Federal statute, substantial efforts have been made to ensure the accuracy of its contents. The official version of Federal law is found in the United States Statutes at Large and in the United States Code. The legal effect to be given to the Statutes at Large and the United States Code is established by statute (1 U.S.C. 112, 204).]

CHAP. 872. AN ACT To establish the National Bureau of Standards. Be it enacted by the Senate and House of Representatives of the United States of America in Congress assembled,

FINDINGS AND PURPOSES

SECTION 1. [15 U.S.C. 271] (a) The Congress finds and declares the following: (1) The future well-being of the United States economy depends on a strong manufacturing base and requires continual improvements in manufacturing technology, quality control, and techniques for ensuring product reliability and cost-effectiveness.

A Novel Cache Architecture for Multicore Processor Using Vlsi Sujitha .S, Anitha .N

International Journal of Scientific & Engineering Research, Volume 4, Issue 6, June-2013, ISSN 2229-5518.

Abstract: Memory cell design for cache memories is a major concern in current microprocessors, mainly due to its impact on energy consumption, area and access time. Cache memories dissipate an important amount of the energy budget in current microprocessors. An n-bit cache cell, namely macrocell, has been proposed. This cell combines SRAM and eDRAM technologies with the aim of reducing energy consumption while maintaining the performance. The proposed M-Cache is implemented in processor architecture and its performance is evaluated. The main objective of this project is to design a newly modified cache of Macrocell (4T cell). The speed of these cells is comparable to the speed of 6T SRAM cells. The performance of the processor architecture including this modified cache is better than the conventional Macrocell cache design. Further, this Modified Macrocell is implemented in Mobile processor architecture and compared with the conventional memory. Also, a newly designed Femtocell is compared with both the macrocell and Modified m-cache cell.

Index Terms: Femtocell, L1 cache, L2 cache, Macrocell, Modified M-cell, UART.

1 INTRODUCTION

Processors are generally able to perform operations on operands faster than the access time of large capacity ...

The cache operation is that when the CPU requests the contents of a memory location, it first checks the cache for this data. If the data is present, it is fetched from the cache (fast); if not, the required block is read from main memory into the cache and then delivered from the cache to the CPU. The cache includes tags to identify which block of main memory is in each cache slot.
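The cache operation described in this excerpt (check the cache, fetch the block from main memory on a miss, use tags to identify which block occupies a slot) can be sketched in a few lines. The excerpt does not say how blocks are mapped to slots, so this sketch assumes direct mapping; the class name `DirectMappedCache` and the sizes used are hypothetical and chosen only for illustration.

```python
class DirectMappedCache:
    """Toy cache: each main-memory block can live in exactly one slot (assumed mapping)."""

    def __init__(self, memory, num_slots=4, block_size=4):
        self.memory = memory
        self.num_slots = num_slots
        self.block_size = block_size
        self.tags = [None] * num_slots   # tag identifies which block is in each slot
        self.data = [None] * num_slots   # cached copy of the block
        self.hits = 0
        self.misses = 0

    def read(self, address):
        block = address // self.block_size
        slot = block % self.num_slots
        tag = block // self.num_slots
        if self.tags[slot] == tag:
            self.hits += 1               # present: serve from the cache
        else:
            self.misses += 1             # absent: read the required block into the cache
            start = block * self.block_size
            self.data[slot] = self.memory[start:start + self.block_size]
            self.tags[slot] = tag
        # In both cases the word is delivered from the cache to the caller.
        return self.data[slot][address % self.block_size]

memory = list(range(100, 164))           # 64 words of made-up main memory
cache = DirectMappedCache(memory)
values = [cache.read(a) for a in (0, 1, 2, 16, 0)]
print(values, cache.hits, cache.misses)  # [100, 101, 102, 116, 100] 2 3
```

Addresses 0 and 16 map to the same slot with different tags, so the second read of address 0 misses again; the tag comparison is what detects that the slot now holds a different block.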

Memory Hierarchy Summary

Carnegie Mellon. 15-213 / 18-213: Introduction to Computer Systems, 10th Lecture, Feb 14, 2013. Instructors: Seth Copen Goldstein, Anthony Rowe, Greg Kesden.

The Memory Hierarchy. Today: DRAM as building block for main memory; locality of reference; caching in the memory hierarchy; storage technologies and trends.

Byte-Oriented Memory Organization (from 2nd lecture). Programs refer to data by address. Conceptually, envision it as a very large array of bytes. In reality, it’s not, but can think of it that way. An address is like an index into that array, and a pointer variable stores an address. Note: the system provides private address spaces to each “process”. Think of a process as a program being executed. So, a program can clobber its own data, but not that of others.

Simple Memory Addressing Modes (from 5th lecture).
- Normal, (R), Mem[Reg[R]]: register R specifies the memory address. Aha! Pointer dereferencing in C. Example: movl (%ecx),%eax
- Displacement, D(R), Mem[Reg[R]+D]: register R specifies the start of a memory region, and constant displacement D specifies the offset. Example: movl 8(%ebp),%edx

Traditional Bus Structure Connecting CPU and Memory. A bus is a collection of parallel wires that carry address, data, and control signals. Buses are typically shared by multiple devices. [Figure: CPU chip (register file, ALU, bus interface) connected by the system bus to the I/O bridge, which connects by the memory bus to main memory.]

Memory Read Transaction (1). CPU places address A on the memory bus. Load operation: movl A, %eax

Memory Read Transaction (2). Main memory reads A from the memory bus, retrieves word x, and places it on the bus.

Memory Read Transaction (3). CPU reads word x from the bus and copies it into register ...
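The "very large array of bytes" view and the two addressing modes in this excerpt, Mem[Reg[R]] and Mem[Reg[R]+D], can be mimicked with a byte array and a dictionary of registers. This is a rough model, not how the hardware is built: the 64-byte memory, the register contents, and the helper names `store32` and `load32` are all assumptions made for the sketch. Values are stored as 32-bit little-endian words, matching x86 `movl`.

```python
import struct

memory = bytearray(64)                       # memory modeled as an array of bytes
reg = {"ecx": 16, "ebp": 32, "eax": 0, "edx": 0}

def store32(addr, value):
    """Write a 32-bit little-endian word starting at byte address `addr`."""
    memory[addr:addr + 4] = struct.pack("<I", value)

def load32(addr):
    """Read a 32-bit little-endian word starting at byte address `addr`."""
    return struct.unpack("<I", memory[addr:addr + 4])[0]

store32(16, 0xDEADBEEF)                      # made-up data for the example
store32(40, 1234)

# Normal mode (R): Mem[Reg[R]], as in movl (%ecx),%eax
reg["eax"] = load32(reg["ecx"])

# Displacement mode D(R): Mem[Reg[R]+D], as in movl 8(%ebp),%edx
reg["edx"] = load32(reg["ebp"] + 8)

print(hex(reg["eax"]), reg["edx"])           # 0xdeadbeef 1234
```

In both modes the register holds an address used as an index into the byte array, which is the same idea as dereferencing a pointer in C.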

Problem 1 : Locality of Reference. [10 Points] Problem 2 : Virtual Memory

CMU 18–746 Storage Systems Spring 2010 Solutions to Homework 0 Problem 1 : Locality of reference. [10 points] Spatial locality is the concept that objects logically “close” in location to an object in the cache are more likely to be accessed than objects logically “far.” For example, if disk block #3 is read and placed in the cache, it is more likely that disk block #4 will be referenced soon than block #31,415,926 because blocks #3 and #4 are close to each other on the disk. Temporal locality is the concept that items more recently accessed in the cache are more likely to be accessed than older, “stale” blocks. For example, if disk blocks are read and placed in the cache in the order #271, #828, #182, then it is more likely that disk block #182 will be referenced again soon than block #271. Another way of thinking of this is that a block is likely to be accessed multiple times in a short span of time. Problem 2 : Virtual memory. [20 points] (a) Swapping is the process of evicting a virtual memory page from physical memory (writing the contents of the page to a backing store [for example, a disk]) and replacing the freed memory with another page (either a newly created page, or an existing page read from the backing store). In essence, one virtual memory page is “swapped” with another. Swapping is initiated whenever all physical page frames are allocated and a virtual memory reference is made to a page that’s not in memory.
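The swapping condition in part (a) can be traced with a short simulation: a swap happens only when every physical frame is already allocated and the referenced page is not in memory. The homework text does not say which page gets evicted, so this sketch assumes first-in, first-out eviction; the function name `simulate_swapping` and the reference string are hypothetical.

```python
from collections import deque

def simulate_swapping(references, num_frames):
    """Return (faults, swaps) for a sequence of virtual page references.

    Assumption: the page that has been resident longest is evicted (FIFO).
    """
    frames = deque()      # resident pages, oldest first
    faults = 0            # references to pages not in memory
    swaps = 0             # faults that also required evicting a resident page
    for page in references:
        if page in frames:
            continue      # page is resident; nothing to do
        faults += 1
        if len(frames) == num_frames:
            frames.popleft()   # all frames allocated: evict one page to the backing store
            swaps += 1
        frames.append(page)    # bring the referenced page into the freed frame
    return faults, swaps

print(simulate_swapping([1, 2, 3, 1, 4, 1, 2], num_frames=3))  # (6, 3)
```

The first three faults fill empty frames without swapping; only the later faults, which occur once all three frames are in use, evict a resident page.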