NMT Based Similar Language Translation for Hindi - Marathi

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

ZEYLANICA a Study of the Peoples and Languages of Sri Lanka

ZEYLANICA: A Study of the Peoples and Languages of Sri Lanka. Asiff Hussein. Second Edition: September 2014. ISBN 978-955-0028-04-7. © Asiff Hussein. Printed by: Printel (Pvt) Ltd, 21/11, 4th Lane, Araliya Uyana, Depanama, Pannipitiya. Published by: Neptune Publications. CONTENTS: Chapter 1, Legendary peoples of Lanka; Chapter 2, The Veddas, the aboriginal inhabitants of Lanka and their speech; Chapter 3, The Origins of the Sinhalese nation and the Sinhala language; Chapter 4, The Origins of the Sri Lankan Tamils and the Tamil language; Chapter 5, The Sri Lankan Moors and their language; Chapter 6, The Malays of Sri Lanka and the local Malay language; Chapter 7, The Memons, a people of North Indian origin and their language; Chapter 8, Peoples of European origin. The Portuguese and Dutch Burghers; Chapter 9, The Kaffirs. A people of African origin; Chapter 10, The Ahikuntaka. The Gypsies of Sri Lanka. INTRODUCTORY NOTE: The system of transliteration employed in the text, save for citations, is the standard method. Thus dots below letters represent retroflex sounds, which are pronounced with the tip of the tongue striking the roof of the mouth further back than for dental sounds, which are articulated by placing the tip of the tongue against the upper front teeth. Among the other sounds transliterated here, c represents the voiceless palato-alveolar affricate (as sounded in the English church) and ś the palatal sibilant (as sounded in English show). The lingual which will be found occurring in Sanskrit words is similar in pronunciation to the palatal .

Diachrony of Ergative Future

THE EVOLUTION OF THE TENSE-ASPECT SYSTEM IN HINDI/URDU: THE STATUS OF THE ERGATIVE ALIGNMENT. Annie Montaut, INALCO, Paris. Proceedings of the LFG06 Conference, Universität Konstanz. Miriam Butt and Tracy Holloway King (Editors). 2006. CSLI Publications. http://csli-publications.stanford.edu/ Abstract: The paper deals with the diachrony of the past and perfect system in Indo-Aryan with special reference to Hindi/Urdu. Starting from the acknowledgement of ergativity as a typologically atypical feature among the family of Indo-European languages and as specific to the Western group of Indo-Aryan dialects, I first show that such an evolution has been central to the Romance languages too, and that non-ergative Indo-Aryan languages have not ignored the structure but at a certain point went further along the same historical logic, as have the Romance languages. I will then propose an analysis of the structure as a predication of localization similar to other stative predications (mainly with “dative” subjects) in Indo-Aryan, supporting this claim by an attempt at etymological inquiry into the markers for “ergative” case in Indo-Aryan. Introduction: When George Grierson, in the full rise of language classification at the turn of the last century, classified the languages of India, he defined for Indo-Aryan an inner circle supposedly closer to the original Aryan stock, characterized by the lack of conjugation in the past. This inner circle included Hindi/Urdu and Eastern Panjabi, which indeed exhibit no personal endings in the definite past, but only gender-number agreement, therefore pertaining more to the adjectival/nominal class for their morphology (calâ, go-MSG “went”, kiyâ, do-MSG “did”, bola, speak-MSG “spoke”).

Phonology of Marathi-Hindi Contact in Eastern Vidarbha

Volume II, Issue VII, November 2014 - ISSN 2321-7065. Change in Progress: Phonology of Marathi-Hindi Contact in Eastern Vidarbha. Rahul N. Mhaiskar, Assistant Professor, Department of Linguistics, Deccan College Post Graduate and Research Institute, Yerwada, Pune. Abstract: Language contact studies examine multilingualism and bilingualism in a particular language society. There are two divisions of Vidarbha, Nagpur (eastern) and Amaravati (western). It occupies 21.3% of the area of the state of Maharashtra. This area is connected to the Hindi-speaking states of Madhya Pradesh and Chhattisgarh. When speakers of different languages interact closely, their languages naturally influence each other. There is close interaction between the speakers of Hindi and local Marathi, and they are influencing each other’s languages. A majority of Vidarbhians speak Varhadi, a dialect of Marathi. The present paper aims to look into the phonological nature of Marathi-Hindi contact in eastern Vidarbha. Standard Marathi is used as a medium of instruction for education, and Hindi is used for informal communication in this area. This situation creates phonological variation in Marathi; for instance, the standard Marathi ‘c’ is pronounced like Hindi ‘þ’. E.g.: Marathi pac, local variety paþ, Hindi panþ ‘five’; Marathi cor, local variety þor, Hindi þor ‘thief’. Thus, this paper is an attempt to examine the phonological changes that occur due to the influence of Hindi. Keywords: Marathi-Hindi, Language Contact, Phonology, Vidarbha. http://www.ijellh.com Introduction: Multilingualism and bilingualism are likely to be common phenomena throughout the world. There are many languages spoken in the world, and there is much variation in language over short distances.

Language and Literature

1 Indian Languages and Literature. Introduction: Thousands of years ago, the people of the Harappan civilisation knew how to write. Unfortunately, their script has not yet been deciphered. Despite this setback, it is safe to state that the literary traditions of India go back over 3,000 years. India is a huge land with a continuous history spanning several millennia. There is a staggering degree of variety and diversity in the languages and dialects spoken by Indians. This diversity is a result of the influx of languages and ideas from all over the continent, mostly through migration from Central, Eastern and Western Asia. There are differences and variations in the languages and dialects as a result of several factors: ethnicity, history, geography and others. There is a broad social integration among all the speakers of a certain language. In the beginning, languages and dialects developed in the different regions of the country in relative isolation. In India, languages are often a mark of identity of a person and define regional boundaries. Cultural mixing among various races and communities led to the mixing of languages and dialects to a great extent, although they still maintain regional identity. In free India, the broad geographical distribution pattern of major language groups was used as one of the decisive factors for the formation of states. This gave a new political meaning to the geographical pattern of the linguistic distribution in the country. According to the 1961 census figures, the most comprehensive data on languages collected in India, there were 187 languages spoken by different sections of our society.

International Multidisciplinary Research Journal

Vol 4 Issue 9 March 2015. ISSN No: 2231-5063. ORIGINAL ARTICLE. International Multidisciplinary Research Journal: Golden Research Thoughts. Chief Editor: Dr. Tukaram Narayan Shinde. Associate Editor: Dr. Rajani Dalvi. Publisher: Mrs. Laxmi Ashok Yakkaldevi. Honorary: Mr. Ashok Yakkaldevi. Welcome to GRT. RNI MAHMUL/2011/38595. Golden Research Thoughts Journal is a multidisciplinary research journal, published monthly in English, Hindi and Marathi. All research papers submitted to the journal will be double-blind peer reviewed and refereed by members of the editorial board. Readers will include investigators in universities, research institutes, government and industry with research interest in the general subjects. International Advisory Board: Flávio de São Pedro Filho (Federal University of Rondonia, Brazil); Mohammad Hailat (Dept. of Mathematical Sciences, University of South Carolina Aiken); Hasan Baktir (English Language and Literature Department, Kayseri); Kamani Perera (Regional Center For Strategic Studies, Sri Lanka); Abdullah Sabbagh (Engineering Studies, Sydney); Ghayoor Abbas Chotana (Dept of Chemistry, Lahore University of Management Sciences [PK]); Janaki Sinnasamy (Librarian, University of Malaya); Ecaterina Patrascu (Spiru Haret University, Bucharest); Anna Maria Constantinovici (AL. I. Cuza University, Romania); Romona Mihaila (Spiru Haret University, Romania); Loredana Bosca (Spiru Haret University, Romania); Ilie Pintea (Spiru Haret University, Romania); Delia Serbescu (Spiru Haret University, Bucharest, Romania); Fabricio Moraes de Almeida (Federal University of Rondonia, Brazil); Xiaohua Yang (PhD, USA); George - Calin SERITAN (Faculty of Philosophy and Socio-Political Sciences, Al. I. Cuza University, Iasi); Anurag Misra (DBS College, Kanpur); Titus Pop (PhD, Partium Christian University, Oradea, Romania). Editorial Board: Pratap Vyamktrao Naikwade, Iresh Swami, Rajendra Shendge, ASP College Devrukh, Ratnagiri, MS India, Ex - VC.

Map by Steve Huffman; Data from World Language Mapping System

[Map labels: country and language names running from Svalbard, Greenland and Iceland across Europe to Kazakhstan and Mongolia. The map layout is not recoverable from the extracted text.]

Languages of New York State Is Designed As a Resource for All Education Professionals, but with Particular Consideration to Those Who Work with Bilingual Students

THE LANGUAGES OF NEW YORK STATE: A CUNY-NYSIEB GUIDE FOR EDUCATORS. LUISANGELYN MOLINA, GRADE 9. ALEXANDER FUNK. This guide was developed by CUNY-NYSIEB, a collaborative project of the Research Institute for the Study of Language in Urban Society (RISLUS) and the Ph.D. Program in Urban Education at the Graduate Center, The City University of New York, and funded by the New York State Education Department. The guide was written under the direction of CUNY-NYSIEB's Project Director, Nelson Flores, and the Principal Investigators of the project: Ricardo Otheguy, Ofelia García and Kate Menken. For more information about CUNY-NYSIEB, visit www.cuny-nysieb.org. Published in 2012 by CUNY-NYSIEB, The Graduate Center, The City University of New York, 365 Fifth Avenue, NY, NY 10016. [email protected]. ABOUT THE AUTHOR: Alexander Funk has a Bachelor of Arts in music and English from Yale University, and is a doctoral student in linguistics at the CUNY Graduate Center, where his theoretical research focuses on the semantics and syntax of a phenomenon known as ‘non-intersective modification.’ He has taught for several years in the Department of English at Hunter College and the Department of Linguistics and Communications Disorders at Queens College, and has served on the research staff for the Long-Term English Language Learner Project headed by Kate Menken, as well as on the development team for CUNY’s nascent Institute for Language Education in Transcultural Context. Prior to his graduate studies, Mr. Funk worked for nearly a decade in education: as an ESL instructor and teacher trainer in New York City, and as a gym, math and English teacher in Barcelona.

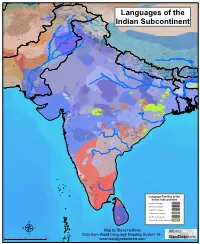

Map by Steve Huffman; Data from World Language Mapping System

[Map: Languages of the Indian Subcontinent. Language-name labels (Tajiki, Shughni, Wakhi, Pashto, Kashmiri, Ladakhi, Panjabi, Balochi, Nepali, Marwari and many others) are scattered in the extracted text; the map layout is not recoverable.]

A Print Version of All the Papers of July 2010 Issue in Book Format

LANGUAGE IN INDIA: Strength for Today and Bright Hope for Tomorrow. Volume 10 : 7 July 2010. ISSN 1930-2940. Managing Editor: M. S. Thirumalai, Ph.D. Editors: B. Mallikarjun, Ph.D.; Sam Mohanlal, Ph.D.; B. A. Sharada, Ph.D.; A. R. Fatihi, Ph.D.; Lakhan Gusain, Ph.D.; K. Karunakaran, Ph.D.; Jennifer Marie Bayer, Ph.D.; S. M. Ravichandran, Ph.D. www.languageinindia.com

Contents

1. EAT Expressions in Manipuri, 1-15. N. Pramodini, Ph.D.
2. Learning from Movies - 'Slumdog Millionaire' and Language Awareness, 16-26. Maya Khemlani David, Ph.D. & Caesar DeAlwis, Ph.D.
3. Maternal Interaction and Verbal Input in Normal and Hearing Impaired Children, 27-36. Ravikumar, M.Sc., Haripriya, G., M.Sc., and Shyamala, K. C., Ph.D.
4. Role of L2 Motivation and the Performance of Intermediate Students in the English (L2) Exams in Pakistan, 37-49. Tahir Ghafoor Malik, M.S., Ph.D. Candidate
5. Problems in Ph.D. English Degree Programme in Pakistan - The Issue of Quality Assurance, 50-60. Umar-ud-Din, M. Kamal Khan and Shahzad Mahmood
6. Using Technology in the English Language Classroom, 61-77. Renu Gupta, Ph.D.
7. Teaching Literature through Language – Some Considerations, 78-90. Abraham Panavelil Abraham, Ph.D.
8. e-Learning of Japanese Pictography - Some Perspectives, 91-102. Sanjiban Sekhar Roy, B.E., M.Tech., and Santanu Mukerji, M.A.
9. Is It a Language Worth Researching? Ethnographic Challenges in the Study of Pahari Language, 103-110. Muhammad Gulfraz Abbasi, M. A., Ph.D. Scholar
10.

Natural Language Processing Tasks for Marathi Language Pratiksha Gawade, Deepika Madhavi, Jayshree Gaikwad, Sharvari Jadhav I

International Journal of Engineering Research and Development, e-ISSN: 2278-067X, p-ISSN: 2278-800X, www.ijerd.com, Volume 6, Issue 7 (April 2013), PP. 88-91. Natural Language Processing Tasks for Marathi Language. Pratiksha Gawade, Deepika Madhavi, Jayshree Gaikwad, Sharvari Jadhav. I. T. Department, Padmabhushan Vasantdada Patil Pratishthan’s College Of Engineering, Sion (East), Mumbai-400 022. Abstract: Natural language processing is a process for handling natural languages in order to understand them in more detail. It will provide us with the complete grammatical structure of a word of the source language in English. In present systems such as a dictionary, we only have the word with its meaning, but it does not provide us with grammar. In our proposed system we present the parse tree, which shows the complete grammatical structure. It will show all the tags, chunks, etc., which help to understand the language in a more detailed way. Keywords: NLP, Morphological analyser, inflection rules, parse tree. I. INTRODUCTION 1.1 Major tasks in NLP: The following is a list of some of the most commonly researched tasks in NLP. Note that some of these tasks have direct real-world applications, while others more commonly serve as subtasks that are used to aid in solving larger tasks. What distinguishes these tasks from other potential and actual NLP tasks is not only the volume of research devoted to them but the fact that for each one there is typically a well-defined problem setting, a standard metric for evaluating the task, standard corpora on which the task can be evaluated, and competitions devoted to the specific task.

Elementary Marathi Syllabus SASLI 2019

ELEMENTARY MARATHI, ASIALANG 317 & ASIALANG 327. SASLI-2019, UW-MADISON. Classroom: Van Hise Hall, Room-379, Level One. 8:30 am-1:00 pm. Instructor: Milind Ranade. Email: [email protected] Phone Number: 609-290-8352. Office Hours: Every weekday from 4:00 PM to 5:00 PM at the Memorial Union. Credit Hours: ASIALANG 317 (4.0 credits), ASIALANG 327 (4.0 credits). The credit hours for this course are met by an equivalent of academic year credits of “One hour (i.e. 50 minutes) of classroom or direct faculty/instructor and a minimum of two hours of out of class student work each over approximately 15 weeks, or an equivalent amount of engagement over a different number of weeks.” Students will meet with the instructor for a total of 156 hours of class (4 hours per day, 5 days a week) over an 8-week period. COURSE DESCRIPTION: The Elementary Marathi course offers an introduction to, and study of, one of the most culturally rich languages from India. With more than seventy million native speakers and twenty million all over India and the world, Marathi is one of the most widely spoken languages of India. The foundation of the 'Bhakti' movement was laid by the twelfth-century poet and philosopher Sant Dnyaneshwar. He composed his literature in Marathi, a language of common people, and not in Sanskrit, a language of the elites. The tradition of devotion was carried forward by Sant Janabai, Sant Namdeo, Sant Tukaram, Sant Eknath and many other saints. Their influence on the culture of Maharashtra is so great that Marathi has the largest compilation of devotional songs and literature amongst all the Prakrit languages in India.

Comparative Study of Indexing and Search Strategies for the Hindi, Marathi, and Bengali Languages

Comparative Study of Indexing and Search Strategies for the Hindi, Marathi, and Bengali Languages. LJILJANA DOLAMIC and JACQUES SAVOY, University of Neuchatel. The main goal of this article is to describe and evaluate various indexing and search strategies for the Hindi, Bengali, and Marathi languages. These three languages are ranked among the world’s 20 most spoken languages and they share similar syntax, morphology, and writing systems. In this article we examine these languages from an Information Retrieval (IR) perspective through describing the key elements of their inflectional and derivational morphologies, and suggest a light and more aggressive stemming approach based on them. In our evaluation of these stemming strategies we make use of the FIRE 2008 test collections, and then to broaden our comparisons we implement and evaluate two language independent indexing methods: the n-gram and trunc-n (truncation of the first n letters). We evaluate these solutions by applying our various IR models, including the Okapi, Divergence from Randomness (DFR) and statistical language models (LM) together with two classical vector-space approaches: tf idf and Lnu-ltc. Experiments performed with all three languages demonstrate that the I(ne)C2 model derived from the Divergence from Randomness paradigm tends to provide the best mean average precision (MAP). Our own tests suggest that improved retrieval effectiveness would be obtained by applying more aggressive stemmers, especially those accounting for certain derivational suffixes, compared to those involving a light stemmer or ignoring this type of word normalization procedure. Comparisons between no stemming and stemming indexing schemes show that performance differences are almost always statistically significant.
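The two language-independent indexing methods named in the abstract above, n-gram and trunc-n, can be illustrated with a minimal Python sketch. This is not the authors' implementation: the function names, the default n = 4, whitespace tokenization, and the choice to keep short words whole are all assumptions made for illustration. Python slices strings by Unicode code point, so for Devanagari or Bengali text a dependent vowel sign counts as one "letter" here, which may differ from how the article counts letters.

```python
from typing import List


def trunc_n(word: str, n: int = 4) -> str:
    """trunc-n: keep only the first n characters of the word."""
    return word[:n]


def char_ngrams(word: str, n: int = 4) -> List[str]:
    """Overlapping character n-grams of a word.

    Assumption: words no longer than n are kept as a single term.
    """
    if len(word) <= n:
        return [word]
    return [word[i:i + n] for i in range(len(word) - n + 1)]


def index_terms(text: str, n: int = 4, scheme: str = "trunc") -> List[str]:
    """Turn whitespace-separated text into indexing terms.

    scheme="trunc" applies trunc-n; scheme="ngram" applies n-gram indexing.
    """
    tokens = text.split()
    if scheme == "trunc":
        return [trunc_n(token, n) for token in tokens]
    if scheme == "ngram":
        return [gram for token in tokens for gram in char_ngrams(token, n)]
    raise ValueError(f"unknown scheme: {scheme!r}")


if __name__ == "__main__":
    print(index_terms("marathi hindi", n=4, scheme="trunc"))
    print(index_terms("marathi hindi", n=4, scheme="ngram"))
```

Both schemes need no language-specific suffix list, which is what makes them a useful baseline against the light and aggressive stemmers; the trade-off is that n-gram indexing produces several terms per word and so enlarges the index.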