Named Entity Disambiguation in a Question Answering System

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Pdf Liste Totale Des Chansons

40ú Comórtas Amhrán Eoraifíse 1995 Finale - Le samedi 13 mai 1995 à Dublin - Présenté par : Mary Kennedy Sama (Seule) 1 - Pologne par Justyna Steczkowska 15 points / 18e Auteur : Wojciech Waglewski / Compositeurs : Mateusz Pospiezalski, Wojciech Waglewski Dreamin' (Révant) 2 - Irlande par Eddie Friel 44 points / 14e Auteurs/Compositeurs : Richard Abott, Barry Woods Verliebt in dich (Amoureux de toi) 3 - Allemagne par Stone Und Stone 1 point / 23e Auteur/Compositeur : Cheyenne Stone Dvadeset i prvi vijek (Vingt-et-unième siècle) 4 - Bosnie-Herzégovine par Tavorin Popovic 14 points / 19e Auteurs/Compositeurs : Zlatan Fazlić, Sinan Alimanović Nocturne 5 - Norvège par Secret Garden 148 points / 1er Auteur : Petter Skavlan / Compositeur : Rolf Løvland Колыбельная для вулкана - Kolybelnaya dlya vulkana - (Berceuse pour un volcan) 6 - Russie par Philipp Kirkorov 17 points / 17e Auteur : Igor Bershadsky / Compositeur : Ilya Reznyk Núna (Maintenant) 7 - Islande par Bo Halldarsson 31 points / 15e Auteur : Jón Örn Marinósson / Compositeurs : Ed Welch, Björgvin Halldarsson Die welt dreht sich verkehrt (Le monde tourne sens dessus dessous) 8 - Autriche par Stella Jones 67 points / 13e Auteur/Compositeur : Micha Krausz Vuelve conmigo (Reviens vers moi) 9 - Espagne par Anabel Conde 119 points / 2e Auteur/Compositeur : José Maria Purón Sev ! (Aime !) 10 - Turquie par Arzu Ece 21 points / 16e Auteur : Zenep Talu Kursuncu / Compositeur : Melih Kibar Nostalgija (Nostalgie) 11 - Croatie par Magazin & Lidija Horvat 91 points / 6e Auteur : Vjekoslava Huljić -

Queen of Country

TThehe mmonthlyonthly mmagazineagazine ddedicatededicated ttoo LLineine ddancingancing IIssue:ssue: 116464 • ££33 BBourdagesourdages RRebaeba NNancyancy MMorganorgan QQUEENUEEN OOFF CCOUNTRYOUNTRY 01 9 771366 650031 14 DANCES INCLUDING : SENORITA – SLOW RAIN – TANGO TONIGHT – I LIED! cover.indd 1 27/11/09, 10:41:21 am Line Dance Holidays from 20102010 £69.00 JANUARY 10th Birthday Party Southport Sensation £109 Bournemouth Bonanza £119 3 days/2 nights Prince of Wales Hotel, Lord Street 10th Birthday Party Glasgow Gallop £99 3 days/2 nights Carrington House Hotel Artistes – Paul Bailey (Friday) Gemmani (Saturday) 3 days/2 nights Thistle Hotel Artistes – Tim McKay (Friday) Broadcaster (Saturday) Dance Instruction and Disco: Chrissie Hodgson Artistes – Diamond Jack (Friday) Magill and Paul Bailey (Saturday) Dance Instruction and Disco:Rob Fowler Starts: Friday 26 February Finishes: Sunday 28 February 2010 Dance Instruction and Disco: Yvonne Anderson Starts: Friday 5 February Finishes: Sunday 7 February 2010 Starts: Friday 8 January Finishes: Sunday 10 January 2010 Highland Palace Hop £147 No more singles available 3 days/2 nights Atholl Palaces Hotel, Pitlochry Scarborough Valentine Scamper £95 Artistes –Carson City (Saturday) 3 days/2 nights Clifton Hotel, North Cliff Dance Instruction and Disco: Willie Brown now Morecambe New Year Party Cheapy £69 Artistes – Lass Vegas (Saturday) Starts: Friday 26 February Finishes: Sunday 28 February 2010 3 days/2 nights Headway Hotel £6 off Dance Instruction and Disco:Kim Alcock Dance Instruction and -

Öppet Arkiv 2014

10 ÅR MED CAROLA 1000 ÅR PÅ 2 TIMMAR 20:00 1985-1986 21 - MED ESS I LEKEN 24 MINUTER 2003 24-TIMMARS, EN ANNORLUNDA REGATTA 300 ÅR AV ÖGONBLICK 900 SEK A FÖR AGNETHA AB DUN OCH BOLSTER ABBA IN CONCERT ABSOLUT SAMTAL ADVENTSGUDSTJÄNST ADVENTSGUDSTJÄNST FRÅN JUKKASJÄRVI KYRKA AGENDA SPECIAL, 2005 AGNES AGNETHA FÄLTSKOG - THE HEAT IS ON AKTUELLT FRÅN MOSEBACKE MONARKI AKTUELLT SPECIAL - MANDELA I STOCKHOLM ALBERTS UNDERLIGA RESA ALFRED NOBEL - MR DYNAMITE ALICE BABS OCH DUKE ELLINGTON I PRAISE GOD AND DANCE, EN JAZZMÄSSA AV DUKE ELLINGTON ALICE BABS, SVEND ASMUSSEN OCH ULRIK NEUMANN UNDERHÅLLER ALICE VISOR ALLA TIDERS MELODIFESTIVAL ALLAN REDO ALLIS MED IS ALLSÅNG PÅ SKANSEN 1979 ALLSÅNG PÅ SKANSEN 1991 ALLSÅNG PÅ SKANSEN 1992 ALLSÅNG PÅ SKANSEN 1996 ALLSÅNG PÅ SKANSEN 1998 ALLSÅNG PÅ SKANSEN 1999 ALLSÅNG PÅ SKANSEN 2000 ALLSÅNG PÅ SKANSEN 2001 ALLSÅNG PÅ SKANSEN 2002 ALLSÅNG PÅ SKANSEN 2003 ALLSÅNG PÅ SKANSEN 2004 ALLSÅNG PÅ SKANSEN 2005 ALLT OCH LITE TILL ALPINT : WORLD CUP - ÅRE SLALOM 1977 ALPINT: WORLD CUP - ÅRE 1977 ALPINT: VÄRLDSCUPEN I UTFÖRSÅKNING 1979 ALPINT: VÄRLDSCUPEN I UTFÖRSÅKNING 1981 AMAZING GRACE ANDERLUND & ZETTERSSON U P A ANDERSSONS ÄLSKARINNA ANDRA 'PIPEL' ANNA PÅ NYA ÄVENTYR ANNA PÅ NYA ÄVENTYR 1999 ANTIKRUNDAN 1989 ANTIKRUNDAN 1990 ANTON NILSSON APELSINMANNEN ARBETSPLATS: SHERATON ARGA GUBBEN HADE FEL - VILKET SKULLE BEVISAS ARMÉNS TATTOO -88 ARNE DOMNÉRUS, RUNE GUSTAFSSON, BENGT HALLBERG, EGIL JOHANSEN, GEORG RIEDEL OCH CLAES ROSENDAHL MÖTE ARON OCH NORA ARRAN ARRAN 1995 ARTISTSHOW 1984 ASPIRANTERNA ATT RIVA EN MUR -

SVT:S Öppet Arkiv 2017 Sida 1 Av

SVT:s Öppet arkiv 2017 Program-ID Programtitel Episodnummer Avsnittsnamn 1 127 984 "utan-tvivel-ar-man-inte-klok"---tage-danielsson 1 "utan tvivel är man inte klok" - tage danielsson 1 357 554 1000-ar-pa-2-timmar 1 avsnitt 1 1 357 554 1000-ar-pa-2-timmar 2 avsnitt 2 1 356 320 10-ar-med-carola 1 10-ar-med-carola 1 357 538 300-ar-av-ogonblick 1 1700-talet 1 357 538 300-ar-av-ogonblick 2 1800-talet 1 357 538 300-ar-av-ogonblick 3 1900-talet 1 374 221 40-talister 1 kommer-hem-och-ar-snall 1 358 394 88-oresrevyn 1 88-oresrevyn 1 355 522 abba-dabba-dooo 4 abba-dabba-dooo 1 169 205 ab-dun-och-bolster 1 ab-dun-och-bolster 1 367 022 absolut-samtal 1 43-1991 1 367 022 absolut-samtal 3 273-1991 1 367 022 absolut-samtal 4 34-1991 1 367 022 absolut-samtal 5 104-1991 1 367 022 absolut-samtal 6 174-1991 1 367 022 absolut-samtal 7 85-1991 1 361 446 adventsgudstjanst-fran-jukkasjarvi-kyrka 1 adventsgudstjanst-fran-jukkasjarvi-kyrka 1 365 430 a-for-agnetha 1 a-for-agnetha 1 375 270 afrikaner-i-sverige 1 karlek-fotboll-och-trummor 1 375 300 agaton-sax 1 den-ljudlosa-sprangamnesligan 1 375 300 agaton-sax 2 kolossen-pa-rhodos 1 375 300 agaton-sax 3 det-gamla-pipskagget 1 374 622 ainbusk-singers---bondbrudar-i-narbild 1 ainbusk-singers---bondbrudar-i-narbild 1 355 635 aktuellt 0 samling-för-eftertanke-ceremoni-för-tsunamins-offer 1 355 635 aktuellt 1 forsta-nyhetssandningen-om-mordet-pa-olof-palme 1 355 635 aktuellt 2 rapport-13-86-10.00 1 355 635 aktuellt 4 fantombilden 1 355 635 aktuellt 5 smith-och-wesson-kaliber-357 1 355 635 aktuellt 6 abc-anna-lindh-knivskadad-pa-nk -

FOU2008 2 Idrottens Anläggningar

Idrottens anläggningar – ägande, driftsförhållanden och dess effekter FoU-rapport 2008:2 FoU-rapporter 2004:1 Ätstörningar – en kunskapsöversikt (Christian Carlsson) 2004:2 Kostnader för idrott – en studie om kostnader för barns idrottande 2003 2004:3 Varför lämnar ungdomar idrotten (Mats Franzén, Tomas Peterson) 2004:4 IT-användning inom idrotten (Erik Lundmark, Alf Westelius) 2004:5 Svenskarnas idrottsvanor – en studie av svenska folkets tävlings- och motionsvanor 2003 2004:6 Idrotten i den ideella sektorn – en kunskapsöversikt (Johan R Norberg) 2004:7 Den goda barnidrotten – föräldrar om barns idrottande (Staffan Karp) 2004:8 Föräldraengagemang i barns idrottsföreningar (Göran Patriksson, Stefan Wagnsson) 2005:1 Doping- och antidopingforskning 2005:2 Kvinnor och män inom idrotten 2004 2005:3 Idrottens föreningar - en studie om idrottsföreningarnas situation 2005:4 Toppningsstudien - en kvalitativ analys av barn och ledares uppfattningar av hur lag konstitueras inom barnidrott (Eva-Carin Lindgren, Hansi Hinic) 2005:5 Idrottens sociala betydelse - en statistisk undersökning hösten 2004 2005:6 Ungdomars tävlings- och motionsvanor - en statistisk undersökning våren 2005 2005:7 Inkilning inom idrottsrörelsen - en kvalitativ studie 2006:1 Lärande och erfarenheters värde (Per Gerrevall, Samanthi Carlsson och Ylva Nilsson) 2006:2 Regler och tävlingssystem (Bo Carlsson, Kristin Fransson) 2006:3 Fysisk aktivitet på Recept (FaR) (Annika Mellquist) 2006:4 Nya perspektiv på riksidrottsgymnasierna(Maja Uebel) 2006:5 Kvinnor och män inom idrotten -

Studier I Svensk Språkhistoria 14

Studier i svensk språkhistoria 14 HARRY LÖNNROTH | BODIL HAAGENSEN | MARIA KVIST | KIM SANDVAD WEST (red.) aaa VAASAN YLIOPISTON TUTKIMUKSIA 305 II © Vasa universitet Pärmbild: Petra B. Fritz, https://flic.kr/p/fHDgeg ISBN 978-952-476-799-6 (tryckt) 978-952-476-800-9 (online) URN:ISBN:978-952-476-800-9 ISSN 2489-2556 (Vaasan yliopiston tutkimuksia 305, tryckt) 2489-2564 (Vaasan yliopiston tutkimuksia 305, online) Suomen yliopistopaino – Juvenes Print 2018 III FÖRORD Den fjortonde konferensen i serien Svenska språkets historia hölls i Vasa, Finlands soligaste stad i hjärtat av Österbotten. Som värd för konferensen stod enheten för nordiska språk vid Vasa universitet. Som tema för konferensen hade vi valt ”Flerspråkighet och språkhistoria”, ett stort och aktuellt tema. En bärande tanke för oss arrangörer var att flerspråkighet inte är något nytt fenomen, och för att fördjupa vår förståelse av detta mång- facetterade fenomen behöver vi mer språkhistorisk forskning och ökad språk- historisk medvetenhet. Och många nappade på vår inbjudan! I konferensen deltog cirka 60 personer från 13 universitet och fyra forskningsorganisationer. De tre inbjudna plenarföreläsarna var Lars-Erik Edlund, professor i nordiska språk vid Umeå universitet, Anna Helga Hannesdóttir, professor i nordiska språk vid Göteborgs universitet, och Nils Erik Villstrand, professor i nordisk historia vid Åbo Akademi och docent i de nordiska ländernas historia vid Vasa universitet. Utöver plenarföredragen hölls det 36 sektionsföredrag och 4 poster- presentationer på konferensen. I denna konferensvolym publiceras två plenar- föredrag och 15 sektionsföredrag. Artiklarna har gått igenom referentgranskning. Till slut vill vi tacka FD Sanna Heittola som har tagit hand om den tekniska redigeringen. -

Öppet Arkiv 2015

Programnamn Öppet Arkiv 2015 10 ÅR MED CAROLA 1000 ÅR PÅ 2 TIMMAR 20:00 1985-1986 21 - MED ESS I LEKEN 24 MINUTER 2003 24-TIMMARS, EN ANNORLUNDA REGATTA 300 ÅR AV ÖGONBLICK 401 7 TILL 9 900 SEK A FÖR AGNETHA AB DUN OCH BOLSTER ABSOLUT SAMTAL AD LIB 1991 AD LIB 1993 ADVENTSGUDSTJÄNST ADVENTSGUDSTJÄNST FRÅN JUKKASJÄRVI KYRKA AGENDA SPECIAL, 2005 AGNES AGNETHA FÄLTSKOG - THE HEAT IS ON AKTUELLT FRÅN MOSEBACKE MONARKI AKTUELLT SPECIAL - HOLMÉR I FÖRHÖR AKTUELLT SPECIAL - MANDELA I STOCKHOLM ALBERT OCH HERBERT 1974 ALBERT OCH HERBERT 1976 ALBERT OCH HERBERT 1977 ALBERTS UNDERLIGA RESA ALBERTS UNDERLIGA RESA 1997 ALFRED NOBEL - MR DYNAMITE ALICE BABS OCH DUKE ELLINGTON I PRAISE GOD AND DANCE, EN JAZZMÄSSA AV DUKE ELLINGTON ALICE BABS, SVEND ASMUSSEN OCH ULRIK NEUMANN UNDERHÅLLER ALLA TIDERS MELODIFESTIVAL ALLAN REDO ALLIS MED IS ALLSÅNG PÅ SKANSEN 1979 ALLSÅNG PÅ SKANSEN 1990 ALLSÅNG PÅ SKANSEN 1991 ALLSÅNG PÅ SKANSEN 1992 ALLSÅNG PÅ SKANSEN 1992 ALLSÅNG PÅ SKANSEN 1996 ALLSÅNG PÅ SKANSEN 1998 ALLSÅNG PÅ SKANSEN 1999 ALLSÅNG PÅ SKANSEN 2000 ALLSÅNG PÅ SKANSEN 2001 ALLSÅNG PÅ SKANSEN 2002 ALLSÅNG PÅ SKANSEN 2003 ALLSÅNG PÅ SKANSEN 2004 ALLSÅNG PÅ SKANSEN 2005 ALLSÅNGSKONSERT MED KJELL LÖNNÅ 1990 ALLT OCH LITE TILL ALPINT : WORLD CUP - ÅRE SLALOM 1977 ALPINT: WORLD CUP - ÅRE 1977 ALPINT: VÄRLDSCUPEN I UTFÖRSÅKNING 1979 ALPINT: VÄRLDSCUPEN I UTFÖRSÅKNING 1981 ALTERNATIVFESTIVALEN AMALA, KAMALA AMAZING GRACE ANDERLUND & ZETTERSSON U P A ANDERSSONS ÄLSKARINNA ANDRA ORD 1976 ANDRA 'PIPEL' ANGNE & SVULLO ANNA OCH GÄNGET ANNA PÅ NYA ÄVENTYR ANNA PÅ -

Inbjudan Till Melodifestivalen 2004

Inbjudan till Melodifestivalen 2004 Melodifestivalen 2003 blev en stor framgång. Över 60 000 människor följde tävlingarna på plats och drygt två miljoner tittare såg varje deltävling och 3,8 miljoner följde den direktsända finalen i Sveriges Television. Nu är det dags för alla kompositörer och textförfattare att lämna in sina bidrag till Melodifestivalen 2004. Sveriges Television inbjuder alla intresserade att skicka in sina bidrag till: Melodifestivalen 2004 Sveriges Television 109 14 Stockholm Tävlingsbidragen måste vara poststämplade senast den 30 september 2003. Alla upphovsmän måste skriva på och bifoga den tävlingsblankett som finns i regelverket för tävlingen. Regelverket finns tillgängligt på svt.se och kan dessutom erhållas om man skickar in ett brev med bifogat frankerat (dubbelt porto) och färdigadresserat returkuvert till adressen ovan. Boende i Stockholm kan även hämta reglerna i receptionen på Sveriges Television Oxenstiernsgatan 26 eller 34. Öppet vardagar mellan klockan 09.00-17.00. Slutligen vill vi passa på att uppmärksamma tva viktiga förändringar i Melodifestivalen 2004: - Tio platser av tävlingens 32 ges med förtur till bidrag med svensk text. Läs mer i punkt 5. - SVT förbehåller sig rätten att specialinbjuda fyra av de 32 bidragen. Läs mer i punkt 11. För ytterligare information kontakta: Thomas Hall, projektledare SVT Fiktion, 08-784 44 41 eller via mail [email protected] TÄVLINGSREGLER MELODIFESTIVAL 2004 1. Melodifestivalen 2004 är den nationella tävling som utser den melodi som ska representera Sverige i 2004 års Eurovision Song Contest, ESC. ESC arrangeras av European Broadcasting Union (EBU). 2. Alla svenska medborgare och/eller i Sverige folkbokförda (senast per den 30:e september 2003) personer har rätt att deltaga i tävlingen som kompositörer och textförfattare. -

SVT:S Öppet Arkiv 2015 Sida 1 Av

SVT:s Öppet arkiv 2015 ID-nr Programnamn Episodnamn Utbud 1370140-1 401 Prod Year 1970 Drama 1356320-1 10 ÅR MED CAROLA Prod Year 1993 Artistshow 1357554-1 1000 ÅR PÅ 2 TIMMAR Episod 1 Humaniora 1357554-2 1000 ÅR PÅ 2 TIMMAR Episod 2 Humaniora 1361824-24 20:00 1985-1986 Episod 24: Special - Stina möter Silvia Politik, samhälle 1358953-1 21 - MED ESS I LEKEN Prod Year 1977 Blandat nöje 1358955-1 21 - MED ESS I LEKEN Prod Year 1979 Blandat nöje 1367093-42 24 MINUTER 2003 Episod 42: Timbuktu Blandat nöje 1367093-78 24 MINUTER 2003 Episod 78: Leif GW Persson Blandat nöje 1367093-0 24 minuter 2003 1359448-1 24-TIMMARS, EN ANNORLUNDA REGATTA Prod Year 1975 Idrottstävling 1357538-1 300 ÅR AV ÖGONBLICK Episod 1 Politik, samhälle 1357538-2 300 ÅR AV ÖGONBLICK Episod 2 Politik, samhälle 1357538-3 300 ÅR AV ÖGONBLICK Episod 3 Politik, samhälle 1358773-1 7 TILL 9 Episod 1 Blandat nöje 1358773-5 7 TILL 9 Episod 5 Blandat nöje 1358773-6 7 TILL 9 Episod 6 Blandat nöje 1366976-1 900 SEK Episod 1 Blandat nöje 1366976-2 900 SEK Episod 2 Blandat nöje 1366976-3 900 SEK Episod 3 Blandat nöje 1366976-4 900 SEK Episod 4 Blandat nöje 1366976-5 900 SEK Episod 5 Blandat nöje 1366976-6 900 SEK Episod 6 Blandat nöje 1366976-7 900 SEK Episod 7 Blandat nöje 1366976-8 900 SEK Episod 8 Blandat nöje 1366976-9 900 SEK Episod 9 Blandat nöje 1365430-1 A FÖR AGNETHA Prod Year 1985 Musik 1169205-1 AB DUN OCH BOLSTER Prod Year 1988 Drama 1367022-1 ABSOLUT SAMTAL Episod 1 Kulturgestaltning 1367022-2 ABSOLUT SAMTAL Episod 2 Kulturgestaltning 1367022-3 ABSOLUT SAMTAL Episod 3 -

DE NOMINERADE TILL PRIS VID Fov GALAN 2014

DE NOMINERADE TILL PRIS VID FoV GALAN 2014: Årets Arrangör Vi vill uppmärksamma alla dem som ofta är osynliga men med ideellt och engagerat arbete bidrar till att konserter och festivaler blir av. Priset går till en arrangör som under 2013 nått framgång eller stärkt sin position med ett aktivt arrangörskap och ett för genren och dess publik bra koncept och program. FARHANG Farhang (persiska för kultur) är en politiskt och religiöst obunden stockholmsförening som verkar för att bygga broar mellan människor med olika kulturell bakgrund. Föreningen bildades 1995 och organiserar festivaler, konserter, seminarier m m involverande konstnärer, författare, musiker och de som är allmänt intresserade av frågor kring kultur, identitet och kreativitet i en värld präglad av diaspora, globalisering och kulturell mångfald. Man fungerar också som rådgivare åt musiker med utländsk bakgrund i Sverige. Nominerades till Årets Arrangör också 2011. www.farhang.nu PLANETA Västsvensk festival för musik och dans i hela världen som firade tioårsjubileum i år. Över hundra akter på mer än 30 scener i fem städer bjöd på allt från flamencopop och barnföreställningar till svensk folkdans och brasilianskt sväng. Började som ett nätverk för arrangörer och har idag mer än 30 olika medlemsorganisationer. www.planeta.se SOMMARSCEN MALMÖ Under nio veckor varje sommar presenterar Sommarscen Malmö närmare 200 föreställningar i både stort och litet format, på ett fyrtiotal platser runtom i Malmö. Programmet spänner från det unika och oväntade mötet till det breda och folkliga med allt från barnteater, dans, litterära vandringar och jazz till film, pop, nycirkus, allsång och musik från hela världen. Gemensamt för alla arrangemang är att de äger rum utomhus, att de är med fri entré och att de ska bjuda på en mångfald av scenkonst för barn, unga och vuxna. -

Dragqueens Och Musiken Ett Arbete Om Dragqueens, Queerteori Och Divornas Röst

C-uppsats Sara Andersson Prpic Handledare: John Howland Musikvetenskap Lunds universitet MUVK01 2016 Dragqueens och musiken Ett arbete om dragqueens, queerteori och divornas röst. 1 Abstract Syftet med denna uppsats är att utveckla en kunskap om sambandet mellan dragqueens, musiken i deras föreställningar och deras relation till de sångdivor som de gärna gestaltar på scenen. Denna uppsats ska försöka lyfta fram dragkulturen och dess shower ännu mer då dragpersoner har börjat få en mer centralare plats i vårt samhälle och för att det är en kultur som fascinerar och är väldigt mycket uppskattad. Uppsatsen igenom går rösten hos operadivor och deras popularitet, både inom gaykulturen men även hos dragqueens, samt en undersökning gjord av Craig Jennex angående Lady Gaga och hennes röst samt varför fans dras till henne. Kommer även titta på draggruppen After Dark och schlagern i Sverige. Det tas även upp en del om queerteori, då den är viktig för HBTQ kulturen och dess förståelse. Craig Jennex skriver att pragmatiska och assimilationiska ideal genomsyrar vår samtida gaykultur, och att enligt Michael Joseph Gross, till exempel, hävdar att homosexuella mäns deltagande i musikkulturen fortfarande längtar efter det normativa, och att, enligt Gross, är den nuvarande generationen av homosexuella män likgiltiga för vördnaden av kvinnliga ikoner, en viktig aspekt av de manligt homosexuellas identitets förflutna. Men Jennex går emot detta påstående och menar på att de kvinnliga ikonerna är fortfarande lika viktiga nu som då och det är det som den här uppsatsen skulle vilja och ska försöka visa. Och divornas, de kvinnliga ikonernas, betydelse för inte bara homosexuella dragpersoner utan även för heterosexuella inom så kallad cross-dressing. -

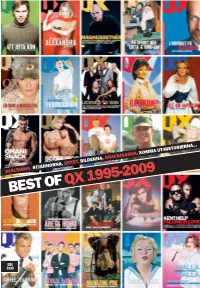

Best of Qx 1995-2009

HJÄLTARNA. STJÄRNORNA. SEXET. BILDERNA. SNACKISARNA. KOMMA UT-HISTORIERNA... BEST OF QX 1995-2009 DEC 2009 VEMTYCKER DUSKAFÅPRIS PÅGAYGALAN 2010? VEM ÄR ÅRETS HOMO? VEM ÄR ÅRETS HETERO? VILKET ÄR ÅRTES GAYSTÄLLE? VILKA ÄR ÅRETS DUO? VILKEN ÄR ÅRETS LÅT? VILKEN ÄR ÅRETS FILM? Nominera dina favoriter på www.qx.se fram till och med den 10 december. Gaygalan hålls på Cirkus i Stockholm måndagen den 1 februari 2010. Dörrarna öppnas 18.00. Show 21.00. Biljetterna släpps torsdag den 17 december på ticnet.se. Galakonferencier: Petra Mede QX <:?6D:D<E ?J£C A6CD:D<E ?J£C 42D:?@ E@FC 3CF?49 =2 G682D =25:6D 42D:?@ ?:89E =6E|D>656=92GDE6>2 86E G2=6?E:?6 >2CC:65 3CF?49 3C2DD6C:6E E6>2<G¢==2C E;6; <G¢== A@<6C EFC?6C:?82C >2C<FD 2F;2=2J 9¢>E25:EEAC@8C2>A£<2D:?@E6==6C36D´<HHH42D:?@4@D>@A@=D6 DE@4<9@=>8´E63@C8>2=>´DF?5DG2== HHH42D:?@4@D>@A@=D6 INNEHÅLL INGÅNG Det här är det sista numret av QX. daktionen satt och skrev in. Vi tog sedan Idag finns det säkert 100 mer eller Och det bästa. hand om alla svarsbrev som folk skrev mindre kända svenskar som är öppet H, Nej, så allvarligt är det inte, men an- och postade dem vidare till rätt avsändare. B eller T. ledningen till att vi gjort ett ”Best of ” i Ibland blev det ofrivilligt roligt när man Fantastiskt. det här numret är att QX i och med nästa läste annonserna i tidningen, i en annons Utan öppna homosar skulle QX ald- FOTO: LOUISE BILLGERT nummer byter format och design.