Decoupling Intensity Radiated by the Emitter in Distance Estimation from Camera to IR Emitter

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Fundamentals of Rendering - Radiometry / Photometry

“Physically Based Rendering” by Pharr & Humphreys
• Chapter 5: Color and Radiometry
• Chapter 6: Camera Models (we won’t cover this in class)

Realistic Rendering
• Determination of intensity
• Mechanisms: emittance (+), absorption (-), scattering (+) (single vs. multiple)
• Cameras or retinas record quantity of light

Pertinent Questions
• Nature of light and how it is measured and characterized / recorded
• (Local) reflection of light
• (Global) spatial distribution of light

Electromagnetic spectrum

Spectral Power Distributions, e.g., fluorescent lamps

Tristimulus Theory of Color
• Metamers: SPDs that appear the same visually
• Color matching functions of the standard human observer, International Commission on Illumination (CIE), 1931
• “These color matching functions are the amounts of three standard monochromatic primaries needed to match the monochromatic test primary at the wavelength shown on the horizontal scale.” (from Wikipedia, “CIE 1931 Color Space”)

Optics: Three views
• Geometrical or ray: traditional graphics; reflection, refraction; optical system design
• Physical or wave: dispersion, interference; interaction of objects of size comparable to wavelength
• Quantum or photon optics: interaction of light with atoms and molecules

What Is Light?
• Particle model (Newton): light travels in straight lines; light can travel through a vacuum (waves need a medium to travel in); quantum amount of energy
• Wave model (Huygens): electromagnetic radiation, a sinusoidal wave formed by coupled electric (E) and magnetic (H) fields

Nature of Light
• Wave-particle duality: light has some wave properties (frequency, phase, orientation) and some quantum particle properties (quantum packets, i.e. photons)
• Dimensions of light: amplitude or intensity, frequency, phase, polarization
• Coherence refers to frequencies of waves
• Laser light waves have uniform frequency
• Natural light is incoherent: waves are of multiple frequencies and random in phase

About SI Units

Units

SI units: International System of Units (French: “SI” means “Système International”). The seven SI base units are:

Mass: kg. Defined by the prototype “standard kilogram” located in Paris. The standard kilogram is made of Pt metal.

Length: m. Originally defined by the prototype “standard meter” located in Paris. Then defined as 1,650,763.73 wavelengths of the orange-red radiation of krypton-86 under certain specified conditions. (Official definition: the distance traveled by light in vacuum during a time interval of 1 / 299 792 458 of a second.)

Time: s. The second is defined as the duration of a certain number of oscillations of radiation coming from Cs atoms. (Official definition: the second is the duration of 9,192,631,770 periods of the radiation of the hyperfine-level transition of the ground state of the Cs-133 atom.)

Current: A. Defined as the current that causes a certain force between two parallel wires. (Official definition: the ampere is that constant current which, if maintained in two straight parallel conductors of infinite length, of negligible circular cross-section, and placed 1 meter apart in vacuum, would produce between these conductors a force equal to 2 × 10⁻⁷ newton per meter of length.)

Temperature: K. One percent of the temperature difference between the boiling point and freezing point of water. (Official definition: the kelvin, unit of thermodynamic temperature, is the fraction 1 / 273.16 of the thermodynamic temperature of the triple point of water.)

Amount of substance: mol. The amount of a substance that contains Avogadro’s number N_Avo = 6.0221 × 10²³ of atoms or molecules.

Application Note (A2) LED Measurements

Revision: F, JUNE 1997. OPTRONIC LABORATORIES, INC., 4632 36TH STREET, ORLANDO, FLORIDA 32811 USA. Tel: (407) 422-3171 Fax: (407) 648-5412 E-mail: www.olinet.com

LED MEASUREMENTS

Table of Contents
1.0 INTRODUCTION
2.0 PHOTOMETRIC MEASUREMENTS
2.1 Total Luminous Flux (lumens per 2π steradians)
2.2 Luminous Intensity (millicandelas)
3.0 RADIOMETRIC MEASUREMENTS
3.1 Total Radiant Flux or Power (watts per 2π steradians)
3.2 Radiant Intensity (watts per steradian)
4.0 SPECTRORADIOMETRIC MEASUREMENTS
4.1 Spectral Radiant Flux or Power (watts per nm)
4.2 Spectral Radiant Intensity (watts per steradian nm)
4.3 Peak Wavelength and 50% Power Points

1.0 INTRODUCTION
The instrumentation selected to measure the output of an LED

Radiometry of Light Emitting Diodes Table of Contents

TECHNICAL GUIDE: THE RADIOMETRY OF LIGHT EMITTING DIODES

TABLE OF CONTENTS
1.0 Introduction
2.0 What is an LED?
2.1 Device Physics and Package Design
2.2 Electrical Properties
2.2.1 Operation at Constant Current
2.2.2 Modulated or Multiplexed Operation
2.2.3 Single-Shot Operation
3.0 Optical Characteristics of LEDs
3.1 Spectral Properties of Light Emitting Diodes
3.2 Comparison of Photometers and Spectroradiometers
3.3 Color and Dominant Wavelength
3.4 Influence of Temperature on Radiation
4.0 Radiometric and Photopic Measurements
4.1 Luminous and Radiant Intensity
4.2 CIE 127
4.3 Spatial Distribution Characteristics
4.4 Luminous Flux and Radiant Flux
5.0 Terminology
5.1 Radiometric Quantities
5.2 Photometric Quantities
6.0 References

1.0 INTRODUCTION
Almost everyone is familiar with light-emitting diodes (LEDs) from their use as indicator lights and numeric displays on consumer electronic devices. The low output and lack of color options of LEDs limited the technology to these uses for some time. New LED materials and improved production processes have produced bright LEDs in colors throughout the visible spectrum, including white light. With efficacies greater than incandescent (and approaching that of fluorescent lamps) along with their durability, small size, and light weight, LEDs are finding their way into many new applications within the lighting community. These new applications have placed increasingly stringent demands on the optical characterization of LEDs, which serves as the fundamental baseline for product quality and product design.

Radiometry and Photometry

Wei-Chih Wang, Department of Power Mechanical Engineering, National TsingHua University

Materials Covered
• Radiometry: radiant flux, radiant intensity, irradiance, radiance
• Photometry: luminous flux, luminous intensity, illuminance, luminance
• Conversion between radiometric and photometric units

Radiometry
Radiometry is the detection and measurement of light waves in the optical portion of the electromagnetic spectrum, which is further divided into ultraviolet, visible, and infrared light. (Figure: example of a typical radiometer.)

Photometry
All light measurement is considered radiometry, with photometry being a special subset of radiometry weighted for a typical human eye response. (Figure: example of a typical photometer.)

Human Eyes
The figure shows a schematic illustration of the human eye (Encyclopedia Britannica, 1994). The inside of the eyeball is clad by the retina, which is the light-sensitive part of the eye. The illustration also shows the fovea, a cone-rich central region of the retina which affords the high acuteness of central vision. The figure also shows the cell structure of the retina, including the light-sensitive rod cells and cone cells. Also shown are the ganglion cells and nerve fibers that transmit the visual information to the brain. Rod cells are more abundant and more light sensitive than cone cells. Rods are sensitive over the entire visible spectrum.

There are three types of cone cells, namely cone cells sensitive in the red, green, and blue spectral ranges. The approximate spectral sensitivity functions of the rods and the three types of cones are shown in the figure above.

Eye sensitivity function
The conversion between radiometric and photometric units is provided by the luminous efficiency function or eye sensitivity function, V(λ).
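The conversion that the excerpt attributes to V(λ) can be sketched for the simplest case, a monochromatic source. This is a minimal illustration and not taken from the slides: the function name `luminous_flux` is hypothetical, the value of V(λ) is supplied by the caller (for example from a CIE table), and 683 lm/W is the standard peak luminous efficacy, reached where V(λ) = 1 near 555 nm.

```python
# Peak luminous efficacy of radiation in lm/W, reached where V(lambda) = 1 (about 555 nm).
K_M = 683.0


def luminous_flux(radiant_flux_w, v_lambda):
    """Luminous flux in lumens of a monochromatic source (hypothetical helper).

    radiant_flux_w: radiant flux in watts.
    v_lambda: value of the eye sensitivity function V(lambda) at the source
              wavelength, between 0 and 1, supplied by the caller.
    """
    if not 0.0 <= v_lambda <= 1.0:
        raise ValueError("V(lambda) must lie between 0 and 1")
    return K_M * v_lambda * radiant_flux_w


green = luminous_flux(1.0, 1.0)     # 1 W at the peak of V(lambda)
infrared = luminous_flux(1.0, 0.0)  # 1 W in the infrared, taking V(lambda) as 0
print(green, infrared)
```

Under these assumptions an infrared emitter carries radiant flux but essentially no luminous flux, which is why IR sources are characterized with radiometric rather than photometric quantities.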

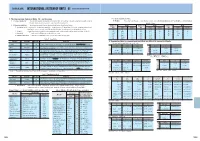

International System of Units (SI)

[TECHNICAL DATA] INTERNATIONAL SYSTEM OF UNITS (SI). Excerpts from JIS Z 8203 (1985)

1. The International System of Units (SI) and its usage

1-1. Scope of application
This standard specifies the International System of Units (SI) and how to use units under the SI system, as well as the units which are or may be used in conjunction with SI system units.

1-2. Terms and definitions
The terminology used in this standard and the definitions thereof are as follows.
(1) International System of Units (SI): A consistent system of units adopted and recommended by the International Committee on Weights and Measures. It contains base units and supplementary units, units derived from them, and their integer exponents to the 10th power. SI is the abbreviation of Système International d'Unités (International System of Units).
(2) SI units: A general term used to describe base units, supplementary units, and derived units under the International System of Units (SI).
(3) Base units: The units shown in Table 1 are considered the base units.
(4) Supplementary units: The units shown in Table 2 below are considered the supplementary units.

1-3. Integer exponents of SI units
(1) Prefixes: The multiples, prefix names, and prefix symbols that compose the integer exponents of 10 for SI units are shown in Table 4.

Table 4. Prefixes (rows visible in this excerpt)

| Multiple of unit | Prefix name | Prefix symbol |
|---|---|---|
| 10¹⁸ | Exa | E |
| 10¹⁵ | Peta | P |
| 10¹² | Tera | T |
| 10² | Hecto | h |
| 10 | Deca | da |
| 10⁻¹ | Deci | d |
| 10⁻⁹ | Nano | n |
| 10⁻¹² | Pico | p |
| 10⁻¹⁵ | Femto | f |


[V2C2-09P70] 2.9 Radiant Intensity

RADIOMETRY AND PHOTOMETRY, VOL. II

2.9 Radiant Intensity

The concept of radiant intensity, the last of the set of basic radiometric concepts to be introduced in this chapter, is designed to give a measure of the solid angle density of radiant flux. Thus radiant intensity is a dual concept to irradiance in the sense that the latter gives a measure of the area density of radiant flux while the former gives a measure of the solid angle density of radiant flux. At one time the concept of radiant intensity enjoyed the place now occupied by radiant flux. In the days of the candle and gas lamp there were very few artificial extended light sources. The controlled artificial point source, such as a candle's flame, was the sole basis for radiometric standards and its radiant output was conveniently measured in terms of intensity. However, with the passing of years the numbers of extended artificial light sources increased and the classical mode of use of radiant intensity has become correspondingly less frequent than that of radiant flux. Eventually radiance for the most part usurped the once centrally disposed radiant intensity concept. Despite this displacement of radiant intensity's status, it appears that there will always exist times when its use arises naturally. For example when emitting 'point sources' are considered, the use of radiant intensity seems automatically indicated. This useful aspect of radiant intensity will be discussed during its systematic development, to which we now turn.

Operational Definition of Empirical Radiant Intensity

In presenting the concept of radiant intensity we shall be guided by operational considerations so as to give the concept a secure footing relative to the other radiometric concepts already defined.
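The duality described above (radiant intensity as flux per unit solid angle, irradiance as flux per unit area) can be made concrete for an idealized point source. The sketch below is an illustration under stated assumptions, not the book's operational definition: the source is assumed isotropic, the standard inverse-square relation E = I cos θ / d² is used, and both function names are hypothetical.

```python
import math


def radiant_intensity_isotropic(radiant_flux_w):
    """Radiant intensity in W/sr of an isotropic point source.

    The flux is spread evenly over the full sphere of 4*pi steradians.
    """
    return radiant_flux_w / (4.0 * math.pi)


def irradiance_from_point_source(intensity_w_per_sr, distance_m, incidence_angle_rad=0.0):
    """Irradiance in W/m^2 on a small surface at distance_m from a point source.

    incidence_angle_rad is the angle between the surface normal and the
    direction to the source (0 means the surface faces the source).
    """
    if distance_m <= 0.0:
        raise ValueError("distance must be positive")
    return intensity_w_per_sr * math.cos(incidence_angle_rad) / distance_m ** 2


intensity = radiant_intensity_isotropic(4.0 * math.pi)      # 1 W/sr
irradiance = irradiance_from_point_source(intensity, 2.0)   # 0.25 W/m^2 at 2 m
print(intensity, irradiance)
```

The intensity is a property of the source alone, while the irradiance falls with the square of the distance to the receiving surface.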

Basic Principles of Imaging and Photometry Lecture #2

Thanks to Shree Nayar, Ravi Ramamoorthi, Pat Hanrahan

Computer Vision: Building Machines that See
(Figure labels: Lighting, Camera, Scene, Physical Models, Computer, Scene Interpretation.)
We need to understand the geometric and radiometric relations between the scene and its image.

A Brief History of Images
• 1558: Camera Obscura, Gemma Frisius
• 1568: Lens Based Camera Obscura
• 1837: Still Life, Louis Jacques Mandé Daguerre
• 1970: Silicon Image Detector
• 1995: Digital Cameras

Geometric Optics and Image Formation
Topics to be covered: 1) Pinhole and Perspective Projection 2) Image Formation using Lenses 3) Lens related issues

Pinhole and the Perspective Projection
Is an image being formed on the screen? Yes, but not a “clear” one.
(Figure labels: scene, screen, pinhole, image plane, optical axis z, effective focal length f'.)
A scene point r = (x, y, z) is imaged at r' = (x', y', f'):

$$\frac{\mathbf{r}'}{f'} = \frac{\mathbf{r}}{z} \quad\Rightarrow\quad \frac{x'}{f'} = \frac{x}{z}, \qquad \frac{y'}{f'} = \frac{y}{z}$$

Magnification
In a planar scene at depth z, the segment from A(x, y, z) to B(x + δx, y + δy, z), of length d, is imaged as the segment from A'(x', y', f') to B'(x' + δx', y' + δy', f'), of length d'.
From perspective projection:

$$\frac{x'}{f'} = \frac{x}{z}, \qquad \frac{y'}{f'} = \frac{y}{z}, \qquad \frac{x' + \delta x'}{f'} = \frac{x + \delta x}{z}, \qquad \frac{y' + \delta y'}{f'} = \frac{y + \delta y}{z}$$

Magnification:

$$m = \frac{d'}{d} = \frac{\sqrt{(\delta x')^2 + (\delta y')^2}}{\sqrt{(\delta x)^2 + (\delta y)^2}} = \frac{f'}{z}, \qquad \frac{\mathrm{Area}_{\mathrm{image}}}{\mathrm{Area}_{\mathrm{scene}}} = m^2$$

Orthographic Projection
Magnification: x' = m x, y' = m y. When m = 1, we have orthographic projection.
This is possible only when z >> Δz. In other words, the range of scene depths is assumed to be much smaller than the average scene depth.
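The perspective projection relations x'/f' = x/z and y'/f' = y/z, and the magnification m = f'/z that follows from them, can be checked numerically. This is a small sketch rather than code from the lecture: `project` and `magnification` are hypothetical names, and the scene points and focal length are made-up values chosen so the arithmetic is easy to follow.

```python
import math


def project(point, f):
    """Pinhole perspective projection of a scene point (x, y, z).

    Returns the image-plane coordinates (x', y') for effective focal length f.
    """
    x, y, z = point
    if z <= 0.0:
        raise ValueError("the point must lie in front of the pinhole (z > 0)")
    return (f * x / z, f * y / z)


def magnification(f, z):
    """Magnification m = f'/z for a planar scene at depth z."""
    return f / z


# Two points of a planar scene at depth z = 10, imaged with f' = 5 (illustrative values).
a = (2.0, 4.0, 10.0)
b = (3.0, 4.0, 10.0)
a_img = project(a, 5.0)
b_img = project(b, 5.0)

d = math.dist(a[:2], b[:2])     # segment length in the scene plane
d_img = math.dist(a_img, b_img) # segment length in the image plane
m = magnification(5.0, 10.0)
print(a_img, b_img, d_img / d, m)
```

With these values the measured ratio d'/d equals f'/z, and an area in the scene plane shrinks by m² in the image.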

Radiometric Quantities and Units Used in Photobiology And

Photochemistry and Photobiology, 2007, 83: 425–432

Radiometric Quantities and Units Used in Photobiology and Photochemistry: Recommendations of the Commission Internationale de l’Eclairage (International Commission on Illumination)

David H. Sliney*, International Commission on Illumination (CIE), Vienna, Austria and Laser/Optical Radiation Program, US Army Center for Health Promotion and Preventive Medicine, Gunpowder, MD

Received 14 November 2006; accepted 16 November 2006; published online 20 November 2006. DOI: 10.1562/2006-11-14-RA-1081

ABSTRACT
To characterize photobiological and photochemical phenomena, standardized terms and units are required. Without a uniform set of descriptors, much of the scientific value of publications can be lost. Attempting to achieve an international consensus for a common language has always been difficult, but now with truly international scientific publications, it is all the more important. As photobiology and photochemistry both represent the fusion of several scientific disciplines, it is not surprising that the physical terms used to describe exposures and dosimetric concepts can vary from author to author.

The International Commission on Illumination, the CIE, has provided international guidance in the science, terminology and measurement of light and optical radiation since 1913. The International Lighting Vocabulary (parts of which are also issued as an ISO or IEC standard) has been an international reference for photochemists and photobiologists for many decades; and the next revision is due to be published later this year or next (2). Despite its stature, many research scientists are unfamiliar with some of the subtle distinctions between several widely used radiometric terms. Tables 1–4 provide several standardized terms that

Radiant Flux Density

Schedule
• 12 lectures
• 3 long labs (8 hours each)
• 2-3 homework
• 1 group project
• 4 Q&A (Geography Room 206)
• Dr. Dave Reed on CRBasics with CR5000 datalogger

08/31/17 (Lecture #1)
09/05/17 (Lecture #2)
09/07/17 (Lecture #3)
09/12/17 (4:00 – 18:30 h) (Lecture #4-5)
09/14/17 (Lecture #6): radiation
09/21/17 (Lecture #7): radiation lab
09/26/17 (4:00 – 18:30 h) (Lecture #8-9)
09/28/17 (Lecture #10)
10/03/17 (12:00 – 8:00 h) (Lab #1)
10/10/17 (12:00 – 8:00 h) (Lab #2)
10/20/17 (8:00 – 17:00 h) (Lab #3)
10/24/17 (Q&A #1)
10/26/17 (Lecture #11)
11/07/17 (Q&A #2)
11/14/17 (Q&A #3)
11/21/17 (Q&A #4)
12/07/17 (Lecture #12)
Term paper due on Dec. 14, 2017

Radiation (Ch 10 & 11; Campbell & Norman 1998)
• Rt, Rl, Rn, Rin, Rout, PAR, albedo, turbidity
• Greenhouse effect, Lambert’s Cosine Law
• Sensors: Pyranometer, K&Z CNR4, Q7, PAR, etc.
• Demonstration of solar positions
• Programming with LoggerNet

Review of Radiation
• Solar constant: 1.34-1.36 kW m⁻², with about 2% fluctuations
• Sun spots: in pairs, with a cycle of about 11 years, lasting from a few days to several months
• Little ice age (1645-1715)
• Greenhouse effects (atmosphere as a selective filter): low absorptivity between 8 and 13 µm; clouds; elevated CO2; destruction of O3 (O3 also absorbs UV and X-ray)
• Global radiation budget
• Related terms: cloudiness, turbidity (visibility), and albedo

Lambert’s Cosine Law

Other Considerations
• Zenith distance (angle)
• Other terms: solar noon (the sun is over the meridian of observation), equinox (the sun passes directly over the equator), solstice, solar declination
• Path of the Earth around the sun: 147 × 10⁶ km and 152 × 10⁶ km

Radiation Balance Model (figure; legible labels include Rt, Rs, Rtr)

Solar declination (D): an angular distance north (+) or south (-) of the celestial equator, or of the plane of the earth’s equator.

Radiometry and Photometry

Wei-Chih Wang, Department of Power Mechanical Engineering, National TsingHua University

Week 11
• Course Website: http://courses.washington.edu/me557/sensors
• Reading Materials: Week 11 reading materials are from http://courses.washington.edu/me557/readings/
• H3 #3 assigned, due next week (week 12)
• Sign up to do Lab 2 in the next two weeks
• Final Project proposal: due Monday, Week 13
• Set up a time to meet for the final project in Week 12 (11/27)
• Oral Presentation on 12/23; final report due 1/7, 5 PM

Last Week
• Light sources
- Chemistry 101: orbital model -> energy gap model (light in quantum), spectrum in energy gap
- Broad band light sources (orbital energy model, quantum theory, incandescent filament, gas discharge, LED)
- LED (diode, diode equation, energy gap equation, threshold voltage, device efficiency, junction capacitance, emission pattern, RGB LED, OLED)
- Narrow band light source (laser, coherence, lasing principle, population inversion in different lasing media: ion, molecular and atom gas lasers, liquid, solid and semiconductor lasers; laser resonating cavity; monochromatic light; basic laser constitutive parameters)

This Week
• Photodetectors
- Photoemissive cells
- Semiconductor photoelectric transducer (diode equation, energy gap equation, reverse bias voltage, quantum efficiency, responsivity, junction capacitance, detector angular response, temperature effect, different detector operating modes, noise in detectors, photoconductive, photovoltaic, photodiode, PIN, APD, PDA, PSD, CCD, CMOS detectors)
- Thermal detectors (IR and THz detectors)

Materials Covered
• Radiometry: radiant flux, radiant intensity, irradiance, radiance
• Photometry: luminous flux, luminous intensity, illuminance, luminance
• Conversion between radiometric and photometric units

Radiometry
Radiometry is the detection and measurement of light waves in the optical portion of the electromagnetic spectrum, which is further divided into ultraviolet, visible, and infrared light.

L14: Radiometry and Reflectance

Appearance and reflectance. 16-385 Computer Vision, http://www.cs.cmu.edu/~16385/, Spring 2018, Lecture 13

Course announcements
• Apologies for cancelling last Wednesday’s lecture.
• Homework 3 has been posted and is due on March 9th.
- Any questions about the homework?
- How many of you have looked at/started/finished homework 3?
• Yannis’ office hours this week: Wednesday 3-5 pm.
• Results from the poll for adjusting Yannis’ regular office hours: they stay the same.
• Many talks this week:
1. Judy Hoffman, "Adaptive Adversarial Learning for a Diverse Visual World," Monday March 5th, 10:00 AM, NSH 3305.
2. Manolis Savva, "Human-centric Understanding of 3D Environments," Wednesday March 7, 2:00 PM, NSH 3305.
3. David Fouhey, "Recovering a Functional and Three Dimensional Understanding of Images," Thursday March 8, 4:00 PM, NSH 3305.
• How many of you went to Pulkit Agrawal’s talk last week?

Overview of today’s lecture
• Appearance phenomena.
• Measuring light and radiometry.
• Reflectance and BRDF.

Slide credits
Most of these slides were adapted from:
• Srinivasa Narasimhan (16-385, Spring 2014).
• Todd Zickler (Harvard University).
• Steven Gortler (Harvard University).

Course overview
1. Image processing (Lectures 1 – 7). See also 18-793: Image and Video Processing.
2. Geometry-based vision (Lectures 7 – 12). See also 16-822: Geometry-based Methods in Vision.
3. Physics-based vision. We are starting this part now.
4. Learning-based vision.
5. Dealing with motion.

Appearance
“Physics-based” computer vision (a.k.a. “inverse optics”) Our