Simulations of ATLAS Silicon Strip Detector Modules in ATHENA Framework

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Annual Compendium of Commercial Space Transportation: 2012

Federal Aviation Administration The Annual Compendium of Commercial Space Transportation: 2012 February 2013 About FAA About the FAA Office of Commercial Space Transportation The Federal Aviation Administration’s Office of Commercial Space Transportation (FAA AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA AST’s mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA AST’s website: http://www.faa.gov/go/ast Cover art: Phil Smith, The Tauri Group (2013) NOTICE Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration. • i • Federal Aviation Administration’s Office of Commercial Space Transportation Dear Colleague, 2012 was a very active year for the entire commercial space industry. In addition to all of the dramatic space transportation events, including the first-ever commercial mission flown to and from the International Space Station, the year was also a very busy one from the government’s perspective. It is clear that the level and pace of activity is beginning to increase significantly. -

The Evolving Launch Vehicle Market Supply and the Effect on Future NASA Missions

Presented at the 2007 ISPA/SCEA Joint Annual International Conference and Workshop - www.iceaaonline.com The Evolving Launch Vehicle Market Supply and the Effect on Future NASA Missions Presented at the 2007 ISPA/SCEA Joint International Conference & Workshop June 12-15, New Orleans, LA Bob Bitten, Debra Emmons, Claude Freaner Abstract • The upcoming retirement of the Delta II family of launch vehicles leaves a performance gap between small expendable launch vehicles, such as the Pegasus and Taurus, and large vehicles, such as the Delta IV and Atlas V families • This performance gap may lead to a variety of progressions including – large satellites that utilize the full capability of the larger launch vehicles, – medium size satellites that would require dual manifesting on the larger vehicles or – smaller satellite missions that would require a large number of smaller launch vehicles • This paper offers some comparative costs of co-manifesting single-instrument missions on a Delta IV/Atlas V, versus placing several instruments on a larger bus and using a Delta IV/Atlas V, as well as considering smaller, single instrument missions launched on a Minotaur or Taurus • This paper presents the results of a parametric study investigating the cost-effectiveness of different alternatives and their effect on future NASA missions that fall into the Small Explorer (SMEX), Medium Explorer (MIDEX), Earth System Science Pathfinder (ESSP), Discovery, -

1.1 A Survey of the Lightning Launch Commit Criteria

1.1 A Survey Of The Lightning Launch Commit Criteria William P. Roeder and Todd M. McNamara 45th Weather Squadron, Patrick AFB, FL 1. 45 WS MISSION The 45th Weather Squadron (45 WS) provides comprehensive weather services to America’s space program at Cape Canaveral Air Force Station (CCAFS) and NASA’s Kennedy Space Center (KSC) (Harms et al., 1999). These services include weather support for pre-launch, launch, post-launch, routine weather forecast, 24/7 watches/warnings, flight briefings, and special missions. A major part of the 45 WS support to launch is evaluating and forecasting the Lightning Launch Commit Criteria (LLCC) (Roeder et al., 1999) and User Launch Commit Criteria (Boyd et al., 1995). The LLCC protect against natural and rocket triggered lightning strikes to the in-flight rocket. The User Launch Commit Criteria include low level winds so the rocket doesn’t topple or get blown back into the launch pad during launch, and ceiling and visibility so the ascending rocket can be tracked by camera to ensure it is on the correct trajectory. Launch customers include the DoD, NASA, and commercial customers for approximately 30 launches per year. The launch vehicles supported recently include Titan, Atlas, Delta, Athena, Pegasus, and Space Shuttle space launch vehicles, and Trident ballistic missiles. Figure 1. Average cloud-to-ground lightning flash density (flashes/km²•year) for the CONUS (1989–1993), corrected for detection efficiency. Data are from the National Lightning Detection Network. Figure provided by Dr. Richard Orville, Texas A&M University. 2. INTRODUCTION TO THE LIGHTNING LAUNCH COMMIT CRITERIA -

NASA Facts

National Aeronautics and Space Administration NASA’s Launch Services Program The Launch Services Program (LSP) was established at Kennedy Space Center for NASA’s acquisition and program management of expendable launch vehicle (ELV) missions. A skillful NASA/contractor team is in place to meet the mission of the Launch Services Program, which exists to provide leadership, expertise and cost-effective services in the commercial arena to satisfy agencywide space transportation requirements and maximize the opportunity for mission success. The principal objectives of the LSP are to provide safe, reliable, cost-effective [...] manufacturing, launch operations and countdown management, and providing added quality and mission assurance in lieu of the requirement for the launch service provider to obtain a commercial launch license. Primary launch sites are Cape Canaveral Air Force Station (CCAFS) in Florida, and Vandenberg Air Force Base (VAFB) in California. Other launch locations are NASA’s Wallops Flight Facility in Virginia, the Kwajalein Atoll in the South Pacific’s Republic of the Marshall Islands, and Kodiak Island in Alaska. [...] rockets for launching Earth-orbit and interplanetary missions. In September 2010, NASA’s Launch Services (NLS) contract was extended by the agency for 10 years, through 2020, with the award of four indefinite delivery/indefinite quantity contracts. The expendable launch vehicles that NASA has available for its science, Earth-orbit and interplanetary missions are United Launch Alliance’s (ULA) Atlas V and Delta II, Space X’s Falcon 1 and 9, Orbital Sciences Corp.’s Pegasus and Taurus XL, and Lockheed Martin Space Systems Co.’s Athena I and II. -

Athena Launch Vehicle Family

APPROVED FOR PUBLIC RELEASE Copyright © 2014 by Lockheed Martin Corporation, All Rights Reserved. Athena Launch Vehicle Family The Athena Program consists of a modular family of launch vehicles based on solid rocket motor (SRM) main propulsion. The Athena family is commercially developed by LM, ATK (Athena teammate) & subcontractors. Athena has flown 7 times with 5 successes, from 3 different launch locations: • Kodiak Launch Complex, AK: Highly Inclined orbits (1 launch) – (active) • Vandenberg Air Force Base, CA: Highly Inclined orbits (4 launches) – (retired) • Cape Canaveral Air Force Station, FL: Low Inclined orbits (2 launches) – (active) Athena launches are performed from commercially leased facilities under fixed price contracts – the Athena Program does not own or maintain the launch complexes – however, they are rarely used by other launch vehicles. The Athena Program consists of 4 basic configurations, of which 2 have flown: • Athena Ic: Very Small Payloads ~300kg to SSO (4 flights) • Athena IIc: Small Payloads ~1000kg to SSO (3 flights) • Athena IIS: Medium Lite Payloads ~1400kg - 3000kg to SSO (Development Required) • Athena III: Medium Payloads ~4300kg to SSO (Development Required) Expanded Athena Launch Vehicle Family [Figure: Athena Ic, Athena IIc, Athena IIS-2/4/6 and Athena III configurations with labeled fairings and motor stages. Legend: PLF – Payload Fairing; OAM – Orbit Adjust Module; DM – Dual Mode (Bi-Propellant); UIS – Upper] -

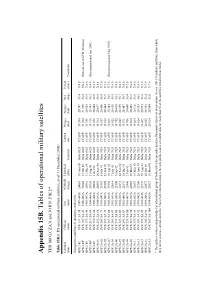

Appendix 15B. Tables of Operational Military Satellites TED MOLCZAN and JOHN PIKE*

Appendix 15B. Tables of operational military satellites TED MOLCZAN and JOHN PIKE*

Table 15B.1. US operational military satellites, as of 31 December 2002a

Navigation satellites in medium earth orbit

| Common name | Official name | Intl name | NORAD design. | Launched (date) | Launcher | Launch site | Perigee (km) | Apogee (km) | Incl. (deg.) | Period (min.) | Comments |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| GPS 2-02 | SVN 13/USA 38 | 1989-044A | 20061 | 10 June 89 | Delta 6925 | CCAFS | 19 594 | 20 787 | 53.4 | 718.0 | |
| GPS 2-04b | SVN 19/USA 47 | 1989-085A | 20302 | 21 Oct 89 | Delta 6925 | CCAFS | 21 204 | 21 238 | 53.4 | 760.2 | (Retired, not in SEM Almanac) |
| GPS 2-05 | SVN 17/USA 49 | 1989-097A | 20361 | 11 Dec 89 | Delta 6925 | CCAFS | 19 795 | 20 583 | 55.9 | 718.0 | |
| GPS 2-08b | SVN 21/USA 63 | 1990-068A | 20724 | 2 Aug 90 | Delta 6925 | CCAFS | 19 716 | 20 705 | 56.2 | 718.8 | (Decommissioned Jan. 2003) |
| GPS 2-09 | SVN 15/USA 64 | 1990-088A | 20830 | 1 Oct 90 | Delta 6925 | CCAFS | 19 978 | 20 404 | 55.8 | 718.0 | |
| GPS 2A-01 | SVN 23/USA 66 | 1990-103A | 20959 | 26 Nov 90 | Delta 6925 | CCAFS | 19 764 | 20 637 | 56.4 | 718.4 | |
| GPS 2A-02 | SVN 24/USA 71 | 1991-047A | 21552 | 4 July 91 | Delta 7925 | CCAFS | 19 927 | 20 450 | 56.0 | 717.9 | |
| GPS 2A-03 | SVN 25/USA 79 | 1992-009A | 21890 | 23 Feb 92 | Delta 7925 | CCAFS | 19 913 | 20 464 | 53.9 | 717.9 | |
| GPS 2A-04b | SVN 28/USA 80 | 1992-019A | 21930 | 10 Apr 92 | Delta 7925 | CCAFS | 20 088 | 20 284 | 54.5 | 717.8 | (Decommissioned May 1997) |
| GPS 2A-05 | SVN 26/USA 83 | 1992-039A | 22014 | 7 July 92 | Delta 7925 | CCAFS | 19 822 | 20 558 | 55.9 | 718.0 | |
| GPS 2A-06 | SVN 27/USA 84 | 1992-058A | 22108 | 9 Sep 92 | Delta 7925 | CCAFS | 19 742 | 20 638 | 54.1 | 718.0 | |
| GPS 2A-07 | SVN 32/USA 85 | 1992-079A | 22231 | 22 Nov 92 | Delta 7925 | CCAFS | 20 042 | 20 339 | 55.7 | 718.0 | |

GPS 2A-08 SVN 29/USA 87 1992-089A -

NASA’s Launch Services Program (Information Summary)

National Aeronautics and Space Administration NASA’s Launch Services Program The Launch Services Program was established at Kennedy Space Center for NASA’s acquisition and program management of Expendable Launch Vehicle (ELV) missions. A skillful NASA/contractor team is in place to meet the mission of the Launch Services Program, which exists to provide leadership, expertise and cost-effective services in the commercial arena to satisfy Agencywide space transportation requirements and maximize the opportunity for mission success. The principal objectives are to provide safe, reliable, cost-effective and on-schedule processing, mission analysis, and spacecraft integration and launch services for NASA and NASA-sponsored payloads needing a mission on ELVs. The Launch Services Program is responsible for NASA oversight of launch operations and countdown management, providing added quality and mission assurance in lieu of the requirement for the launch service provider to obtain a commercial launch license. Primary launch sites are Cape Canaveral Air Force Station (CCAFS) in Florida, and Vandenberg Air Force Base (VAFB) in California. Other launch locations are NASA’s Wallops Island flight facility in Virginia, the North Pacific’s Kwajalein Atoll in the Republic of the Marshall Islands, and Kodiak Island in Alaska. [...] Apollo spacecraft to the Moon. The powerful Titan/Centaur combination carried large and complex robotic scientific explorers, such as the Vikings and Voyagers, to examine other planets in the 1970s. Among other missions, the Atlas/Agena vehicle sent several spacecraft to photograph and then impact the Moon. Atlas/Centaur vehicles launched many of the larger spacecraft into Earth orbit and beyond. -

Space Launch Vehicles: Government Activities, Commercial Competition, and Satellite Exports

Order Code IB93062 CRS Issue Brief for Congress Received through the CRS Web Space Launch Vehicles: Government Activities, Commercial Competition, and Satellite Exports Updated October 6, 2003 Marcia S. Smith Resources, Science, and Industry Division Congressional Research Service, The Library of Congress CONTENTS SUMMARY MOST RECENT DEVELOPMENTS BACKGROUND AND ANALYSIS U.S. Launch Vehicle Policy From “Shuttle-Only” to “Mixed Fleet” Clinton Administration Policy George W. Bush Administration Activity U.S. Launch Vehicle Programs and Issues NASA’s Space Shuttle Program New U.S. Expendable and Reusable Launch Vehicles NASA’s Efforts to Develop New Reusable Launch Vehicles (RLVs) Private Sector RLV Development Efforts DOD’s Evolved Expendable Launch Vehicle (EELV) Program U.S. Commercial Launch Services Industry Congressional Interest Foreign Launch Competition (Including Satellite Export Issues) Europe China Russia Ukraine India Japan Satellite Exports: Agency Jurisdiction and Other Continuing Issues LEGISLATION For more information on the space shuttle program, see: CRS Report RS21408, NASA’s Space Shuttle Columbia: Quick Facts and Issues for Congress CRS Report RS21606, NASA’s Space Shuttle Columbia: Synopsis of the Report of the Columbia Accident Investigation Board CRS Report RS21411, NASA’s Space Shuttle Program: Space Shuttle Appropriations FY1992-FY2002 CRS Report RS21419, NASA’s Space Shuttle Program: Excerpts from Recent Reports and Hearings Regarding Shuttle Safety For information on the Orbital Space Plane, which is not a launch vehicle, but a spacecraft, see CRS Issue Brief IB93017, Space Stations. IB93062 10-06-03 SUMMARY Launching satellites into orbit, once the exclusive domain of the U.S. [...] plans to refocus its latest RLV development -

Athena Mission Planner’S Guide (MPG)

Athena Mission Planner’s Guide (MPG) Release Date – August 2011 / January 2012 Published by: Athena Mission Planner’s Guide FOREWORD January 2012 This Athena Mission Planner’s Guide presents information regarding the Athena Launch Vehicle and related launch services. A range of vehicle configurations and performance capabilities are offered to allow optimum match to customer requirements at low cost. Information is presented in sufficient detail for preliminary assessment of the Athena family for your missions. This guide includes essential technical and programmatic information for preliminary mission planning and preliminary spacecraft design. Athena performance capability, environments and interfaces are described in sufficient detail to assess a first-order compatibility. A description of the spacecraft processing and launch facilities at our launch sites is also included. This guide also describes the operations and hardware flow for the spacecraft and launch vehicle leading to encapsulation, spacecraft mating and launch. This guide is subject to change and will be revised periodically. For inquiries, contact: Gregory J. Kehrl Athena Mission Manager Telephone: (303) 977-0310 Fax: (303) 971-3748 E-mail: [email protected] Postal Address: Lockheed Martin Space Systems Company P.O. Box 179 Denver, CO 80201-0179 MS H3005 Street Address: Lockheed Martin Space Systems Company 12257 S. Wadsworth Blvd. Littleton, CO 80125 MS H3005 TABLE OF CONTENTS 1.0 INTRODUCTION -

2013 Commercial Space Transportation Forecasts

Federal Aviation Administration 2013 Commercial Space Transportation Forecasts May 2013 FAA Commercial Space Transportation (AST) and the Commercial Space Transportation Advisory Committee (COMSTAC) • i • 2013 Commercial Space Transportation Forecasts About the FAA Office of Commercial Space Transportation The Federal Aviation Administration’s Office of Commercial Space Transportation (FAA AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA AST’s mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA AST’s website: http://www.faa.gov/go/ast Cover: The Orbital Sciences Corporation’s Antares rocket is seen as it launches from Pad-0A of the Mid-Atlantic Regional Spaceport at the NASA Wallops Flight Facility in Virginia, Sunday, April 21, 2013. Image Credit: NASA/Bill Ingalls NOTICE Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration. • i • Federal Aviation Administration’s Office of Commercial Space Transportation Table of Contents EXECUTIVE SUMMARY . 1 COMSTAC 2013 COMMERCIAL GEOSYNCHRONOUS ORBIT LAUNCH DEMAND FORECAST . -

Current Space Launch Vehicles Used by The

FACT SHEET Current Space Launch Vehicles Used by the United States Nathan Daniels April 2014 At present, the United States relies on Russian rocket engines to launch satellites into space. The U.S. also relies on Russia to transport its astronauts to the International Space Station (as the U.S. Space Shuttle program ended in 2011). Not only does this reliance have direct implications for our launch capabilities, but it also means that we are funding Russian space and missile technology while we could be investing in U.S. based jobs and the defense industrial base. These facts raise national security concerns, as the United States’ relationship with Russia is ever-changing - the situation in Ukraine is a prime example. This paper serves as a brief, but factual overview of active launch vehicles used by the United States. First, are the three vehicles with the payload capability to launch satellites into orbit: the Atlas V, Delta IV, and the Falcon 9. The other active launch vehicles will follow in alphabetical order. www.AmericanSecurityProject.org 1100 New York Avenue, NW Suite 710W Washington, DC AMERICAN SECURITY PROJECT Atlas Launch Vehicle History and the Current Atlas V • Since its debut in 1957 as America’s first operational intercontinental ballistic missile (ICBM) designed by the Convair Division of General Dynamics, the Atlas family of launch vehicles has logged nearly 600 flights. • The missiles saw brief ICBM service, and the last squadron was taken off of operational alert in 1965. • From 1962 to 1963, Atlas boosters launched the first four American astronauts to orbit the Earth. -


IX. Tables of Operational Military Satellites [PDF]

656 NON-PROLIFERATION, ARMS CONTROL, DISARMAMENT, 2001

Table 11.1. US operational military satellites, as of 31 December 2001a

Navigation satellites in medium earth orbit

| Common name | Official name | Intl name | NORAD design. | Launched (date) | Launcher | Launch site | Perigee (km) | Apogee (km) | Incl. (deg.) | Period (min.) | Comments |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| GPS 2-02 | SVN 13/USA 38 | 1989-044A | 20061 | 10 June 89 | Delta 6925 | CCAFS | 19 576 | 20 752 | 53.4 | 717.9 | |
| GPS 2-04b | SVN 19/USA 47 | 1989-085A | 20302 | 21 Oct 89 | Delta 6925 | CCAFS | 21 195 | 21 232 | 55.0 | 760.1 | (Retired, not in SEM Almanac) |
| GPS 2-05 | SVN 17/USA 49 | 1989-097A | 20361 | 11 Dec 89 | Delta 6925 | CCAFS | 19 821 | 20 543 | 56.2 | 717.9 | |
| GPS 2-08 | SVN 21/USA 63 | 1990-068A | 20724 | 2 Aug 90 | Delta 6925 | CCAFS | 19 699 | 20 666 | 55.1 | 717.9 | |
| GPS 2-09 | SVN 15/USA 64 | 1990-088A | 20830 | 1 Oct 90 | Delta 6925 | CCAFS | 19 975 | 20 389 | 56.0 | 717.9 | |
| GPS 2A-01 | SVN 23/USA 66 | 1990-103A | 20959 | 26 Nov 90 | Delta 6925 | CCAFS | 19 749 | 20 607 | 55.0 | 717.9 | USA 066, moved to slot E5 |
| GPS 2A-02 | SVN 24/USA 71 | 1991-047A | 21552 | 4 July 91 | Delta 7925 | CCAFS | 19 925 | 20 439 | 56.3 | 717.9 | |
| GPS 2A-03b | SVN 28/USA 79 | 1992-009A | 21890 | 23 Feb 92 | Delta 7925 | CCAFS | 19 925 | 20 435 | 53.8 | 717.9 | (Retired, not in SEM Almanac) |
| GPS 2A-04b | SVN 25/USA 80 | 1992-019A | 21930 | 10 Apr 92 | Delta 7925 | CCAFS | 20 096 | 20 260 | 55.0 | 717.9 | (Decommissioned May 1997) |
| GPS 2A-05 | SVN 26/USA 83 | 1992-039A | 22014 | 7 July 92 | Delta 7925 | CCAFS | 19 835 | 20 529 | 55.6 | 718.0 | |
| GPS 2A-06 | SVN 27/USA 84 | 1992-058A | 22108 | 9 Sep 92 | Delta 7925 | CCAFS | 19 760 | 20 604 | 54.0 | 717.9 | |
| GPS 2A-07 | SVN 32/USA 85 | 1992-079A | 22231 | 22 Nov 92 | Delta 7925 | CCAFS | 20 037 | 20 328 | 55.4 | 717.9 | |

GPS 2A-08 SVN 29/USA 87 1992-089A