IBM z14 (3906) Technical Guide

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

MVS Planning: Workload Management

z/OS Version 2 Release 3, MVS Planning: Workload Management, IBM SC34-2662-30. Note: Before using this information and the product it supports, read the information in “Notices” on page 235. This edition applies to Version 2 Release 3 of z/OS (5650-ZOS) and to all subsequent releases and modifications until otherwise indicated in new editions. Last updated: 2019-06-24. © Copyright International Business Machines Corporation 1994, 2019. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Figures; Tables; About this information; Who should use this information; Where to find more information; Other referenced documents; Information updates on the web; How to send your comments to IBM -

IBM z Systems Introduction May 2017

IBM z Systems Introduction, May 2017. IBM z13s and IBM z13 Frequently Asked Questions, Worldwide, ZSQ03076-USEN-15. Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container -

1994 Hearings on Software Patents

United States Patent and Trademark Office Public Hearing on Use of the Patent System to Protect Software-Related Inventions Transcript of Proceedings Wednesday, January 26, 1994 Thursday, January 27, 1994 9:00 a.m. to 5:00 p.m. Before Bruce A. Lehman Assistant Secretary of Commerce and Commissioner of Patents and Trademarks Location: San Jose Convention Center 408 Almaden Avenue San Jose, California UNITED STATES PATENT AND TRADEMARK OFFICE Public Hearing on Patent Protection for Software-Related Inventions San Jose, California -- January 26-27, 1994 Table of Participants Before: Bruce A. Lehman Assistant Secretary of Commerce and Commissioner of Patents and Trademarks United States Patent and Trademark Office The Panel: Ginger Lew General Counsel-Designate United States Department of Commerce Lawrence Goffney Assistant Commissioner for Patents-Designate United States Patent and Trademark Office Michael K. Kirk Assistant Commissioner for External Affairs United States Patent and Trademark Office Jeffrey P. Kushan Attorney-Advisor United States Patent and Trademark Office Recording Technicians: Karl Henderscheid Support Office Services 52 Second Street, Third Floor San Francisco, CA 94104 (415) 391-4578 Transcriber: Milton Hare Rogershare Transcribers 541 Maud Avenue San Leandro, CA 94577 (510) 357-8220 Witnesses January 26, 1994 January 27, 1994 Mr. Clark Mr. Fiddler VideoDiscovery Wind River Systems Mr. Poppa Mr. Warren StorageTek Autodesk, Inc. Mr. Ryan Ms. O'Hare Intellectual Property Owners, Inc. Mr. Glenn Mr. LeFaivre Intellectual Property Section of the State Bar of California Apple Computer Mr. -

Systems Management Performance Reference Information 7.1

IBM i Systems management: Performance reference information 7.1. Note: Before using this information and the product it supports, read the information in “Notices,” on page 267. This edition applies to IBM i 7.1 (product number 5770-SS1) and to all subsequent releases and modifications until otherwise indicated in new editions. This version does not run on all reduced instruction set computer (RISC) models nor does it run on CISC models. © Copyright IBM Corporation 1998, 2010. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Reference information for Performance; Collection Services data files; Collection Services data files containing time interval data; Collection Services data files: Field data for configuration database files; Collection Services database files: Field data for trace database files; Collection Services data files: System category and file relationships; Collection Services data files: Task type extender; Disk Watcher data files: QAPYDWSTAT; Disk Watcher data files: QAPYDWTDER; Disk Watcher data files: QAPYDWTRC; Data files: File abbreviations; CL commands for performance; Intelligent Agents; Intelligent Agent concepts; Developing agents; Set up your agent environment; Managing agents; Disk -

CPU MF Counters for Efficiency

CPU MF Counters for Efficiency. John Burg, IBM, WSC – [email protected]. 2019 IBM Systems Technical University, October 9, 2019 | Las Vegas. Notices and disclaimers: © 2019 International Business Machines Corporation. No part of this document may be reproduced or transmitted in any form without written permission from IBM. U.S. Government Users Restricted Rights – use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM. Information in these presentations (including information relating to products that have not yet been announced by IBM) has been reviewed for accuracy as of the date of initial publication and could include unintentional technical or typographical errors. IBM shall have no responsibility to update this information. This document is distributed “as is” without any warranty, either express or implied. In no event shall IBM be liable for any damage arising from the use of this information, including but not limited to, loss of data, business interruption, loss of profit or loss of opportunity. Performance data contained herein was generally obtained in controlled, isolated environments. Customer examples are presented as illustrations of how those customers have used IBM products and the results they may have achieved. Actual performance, cost, savings or other results in other operating environments may vary. References in this document to IBM products, programs, or services do not imply that IBM intends to make such products, programs or services available in all countries in which IBM operates or does business. Workshops, sessions and associated materials may have been prepared by independent session speakers, and do not necessarily reflect the views of IBM. All materials and discussions are provided -

Leveraging Integrated Cryptographic Service Facility

Leveraging Integrated Cryptographic Service Facility. Lydia Parziale. Redpaper, International Technical Support Organization, January 2018, REDP-5431-00. Note: Before using this information and the product it supports, read the information in “Notices” on page v. First Edition (January 2018). This edition applies to Version 2 Release 3 of IBM z/OS (product number 5650-ZOS). © Copyright International Business Machines Corporation 2018. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks; Chapter 1. Overview; 1.1 The need for cryptography; 1.2 Cryptographic architectures; 1.2.1 PKCS #11; 1.2.2 IBM Common Cryptographic Architecture; 1.3 System Authorization Facility; 1.4 What ICSF is; 1.4.1 ICSF services; 1.4.2 ICSF options; 1.4.3 SAF-protecting ICSF services and IBM CCA Keys; Chapter 2. IBM Z hardware cryptography implementation; 2.1 CP Assist for Cryptographic Functions; 2.2 The IBM Cryptographic Coprocessor; 2.3 The Trusted Key Entry workstation; 2.3.1 Clear key versus secure key versus protected key; 2.3.2 TKE and the benefits of using ICSF and protected keys; Chapter 3. Auditing; 3.1 ICSF: Enhanced logging for PCI audit requirements; 3.2 ICSF and SMF -

BladeCenter Interoperability Guide

BladeCenter Interoperability Guide. Quick reference for BladeCenter interoperability. Includes internal components and external connectivity. Covers software compatibility. Discusses storage interoperability. Ilya Krutov, David Watts. Note: Before using this information and the product it supports, read the information in “Notices” on page iii. Last update on 24 February 2015. This edition applies to: BladeCenter E, BladeCenter H, BladeCenter HT, BladeCenter S, BladeCenter HS12 type 8028, BladeCenter HS22, BladeCenter HS22V, BladeCenter HS23 (E5-2600), BladeCenter HS23 (E5-2600 v2), BladeCenter HS23E, BladeCenter HX5, BladeCenter PS700/701/702, BladeCenter PS703/704. © Copyright Lenovo 2015. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract. Contents: Notices; Trademarks; Preface; Authors; Comments welcome; Do you have the latest version?; Chapter 1. Chassis interoperability; 1.1 Server to chassis compatibility; 1.1.1 HS22 chassis support; 1.1.2 HS22V chassis support; 1.1.3 HS23 (E5-2600) chassis support; 1.1.4 HS23 (E5-2600 v2) chassis support; 1.1.5 HS23E chassis support; 1.1.6 HX5 chassis support; 1.1.7 PS700 chassis support; 1.2 I/O module to chassis interoperability; 1.2.1 SAS, InfiniBand, Pass-thru, and interconnect modules interoperability; 1.2.2 Ethernet I/O module interoperability; 1.2.3 Fibre Channel I/O module interoperability; 1.3 I/O module to adapter interoperability; 1.3.1 I/O module bay to adapter mappings; 1.3.2 Ethernet I/O modules and adapters -

Table of Contents

Table of Contents. The Story Begins. [Margin note, JAN 1975: MITS introduces the 8080-based Altair computer.] The story of MS-DOS begins in a hotel in Albuquerque, New Mexico. In 1975, Albuquerque was the home of Micro Instrumentation Telemetry Systems, better known as MITS. In January of that year, MITS had introduced a kit computer called the Altair. When it was first shipped, the Altair consisted of a metal box with a panel of switches for input and output, a power supply, and two boards. One board was the CPU. At its heart was the 8-bit 8080 microprocessor chip from Intel. The other board provided 256 bytes of memory. The Altair had no keyboard, no monitor, and no permanent storage. But it had a revolutionary price tag. It cost $397. For the first time, the term "personal computer" acquired a real-world meaning. The real world of the Altair was not, however, the world of business computing. It was primarily the world of the computer hobbyist. These first users of the microcomputer were not as interested in using spreadsheets and word processors as they were in programming. Accordingly, the first software for the Altair was a programming language. And the company that developed it was a two-man firm, in Albuquerque, called Microsoft. [Margin note, FEB 1975: Microsoft sells first BASIC to MITS for Altair computer.] The two men at Microsoft were Paul Allen and Bill Gates. Allen and Gates had met when they were both students at Lakeside High School in Seattle, where they began their computer-science education on the school's time-sharing terminal. By the time Gates had graduated, the two of them had -

(12) United States Patent (10) Patent No.: US 9,129,043 B2 Pandya (45) Date of Patent: Sep

US009129043B2 (12) United States Patent (10) Patent No.: US 9,129,043 B2 Pandya (45) Date of Patent: Sep. 8, 2015 (54) 100GBPS SECURITY AND SEARCH ARCHITECTURE USING PROGRAMMABLE INTELLIGENT SEARCH MEMORY (76) Inventor: Ashish A. Pandya, El Dorado Hills, CA (US) (*) Notice: Subject to any disclaimer, the term of this patent is extended or adjusted under 35 U.S.C. 154(b) by 0 days. (21) Appl. No.: 13/472,042 (22) Filed: May 15, 2012 (65) Prior Publication Data US 2013/0018835 A1 Jan. 17, 2013 Related U.S. Application Data (63) Continuation of application No. 13/172,276, filed on Jun. 29, 2011, now Pat. No. 8,200,599, which is a continuation of application No. (56) References Cited U.S. PATENT DOCUMENTS 5,187,800 A 2/1993 Sutherland; 5,640,525 A 6/1997 Yumoto et al.; 5,872,972 A 2/1999 Boland et al.; 5,968,176 A 10/1999 Nessett et al.; 6,018,779 A 1/2000 Blumenau; 6,018,799 A 1/2000 Wallace et al.; 6,021,490 A 2/2000 Vorbach et al.; 6,130,892 A 10/2000 Short et al.; 6,147,976 A 11/2000 Shand et al.; 6,205,537 B1 3/2001 Albonesi; 6,237,029 B1 5/2001 Master et al.; 6,304,973 B1 10/2001 Williams; 6,421,742 B1 7/2002 Tillier; 6,427,170 B1 7/2002 Sitaraman et al. (Continued) FOREIGN PATENT DOCUMENTS JP 2002-63060 2/2002; WO WO 98/54644 12/1998 (Continued) OTHER PUBLICATIONS International Search Report Issued in PCT/US2007/86785, mailed -

IBM Z Connectivity Handbook

IBM Z Connectivity Handbook. Octavian Lascu, John Troy, Anna Shugol, Frank Packheiser, Kazuhiro Nakajima, Paul Schouten, Hervey Kamga, Jannie Houlbjerg, Bo XU. IBM Redbooks, August 2020, SG24-5444-20. Note: Before using this information and the product it supports, read the information in “Notices” on page vii. Twenty-first Edition (August 2020). This edition applies to connectivity options available on the IBM z15 (M/T 8561), IBM z15 (M/T 8562), IBM z14 (M/T 3906), IBM z14 Model ZR1 (M/T 3907), IBM z13, and IBM z13s. © Copyright International Business Machines Corporation 2020. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents: Notices; Trademarks; Preface; Authors; Now you can become a published author, too!; Comments welcome; Stay connected to IBM Redbooks; Chapter 1. Introduction; 1.1 I/O channel overview; 1.1.1 I/O hardware infrastructure; 1.1.2 I/O connectivity features; 1.2 FICON Express; 1.3 zHyperLink Express; 1.4 Open Systems Adapter-Express; 1.5 HiperSockets; 1.6 Parallel Sysplex and coupling links; 1.7 Shared Memory Communications; 1.8 I/O feature support; 1.9 Special-purpose feature support; 1.9.1 Crypto Express features; 1.9.2 Flash Express feature; 1.9.3 zEDC Express feature; Chapter 2. Channel subsystem overview; 2.1 CSS description; 2.1.1 CSS elements -

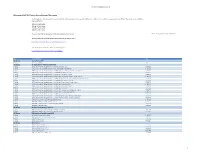

IBM Services ISMS/PIMS Products/PIDs in Scope

1H 2021 Certified Product List IBM services ISMS/PIMS Product/Service Offerings/PIDs in scope The following is a list of products associated with the offering bundles in scope of the IBM services information security management system (ISMS). The Cloud services ISMS has been certified on: ISO/IEC 27001:2013 ISO/IEC 27017:2015 ISO/IEC 27018:2019 ISO/IEC 27701:2019 As well as the IBM Cloud Services STAR Self-Assessment found here: This listing is current as of 07/20/2021 IBM Cloud Services STAR Self-Assessment Cloud Controls Matrix v3.0.1 https://cloudsecurityalliance.org/registry/ibm-cloud/ To find out more about IBM Cloud compliance go to: https://www.ibm.com/cloud/compliance/global type groupNameproductName pids ISO Group AccessHub-at-IBM Offering AccessHub-at-IBM N/A ISO Group AI Applications - Maximo and TRIRIGA Offering IBM Enterprise Asset Management Anywhere on Cloud (Maximo) 5725-Z55 Offering IBM Enterprise Asset Management Anywhere on Cloud (Maximo) Add-On 5725-Z55 Offering IBM Enterprise Asset Management on Cloud (Maximo) Asset Configuration Manager Add-On 5725-P73 Offering IBM Enterprise Asset Management on Cloud (Maximo) Aviation Add-On 5725-P73 Offering IBM Enterprise Asset Management on Cloud (Maximo) Calibration Add-On 5725-P73 Offering IBM Enterprise Asset Management on Cloud (Maximo) for Managed Service Provider Add-On 5725-P73 Offering IBM Enterprise Asset Management on Cloud (Maximo) Health, Safety and Environment Manager Add-On 5725-P73 Offering IBM Enterprise Asset Management on Cloud (Maximo) Life Sciences Add-On -

Ethernet Networks: Design, Implementation, Operation, Management

Ethernet Networks: Design, Implementation, Operation, Management. Gilbert Held Copyright 2003 John Wiley & Sons, Ltd. ISBN: 0-470-84476-0 ethernet networks Fourth Edition Books by Gilbert Held, published by Wiley Quality of Service in a Cisco Networking Environment 0 470 84425 6 (April 2002) Bulletproofing TCP/IP-Based Windows NT/2000 Networks 0 471 49507 7 (April 2001) Understanding Data Communications: From Fundamentals to Networking, Third Edition 0 471 62745 3 (October 2000) High Speed Digital Transmission Networking: Covering T/E-Carrier Multiplexing, SONET and SDH, Second Edition 0 471 98358 6 (April 1999) Data Communications Networking Devices: Operation, Utilization and LAN and WAN Internetworking, Fourth Edition 0 471 97515 X (November 1998) Dictionary of Communications Technology: Terms, Definitions and Abbreviations, Third Edition 0 471 97517 6 (May 1998) Internetworking LANs and WANs: Concepts, Techniques and Methods, Second Edition 0 471 97514 1 (May 1998) LAN Management with SNMP and RMON 0 471 14736 2 (September 1996) ethernet networks Fourth Edition ♦ Design ♦ Implementation ♦ Operation ♦ Management GILBERT HELD 4-Degree Consulting, Macon, Georgia, USA Copyright 2003 John Wiley & Sons Ltd, The Atrium, Southern Gate, Chichester, West Sussex PO19 8SQ, England Telephone (+44) 1243 779777 Email (for orders and customer service enquiries): [email protected] Visit our Home Page on www.wileyeurope.com or www.wiley.com All Rights Reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except under the terms of the Copyright, Designs and Patents Act 1988 or under the terms of a licence issued by the Copyright Licensing Agency Ltd, 90 Tottenham Court Road, London W1T 4LP, UK, without the permission in writing of the Publisher.