Are Ethernet Attached SSDs Happening?

Recommended publications

Semiconductor Times, April 2020

SEMICONDUCTOR TIMES®, APR 2020, VOL 25, ISSUE 4. FOCUSED ON EMERGING SEMICONDUCTOR COMPANIES.

Startup Profiles

Axonne
Axonne was founded in early 2018 to develop connectivity solutions for automotive and industrial applications. The company has received funding from Translink Capital. We believe the company is developing automotive ethernet ICs.
Gani Jusuf, Ph.D., Co-Founder and CEO (previously VP of engineering at Marvell)
William Lo, Co-Founder and CTO (previously VP, R&D Datacom Engineering at Marvell)
Sunnyvale, CA
www.axonne.com

[...] bandwidth in the automotive market. Next generation cars require multiple layers of security protection to prevent, detect, and adapt to evolving threats. The auto industry is increasingly adopting Ethernet solutions and 2019 will see the international standardization (IEEE) of multi-gigabit Ethernet bandwidth rates for automotive applications.
Ethernovia is developing an Ethernet system sophisticated enough to process the bandwidth demands of future automotive systems by unifying in-vehicle networks into an end to end Ethernet system. Ethernovia is developing an Ethernet solution that will provide a unified, standards-based solution built to meet automotive functional safety requirements.
Christopher Mash, VP of Business Development (previously Senior Dir. Automotive Apps, Product Definition and Architecture at Marvell)
Craig Farnsworth, GM and Senior Dir. of Engineering (previously Senior Director of Digital Design at Peraso)
Ahmad Tavakoli, Sr. Director, Analog & Mixed-Signal Design (previously Sr. Hardware Engineer at Google and Verily Life Sciences, and Sr. Director of Engineering at Aquantia)
2050 Ringwood Ave Suite B, San Jose, CA 95131
www.ethernovia.com

ONiO
ONiO was founded in 2016 to develop

STOXX® Global 3000 Technology Index

SUPERSECTOR INDICES: STOXX® GLOBAL 3000 TECHNOLOGY INDEX

Stated objective
The STOXX Supersector indices track supersectors of the relevant benchmark index. There are 19 supersectors according to the Industry Classification Benchmark (ICB). Companies are categorized according to their primary source of revenue. The following supersectors are available: automobile and parts, banks, basic resources, chemicals, construction and materials, financial services, food and beverage, health care, industrial goods and services, insurance, media, oil and gas, personal and household goods, real estate, communications, retail, technology, travel and leisure and utilities.

Key facts
» Categorization according to their primary source of revenue guarantees an accurate classification of companies in their respective business environments
» Component capping ensures that no component can dominate the index

Descriptive statistics

| Index | Market cap, full (USD bn.) | Market cap, free-float (USD bn.) | Component mean (USD bn.) | Component median (USD bn.) | Largest component (USD bn.) | Smallest component (USD bn.) | Largest component weight (%) | Smallest component weight (%) | Turnover, last 12 months (%) |
|---|---|---|---|---|---|---|---|---|---|
| STOXX Global 3000 Technology Index | 4,573.6 | 4,143.5 | 19.6 | 5.4 | 618.0 | 1.2 | 14.9 | 0.0 | 4.1 |
| STOXX Global 3000 Index | 48,487.0 | 40,843.1 | 13.6 | 5.1 | 618.0 | 1.2 | 1.5 | 0.0 | 3.4 |

[Charts: Supersector weighting (top 10); Country weighting]

Risk and return figures

| Index | Return (%): last month | YTD | 1Y | 3Y | 5Y | Annualized return (%): last month | YTD | 1Y | 3Y | 5Y |
|---|---|---|---|---|---|---|---|---|---|---|
| STOXX Global 3000 Technology Index | 3.8 | 15.3 | 33.8 | 56.5 | 87.5 | 55.8 | 23.3 | 33.0 | 15.7 | 13.1 |
| STOXX Global 3000 Index | 2.3 | 7.5 | 21.7 | 51.5 | 87.5 | 31.3 | 11.2 | 21.2 | 14.5 | 13.1 |

SK Hynix to Acquire Intel NAND Memory Business

NEWS HIGHLIGHTS

- SK hynix will pay US $9 billion for the Intel NAND memory and storage business, which includes the NAND SSD business, the NAND component and wafer business, and the Dalian NAND memory manufacturing facility in China.
- SK hynix aims to enhance the competitiveness of its NAND flash solutions as one of the leading global semiconductor companies and grow the memory ecosystem to the benefit of customers, partners, employees and shareholders.
- Intel will retain its Intel® Optane™ business and intends to invest transaction proceeds in long-term growth priorities.

SEOUL, Republic of Korea and SANTA CLARA, Calif., Oct. 20, 2020 -- SK hynix and Intel today announced that they have signed an agreement on Oct. 20, KST, under which SK hynix would acquire Intel’s NAND memory and storage business for US $9 billion. The transaction includes the NAND SSD business, the NAND component and wafer business, and the Dalian NAND memory manufacturing facility in China. Intel will retain its distinct Intel® Optane™ business.

SK hynix and Intel will endeavor to obtain required governmental approvals expected in late 2021. Following receipt of these approvals, SK hynix will acquire from Intel the NAND SSD business (including NAND SSD-associated IP and employees), as well as the Dalian facility, with the first payment of US $7 billion. SK hynix will acquire from Intel the remaining assets, including IP related to the manufacture and design of NAND flash wafers, R&D employees, and the Dalian fab workforce, upon a final closing, expected to occur in March 2025 with the remaining payment of US $2 billion.

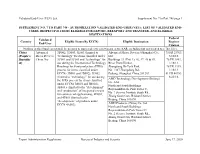

Comparison of Leading Edge 3D NAND Memories: Toshiba-SanDisk / Samsung / SK Hynix / Intel-Micron. Memory Report by Belinda Dube, December 2018

REVERSE COSTING® – STRUCTURAL, PROCESS & COST REPORT
3D NAND FLASH MEMORIES
Comparison of Leading Edge 3D NAND Memories: TOSHIBA-SanDisk / SAMSUNG / SK HYNIX / INTEL-MICRON
Memory report by Belinda Dube, December 2018
22 Bd. Benoni Goullin, 44200 NANTES - FRANCE, +33 2 40 18 09 16, [email protected], www.systemplus.fr

Table of Contents

- Overview / Introduction
  - Executive Summary
  - Reverse Costing Methodology
- Company Profile
  - Toshiba/SanDisk
  - Samsung
  - SK Hynix
  - Micron/Intel
- Technology & Market
  - NAND Roadmap
  - NAND Revenue
- Physical Analysis
  - Toshiba: Synthesis of the Physical Analysis, Physical Analysis Methodology, Physical Analysis (Die, Cross-Section), Patents
  - Samsung: Synthesis of the Physical Analysis, Physical Analysis Methodology, Physical Analysis (Die, Cross-Section), Patents
  - SK Hynix: Synthesis, Die Design, Cross-Section, Patents
  - Intel/Micron: Synthesis, Die Design, Cross-Section, Patents
- Manufacturing Process Flow
  - Global Overview
  - Wafer Fabrication Unit
  - Front-End Process
- Cost Analysis
  - Synthesis of the Cost Analysis
  - Yields Explanation & Hypotheses
  - NAND Wafer and Die Cost
  - Front-End Cost
  - Component Cost
- Estimated Price and Manufacturer Gross Margin
- Company Services

Executive Summary

The memory semiconductor industry continues to grow due to higher memory demand in consumer electronics and mass storage. Remarkable investments are channeled into the memory manufacturing business. IoT escalates this demand and hence manufacturers continue to increase the die density of memories at the same time aiming on reduction of the NAND die.

Case 1:14-cv-01432-LPS-CJB Document 13 Filed 03/27/15 Page 1 of 57 PageID #: 219

IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE

ELM 3DS INNOVATIONS, LLC, a Delaware limited liability company, Plaintiff,
v.
SK HYNIX INC., a Korean corporation, SK HYNIX AMERICA INC., a California corporation, HYNIX SEMICONDUCTOR MANUFACTURING AMERICA INC., a California corporation, and SK HYNIX MEMORY SOLUTIONS INC., a Delaware corporation, Defendants.

C.A. No. 1:14-cv-01432-LPS-CJB
Jury Trial Demanded

FIRST AMENDED COMPLAINT FOR PATENT INFRINGEMENT

Plaintiff Elm 3DS Innovations, LLC (“Plaintiff” or “Elm 3DS”), by its attorneys, for its complaint against Defendants SK hynix Inc., and its U.S. subsidiaries and related entities SK hynix America Inc., Hynix Semiconductor Manufacturing America Inc., and SK hynix Memory Solutions Inc. (individually or collectively “Defendants” or “Hynix”) hereby alleges as follows:

INTRODUCTION

1. This is an action for patent infringement under the Patent Laws of the United States, 35 U.S.C. § 1 et seq., for infringing the following Elm 3DS patents:

(a) U.S. Patent No. 7,193,239 (“Leedy ’239 patent”), entitled “Three Dimensional Structure Integrated Circuit,” owned by Elm 3DS Innovations, LLC (attached as Exhibit 1);
(b) U.S. Patent No. 7,474,004 (“Leedy ’004 patent”), entitled “Three Dimensional Structure Memory,” owned by Elm 3DS Innovations, LLC (attached as Exhibit 2);
(c) U.S. Patent No. 7,504,732 (“Leedy ’732 patent”), entitled “Three Dimensional Structure Memory,” owned by Elm 3DS Innovations, LLC (attached as Exhibit 3);
(d) U.S.

Is This the End of South Korea's Semi-Charmed Export Life? Live by the Semiconductor, Die by the Semiconductor

The Diplomat. Received by NSD/FARA Registration Unit 01/10/2019 12:30:00 PM.

Live by the semiconductor, die by the semiconductor.

By Troy Stangarone, January 10, 2019. Image credit: Pixabay.

The last two years have been a semi-charmed period for South Korean exports. As semiconductor demand and prices rose, so too did South Korean exports. But what has been a fortuitous period for South Korean exports may be coming to an end.

The boom in demand for semiconductors came right when South Korea needed it. After a record $572.7 billion in exports in 2014, South Korea saw total exports fall for two consecutive years for the first time in 58 years. Exports bottomed out at $495.4 billion in 2016, also falling below $500 billion for the first time since 2010. By 2017, South Korean exports had bounced back to a new high of $573.7 billion, and are on pace to exceed that amount in 2018 when the final numbers are released.

However, unlike the sharp bounce back after the Global Financial Crisis, this bounce back was due in large part to the beginning of a super-cycle in semiconductors rather than an upswing in global economic growth. While South Korean firms produce processing chips, they have come to dominate in the memory segment. Samsung and SK Hynix account for roughly 75 percent of the DRAM market, with Samsung alone accounting for 45 percent of the market.

SK hynix e-NAND Product Family eMMC5.1 Compatible

SK hynix e-NAND Product Family, eMMC5.1 Compatible
Rev 1.6 / Jul. 2016

Revision History

| Revision No. | History | Date | Remark |
|---|---|---|---|
| 1.0 | 1st Official release | May. 20, 2015 | |
| 1.1 | Change ‘FFU argument’ value in ‘send FW to device’ from 0x6600 to 0xFFFAFFF0 (p.19) | May. 22, 2015 | |
| 1.2 | Add ‘Vccq=2.7V ~ 3.6V’ (p.4); Change ‘The last value of PNM’ from ‘1’ to ‘2’ (p.65); Modify VENDOR_PROPRIETARY_HEALTH_REPORT[301-270] (p.22); Change the typo: bit[0] value of Cache Flush Policy (p.33) | May. 28, 2015 | |
| 1.3 | Modify 4.2.7 RPMB throughput improvement (p.43); Change ‘PON Busy Time’ (p.63); Modify CSD/EXT_CSD values of 64GB (WP_GRP_SIZE, etc.) (p.68 ~ 73) | Jul. 07, 2015 | |
| 1.4 | Modify the Typo of 64GB PNM Value (p.65); Modify the value of TRIM multiplier (p.69) | Nov. 13, 2015 | |
| 1.5 | Modification of power value (p.56); Modification of PSN value and usage guidance | Apr. 20, 2016 | |
| 1.6 | Added 8GB Information; Modification of PKG Ball Size Value | Jul. 21, 2016 | |

Table of Contents

1. Introduction
1.1 General Description
1.2 Product Line-up
1.3 Key Features

September 28, 2017 Toshiba Corporation for IMMEDIATE RELEASE Notice on the Signing of a Share Purchase Agreement with a Bain

September 28, 2017
Toshiba Corporation
FOR IMMEDIATE RELEASE

Notice on the Signing of a Share Purchase Agreement with a Bain Capital-Led Consortium for the Sale of Toshiba Memory Corporation

TOKYO - Toshiba Corporation’s Board of Directors (TOKYO: 6502) (Toshiba), as announced on September 20, 2017, “Notice on the Sale of Toshiba Memory Corporation,” has approved the sale of all shares of Toshiba Memory Corporation (TMC), a wholly owned subsidiary of Toshiba, to K.K. Pangea (Pangea), a special purpose acquisition company formed and controlled by a Bain Capital Private Equity, LP (including its affiliates, Bain Capital)-led consortium (the Consortium). Today, Toshiba has entered into a Share Purchase Agreement (SPA) with Pangea. An outline of the agreement follows.

1. Names of Seller and Purchaser
Seller: Toshiba Corporation
Purchaser: K.K. Pangea

2. Target Shares
All shares of TMC

3. Purchase Price
Two trillion yen
This amount is based on estimated debt, working capital, and capital expenditures by Toshiba and TMC. Any discrepancy with actual figures will be addressed by a purchase price adjustment determined after the closing of the share purchase. If the shares held by Toshiba in three joint venture entities* are not transferred to TMC, the estimated value of such shares in the joint ventures as of the end of the month before closing will be deducted as a purchase price adjustment.

*Flash Partners, Ltd., Flash Alliance, Ltd. and Flash Forward, Ltd. are three joint ventures between Toshiba, SanDisk LLC, a subsidiary of Western Digital Corporation, and certain SanDisk subsidiaries for the joint investment in equipment used at TMC’s Yokkaichi facilities in the production of NAND Flash Memory.

As a New Interface NVMe Enjoys Unprecedented

As a new interface, NVMe enjoys unprecedented breadth and depth of industry support and involvement, a testament to its leading edge technology and to the organization’s openness and inclusiveness. NVMe has the support of companies that range from those in the Fortune 50 to those taking advantage of this technology shift to make their name in the industry. Below is a list of just some of the 100 plus members of the NVM Express organization.

Promoter Member: Cisco DellEMC NetApp Intel Corporation Oracle America Micron Samsung Microsoft Corporation Seagate Technology Microsemi Western Digital Technologies, Inc.

Contributor Member: Apeiron Mangstor Avago Technologies Marvell Semiconductor Baidu Mentor Graphics Corporation Beijing Memblaze Technology Co. Ltd. Mobiveil, Inc. Broadcom Corporation NEC Corporation Cadence Design Systems NetBRIC Technology Co., Ltd. Cavium, Inc. OCZ Storage Solutions, Inc. CNEX Labs, Inc. Pavilion Data Systems Crossfield Technology LLC Phison Electronics Corp. Elastifile Ltd. Qlogic Corporation Excelero Quanta Computer Inc. Fujitsu Realtek Semiconductor Corp. G2M Communications Inc. Red Hat Inc. Google, Inc. SANBlaze Technology, Inc. Greenliant Systems Silicon Motion Grace Technology Labs Inc. SK hynix memory solutions, Inc. Hewlett-Packard Company SMART Modular Technologies Hitachi, Ltd. TDK Corporation Huawei Technologies Co. Ltd. Teledyne LeCroy Hyperstone GmbH Tidal Systems, Inc. International Business Machines Corporation Toshiba Corporation IP-Maker ULINK Technology, Inc. JDSU - Storage Network Test Unigen DataStorage Corporation JMicron Technology Corp. VIA Alliance Semiconductor Co., Ltd. Kalray, Inc. X-IO Technologies Kazan Networks Corporation Xilinx

Adopter Member: Apacer Technology Inc. OSR Open Systems Resources, Inc. Echostreams Innovative Solutions LLC Pivot3 eInfochips, Inc. Qnap Systems, Inc.

Multi-Company Report

November 30, 2015
Equity Research: Semiconductors

Chip Weekly: Bumping Along The Bottom

Last week ADI reported that all of its end market segments except consumer (driven by a win in Apple’s iPhone) declined year/year in its October quarter. Infineon guided for sales to decline 6% in the December quarter. PMC-Sierra and Microsemi jointly announced an agreement for Microsemi to acquire PMC-Sierra, indicating that PMC-Sierra has decided to terminate its agreement to be acquired by Skyworks.

ADI's October quarter results were a fair amount above guidance, with overall growth driven by a big jump in consumer related revenues (associated, we believe, with chips for Apple's iPhone). However, ADI's other segments were all down year/year, consistent with broad-based softness reflected in other recent chip reports and other data. ADI reported quarterly revenue of $978.7 million (up 13% sequentially and up 20% yr/yr), which exceeded the high end of ADI’s original guidance range of $880-$940 million. ADI expects sales to be down 13% to 17% sequentially in the January quarter in the $805-$855 million range.

Infineon reported September quarter sales of EUR 1.59 billion (up 1% sequentially and up 36% yr/yr). Infineon guided December quarter sales to decline 6% sequentially, plus or minus 2 percentage points due to seasonal factors.

PMC-Sierra and Microsemi jointly announced an agreement for Microsemi to acquire PMC-Sierra. Our understanding is that this will involve PMC-Sierra paying a breakup fee to Skyworks to terminate the acquisition agreement between Skyworks and PMC-Sierra.

Validated End-User (VEU) List Supplement No. 7 to Part 748 Page 1

SUPPLEMENT NO. 7 TO PART 748 - AUTHORIZATION VALIDATED END-USER (VEU): LIST OF VALIDATED END-USERS, RESPECTIVE ITEMS ELIGIBLE FOR EXPORT, REEXPORT AND TRANSFER, AND ELIGIBLE DESTINATIONS

Nothing in this Supplement shall be deemed to supersede other provisions in the EAR, including but not limited to § 748.15(c).

Country: China (People’s Republic of)

Validated End-User: Advanced Micro Devices China, Inc.

Eligible Items (By ECCN): 3D002, 3D003, 3E001 (limited to “technology” for items classified under 3C002 and 3C004 and “technology” for use during the International Technology Roadmap for Semiconductors (ITRS) process for items classified under ECCNs 3B001 and 3B002), 3E002 (limited to “technology” for use during the ITRS process for items classified under ECCNs 3B001 and 3B002), 3E003.e (limited to the “development” and “production” of integrated circuits for commercial applications), 4D001, and 4E001 (limited to the “development” of products under ECCN 4A003).

Eligible Destination:
- Advanced Micro Devices (Shanghai) Co., Ltd., Buildings 33 (Unit 1), 46, 47, 48 & 49, River Front Harbor, Zhangjiang Hi-Tech Park, No. 1387 Zhangdong Rd., Pudong, Shanghai, China 201203.
- AMD Technology Development (Beijing) Co., Ltd., North and South Buildings RaycomInfotech, Park Tower C, No. 2 Science Institute South Rd., Zhong Guan Cun, Haidian District, Beijing, China 100190.
- AMD Products (China) Co. Ltd., North and South Buildings RaycomInfotech Park Tower C, No. 2 Science Institute South Rd., Zhong Guan Cun, Haidian District, Beijing, China 100190.

Federal Register Citation: 75 FR 25763, 5/10/10. 76 FR 2802, 1/18/11. 78 FR 3319, 1/16/13. 81 FR 40783, 6/23/16.

Current Status of the Integrated Circuit Industry in China

J. Microelectron. Manuf. 3, 20030203 (2020), doi: 10.33079/jomm.20030203

Editorial Introduction: China's IC industry has been flourishing in recent years, huge market demand together with government investments are the major driving forces for this development. The status and development momentum of the Chinese IC industry also attracted wide interest and attention of international counterparts. A group of domestic IC experts are invited by the JoMM to write a series of articles about China's IC industry, including the history, current status, development, and related government policies. Information in these articles is all from public data from recent years. The purpose of these articles is to enhance mutual understanding between the Chinese domestic IC industry and international IC ecosystem.

Current Status of the Integrated Circuit Industry in China: Overview of the Memory Industry

1. Status of China's Memory Market

In the past years, the Chinese memory chip market has been occupied by foreign manufacturers. According to TrendForce statistics [1], the top five global NAND Flash in Q4 2019 are by Samsung (36.6%), Kioxia (18.9%), Western Digital (14.2%), Micron Technology (12.1%) and SK Hynix (9.7%).

[...] products, China has a huge demand for memory chips. According to data released by the General Administration of Customs of China [2], the total cost of imported integrated circuits in China reached 312.058 billion dollars throughout 2018. Among them, the number of memory imports was as high as 123.083 billion dollars (imports increased by 1188.99% year-on-year), accounting for 39.4% of total imports.