The Next Generation Amd Enterprise Server Product

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

CFD Analyses of a Notebook Computer Thermal Management

PREPRINT. 1 Ilker Tari and Fidan Seza Yalçin, "CFD Analyses of a Notebook Computer Thermal Management System and a Proposed Passive Cooling Alternative, IEEE Transactions on Components and Packaging Technologies, Vol. 33, No. 2, pp. 443-452 (2010). CFD Analyses of a Notebook Computer Thermal Management System and a Proposed Passive Cooling Alternative Ilker Tari, and Fidan Seza Yalcin H Fin height, mm. Abstract— A notebook computer thermal management system L Heat sink vertical length, mm. is analyzed using a commercial CFD software package (ANSYS Nu Nusselt Number. Fluent). The active and passive paths that are used for heat Pr Prandtl Number. dissipation are examined for different steady state operating Ra Rayleigh Number. conditions. For each case, average and hot-spot temperatures of Re Reynolds Number. the components are compared with the maximum allowable T Temperature, °C or K. operating temperatures. It is observed that when low heat W Heat sink width, mm. dissipation components are put on the same passive path, the 2 increased heat load of the path may cause unexpected hot spot g Gravitational acceleration, m/s . 2 temperatures. Especially, Hard Disk Drive (HDD) is susceptible h Convection heat transfer coefficient, W/(m ·K). to overheating and the keyboard surface may reach k Thermal conductivity, W/(m·K). ergonomically undesirable temperatures. Based on the analysis q Heat transfer rate, W. results and observations, a new component arrangement s Fin spacing, mm. considering passive paths and using the back side of the LCD screen is proposed and a simple correlation based thermal Greek Symbols analysis of the proposed system is presented. -

Effective Virtual CPU Configuration with QEMU and Libvirt

Effective Virtual CPU Configuration with QEMU and libvirt Kashyap Chamarthy <[email protected]> Open Source Summit Edinburgh, 2018 1 / 38 Timeline of recent CPU flaws, 2018 (a) Jan 03 • Spectre v1: Bounds Check Bypass Jan 03 • Spectre v2: Branch Target Injection Jan 03 • Meltdown: Rogue Data Cache Load May 21 • Spectre-NG: Speculative Store Bypass Jun 21 • TLBleed: Side-channel attack over shared TLBs 2 / 38 Timeline of recent CPU flaws, 2018 (b) Jun 29 • NetSpectre: Side-channel attack over local network Jul 10 • Spectre-NG: Bounds Check Bypass Store Aug 14 • L1TF: "L1 Terminal Fault" ... • ? 3 / 38 Related talks in the ‘References’ section Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications What this talk is not about 4 / 38 Related talks in the ‘References’ section What this talk is not about Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications 4 / 38 What this talk is not about Out of scope: Internals of various side-channel attacks How to exploit Meltdown & Spectre variants Details of performance implications Related talks in the ‘References’ section 4 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) libvirtd QMP QMP QEMU QEMU VM1 VM2 Custom Disk1 Disk2 Appliance ioctl() KVM-based virtualization components Linux with KVM 5 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) libvirtd QMP QMP Custom Appliance KVM-based virtualization components QEMU QEMU VM1 VM2 Disk1 Disk2 ioctl() Linux with KVM 5 / 38 OpenStack, et al. libguestfs Virt Driver (guestfish) Custom Appliance KVM-based virtualization components libvirtd QMP QMP QEMU QEMU VM1 VM2 Disk1 Disk2 ioctl() Linux with KVM 5 / 38 libguestfs (guestfish) Custom Appliance KVM-based virtualization components OpenStack, et al. -

AMD EPYC™ 7371 Processors Accelerating HPC Innovation

AMD EPYC™ 7371 Processors Solution Brief Accelerating HPC Innovation March, 2019 AMD EPYC 7371 processors (16 core, 3.1GHz): Exceptional Memory Bandwidth AMD EPYC server processors deliver 8 channels of memory with support for up The right choice for HPC to 2TB of memory per processor. Designed from the ground up for a new generation of solutions, AMD EPYC™ 7371 processors (16 core, 3.1GHz) implement a philosophy of Standards Based AMD is committed to industry standards, choice without compromise. The AMD EPYC 7371 processor delivers offering you a choice in x86 processors outstanding frequency for applications sensitive to per-core with design innovations that target the performance such as those licensed on a per-core basis. evolving needs of modern datacenters. No Compromise Product Line Compute requirements are increasing, datacenter space is not. AMD EPYC server processors offer up to 32 cores and a consistent feature set across all processor models. Power HPC Workloads Tackle HPC workloads with leading performance and expandability. AMD EPYC 7371 processors are an excellent option when license costs Accelerate your workloads with up to dominate the overall solution cost. In these scenarios the performance- 33% more PCI Express® Gen 3 lanes. per-dollar of the overall solution is usually best with a CPU that can Optimize Productivity provide excellent per-core performance. Increase productivity with tools, resources, and communities to help you “code faster, faster code.” Boost AMD EPYC processors’ innovative architecture translates to tremendous application performance with Software performance. More importantly, the performance you’re paying for can Optimization Guides and Performance be matched to the appropriate to the performance you need. -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation ICPE ’19, April 7–11, 2019, Mumbai, India Memory Centric Characterization and Analysis of SPEC CPU2017 Suite Sarabjeet Singh Manu Awasthi [email protected] [email protected] Ashoka University Ashoka University ABSTRACT These benchmarks have become the standard for any researcher or In this paper, we provide a comprehensive, memory-centric charac- commercial entity wishing to benchmark their architecture or for terization of the SPEC CPU2017 benchmark suite, using a number of exploring new designs. mechanisms including dynamic binary instrumentation, measure- The latest offering of SPEC CPU suite, SPEC CPU2017, was re- ments on native hardware using hardware performance counters leased in June 2017 [8]. SPEC CPU2017 retains a number of bench- and operating system based tools. marks from previous iterations but has also added many new ones We present a number of results including working set sizes, mem- to reflect the changing nature of applications. Some recent stud- ory capacity consumption and memory bandwidth utilization of ies [21, 24] have already started characterizing the behavior of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 applications, looking for potential optimizations to SPEC CPU2017 workloads execute a significant number of mem- system architectures. ory related instructions, with approximately 50% of all dynamic In recent years the memory hierarchy, from the caches, all the instructions requiring memory accesses. We also show that there is way to main memory, has become a first class citizen of computer a large variation in the memory footprint and bandwidth utilization system design. -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks Kaivalya M. Dixit IBM Corporation “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard,MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 489 490 CHAPTER 9 Overview of the SPEC Benchmarks 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

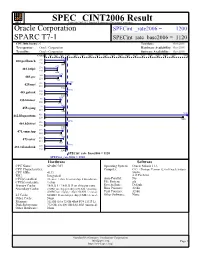

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result spec Copyright 2006-2015 Standard Performance Evaluation Corporation Oracle Corporation SPECint_rate2006 = 1200 SPARC T7-1 SPECint_rate_base2006 = 1120 CPU2006 license: 6 Test date: Oct-2015 Test sponsor: Oracle Corporation Hardware Availability: Oct-2015 Tested by: Oracle Corporation Software Availability: Oct-2015 Copies 0 300 600 900 1200 1600 2000 2400 2800 3200 3600 4000 4400 4800 5200 5600 6000 6400 6800 7600 1100 400.perlbench 192 224 1040 675 401.bzip2 256 224 666 875 403.gcc 160 224 720 1380 429.mcf 128 224 1160 1190 445.gobmk 256 224 1120 854 456.hmmer 96 224 813 1020 458.sjeng 192 224 988 7550 462.libquantum 416 224 7330 1190 464.h264ref 256 224 1150 956 471.omnetpp 255 224 885 986 473.astar 416 224 862 1180 483.xalancbmk 256 224 1140 SPECint_rate_base2006 = 1120 SPECint_rate2006 = 1200 Hardware Software CPU Name: SPARC M7 Operating System: Oracle Solaris 11.3 CPU Characteristics: Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris CPU MHz: 4133 Studio, FPU: Integrated 4/15 Patch Set CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core Auto Parallel: No CPU(s) orderable: 1 chip File System: zfs Primary Cache: 16 KB I + 16 KB D on chip per core System State: Default Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores); Base Pointers: 32-bit 4 MB D on chip per chip (256 KB / 2 cores) Peak Pointers: 32-bit L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores) Other Software: None Other Cache: None Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L) Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD -

Poweredge R940 (Intel Xeon Gold 5122, 3.60 Ghz) Specint Rate Base2006 = 1080 CPU2006 License: 55 Test Date: Jun-2017 Test Sponsor: Dell Inc

SPEC CINT2006 Result spec Copyright 2006-2017 Standard Performance Evaluation Corporation Dell Inc. SPECint_rate2006 = 1150 PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz) SPECint_rate_base2006 = 1080 CPU2006 license: 55 Test date: Jun-2017 Test sponsor: Dell Inc. Hardware Availability: Jul-2017 Tested by: Dell Inc. Software Availability: Nov-2016 Copies 0 1000 2000 3000 4000 5000 6000 7000 8000 9000 10000 11000 12000 13000 14000 15000 16000 18000 917 400.perlbench 32 32 762 521 401.bzip2 32 32 487 808 403.gcc 32 32 802 1480 429.mcf 32 624 445.gobmk 32 2010 456.hmmer 32 32 1530 710 458.sjeng 32 32 658 17700 462.libquantum 32 1170 464.h264ref 32 32 1130 554 471.omnetpp 32 32 509 614 473.astar 32 1430 483.xalancbmk 32 SPECint_rate_base2006 = 1080 SPECint_rate2006 = 1150 Hardware Software CPU Name: Intel Xeon Gold 5122 Operating System: SUSE Linux Enterprise Server 12 SP2 CPU Characteristics: Intel Turbo Boost Technology up to 3.70 GHz 4.4.21-69-default CPU MHz: 3600 Compiler: C/C++: Version 17.0.3.191 of Intel C/C++ FPU: Integrated Compiler for Linux CPU(s) enabled: 16 cores, 4 chips, 4 cores/chip, 2 threads/core Auto Parallel: Yes CPU(s) orderable: 2,4 chip File System: xfs Primary Cache: 32 KB I + 32 KB D on chip per core System State: Run level 3 (multi-user) Secondary Cache: 1 MB I+D on chip per core Base Pointers: 32-bit L3 Cache: 16.5 MB I+D on chip per chip Peak Pointers: 32/64-bit Other Cache: None Other Software: Microquill SmartHeap V10.2 Memory: 768 GB (48 x 16 GB 2Rx8 PC4-2666V-R) Disk Subsystem: 1 x 960 GB SATA SSD Other Hardware: None Standard Performance Evaluation Corporation [email protected] Page 1 http://www.spec.org/ SPEC CINT2006 Result spec Copyright 2006-2017 Standard Performance Evaluation Corporation Dell Inc. -

Evaluation of AMD EPYC

Evaluation of AMD EPYC Chris Hollowell <[email protected]> HEPiX Fall 2018, PIC Spain What is EPYC? EPYC is a new line of x86_64 server CPUs from AMD based on their Zen microarchitecture Same microarchitecture used in their Ryzen desktop processors Released June 2017 First new high performance series of server CPUs offered by AMD since 2012 Last were Piledriver-based Opterons Steamroller Opteron products cancelled AMD had focused on low power server CPUs instead x86_64 Jaguar APUs ARM-based Opteron A CPUs Many vendors are now offering EPYC-based servers, including Dell, HP and Supermicro 2 How Does EPYC Differ From Skylake-SP? Intel’s Skylake-SP Xeon x86_64 server CPU line also released in 2017 Both Skylake-SP and EPYC CPU dies manufactured using 14 nm process Skylake-SP introduced AVX512 vector instruction support in Xeon AVX512 not available in EPYC HS06 official GCC compilation options exclude autovectorization Stock SL6/7 GCC doesn’t support AVX512 Support added in GCC 4.9+ Not heavily used (yet) in HEP/NP offline computing Both have models supporting 2666 MHz DDR4 memory Skylake-SP 6 memory channels per processor 3 TB (2-socket system, extended memory models) EPYC 8 memory channels per processor 4 TB (2-socket system) 3 How Does EPYC Differ From Skylake (Cont)? Some Skylake-SP processors include built in Omnipath networking, or FPGA coprocessors Not available in EPYC Both Skylake-SP and EPYC have SMT (HT) support 2 logical cores per physical core (absent in some Xeon Bronze models) Maximum core count (per socket) Skylake-SP – 28 physical / 56 logical (Xeon Platinum 8180M) EPYC – 32 physical / 64 logical (EPYC 7601) Maximum socket count Skylake-SP – 8 (Xeon Platinum) EPYC – 2 Processor Inteconnect Skylake-SP – UltraPath Interconnect (UPI) EYPC – Infinity Fabric (IF) PCIe lanes (2-socket system) Skylake-SP – 96 EPYC – 128 (some used by SoC functionality) Same number available in single socket configuration 4 EPYC: MCM/SoC Design EPYC utilizes an SoC design Many functions normally found in motherboard chipset on the CPU SATA controllers USB controllers etc. -

IBM Power Systems Performance Report Apr 13, 2021

IBM Power Performance Report Power7 to Power10 September 8, 2021 Table of Contents 3 Introduction to Performance of IBM UNIX, IBM i, and Linux Operating System Servers 4 Section 1 – SPEC® CPU Benchmark Performance 4 Section 1a – Linux Multi-user SPEC® CPU2017 Performance (Power10) 4 Section 1b – Linux Multi-user SPEC® CPU2017 Performance (Power9) 4 Section 1c – AIX Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8) 5 Section 1d – Linux Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8) 6 Section 2 – AIX Multi-user Performance (rPerf) 6 Section 2a – AIX Multi-user Performance (Power8, Power9 and Power10) 9 Section 2b – AIX Multi-user Performance (Power9) in Non-default Processor Power Mode Setting 9 Section 2c – AIX Multi-user Performance (Power7 and Power7+) 13 Section 2d – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power8) 15 Section 2e – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power7 and Power7+) 20 Section 3 – CPW Benchmark Performance 19 Section 3a – CPW Benchmark Performance (Power8, Power9 and Power10) 22 Section 3b – CPW Benchmark Performance (Power7 and Power7+) 25 Section 4 – SPECjbb®2015 Benchmark Performance 25 Section 4a – SPECjbb®2015 Benchmark Performance (Power9) 25 Section 4b – SPECjbb®2015 Benchmark Performance (Power8) 25 Section 5 – AIX SAP® Standard Application Benchmark Performance 25 Section 5a – SAP® Sales and Distribution (SD) 2-Tier – AIX (Power7 to Power8) 26 Section 5b – SAP® Sales and Distribution (SD) 2-Tier – Linux on Power (Power7 to Power7+) -

Desktop 3Rd Generation Intel® Core™ Processor Family, Desktop Intel® Pentium® Processor Family, Desktop Intel® Celeron® Processor Family, and LGA1155 Socket

Desktop 3rd Generation Intel® Core™ Processor Family, Desktop Intel® Pentium® Processor Family, Desktop Intel® Celeron® Processor Family, and LGA1155 Socket Thermal Mechanical Specifications and Design Guidelines (TMSDG) January 2013 Document Number: 326767-005 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. A “Mission Critical Application” is any application in which failure of the Intel Product could result, directly or indirectly, in personal injury or death. SHOULD YOU PURCHASE OR USE INTEL'S PRODUCTS FOR ANY SUCH MISSION CRITICAL APPLICATION, YOU SHALL INDEMNIFY AND HOLD INTEL AND ITS SUBSIDIARIES, SUBCONTRACTORS AND AFFILIATES, AND THE DIRECTORS, OFFICERS, AND EMPLOYEES OF EACH, HARMLESS AGAINST ALL CLAIMS COSTS, DAMAGES, AND EXPENSES AND REASONABLE ATTORNEYS' FEES ARISING OUT OF, DIRECTLY OR INDIRECTLY, ANY CLAIM OF PRODUCT LIABILITY, PERSONAL INJURY, OR DEATH ARISING IN ANY WAY OUT OF SUCH MISSION CRITICAL APPLICATION, WHETHER OR NOT INTEL OR ITS SUBCONTRACTOR WAS NEGLIGENT IN THE DESIGN, MANUFACTURE, OR WARNING OF THE INTEL PRODUCT OR ANY OF ITS PARTS. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined”. -

Thermal Guide: Intel® Xeon® Processor E5 V4 Product Family

Intel® Xeon® Processor E5 v4 Product Family Thermal Mechanical Specification and Design Guide June 2016 Document Number: 333812-002 IntelLegal Lines and Disclaimerstechnologies’ features and benefits depend on system configuration and may require enabled hardware, software or service activation. Learn more at Intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppal or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. Intel® Turbo Boost Technology requires a PC with a processor with Intel Turbo Boost Technology capability. Intel Turbo Boost Technology performance varies depending on hardware, software and overall system configuration. Check with your PC manufacturer on whether your system delivers Intel Turbo Boost Technology. For more information, see http://www.intel.com/technology/turboboost Copies of documents which have an order number and are referenced in this document may be obtained by calling 1-800-548- 4725 or by visiting www.intel.com/design/literature.htm. -

AMD EPYC 7002 Architecture Extends Benefits for Storage-Centric

Micron Technical Brief AMD EPYC™ 7002 Architecture Extends Benefits for Storage-Centric Solutions Overview With the release of the second generation of AMD EPYC™ family of processors, Micron believes that AMD has extended the benefits of EPYC as a foundation for storage-centric, all-flash solutions beyond the previous generation. As more enterprises are evaluating and deploying commodity server-based software-defined storage (SDS) solutions, platforms built using AMD EPYC 7002 processors continue to provide massive storage flexibility and throughput using the latest generation of PCI Express® (PCIe™) and NVM Express® (NVMe™) SSDs. With this new offering, Micron revisits our previously released analysis of the advantages that AMD EPYC architecture-based servers provide storage-centric solutions. To best assess the AMD EPYC architecture, we discuss the EPYC 7002 for solid-state storage solutions, based on AMD EPYC product features, capabilities and server manufacturer recommendations. We have not included any specific testing performed by Micron. Each OEM/ODM will have differing server implementation and additional support components, which could ultimately affect solution performance. Architecture Overview The new EPYC 7002 series of enterprise-class server processors, AMD created a second generation of its “Zen” microarchitecture and a second- generation Infinity Fabric™ to interconnect up to eight processor core complex die (CCD) per socket. Each CCD can host up to eight cores together with a centralized I/O controller that handles all PCIe and memory traffic (Figure 1). AMD has doubled the performance of each system-on-a- chip (SoC) while reducing the overall power consumption per core through advanced 7nm process technology over the first generation’s 14nm process, doubling memory DIMM size support to 256GB LRDIMMs while also providing a 2x peripheral throughput increase with the introduction of PCIe Generation 4.0 I/O controllers.