Chapter 1. Interactive Systems

Recommended publications

Planning Curriculum in Art and Design

Planning Curriculum in Art and Design Wisconsin Department of Public Instruction Planning Curriculum in Art and Design Melvin F. Pontious (retired) Fine Arts Consultant Wisconsin Department of Public Instruction Tony Evers, PhD, State Superintendent Madison, Wisconsin This publication is available from: Content and Learning Team Wisconsin Department of Public Instruction 125 South Webster Street Madison, WI 53703 608/261-7494 cal.dpi.wi.gov/files/cal/pdf/art.design.guide.pdf © December 2013 Wisconsin Department of Public Instruction The Wisconsin Department of Public Instruction does not discriminate on the basis of sex, race, color, religion, creed, age, national origin, ancestry, pregnancy, marital status or parental status, sexual orientation, or disability. Foreword Art and design education are part of a comprehensive Pre-K-12 education for all students. The Wisconsin Department of Public Instruction continues its efforts to support the skill and knowledge development for our students across the state in all content areas. This guide is meant to support this work as well as foster additional reflection on the instructional framework that will most effectively support students’ learning in art and design through creative practices. This document represents a new direction for art education, identifying a more in-depth review of art and design education. The most substantial change involves the definition of art and design education as the study of visual thinking – including design, visual communications, visual culture, and fine/studio art. The guide provides local, statewide, and national examples in each of these areas to the reader. The overall framework offered suggests practice beyond traditional modes and instead promotes a more constructivist approach to learning. -

Full Text (Pdf)

Selected Papers from the CLARIN Annual Conference 2015 October 14–16, 2015 Wrocław, Poland Edited by Koenraad De Smedt Published by Linköping University Electronic Press, Sweden Linköping Electronic Conference Proceedings, No. 123 NEALT Proceedings Series, Volume 28 eISSN 1650-3740 • ISSN 1650-3686 • ISBN 978-91-7685-765-6

Preface

These proceedings present the highlights of the CLARIN Annual Conference 2015 that took place in Wrocław, Poland. It is the second volume of what we hope is becoming a series of publications that constitutes a multiannual record of the experience and insights gained within CLARIN. The selected papers present results which were obtained in the projects conducted within and between the national consortia and which contribute to the realization of what CLARIN aims to be: a European Research Infrastructure providing sustainable access to language resources in all forms, analysis services for the processing of language materials, and a platform for the sharing of knowledge that can stimulate the use, reuse and repurposing of the available data. As an international research infrastructure, CLARIN has the potential to lower the barriers to researchers' entry into digital scholarship and cutting-edge research. With the growing number of participating countries and languages covered, CLARIN will help to establish a truly connected and multilingual European Research Area for digital research in the Humanities and Social Sciences, in which Europe’s multilinguality reinforces the basis for the comparative investigation of a wide range of intellectual and societal phenomena. This volume illustrates the various ways in which CLARIN supports researchers and it shows that there are more and more scholarly domains in which language resources are a crucial data type, whether approached as big data or not so big data.

Garment Care Labels & Symbols

| Written Care Instructions | What Care Symbol and Instructions Mean |
| --- | --- |
| Machine Wash, Normal | Garment may be laundered through the use of hottest available water, detergent or soap, agitation, and a machine designed for this purpose. |
| Machine Wash, Cold | Initial water temperature should not exceed 30°C or 65 to 85°F. |
| Machine Wash, Warm | Initial water temperature should not exceed 40°C or 105°F. |
| Machine Wash, Hot | Initial water temperature should not exceed 50°C or 120°F. |
| Machine Wash, Hot | Initial water temperature should not exceed 60°C or 140°F. |
| Machine Wash, Hot | Initial water temperature should not exceed 70°C or 160°F. |
| Machine Wash, Hot | Initial water temperature should not exceed 95°C or 200°F. |
| Machine Wash, Permanent Press | Garment may be machine laundered only on the setting designed to preserve Permanent Press with cool down or cold rinse prior to reduced spin. |
| Machine Wash, Gentle or Delicate | Garment may be machine laundered only on the setting designed for gentle agitation and/or reduced time for delicate items. |
| Hand Wash | Garment may be laundered through the use of water, detergent or soap and gentle hand manipulation. |
| Do Not Wash | Garment may not be safely laundered by any process. Normally accompanied by Dry Clean instructions. |
| Bleach When Needed | Any commercially available bleach product may be used in the laundering process. |
| Non-Chlorine Bleach | Only a non-chlorine, color-safe bleach may be used in the laundering process. |

NOTE (Wash): The system of dots indicating temperature range is the same for all wash procedures.

NOTE (Bleach): All (98+%) washable textiles are safe in some type of bleach. If bleach is not mentioned or represented by a symbol, any bleach may be used.

Apparel, Made-Ups and Home Furnishing

Apparel, Made-ups and Home Furnishing NSQF Level 2 – Class X Student Workbook COORDINATOR: Dr. Pinki Khanna, Associate Professor, Dept. of Home Science and Hospitality Management, PSS Central Institute of Vocational Education, Shyamla Hills, Bhopal

Student Workbook Apparel, Made-ups and Home Furnishing (Class X; NSQF Level 2) March, 2017 Publication No.: © PSS Central Institute of Vocational Education, 2017 ALL RIGHTS RESERVED

• No part of this publication may be reproduced, stored in a retrieval system or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise without prior permission of the publisher.
• This document is supplied subject to the condition that it shall not, by way of trade, be lent, resold, hired out or otherwise disposed of without the publisher’s consent in any form of binding or cover other than that in which it is published.
• The document is only for free circulation and distribution.

Coordinator: Dr. Pinki Khanna, Associate Professor, Department of Home Science & Hospitality Management
Production Assistant: Mr. A. M. Vinod Kumar
Layout, Cover Design and Laser Typesetting: Mr. Vinod K. Soni, C.O. Gr.II

Published by the Joint Director, PSS Central Institute of Vocational Education, Shyamla Hills, Bhopal-462 013, Madhya Pradesh, India Tel: +91-755-2660691, 2704100, Fax: +91-755-2660481, Web: http://www.psscive.nic.in

Preface

The National Curriculum Framework, 2005, recommends that children’s life at school must be linked to their life outside the school. This principle makes a departure from the legacy of bookish learning which continues to shape our system and causes a gap between the school, home, community and the workplace.

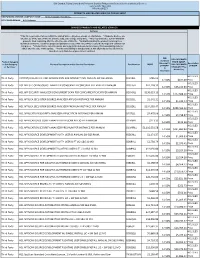

HCL Software's

IBM Branded, Fujitsu Branded and Panasonic Branded Products and Related Services and Cloud Services Contract DIR-TSO-3999

PRICING SHEET: PRODUCTS AND RELATED SERVICES
RESPONDING VENDOR COMPANY NAME: Sirius Computer Solutions
PROPOSED BRAND: HCL Software
BRANDED PRODUCTS AND RELATED SERVICES: Software

* This file is generated for use within the United States. All prices shown are US Dollars.
* Products & prices are effective as of the date of this file and are subject to change at any time.
* HCL may announce new or withdraw products from marketing after the effective date of this file.
* Notwithstanding the product list and prices identified on this file, customer proposals/quotations issued will reflect HCL's current offerings and commercial list prices.
* Product list is not all inclusive and may not include products removed from availability (sale) or added after the date of this update.
* Product availability is not guaranteed. Not all products listed below are found on every State/Local government contract.

| Product Category Description or SubCategory of MSRP or Services* | Product Description and/or Service Description | Part Number | MSRP | DIR Customer Discount % off MSRP* (2 Decimals) | DIR CUSTOMER PRICE (MSRP - DIR CUSTOMER DISCOUNT Plus Admin Fee) |
| --- | --- | --- | --- | --- | --- |
| HCL SLED Third Party Price | CNTENT/COLLAB ACC AND WEBSPH PRTL SVR INTRANET PVU ANNUAL SW S&S RNWL | E045KLL | $786.02 | 14.50% | $677.09 |
| HCL SLED Third Party | HCL APP SEC OPEN SOURCE ANALYZER CONSCAN PER CONCURRENT EVENT PER ANNUM | D20H6LL | $41,118.32 | | |

Monitoring Enterprise Collaboration Platform Change and the Building of Digital Transformation Capabilities

Monitoring Enterprise Collaboration Platform Change and the Building of Digital Transformation Capabilities: An Information Infrastructure Perspective by Clara Sabine Nitschke Approved Dissertation thesis for the partial fulfilment of the requirements for a Doctor of Economics and Social Sciences (Dr. rer. pol.) Fachbereich 4: Informatik Universität Koblenz-Landau Chair of PhD Board: Prof. Dr. Ralf Lämmel Chair of PhD Commission: Prof. Dr. Viorica Sofronie-Stokkermans Examiner and Supervisor: Prof. Dr. Susan P. Williams Further Examiners: Prof. Dr. Petra Schubert, Prof. Dr. Catherine A. Hardy Date of the doctoral viva: 28/07/2021 Acknowledgements Many thanks to all the people who supported me on my PhD journey, a life-changing experience. This work was funded by two research grants from the Deutsche Forschungsgemeinschaft (DFG). The related projects were designed as a joint work between two research groups at the University Koblenz-Landau. I am especially grateful to my supervisor Prof. Dr. Sue Williams who provided invaluable support throughout my whole project, particularly through her expertise, as well as her research impulses and discussions. Still, she gave me the freedom to shape my research, so many thanks! Further, I would like to thank my co-advisor Prof. Dr. Petra Schubert for very constructive feedback through the years. I am thankful for having had the unique opportunity to be part of the Center for Enterprise Information Research (CEIR) team with excellent researchers and the IndustryConnect initiative, which helped me bridge the gap between academia and ‘real world’ cases. IndustryConnect, founded by Prof. Dr. Schubert and Prof. Dr. Williams, enabled me to participate in long-term practice-oriented research with industry, a privilege that most other doctoral students cannot enjoy. -

Investor Release

INVESTOR RELEASE Noida, India, January 15th, 2021

Revenue at US$ 10,022 mn; up 3.6% YoY in US$ and Constant Currency
EBITDA margin at 26.5% (US GAAP); EBITDA margin at 27.4% (Ind AS); EBIT margin at 21.5%
Net Income at US$ 1,781 mn (Net Income margin at 17.8%) up 19.8% YoY
Revenue at ₹ 74,327 crores; up 9.2% YoY
Net Income at ₹ 13,202 crores; up 26.0% YoY

Revenue at US$ 2,617 mn; up 4.4% QoQ & up 2.9% YoY
Revenue in Constant Currency; up 3.5% QoQ & up 1.1% YoY
EBITDA margin at 28.2% (US GAAP); EBITDA margin at 29.1% (Ind AS); EBIT margin at 22.9%
Net Income at US$ 540 mn (Net Income margin at 20.6%) up 27.3% QoQ & up 26.5% YoY
Revenue at ₹ 19,302 crores; up 3.8% QoQ & up 6.4% YoY
Net Income at ₹ 3,982 crores; up 26.7% QoQ & up 31.1% YoY

Revenue expected to grow QoQ between 2% and 3% in constant currency for Q4, FY’21, including DWS contribution. EBIT expected to be between 21.0% and 21.5% for FY’21.

Contents: Financial Highlights; Corporate Overview; Performance Trends; Financials in US$; Cash and Cash Equivalents, Investments & Borrowings; Revenue Analysis at Company Level; Constant Currency Reporting; Client Metrics; Headcount; Financials in ₹

(Amount in US $ Million)

| PARTICULARS | CY’20 | Margin | YoY | Quarter ended 31-Dec-2020 | Margin | QoQ | YoY |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Revenue | 10,022 | | 3.6% | 2,617 | | 4.4% | 2.9% |
| Revenue Growth (Constant Currency) | | | 3.6% | | | 3.5% | 1.1% |
| EBITDA | 2,655 | 26.5% | 19.9% | 738 | 28.2% | 10.5% | 17.7% |
| EBIT | 2,155 | 21.5% | 16.5% | 599 | 22.9% | 10.6% | 16.4% |
| Net Income | 1,781 | 17.8% | 19.8% | 540 | 20.6% | 27.3% | 26.5% |

(Amount in ₹ Crores) CALENDAR YEAR QUARTER ENDED

Syllabus: Art 4366-Interactive Design

Art 4348 - Interactive Design, 2013 Fall

SYLLABUS: ART 4366-INTERACTIVE DESIGN

INSTRUCTOR
Seiji Ikeda, Assistant Professor. Office: Fine Arts Building 369-C. Hours: Tuesdays, 10-11am.

CLASS INFORMATION
Art 4348 - Interactive Design, Section 1. Fine Arts Building 368-A, Monday and Wednesday, 11-1:50pm.

CATALOGUE DESCRIPTION
Comprehensive analysis of interactive concepts and the role of new media in relationship to the field of visual communications. The student will explore and construct innovative uses of interaction using coding and contemporary applications. May be repeated for credit. Prerequisite: ART 3354: SIGN AND SYMBOL, or permission of the advisor.

COURSE OBJECTIVES
The course will explore interactive media and trends in web design and development. The basic course structure is a combination of seminars (made up of lecture, readings, discussion, research of topics) and practical digital projects. The theoretical and sociological impact of today’s media and design on the culture and the individual will be emphasized. The class will research and implement effective ideas for solving visual problems in navigation, interface design, and layout design through the use of type, graphic elements, texture, motion and animation. Main projects will revolve around creating motion-based websites.

DESCRIPTION OF INSTRUCTIONAL METHODS
The structure of the class includes lectures, demonstrations, group discussion, individual and group critiques, and studio activities in and outside class. Projects will be assigned and will be due on scheduled dates. Each project will include an introduction to the specifics of what is expected and what concepts we are covering. At the completion of assigned projects a critique/class review will take place.

PhD and Supervisors Guide to ArcInTexETN

PhD and Supervisors Guide to ArcInTexETN

Welcome to ArcInTexETN

This guide to the ArcInTexETN gives an overview of the research program of the training network, the impact promised in our application and also an overview of major network-wide training activities as well as the work package structure of the network. For contact information, news and up-to-date information please check the ArcInTexETN web at www.arcintexetn.eu

We look forward to working with all of you to develop cross-disciplinary research and research education in the areas of architecture, textiles, fashion and interaction design.

Lars Hallnäs – Network Coordinator
Agneta Nordlund Andersson – Network Manager
Delia Dumitrescu – Director of Studies

Content
I. The ArcInTexETN research program
II. Impact of the ArcInTexETN
III. Work packages 2-4
IV. Summer schools, courses and workshops
V. Secondment
VI. Exploitation, dissemination and communication
VII. Overview ESR:s
VIII. Activities by year and month
IX. Legal guide

I. ArcInTexETN research program

and technical development into the design of new forms of living that will provide the foundations

Introduction to Chemistry

Introduction to Chemistry Author: Tracy Poulsen Supported by CK-12 Foundation

CK-12 Foundation is a non-profit organization with a mission to reduce the cost of textbook materials for the K-12 market both in the U.S. and worldwide. Using an open-content, web-based collaborative model termed the “FlexBook,” CK-12 intends to pioneer the generation and distribution of high-quality educational content that will serve both as core text as well as provide an adaptive environment for learning.

Copyright © 2010, CK-12 Foundation, www.ck12.org

Except as otherwise noted, all CK-12 Content (including CK-12 Curriculum Material) is made available to Users in accordance with the Creative Commons Attribution/Non-Commercial/Share Alike 3.0 Unported (CC-by-NC-SA) License (http://creativecommons.org/licenses/by-nc-sa/3.0/), as amended and updated by Creative Commons from time to time (the “CC License”), which is incorporated herein by this reference. Specific details can be found at http://about.ck12.org/terms.

Digital Proofer: Introduction to Chem... Authored by Tracy Poulsen. 8.5" x 11.0" (21.59 x 27.94 cm), Black & White on White paper, 250 pages. ISBN-13: 9781478298601, ISBN-10: 147829860X

Please carefully review your Digital Proof download for formatting, grammar, and design issues that may need to be corrected. We recommend that you review your book three times, with each time focusing on a different aspect.

1 Check the format, including headers, footers, page numbers, spacing, table of contents, and index.
2 Review any images or graphics and captions if applicable.

Facility Service Life Requirements

Whole Building Design Guide Federal Green Construction Guide for Specifiers

This is a guidance document with sample specification language intended to be inserted into project specifications on this subject as appropriate to the agency's environmental goals. Certain provisions, where indicated, are required for U.S. federal agency projects. Sample specification language is numbered to clearly distinguish it from advisory or discussion material. Each sample is preceded by identification of the typical location in a specification section where it would appear using the SectionFormat™ of the Construction Specifications Institute; the six-digit section number cited is per CSI MasterFormat™ 2004 and the five-digit section number cited parenthetically is per CSI MasterFormat™ 1995.

SECTION 01 81 10 (SECTION 01120) – FACILITY SERVICE LIFE REQUIREMENTS

SPECIFIER NOTE: Sustainable Federal Facilities: A Guide to Integrating Value Engineering, Life-Cycle Costing, and Sustainable Development, Federal Facilities Council Technical Report No. 142; 2001 http://www.wbdg.org/ccb/SUSFFC/fedsus.pdf, states that the total cost of facility ownership, which includes all costs an owner will incur over the course of the building’s service lifetime, is dominated by operation and maintenance costs. On average, design and construction expenditures, the “first costs” of a facility, will account for 5-10% of the total life-cycle costs. Land acquisition, conceptual planning, renewal or revitalization, and disposal will account for 5-35% of the total life-cycle costs. However, operation and maintenance will account for 60-85% of the total life-cycle costs. Service life planning can improve the economic and environmental impacts of the building. It manages the most costly portion of a building’s life-cycle costs, the operation and maintenance stage.

On the Subject of Laundry

Keep Talking and Nobody Explodes Mod Laundry On the Subject of Laundry Sorting and folding are the least of your worries. All the messes from the previous explosions must be cleaned up. Using the Laundry Symbol Reference L4UHDR9 and the rules below, determine the correct setting on the machine panel. Once satisfied, insert a coin. On error, a sock will be lost, and a strike gained. Determine the piece of clothing that has to be cleaned with the tables below. For all values higher than 5, subtract 6 from the total until the new number is less than 6. For all negative values, add 6 until you have a value between 0–5. The Clothing Item (table L41) is determined by the number of unsolved modules (excluding needy modules) + total amount of indicators. The Item Material (table L42) is determined by the total number of ports + the number of solved modules – battery holders. The Item Color (table L43) is determined by the last digit of serial number + batteries. Use washing instructions based on the material, drying instructions based on the color, and use ironing and special instructions based on the item. But, prioritize the following rules from top to bottom: If the color is Clouded Pearl ALWAYS use non-chlorine bleach. If the item is made out of leather, or in the color Jade Cluster, it can’t go above 120°F. To be safe ALWAYS wash at 80°F. If the item is a corset or the material is corduroy then use special instructions based on material. If the material is wool or the color is Star Lemon Quartz ALWAYS dry with high heat.
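The wrap-around rule and the three sums described in the excerpt above can be sketched in Python. This is only an illustration under stated assumptions: the `BombState` fields and both function names are hypothetical, and the rows of tables L41 to L43 are not reproduced in the excerpt, so the sketch returns row indices rather than item, material, or color names.

```python
from dataclasses import dataclass


@dataclass
class BombState:
    """Hypothetical container for the counts the excerpt refers to."""
    unsolved_modules: int   # excluding needy modules
    solved_modules: int
    indicators: int
    ports: int
    battery_holders: int
    batteries: int
    serial_last_digit: int


def wrap_to_table_index(value: int) -> int:
    """Bring any integer into the range 0-5.

    Subtracting 6 until the value is below 6, or adding 6 until it is
    non-negative, is the same as taking the value modulo 6. Python's %
    already returns a non-negative result for a positive modulus.
    """
    return value % 6


def laundry_indices(bomb: BombState) -> tuple[int, int, int]:
    """Return (item, material, color) row indices per the excerpt's rules."""
    item = wrap_to_table_index(bomb.unsolved_modules + bomb.indicators)
    material = wrap_to_table_index(
        bomb.ports + bomb.solved_modules - bomb.battery_holders
    )
    color = wrap_to_table_index(bomb.serial_last_digit + bomb.batteries)
    return item, material, color


if __name__ == "__main__":
    example = BombState(
        unsolved_modules=5, solved_modules=0, indicators=3,
        ports=1, battery_holders=3, batteries=4, serial_last_digit=7,
    )
    print(laundry_indices(example))
```

The priority rules (non-chlorine bleach, temperature cap, and so on) depend on the table contents and are left out of this sketch.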