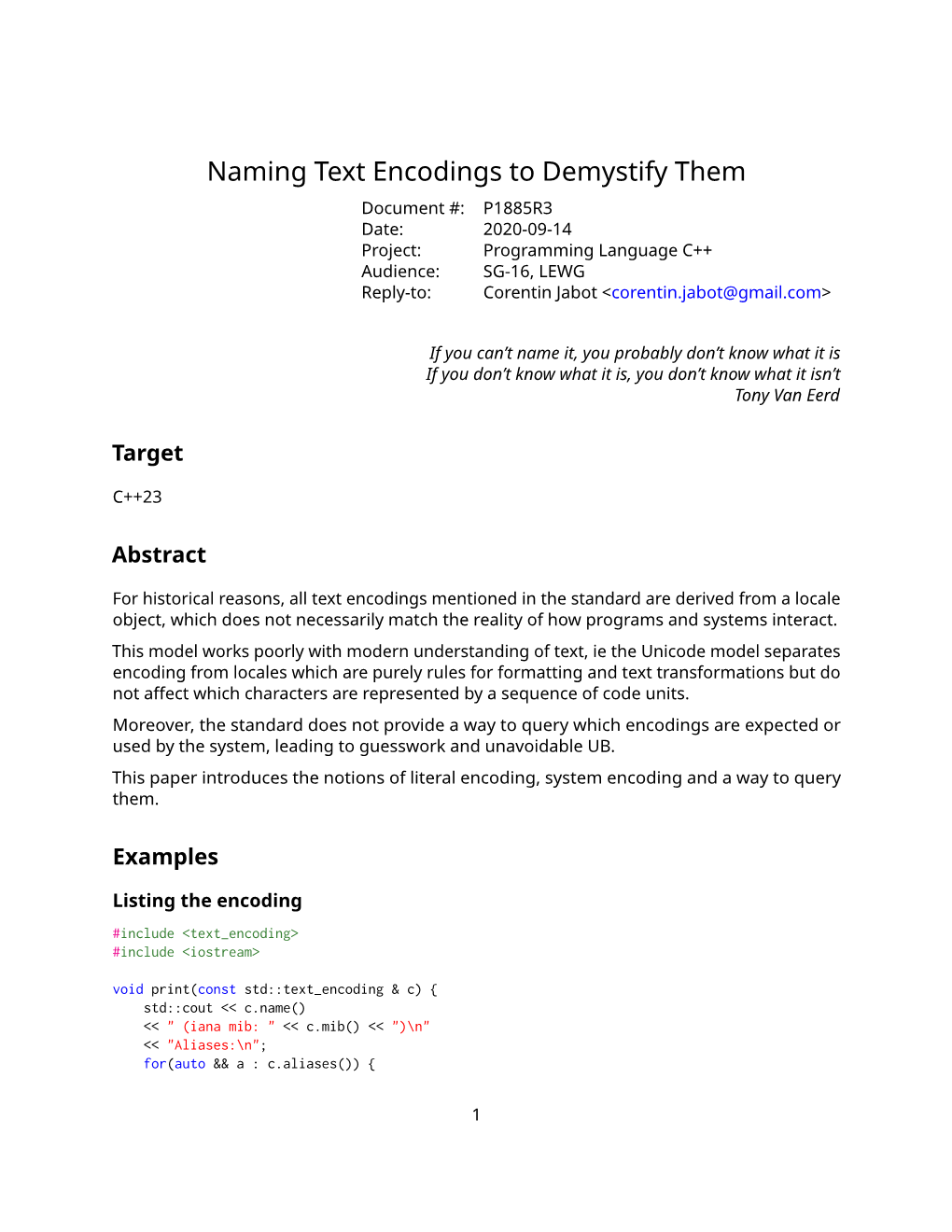

Naming Text Encodings to Demystify Them

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

INTERSKILL MAINFRAME QUARTERLY December 2011

INTERSKILL MAINFRAME QUARTERLY December 2011 Retaining Data Center Skills Inside This Issue and Knowledge Retaining Data Center Skills and Knowledge 1 Interskill Releases - December 2011 2 By Greg Hamlyn Vendor Briefs 3 This the final chapter of this four part series that briefly Taking Care of Storage 4 explains the data center skills crisis and the pros and cons of Learning Spotlight – Managing Projects 5 implementing a coaching or mentoring program. In this installment we will look at some of the steps to Tech-Head Knowledge Test – Utilizing ISPF 5 implementing a program such as this into your data center. OPINION: The Case for a Fresh Technical If you missed these earlier installments, click the links Opinion 6 below. TECHNICAL: Lost in Translation Part 1 - EBCDIC Code Pages 7 Part 1 – The Data Center Skills Crisis MAINFRAME – Weird and Unusual! 10 Part 2 – How Can I Prevent Skills Loss in My Data Center? Part 3 – Barriers to Implementing a Coaching or Mentoring Program should consider is the GROW model - Determine whether an external consultant should be Part Four – Implementing a Successful Coaching used (include pros and cons) - Create a basic timeline of the project or Mentoring Program - Identify how you will measure the effectiveness of the project The success of any project comes down to its planning. If - Provide some basic steps describing the coaching you already believe that your data center can benefit from and mentoring activities skills and knowledge transfer and that coaching and - Next phase if the pilot program is deemed successful mentoring will assist with this, then outlining a solid (i.e. -

Consonant Characters and Inherent Vowels

Global Design: Characters, Language, and More Richard Ishida W3C Internationalization Activity Lead Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 1 Getting more information W3C Internationalization Activity http://www.w3.org/International/ Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 2 Outline Character encoding: What's that all about? Characters: What do I need to do? Characters: Using escapes Language: Two types of declaration Language: The new language tag values Text size Navigating to localized pages Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 3 Character encoding Character encoding: What's that all about? Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 4 Character encoding The Enigma Photo by David Blaikie Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 5 Character encoding Berber 4,000 BC Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 6 Character encoding Tifinagh http://www.dailymotion.com/video/x1rh6m_tifinagh_creation Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 7 Character encoding Character set Character set ⴰ ⴱ ⴲ ⴳ ⴴ ⴵ ⴶ ⴷ ⴸ ⴹ ⴺ ⴻ ⴼ ⴽ ⴾ ⴿ ⵀ ⵁ ⵂ ⵃ ⵄ ⵅ ⵆ ⵇ ⵈ ⵉ ⵊ ⵋ ⵌ ⵍ ⵎ ⵏ ⵐ ⵑ ⵒ ⵓ ⵔ ⵕ ⵖ ⵗ ⵘ ⵙ ⵚ ⵛ ⵜ ⵝ ⵞ ⵟ ⵠ ⵢ ⵣ ⵤ ⵥ ⵯ Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 8 Character encoding Coded character set 0 1 2 3 0 1 Coded character set 2 3 4 5 6 7 8 9 33 (hexadecimal) A B 52 (decimal) C D E F Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 9 Character encoding Code pages ASCII Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 10 Character encoding Code pages ISO 8859-1 (Latin 1) Western Europe ç (E7) Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 11 Character encoding Code pages ISO 8859-7 Greek η (E7) Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 12 Character encoding Double-byte characters Standard Country No. -

ISO Basic Latin Alphabet

ISO basic Latin alphabet The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2] ISO basic Latin alphabet Uppercase Latin A B C D E F G H I J K L M N O P Q R S T U V W X Y Z alphabet Lowercase Latin a b c d e f g h i j k l m n o p q r s t u v w x y z alphabet Contents History Terminology Name for Unicode block that contains all letters Names for the two subsets Names for the letters Timeline for encoding standards Timeline for widely used computer codes supporting the alphabet Representation Usage Alphabets containing the same set of letters Column numbering See also References History By the 1960s it became apparent to thecomputer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology Name for Unicode block that contains all letters The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin". -

Reference & Manual

DynaPDF 4.0 Reference & Manual API Reference Version 4.0.59 September 16, 2021 Legal Notices Copyright: © 2003-2021 Jens Boschulte, DynaForms GmbH. All rights reserved. DynaForms GmbH Burbecker Street 24 D-58285 Gevelsberg, Germany Trade Register HRB 9770, District Court Hagen CEO Jens Boschulte Phone: ++49 23 32-666 78 37 Fax: ++49 23 32-666 78 38 If you have questions please send an email to [email protected], or contact us by phone. This publication and the information herein is furnished as is, is subject to change without notice, and should not be construed as a commitment by DynaForms GmbH. DynaForms assumes no responsibility or liability for any errors or inaccuracies, makes no warranty of any kind (express, implied or statutory) with respect to this publication, and expressly disclaims any and all warranties of merchantability, fitness for particular purposes and no infringement of third-party rights. Adobe, Acrobat, and PostScript are trademarks of Adobe Systems Inc. AIX, IBM, and OS/390, are trademarks of International Business Machines Corporation. Microsoft, Windows, and Windows NT are trademarks of Microsoft Corporation. Apple, Mac OS, and Safari are trademarks of Apple Computer, Inc. registered in the United States and other countries. TrueType is a trademark of Apple Computer, Inc. Unicode and the Unicode logo are trademarks of Unicode, Inc. UNIX is a trademark of The Open Group. Solaris is a trademark of Sun Microsystems, Inc. Tru64 is a trademark of Hewlett-Packard. Linux is a trademark of Linus Torvalds. Other company product and service names may be trademarks or service marks of others. -

Unicode and Code Page Support

Natural for Mainframes Unicode and Code Page Support Version 4.2.6 for Mainframes October 2009 This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners. Table of Contents 1 Unicode and Code Page Support .................................................................................... 1 2 Introduction ..................................................................................................................... 3 About Code Pages and Unicode ................................................................................ 4 About Unicode and Code Page Support in Natural .................................................. 5 ICU on Mainframe Platforms ..................................................................................... 6 3 Unicode and Code Page Support in the Natural Programming Language .................... 7 Natural Data Format U for Unicode-Based Data ....................................................... 8 Statements .................................................................................................................. 9 Logical -

HAIL: an Algorithm for the Hardware Accelerated Identification of Languages, Master's Thesis, May 2006

Washington University in St. Louis Washington University Open Scholarship All Computer Science and Engineering Research Computer Science and Engineering Report Number: WUCSE-2006-36 2006-01-01 HAIL: An Algorithm for the Hardware Accelerated Identification of Languages, Master's Thesis, May 2006 Charles M. Kastner This thesis examines in detail the Hardware-Accelerated Identification of Languages (HAIL) project. The goal of HAIL is to provide an accurate means to identify the language and encoding used in streaming content, such as documents passed over a high-speed network. HAIL has been implemented on the Field-programmable Port eXtender (FPX), an open hardware platform developed at Washington University in St. Louis. HAIL can accurately identify the primary languages and encodings used in text at rates much higher than what can be achieved by software algorithms running on microprocessors. Follow this and additional works at: https://openscholarship.wustl.edu/cse_research Part of the Computer Engineering Commons, and the Computer Sciences Commons Recommended Citation Kastner, Charles M., " HAIL: An Algorithm for the Hardware Accelerated Identification of Languages, Master's Thesis, May 2006" Report Number: WUCSE-2006-36 (2006). All Computer Science and Engineering Research. https://openscholarship.wustl.edu/cse_research/187 Department of Computer Science & Engineering - Washington University in St. Louis Campus Box 1045 - St. Louis, MO - 63130 - ph: (314) 935-6160. Department of Computer Science & Engineering 2006-36 HAIL: An Algorithm for the Hardware Accelerated Identification of Languages, Master's Thesis, May 2006 Authors: Charles M. Kastner Corresponding Author: [email protected] Web Page: http://www.arl.wustl.edu/projects/fpx/reconfig.htm Abstract: This thesis examines in detail the Hardware-Accelerated Identification of Languages (HAIL) project. -

Iso/Iec 8632-4:1999(E)

This is a preview - click here to buy the full publication INTERNATIONAL ISO/IEC STANDARD 8632-4 Second edition 1999-12-01 Information technology — Computer graphics — Metafile for the storage and transfer of picture description information — Part 4: Clear text encoding Technologies de l'information — Infographie — Métafichier de stockage et de transfert des informations de description d'images — Partie 4: Codage en clair des textes Reference number ISO/IEC 8632-4:1999(E) © ISO/IEC 1999 ISO/IEC 8632-4:1999(E) This is a preview - click here to buy the full publication PDF disclaimer This PDF file may contain embedded typefaces. In accordance with Adobe's licensing policy, this file may be printed or viewed but shall not be edited unless the typefaces which are embedded are licensed to and installed on the computer performing the editing. In downloading this file, parties accept therein the responsibility of not infringing Adobe's licensing policy. The ISO Central Secretariat accepts no liability in this area. Adobe is a trademark of Adobe Systems Incorporated. Details of the software products used to create this PDF file can be found in the General Info relative to the file; the PDF-creation parameters were optimized for printing. Every care has been taken to ensure that the file is suitable for use by ISO member bodies. In the unlikely event that a problem relating to it is found, please inform the Central Secretariat at the address given below. © ISO/IEC 1999 All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester. -

Vntex — Typesetting Vietnamese Hàn Thế Thành Reinhard Kotucha

VnTEX — Typesetting Vietnamese Hàn Thế Thành Reinhard Kotucha Abstract VnTEX is an extension to Donald Knuth’s TEX typesetting system which provides support for typesetting Vietnamese. The primary site of VnTEX is http://vntex.sf.net. 1 Where to get Help The current maintainers of VnTEX are: I Hàn Thế Thành [email protected] I Reinhard Kotucha [email protected] I Werner Lemberg [email protected] There is a mailing list (very low traffic) for questions about VnTEX and typesetting Vietnamese. To subscribe to the list, visit: http://lists.sourceforge.net/lists/listinfo/vntex-users There is also a Wiki: http://vntex.info 2 Related Documents The following files are part of the VnTEX distribution I Hàn Thế Thành, Hỗ trợ tiếng Việt cho TEX I Hàn Thế Thành, Minimal steps to typeset Vietnamese I Hàn Thế Thành và Thái Phú Khánh Hòa, Dùng font với VnTEX The following files are not part of VnTEX but might be part of the TEX distribution you are using. I The American Mathematical Society, Hướng dẫn sử dụng gói amsmath, http://ctan.org/tex-archive/info/amslatex/vietnamese/amsldoc-vi.pdf http://ctan.org/tex-archive/info/amslatex/vietnamese/amsldoc-print-vi.pdf I H. Partl, E. Schlegl, I. Hyna, T. Oetiker, Một tài liệu ngắn gọn giới thiệu về LATEX 2", Translated by Nguyễn Tân Khoa. http://ctan.org/tex-archive/info/lshort/vietnamese/lshort-vi.pdf I Wolfgang May, Andreas Schlechte, Mở rộng môi trường định lý. Translated by Huỳnh Kỳ Anh. http://ctan.org/tex-archive/info/translations/vn/ntheorem-doc-vn.pdf 1 3 Typesetting Vietnamese In order to typeset Vietnamese, you need a text editor which supports Vietnamese. -

Legacy Character Sets & Encodings

Legacy & Not-So-Legacy Character Sets & Encodings Ken Lunde CJKV Type Development Adobe Systems Incorporated bc ftp://ftp.oreilly.com/pub/examples/nutshell/cjkv/unicode/iuc15-tb1-slides.pdf Tutorial Overview dc • What is a character set? What is an encoding? • How are character sets and encodings different? • Legacy character sets. • Non-legacy character sets. • Legacy encodings. • How does Unicode fit it? • Code conversion issues. • Disclaimer: The focus of this tutorial is primarily on Asian (CJKV) issues, which tend to be complex from a character set and encoding standpoint. 15th International Unicode Conference Copyright © 1999 Adobe Systems Incorporated Terminology & Abbreviations dc • GB (China) — Stands for “Guo Biao” (国标 guóbiâo ). — Short for “Guojia Biaozhun” (国家标准 guójiâ biâozhün). — Means “National Standard.” • GB/T (China) — “T” stands for “Tui” (推 tuî ). — Short for “Tuijian” (推荐 tuîjiàn ). — “T” means “Recommended.” • CNS (Taiwan) — 中國國家標準 ( zhôngguó guójiâ biâozhün) in Chinese. — Abbreviation for “Chinese National Standard.” 15th International Unicode Conference Copyright © 1999 Adobe Systems Incorporated Terminology & Abbreviations (Cont’d) dc • GCCS (Hong Kong) — Abbreviation for “Government Chinese Character Set.” • JIS (Japan) — 日本工業規格 ( nihon kôgyô kikaku) in Japanese. — Abbreviation for “Japanese Industrial Standard.” — 〄 • KS (Korea) — 한국 공업 규격 (韓國工業規格 hangug gongeob gyugyeog) in Korean. — Abbreviation for “Korean Standard.” — ㉿ — Designation change from “C” to “X” on August 20, 1997. 15th International Unicode Conference Copyright © 1999 Adobe Systems Incorporated Terminology & Abbreviations (Cont’d) dc • TCVN (Vietnam) — Tiu Chun Vit Nam in Vietnamese. — Means “Vietnamese Standard.” • CJKV — Chinese, Japanese, Korean, and Vietnamese. 15th International Unicode Conference Copyright © 1999 Adobe Systems Incorporated What Is A Character Set? dc • A collection of characters that are intended to be used together to create meaningful text. -

Basis Technology Unicode対応ライブラリ スペックシート 文字コード その他の名称 Adobe-Standard-Encoding A

Basis Technology Unicode対応ライブラリ スペックシート 文字コード その他の名称 Adobe-Standard-Encoding Adobe-Symbol-Encoding csHPPSMath Adobe-Zapf-Dingbats-Encoding csZapfDingbats Arabic ISO-8859-6, csISOLatinArabic, iso-ir-127, ECMA-114, ASMO-708 ASCII US-ASCII, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO646-US, us, IBM367, csASCI big-endian ISO-10646-UCS-2, BigEndian, 68k, PowerPC, Mac, Macintosh Big5 csBig5, cn-big5, x-x-big5 Big5Plus Big5+, csBig5Plus BMP ISO-10646-UCS-2, BMPstring CCSID-1027 csCCSID1027, IBM1027 CCSID-1047 csCCSID1047, IBM1047 CCSID-290 csCCSID290, CCSID290, IBM290 CCSID-300 csCCSID300, CCSID300, IBM300 CCSID-930 csCCSID930, CCSID930, IBM930 CCSID-935 csCCSID935, CCSID935, IBM935 CCSID-937 csCCSID937, CCSID937, IBM937 CCSID-939 csCCSID939, CCSID939, IBM939 CCSID-942 csCCSID942, CCSID942, IBM942 ChineseAutoDetect csChineseAutoDetect: Candidate encodings: GB2312, Big5, GB18030, UTF32:UTF8, UCS2, UTF32 EUC-H, csCNS11643EUC, EUC-TW, TW-EUC, H-EUC, CNS-11643-1992, EUC-H-1992, csCNS11643-1992-EUC, EUC-TW-1992, CNS-11643 TW-EUC-1992, H-EUC-1992 CNS-11643-1986 EUC-H-1986, csCNS11643_1986_EUC, EUC-TW-1986, TW-EUC-1986, H-EUC-1986 CP10000 csCP10000, windows-10000 CP10001 csCP10001, windows-10001 CP10002 csCP10002, windows-10002 CP10003 csCP10003, windows-10003 CP10004 csCP10004, windows-10004 CP10005 csCP10005, windows-10005 CP10006 csCP10006, windows-10006 CP10007 csCP10007, windows-10007 CP10008 csCP10008, windows-10008 CP10010 csCP10010, windows-10010 CP10017 csCP10017, windows-10017 CP10029 csCP10029, windows-10029 CP10079 csCP10079, windows-10079 -

Catalogue 1986-99

PUBLICATIONS BY SUBJECT LIFE SCIENCES .............................................................................................................................................. 1 Nuclear Medicine (including Radiopharmaceuticals) ............................................................................ 1 Radiation Biology........................................................................................................................................ 2 Medical Physics (including Dosimetry) .................................................................................................... 2 FOOD AND AGRICULTURE ............................................................................................................................ 4 Food Irradiation .......................................................................................................................................... 4 Insect and Pest Control.............................................................................................................................. 5 Mutation Plant Breeding............................................................................................................................ 6 Plant Biotechnology.................................................................................................................................... 7 Soil Fertility and Irrigation.......................................................................................................................... 7 Agrochemicals ........................................................................................................................................... -

Implementing Cross-Locale CJKV Code Conversion

Implementing Cross-Locale CJKV Code Conversion Ken Lunde CJKV Type Development Adobe Systems Incorporated bc ftp://ftp.oreilly.com/pub/examples/nutshell/ujip/unicode/iuc13-c2-paper.pdf ftp://ftp.oreilly.com/pub/examples/nutshell/ujip/unicode/iuc13-c2-slides.pdf Code Conversion Basics dc • Algorithmic code conversion — Within a single locale: Shift-JIS, EUC-JP, and ISO-2022-JP — A purely mathematical process • Table-driven code conversion — Required across locales: Chinese ↔ Japanese — Required when dealing with Unicode — Mapping tables are required — Can sometimes be faster than algorithmic code conversion— depends on the implementation September 10, 1998 Copyright © 1998 Adobe Systems Incorporated Code Conversion Basics (Cont’d) dc • CJKV character set differences — Different number of characters — Different ordering of characters — Different characters September 10, 1998 Copyright © 1998 Adobe Systems Incorporated Character Sets Versus Encodings dc • Common CJKV character set standards — China: GB 1988-89, GB 2312-80; GB 1988-89, GBK — Taiwan: ASCII, Big Five; CNS 5205-1989, CNS 11643-1992 — Hong Kong: ASCII, Big Five with Hong Kong extension — Japan: JIS X 0201-1997, JIS X 0208:1997, JIS X 0212-1990 — South Korea: KS X 1003:1993, KS X 1001:1992, KS X 1002:1991 — North Korea: ASCII (?), KPS 9566-97 — Vietnam: TCVN 5712:1993, TCVN 5773:1993, TCVN 6056:1995 • Common CJKV encodings — Locale-independent: EUC-*, ISO-2022-* — Locale-specific: GBK, Big Five, Big Five Plus, Shift-JIS, Johab, Unified Hangul Code — Other: UCS-2, UCS-4, UTF-7, UTF-8,