On Dead-End Detectors, the Traps They Set, and Trap Learning

Recommended publications

-

Scheherazade

SCHEHERAZADE The MPC Literary Magazine Issue 9 Scheherazade Issue 9 Managing Editors Windsor Buzza Gunnar Cumming Readers Jeff Barnard, Michael Beck, Emilie Bufford, Claire Chandler, Angel Chenevert, Skylen Fail, Shay Golub, Robin Jepsen, Davis Mendez Faculty Advisor Henry Marchand Special Thanks Michele Brock Diane Boynton Keith Eubanks Michelle Morneau Lisa Ziska-Marchand i Submissions Scheherazade considers submissions of poetry, short fiction, creative nonfiction, novel excerpts, creative nonfiction book excerpts, graphic art, and photography from students of Monterey Peninsula College. To submit your own original creative work, please follow the instructions for uploading at: http://www.mpc.edu/scheherazade-submission There are no limitations on style or subject matter; bilingual submissions are welcome if the writer can provide equally accomplished work in both languages. Please do not include your name or page numbers in the work. The magazine is published in the spring semester annually; submissions are accepted year-round. Scheherazade is available in print form at the MPC library, area public libraries, Bookbuyers on Lighthouse Avenue in Monterey, The Friends of the Marina Library Community Bookstore on Reservation Road in Marina, and elsewhere. The magazine is also published online at mpc.edu/scheherazade ii Contents Issue 9 Spring 2019 Cover Art: Grandfather’s Walnut Tree, by Windsor Buzza Short Fiction Thank You Cards, by Cheryl Ku ................................................................... 1 The Bureau of Ungentlemanly Warfare, by Windsor Buzza ......... 18 Springtime in Ireland, by Rawan Elyas .................................................. 35 The Divine Wind of Midnight, by Clark Coleman .............................. 40 Westley, by Alivia Peters ............................................................................. 65 The Headmaster, by Windsor Buzza ...................................................... 
74 Memoir Roller Derby Dreams, by Audrey Word ................................................ -

![Archons (Commanders) [NOTICE: They Are NOT Anlien Parasites], and Then, in a Mirror Image of the Great Emanations of the Pleroma, Hundreds of Lesser Angels](https://docslib.b-cdn.net/cover/8862/archons-commanders-notice-they-are-not-anlien-parasites-and-then-in-a-mirror-image-of-the-great-emanations-of-the-pleroma-hundreds-of-lesser-angels-438862.webp)

Archons (Commanders) [NOTICE: They Are NOT Anlien Parasites], and Then, in a Mirror Image of the Great Emanations of the Pleroma, Hundreds of Lesser Angels

A R C H O N S HIDDEN RULERS THROUGH THE AGES A R C H O N S HIDDEN RULERS THROUGH THE AGES WATCH THIS IMPORTANT VIDEO UFOs, Aliens, and the Question of Contact MUST-SEE THE OCCULT REASON FOR PSYCHOPATHY Organic Portals: Aliens and Psychopaths KNOWLEDGE THROUGH GNOSIS Boris Mouravieff - GNOSIS IN THE BEGINNING ...1 The Gnostic core belief was a strong dualism: that the world of matter was deadening and inferior to a remote nonphysical home, to which an interior divine spark in most humans aspired to return after death. This led them to an absorption with the Jewish creation myths in Genesis, which they obsessively reinterpreted to formulate allegorical explanations of how humans ended up trapped in the world of matter. The basic Gnostic story, which varied in details from teacher to teacher, was this: In the beginning there was an unknowable, immaterial, and invisible God, sometimes called the Father of All and sometimes by other names. “He” was neither male nor female, and was composed of an implicitly finite amount of a living nonphysical substance. Surrounding this God was a great empty region called the Pleroma (the fullness). Beyond the Pleroma lay empty space. The God acted to fill the Pleroma through a series of emanations, a squeezing off of small portions of his/its nonphysical energetic divine material. In most accounts there are thirty emanations in fifteen complementary pairs, each getting slightly less of the divine material and therefore being slightly weaker. The emanations are called Aeons (eternities) and are mostly named personifications in Greek of abstract ideas. -

Slayage, Number 16

Roz Kaveney A Sense of the Ending: Schrödinger's Angel This essay will be included in Stacey Abbott's Reading Angel: The TV Spinoff with a Soul, to be published by I. B. Tauris and appears here with the permission of the author, the editor, and the publisher. Go here to order the book from Amazon. (1) Joss Whedon has often stated that each year of Buffy the Vampire Slayer was planned to end in such a way that, were the show not renewed, the finale would act as an apt summation of the series so far. This was obviously truer of some years than others – generally speaking, the odd-numbered years were far more clearly possible endings than the even ones, offering definitive closure of a phase in Buffy’s career rather than a slingshot into another phase. Both Season Five and Season Seven were particularly planned as artistically satisfying conclusions, albeit with very different messages – Season Five arguing that Buffy’s situation can only be relieved by her heroic death, Season Seven allowing her to share, and thus entirely alleviate, slayerhood. Being the Chosen One is a fatal burden; being one of the Chosen Several Thousand is something a young woman might live with. (2) It has never been the case that endings in Angel were so clear-cut and each year culminated in a slingshot ending, an attention-grabber that kept viewers interested by allowing them to speculate on where things were going. Season One ended with the revelation that Angel might, at some stage, expect redemption and rehumanization – the Shanshu of the souled vampire – as the reward for his labours, and with the resurrection of his vampiric sire and lover, Darla, by the law firm of Wolfram & Hart and its demonic masters (‘To Shanshu in LA’, 1022). -

Buffy & Angel Watching Order

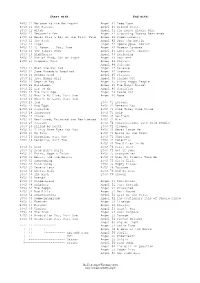

Start with: End with: BtVS 11 Welcome to the Hellmouth Angel 41 Deep Down BtVS 11 The Harvest Angel 41 Ground State BtVS 11 Witch Angel 41 The House Always Wins BtVS 11 Teacher's Pet Angel 41 Slouching Toward Bethlehem BtVS 12 Never Kill a Boy on the First Date Angel 42 Supersymmetry BtVS 12 The Pack Angel 42 Spin the Bottle BtVS 12 Angel Angel 42 Apocalypse, Nowish BtVS 12 I, Robot... You, Jane Angel 42 Habeas Corpses BtVS 13 The Puppet Show Angel 43 Long Day's Journey BtVS 13 Nightmares Angel 43 Awakening BtVS 13 Out of Mind, Out of Sight Angel 43 Soulless BtVS 13 Prophecy Girl Angel 44 Calvary Angel 44 Salvage BtVS 21 When She Was Bad Angel 44 Release BtVS 21 Some Assembly Required Angel 44 Orpheus BtVS 21 School Hard Angel 45 Players BtVS 21 Inca Mummy Girl Angel 45 Inside Out BtVS 22 Reptile Boy Angel 45 Shiny Happy People BtVS 22 Halloween Angel 45 The Magic Bullet BtVS 22 Lie to Me Angel 46 Sacrifice BtVS 22 The Dark Age Angel 46 Peace Out BtVS 23 What's My Line, Part One Angel 46 Home BtVS 23 What's My Line, Part Two BtVS 23 Ted BtVS 71 Lessons BtVS 23 Bad Eggs BtVS 71 Beneath You BtVS 24 Surprise BtVS 71 Same Time, Same Place BtVS 24 Innocence BtVS 71 Help BtVS 24 Phases BtVS 72 Selfless BtVS 24 Bewitched, Bothered and Bewildered BtVS 72 Him BtVS 25 Passion BtVS 72 Conversations with Dead People BtVS 25 Killed by Death BtVS 72 Sleeper BtVS 25 I Only Have Eyes for You BtVS 73 Never Leave Me BtVS 25 Go Fish BtVS 73 Bring on the Night BtVS 26 Becoming, Part One BtVS 73 Showtime BtVS 26 Becoming, Part Two BtVS 74 Potential BtVS 74 -

Goodbye, Kant!

1 Kant’s revolution1 Why start a revolution When he died at the age of eighty on the February 12, 1804, Kant was as forgetful as Ronald Reagan was at the end of his life.2 To overcome this, he wrote everything down on a large sheet of paper, on which metaphysical reflections are mixed in with laundry bills. He was the melancholy parody of what Kant regarded as the highest principle of his own philosophy, namely that an “I think” must accompany every representation or that there is a single world for the self that perceives it, that takes account of it, that remembers it, and that determines it through the categories. This is an idea that had done the rounds under various guises in philosophy before Kant, but he crucially transformed it. The reference to subjectivity did not conflict with objectivity, but rather made it possible inasmuch as the self is not just a disorderly bundle of sensations but a principle of order endowed with two pure forms of intuition—those of space and time—and with twelve categories—among which “substance” and “cause”—that constitute the real sources of what we call “objectivity.” The Copernican revolution to which Kant nailed his philosophical colors thus runs as follows: “Instead of asking what things are like in themselves, we should ask how they must be if they are to be known by us.”3 It is still worth asking why Kant should have undertaken so heroic and dangerous a task and why he, a docile subject of the enlightened despot the King of Prussia, to whom he had once even dedicated a poem,4 should have had to start a revolution. -

Cynthia Fuchs Epstein the City of Brotherly (And Sisterly) Society, Especially in the Current Political Love Welcomed an Onslaught of More Climate

VOLUME 33 SEPTEMBER/OCTOBER 2005 NUMBER 7 th Profile of the ASA President. The ASA Celebrates Its 100 Birthday Pushing Social Boundaries: in the Nation’s Birthplace Cynthia Fuchs Epstein The City of Brotherly (and sisterly) society, especially in the current political Love welcomed an onslaught of more climate. by Judith Lorber, Graduate School Cynthia’s father graduated from than 5000 sociologists to the 2005 The political undertones of the theme and Brooklyn College, Stuyvesant High School and had one American Sociological Association were reflected in two of the plenary City University of New York year of college, where he became a Annual Meeting. The centennial meeting sessions. The first discussed the impor- socialist. He outgrew some of his early proved to be busy, successful, and tant shifts in the political terrain of the In 1976, Cynthia Fuchs Epstein and idealism about the possibility of creating historical for being the second largest nation—most notably a new surge Rose Laub Coser were in England an egalitarian society, but he was an meeting in ASA history and only the rightward in our major political institu- organizing an international conference untiring worker in the reform wing of second to top 5,000 registrants. This tions—in the 21st century. The session, on women elites at King’s College, the Democratic Party until his death at number is quite an improvement over which featured distinguished historian Cambridge. Because they also shared a the age of 91. the 115 attendees at the inaugural ASA Dan T. Carter, two well-known legal love of gourmet food, they thought they Cynthia participated in a Zionist meeting. -

No Proxis on Judgment Day Revelation 20

No Proxies on Judgment Day: Revelation 20 By Charles Feinberg Is specifically no proxies on Judgment Day? Now I know how we use the word proxies today. We use that of people to dye their hair by using peroxide. But that's not what I mean by proxies. Proxy is a word that means a substitute. One who as a substitute is allowed to vote in a certain organization when a regular member of the organization is not there or is absent, detained for some reason. No substitutes then, no proxies on Judgment Day. I'm going to ask you to turn to a portion of Scripture, though it is brief. I know of no comparable portion anywhere in the Word of God and certainly not outside of it. Fifteen verses that are so remarkable in their disclosure of a piling up of events in the future. I'm speaking of the 20th chapter of the revelation. This chapter is intensely important and intensely significant because in a sense it marks a continental divide in the Bible. You may take all commentaries on the Revelation and all theological works. Theologies as we call them and they divide right down the line. Clean cut mark of demarcation, a line of demarcation between those who treat this 20th chapter of the Revelation one way and those who treat it another way. It is a dividing line. It is a chapter where if you have been interpreting the Bible incorrectly from Genesis on to this is pay day you come to a dead end. -

The Frontier Myth and the Frontier Thesis in Contemporary Genre Fiction

THE FRONTIER MYTH AND THE FRONTIER THESIS IN CONTEMPORARY GENRE FICTION _________________________________________________ A Dissertation presented to the Faculty of the Graduate School at the University of Missouri-Columbia _________________________________________________ In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy _________________________________________________ by JIHUN YOO Dr. Andrew Hoberek, Dissertation Supervisor DECEMBER 2015 The undersigned, appointed by the dean of the Graduate School, have examined the dissertation entitled THE FRONTIER MYTH AND THE FRONTIER THESIS IN CONTEMPORARY GENRE FICTION presented by Jihun Yoo, a candidate for the degree of doctor of philosophy, and hereby certify that, in their opinion, it is worthy of acceptance. _________________________________________________ Professor Andrew Hoberek ________________________________________________ Professor Sw. Anand Prahlad ________________________________________________ Associate Professor Joanna Hearne ________________________________________________ Associate Professor Valerie Kaussen ACKNOWLEDGEMENTS I would like to thank my dissertation committee—Drs. Andrew Hoberek, Sw. Anand Prahlad, Joanna Hearne and Valerie Kaussen—for helping me with this project from the very beginning until now. I would like to thank, in particular, my dissertation advisor, Dr. Hoberek, for guiding me through the years. This dissertation wouldn’t have been possible without his wise advice and guidance. The support and encouragement of Drs. Dan Bauer and Kathy Albertson, and my colleagues at Georgia Southern University was also greatly appreciated. Especially, I would like to thank my family—my wife, Hyangki and my daughter, Yena—and both of my parents and in-laws who sacrificed so much to offer support and prayers during the Ph.D. program. Also, I want to thank pastor Hanjoo Park and church members at Korean First Presbyterian Church of Columbia for their support and prayers. 
-

IN the COURT of APPEALS of IOWA No. 1-734 / 10-1443 Filed

IN THE COURT OF APPEALS OF IOWA No. 1-734 / 10-1443 Filed December 7, 2011 STATE OF IOWA, Plaintiff-Appellee, vs. MARQUES DUPREE WILSON, Defendant-Appellant. ________________________________________________________________ Appeal from the Iowa District Court for Scott County, Charles H. Pelton, Judge. Marques Wilson appeals from his convictions for willful injury causing serious injury and intimidation with a dangerous weapon with intent. AFFIRMED. Mark C. Smith, State Appellate Defender, and Rachel C. Regenold, Assistant Appellate Defender, for appellant. Thomas J. Miller, Attorney General, Benjamin M. Parrott, Assistant Attorney General, Michael J. Walton, County Attorney, and Jerald Feuerbach, Assistant County Attorney, for appellee. Considered by Vogel, P.J., and Potterfield and Danilson, JJ. 2 VOGEL, P.J. Marques Wilson was charged with willful injury causing serious injury under Iowa Code section 708.4(1) (2009), and intimidation with a dangerous weapon with intent under Iowa Code section 708.6, from events that transpired on September 12, 2009, between Wilson and T. Wayne Allen. As the record contains sufficient evidence to uphold the jury’s verdicts on both charges, the district court properly refused Wilson’s motion for judgment of acquittal. In addition, Wilson’s ineffective-assistance-of-counsel claim fails because, under the narrow facts of this case, trial counsel did not breach an essential duty by not objecting to the use of the word “sustained” instead of “caused” in the jury instruction for willful injury causing serious injury. We therefore affirm. I. Background Facts & Proceedings In the early morning hours of September 12, 2009, Allen was at Genesis East Hospital in Davenport, Iowa. -

DECLARATION of Jane Sunderland in Support of Request For

Columbia Pictures Industries Inc v. Bunnell Doc. 373 Att. 1 Exhibit 1 Twentieth Century Fox Film Corporation Motion Pictures 28 DAYS LATER 28 WEEKS LATER ALIEN 3 Alien vs. Predator ANASTASIA Anna And The King (1999) AQUAMARINE Banger Sisters, The Battle For The Planet Of The Apes Beach, The Beauty and the Geek BECAUSE OF WINN-DIXIE BEDAZZLED BEE SEASON BEHIND ENEMY LINES Bend It Like Beckham Beneath The Planet Of The Apes BIG MOMMA'S HOUSE BIG MOMMA'S HOUSE 2 BLACK KNIGHT Black Knight, The Brokedown Palace BROKEN ARROW Broken Arrow (1996) BROKEN LIZARD'S CLUB DREAD BROWN SUGAR BULWORTH CAST AWAY CATCH THAT KID CHAIN REACTION CHASING PAPI CHEAPER BY THE DOZEN CHEAPER BY THE DOZEN 2 Clearing, The CLEOPATRA COMEBACKS, THE Commando Conquest Of The Planet Of The Apes COURAGE UNDER FIRE DAREDEVIL DATE MOVIE 4 Dockets.Justia.com DAY AFTER TOMORROW, THE DECK THE HALLS Deep End, The DEVIL WEARS PRADA, THE DIE HARD DIE HARD 2 DIE HARD WITH A VENGEANCE DODGEBALL: A TRUE UNDERDOG STORY DOWN PERISCOPE DOWN WITH LOVE DRIVE ME CRAZY DRUMLINE DUDE, WHERE'S MY CAR? Edge, The EDWARD SCISSORHANDS ELEKTRA Entrapment EPIC MOVIE ERAGON Escape From The Planet Of The Apes Everyone's Hero Family Stone, The FANTASTIC FOUR FAST FOOD NATION FAT ALBERT FEVER PITCH Fight Club, The FIREHOUSE DOG First $20 Million, The FIRST DAUGHTER FLICKA Flight 93 Flight of the Phoenix, The Fly, The FROM HELL Full Monty, The Garage Days GARDEN STATE GARFIELD GARFIELD A TAIL OF TWO KITTIES GRANDMA'S BOY Great Expectations (1998) HERE ON EARTH HIDE AND SEEK HIGH CRIMES 5 HILLS HAVE -

Dead End? John 11:20-27

resurrection-lcms.org Signs of Lent: Dead End? John 11:20-27 - March 29, 2020 - Sunday Morning Worship Schedule 10:00 am– Streaming Service WHAT’S HAPPENING TODAY? As we gather online, we continue to look at the signs of Lent. This morning we look at how when it looks like we come to a dead end and all hope is lost, that Jesus can change even that sign! As he proclaims to Martha, ““I am the resurrection and the life. The one who believes in me will live, even though they die; and whoever lives by believing in me will never die.” Then he asks her and asks us today amid the dead ends, “Do you believe this?” This is the question we will address today as we lift our hands in all our different locations! Holy Communion: If you would like to commune with us this morning, please read the Holy Communion statement in the friendship booklet. We encourage not only our members to celebrate together this gift from God, but also those visiting from sister congregations and those who share our confession regarding Holy Communion. If you have any questions, please speak with an elder or pastor. March 29, 2020 10:00 am Service PRELUDE WELCOME/ANNOUNCEMENTS OPENING HYMN “Oh, For a Thousand Tongues to Sing” LSB 528 verses 1, 3, 6, 7 Oh, for a thousand tongues to sing My great Redeemer's praise, The glories of my God and King, The triumphs of His grace! Jesus! The name that charms our fears, That bids our sorrows cease; 'Tis music in the sinner's ears, 'Tis life and health and peace. -

Season 2, Episode 3 Hell, the Devil and the Afterlife

Season 2, Episode 3 Hell, The Devil and The Afterlife Paul Swanson: Welcome to season two of Another Name for Everything, casual conversations with Richard Rohr responding to listener questions from his new book, The Universal Christ, and from season one of this podcast. Brie Stoner: As mentioned previously, this podcast is recorded on the grounds of The Center for Action and Contemplation and may contain the quirky sounds of our neighborhood and setting. We are your hosts. I’m Brie Stoner. Paul Swanson: And I’m Paul Swanson. Brie Stoner: We’re staff members of The Center for Action and Contemplation and students of this contemplative path, trying our best to live the wisdom of this tradition amidst late night grocery runs, laundry overwhelm, and the shifting state of our world. Paul Swanson: This is the third of twelve weekly episodes. Today, we’re addressing your questions on the themes of hell, and the devil, and afterlife. Brie Stoner: Richard, one of my favorite stories that you’ve shared with us is about Teresa of Ávila being asked about hell. Richard Rohr: Oh, yeah. Brie Stoner: Do you believe in hell? How does it go? Do you believe in hell? Richard Rohr: You know, we’ve got to be honest. We’re not sure it’s historical. Brie Stoner: Okay. Richard Rohr: But some insist it is. I’ve never read it footnoted. But the story is that the sisters asked her, “Do you believe in hell?” She said, “Oh, yes,” because she had to keep her orthodoxy with the Spanish Catholic Church.