Recognition and Prediction in a Network Music Performance System for Indian Percussion

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Musical Explorers Is Made Available to a Nationwide Audience Through Carnegie Hall’s Weill Music Institute

Weill Music Institute. Musical Explorers: My City, My Song. Teacher Guide. A Program of the Weill Music Institute at Carnegie Hall for Students in Grades K–2, 2016 | 2017. WEILL MUSIC INSTITUTE: Joanna Massey, Director, School Programs; Amy Mereson, Assistant Director, Elementary School Programs; Rigdzin Pema Collins, Coordinator, Elementary School Programs; Tom Werring, Administrative Assistant, School Programs. ADDITIONAL CONTRIBUTORS: Michael Daves Qian Yi Alsarah Nahid Abunama-Elgadi Etienne Charles Teni Apelian Yeraz Markarian Anaïs Tekerian Reph Starr Patty Dukes Shanna Lesniak Savannah Music Festival. PUBLISHING AND CREATIVE SERVICES: Carol Ann Cheung, Senior Editor; Eric Lubarsky, Senior Editor; Raphael Davison, Senior Graphic Designer. ILLUSTRATIONS: Sophie Hogarth. AUDIO PRODUCTION: Jeff Cook. Weill Music Institute at Carnegie Hall, 881 Seventh Avenue | New York, NY 10019. Phone: 212-903-9670 | Fax: 212-903-0758. [email protected] carnegiehall.org/MusicalExplorers. Musical Explorers is made available to a nationwide audience through Carnegie Hall’s Weill Music Institute. Lead funding for Musical Explorers has been provided by Ralph W. and Leona Kern. Major funding for Musical Explorers has been provided by the E.H.A. Foundation and The Walt Disney Company. Additional support has been provided by the Ella Fitzgerald Charitable Foundation, The Lanie & Ethel Foundation, and -

1st Progress Report. Scheme: “Safeguarding the Intangible

1st PROGRESS REPORT. Scheme: “Safeguarding the Intangible Cultural Heritage and Diverse Cultural Traditions of India”. Title of the Project/Purpose: “A study of various aspects of Khol: A Sattriya Musical Instrument”. Prepared by: Shri Bipul Ojah, Village Dalahati, Barpeta-781301, Assam. During the first reporting period I proceeded with my work as planned and took up two of the tasks mentioned in the plan. One was to acquire knowledge of the ‘Ga-Maan’, ‘Chok’, ‘Ghat’ etc. of the main time divisions (taal) of the Khol from the experienced masters and Sattriyas of the Satras belonging to the three main groups. The other was to prepare the pattern of the time division by a scientific method, maintaining the characteristic features of the Sattriya Khol on the basis of the acquired knowledge. For these two tasks, I first chose Barpeta Satra and the Satras of the Kamalabari group of Majuli, and apart from doing field study there, I acquired knowledge of the Khol from the masters there. In this course I talked to Sri Basistha Dev Sarma, Burha Sattriya of Barpeta Satra, Sri Nabajit Das, Deka Sattriya of Barpeta Satra, Sri Jagannath Barbayan (92 years), the chief ‘Bayan’ of Barpeta Satra, and Sri Akan Chandra Bayan, besides several distinguished Sattriya artists and writers such as Sri Nilkanta Sutradhar, Sri Gunindra Nath Ojah, Dr. Birinchi Kumar Das, Dr. Babul Chandra Das etc. Moreover, I studied various ‘Charit Books’ (biographies of the Vaishnavite saints), research books etc. and gathered various data on the Khol practiced in Barpeta Satra. After completing this work on the Khol of Barpeta Satra, I worked on the time division (taal) of the Khol practiced in the Satras of the Kamalabari group of Majuli. -

Final Senior Fellowship Report

FINAL SENIOR FELLOWSHIP REPORT. NAME OF THE FIELD: DANCE AND DANCE MUSIC. SUB FIELD: MANIPURI. FILE NO: CCRT/SF – 3/106/2015. A COMPARATIVE STUDY OF TWO VAISHNAVISM INFLUENCED CLASSICAL DANCE FORMS, SATRIYA AND MANIPURI, FROM NORTH EAST INDIA. NAME: REKHA TALUKDAR KALITA. VILL – SARPARA. PO – SARPARA. PS – PALASBARI (MIRZA). DIST – KAMRUP (ASSAM). PIN NO – 781122. MOBILE NO – 9854491051. HISTORY OF SATRIYA AND MANIPURI DANCE. Satriya Dance: To know the history of Satriya dance we must first mention that it is a unique and entirely original creation of the great Guru Mahapurusha Shri Shankardeva. Shri Shankardeva was a polymath: a saint, scholar, great poet, playwright, social-religious reformer and a figure of importance in the cultural and religious history of Assam and India. In the 15th and 16th centuries, Mahapurusha Shri Shankardeva, the founder of Nava Vaishnavism, created the beautiful dance form which is used in the act called the Ankiya Bhaona. Today it is recognised as a prime Indian classical dance like Bharatnatyam, Odishi, Kathak etc. According to the Natya Shastra and Abhinaya Darpan, before Shankardeva's time, i.e. in the 2nd century BC, some traditional dances were performed in ancient Assam. Again, in the Kalika Purana, which was written in the 11th century, we find that at that time also there were uses of songs, musical instruments and dance along with Mudras of 108 types. Those Mudras were later used in the Ojha Pali dance and Satriya dance as the “Nritya” and “Nritya hasta”. Besides, we find proof that in the temples of ancient Assam “Nati” and “Devadashi Nritya” were used to please God. -

Transcription and Analysis of Ravi Shankar's Morning Love For

Louisiana State University LSU Digital Commons LSU Doctoral Dissertations Graduate School 2013 Transcription and analysis of Ravi Shankar's Morning Love for Western flute, sitar, tabla and tanpura Bethany Padgett Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Music Commons Recommended Citation Padgett, Bethany, "Transcription and analysis of Ravi Shankar's Morning Love for Western flute, sitar, tabla and tanpura" (2013). LSU Doctoral Dissertations. 511. https://digitalcommons.lsu.edu/gradschool_dissertations/511 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please [email protected]. TRANSCRIPTION AND ANALYSIS OF RAVI SHANKAR’S MORNING LOVE FOR WESTERN FLUTE, SITAR, TABLA AND TANPURA A Written Document Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Musical Arts in The School of Music by Bethany Padgett B.M., Western Michigan University, 2007 M.M., Illinois State University, 2010 August 2013 ACKNOWLEDGEMENTS I am entirely indebted to many individuals who have encouraged my musical endeavors and research and made this project and my degree possible. I would first and foremost like to thank Dr. Katherine Kemler, professor of flute at Louisiana State University. She has been more than I could have ever hoped for in an advisor and mentor for the past three years. -

Detecting Tāla Computationally in Polyphonic Context - A Novel Approach

Detecting tāla Computationally in Polyphonic Context - A Novel Approach. Susmita Bhaduri, Anirban Bhaduri, Dipak Ghosh. Deepa Ghosh Research Foundation, Kolkata-700031, India. [email protected] [email protected] [email protected] Abstract: In North-Indian-Music-System (NIMS), tablā is mostly used as percussive accompaniment for vocal-music in polyphonic-compositions. The human auditory system uses perceptual grouping of musical-elements and easily filters the tablā component, thereby decoding prominent rhythmic features like tāla and tempo from a polyphonic-composition. For Western music, much work has been reported on automated drum analysis of polyphonic-composition. However, attempts at computational analysis of tāla by separating the tablā-signal from the mixed signal in NIMS have not been successful. Tablā is played with two components, right and left. The right-hand component has frequency overlap with voice and other instruments. So, tāla analysis of polyphonic-composition, by accurately separating […] the left-tablā strokes, which, after matching with the grammar of the tāla-system, determines the tāla and tempo of the composition. A large number of polyphonic (vocal + tablā + other-instruments) compositions have been analyzed with the methodology and the result clearly reveals the effectiveness of the proposed techniques. Keywords: Left-tablā drum, Tāla detection, Tempo detection, Polyphonic composition, Cyclic pattern, North Indian Music System. 1 Introduction: Current research in Music-Information-Retrieval (MIR) is largely limited to Western music cultures and it does not address the North-Indian-Music-System, hereafter NIMS, cultures in general. -

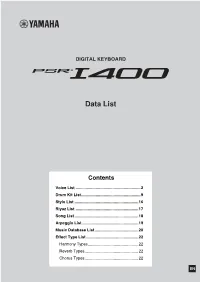

PSR-I400 Data List Voice List

DIGITAL KEYBOARD Data List. Contents: Voice List; Drum Kit List; Style List; Riyaz List; Song List; Arpeggio List; Music Database List; Effect Type List (Harmony Types, Reverb Types, Chorus Types). Voice List. Maximum Polyphony: The instrument has 48-note maximum polyphony. This means that it can play a maximum of up to 48 notes at once, regardless of what functions are used. Auto accompaniment uses a number of the available notes, so when auto accompaniment is used the total number of available notes for playing on the keyboard is correspondingly reduced. The same applies to the Split Voice and Song functions. If the maximum polyphony is exceeded, earlier played notes will be cut off and the most recent notes have […] NOTE: • The Voice List includes MIDI program change numbers for each voice. Use these program change numbers when playing the instrument via MIDI from an external device. • Program change numbers are often specified as numbers “0–127.” Since this list uses a “1–128” -
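The polyphony limit and the program change numbering in this excerpt can be illustrated with a short sketch. It is only an illustration under stated assumptions: the excerpt is cut off before it finishes describing what happens at the limit, so the oldest-note-first cut-off below is an assumed reading, and the names `VoicePool` and `to_midi_program` are hypothetical and do not come from the data list. The helper relies on the fact that a MIDI Program Change message carries a value from 0 to 127, so a listing numbered 1–128 is offset by one.

```python
from collections import deque

MAX_POLYPHONY = 48  # limit stated in the data list excerpt


class VoicePool:
    """Hypothetical note pool that cuts off the earliest note when full."""

    def __init__(self, limit=MAX_POLYPHONY):
        self.limit = limit
        self.active = deque()  # oldest sounding note sits on the left

    def note_on(self, note):
        """Start a note and return the note that was cut off, if any."""
        dropped = None
        if len(self.active) >= self.limit:
            # Assumption: the earliest played note is the one cut off.
            dropped = self.active.popleft()
        self.active.append(note)
        return dropped


def to_midi_program(listed_number):
    """Map a 1-128 listing number to the 0-127 MIDI data value."""
    if not 1 <= listed_number <= 128:
        raise ValueError("listed program numbers run from 1 to 128")
    return listed_number - 1
```

With a pool limited to two notes, a third `note_on` call returns the first note that was played, which mirrors the cut-off behaviour the excerpt describes.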

Performing Arts (91 – 95)

PERFORMING ARTS (91 – 95). Aims: 1. To develop a perceptive, sensitive and critical response to music, dance and drama in its historical and cultural contexts. 2. To stimulate and develop an appreciation and enjoyment of music, dance and drama through active involvement. 3. To balance the demands of disciplined skills and challenging standards in an environment of emotional, aesthetic, imaginative and creative development. 4. To develop performing skills, and so encourage a participation in the wide range of performance activities likely to be found in the school and community. 5. To develop a co-operative attitude through the organisation and participation associated with music, dance and drama. 6. To provide an appropriate body of knowledge with understanding, and to develop appropriate skills as a basis for further study or leisure or both. One of the following five syllabuses may be offered: Hindustani Music (91), Carnatic Music (92), Western Music (93), Indian Dance (94), Drama (95). HINDUSTANI MUSIC (91), CLASS IX. There will be one paper of two hours carrying 100 marks and Internal Assessment of 100 marks. The syllabus is divided into three sections: Section A - Vocal Music, Section B - Instrumental Music, Section C - Tabla. Candidates will be required to attempt five questions in all, two questions from Section A and either three questions from Section B or three questions from Section C. […] (b) Detailed topics: Swara (Shuddha and Vikrit Swars), Jati (Odava, Shadava, Sampoorna), Laya (Vilambit, Madhya, Drut), Varna (Sthai, Arohi, Avarohi, Sanchari), Forms of Geet – Swaramalika, Lakshangeet, Khayal (Bada Khayal and Chota Khayal), Dhrupad. 2. Description of the five ragas mentioned under ‘practical’ – their Thaat, Jati, Vadi-Samvadi, Swaras (Varjit and Vikrit), Aroha-Avaroha, -

A Set of Performance Practice Instructions for a Western Flautist Presenting Japanese and Indian Inspired Works

Edith Cowan University Research Online Theses : Honours Theses 2007 Blowing east : A set of performance practice instructions for a western flautist presenting Japanese and Indian inspired works Asha Henfry Edith Cowan University Follow this and additional works at: https://ro.ecu.edu.au/theses_hons Part of the Music Practice Commons Recommended Citation Henfry, A. (2007). Blowing east : A set of performance practice instructions for a western flautist presenting Japanese and Indian inspired works. https://ro.ecu.edu.au/theses_hons/1301 This Thesis is posted at Research Online. https://ro.ecu.edu.au/theses_hons/1301 Edith Cowan University Copyright Warning You may print or download ONE copy of this document for the purpose of your own research or study. The University does not authorize you to copy, communicate or otherwise make available electronically to any other person any copyright material contained on this site. You are reminded of the following: Copyright owners are entitled to take legal action against persons who infringe their copyright. A reproduction of material that is protected by copyright may be a copyright infringement. Where the reproduction of such material is done without attribution of authorship, with false attribution of authorship or the authorship is treated in a derogatory manner, this may be a breach of the author’s moral rights contained in Part IX of the Copyright Act 1968 (Cth). Courts have the power to impose a wide range of civil and criminal sanctions for infringement of copyright, infringement of moral rights and other offences under the Copyright Act 1968 (Cth). Higher penalties may apply, and higher damages may be awarded, for offences and infringements involving the conversion of material into digital or electronic form. -

Hindustani Music (91)

HINDUSTANI MUSIC (91) BIFURCATED SYLLABUS (As per the Reduced Syllabus for ICSE - Class X Year 2022 Examination). SECTION A: HINDUSTANI VOCAL MUSIC.

SEMESTER 1 (Marks: 50): 1. (a) Non-detail terms: Sound (Dhwani), Meend, Kan (Sparsha swar), Gamak, Tigun. (b) Detailed topics: Nad, three qualities of Nad (volume, pitch, timbre). 2. Description of one raga - Bhairav, its Thaat, Jati, Vadi-Samvadi, Swaras (Varjit and Vikrit), Aroha-Avaroha, Pakad, time of raga and similar raga. 3. Writing in the Taal notation, two Taals - Rupak and Jhaptaal, their Dugun, Tigun and Chaugun. 4. Knowledge of musical notation system of Pt. V.N. Bhatkhande (Swara and Taal-lipi); writing Chota Khayal, Swarmalika and Lakshangeet. 5. Identification of Raga - Bhairav (a few note combinations given). 6. Life and contribution in brief of Amir Khusro. 7. Names of different parts (components) of the Tanpura with the help of a simple sketch. Tuning and handling of the instrument.

SEMESTER 2 (Marks: 50): 1. (a) Non-detail terms: Thumri, Poorvang, Uttarang, Poorva Raga and Uttar Raga. (b) Detailed topics: Shruti and placement of 12 swaras; Dhrupad and Dhamar. 2. Description of the two ragas - Bhoopali and Malkauns, their Thaat, Jati, Vadi-Samvadi, Swaras (Varjit and Vikrit), Aroha-Avaroha, Pakad, time of raga and similar raga. 3. Writing in the Taal notation, the Taal - Deepchandi (Chanchar), its Dugun, Tigun and Chaugun. 5. Identification of Ragas - Bhoopali and Malkauns (a few note combinations given). 6. Life and contribution in brief of Pt. Vishnu Digambar Paluskar.

SECTION B: HINDUSTANI INSTRUMENTAL MUSIC (EXCLUDING TABLA) 1. -

Ektaal, I Decided to Reflect on It and Try to Transfer the Ektaal-Feeling on to Western Instruments and Sounds

Introduction: I have always loved and admired Indian classical music and its great interpreters. Listening to great masters such as tabla gurus Pt. Suresh Talwalkar and Pt. Zakir Hussain makes you wonder if you will ever be able to grasp the depth of it. Many years ago I acknowledged that I, as a western composer and percussion player, never will be able to play the tablas properly, but since I fell in love with the Ektaal, I decided to reflect on it and try to transfer the ektaal-feeling on to western instruments and sounds. The instruments I have chosen for this piece are western modern instruments with only a fraction of the sound-creating possibilities of the Indian tablas. The intention of Ektaal Reflections is therefore NOT an attempt to sound like tablas and real Indian music, but to make a crossover between Indian and western music and to give all non-tabla players an opportunity to enjoy this beautiful taal. Ektaal is one of India's most popular taals. Taal is a recurring rhythmic framework of a certain number of beats divided in a particular way. Vamps (Ex. 3): Vamps are improvised sections between composed sections. The vamps should always be improvisations played soft and in the style of ektaal, with the hi-hat playing on the 1st, 5th, 9th and 11th beats. The vamps are to be repeated as you like. A specific fill will occur on the 8th beat when you decide to move on to the next section. Here are 5 examples of vamp improvisations with elements from the piece, but it will -
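The vamp layout mentioned in this excerpt (hi-hat on beats 1, 5, 9 and 11, with a fill on the 8th beat when moving on) can be written out as a small sketch. It assumes the standard 12-beat length of Ektaal; the function name `vamp_cycle` and the marker symbols are hypothetical and only serve the illustration, they are not the composer's notation.

```python
EKTAAL_BEATS = 12              # Ektaal is a 12-beat cycle
HI_HAT_BEATS = (1, 5, 9, 11)   # hi-hat placement given in the excerpt
FILL_BEAT = 8                  # fill cue given in the excerpt


def vamp_cycle(moving_on=False):
    """Return one 12-beat vamp cycle as a list of beat markers."""
    cycle = []
    for beat in range(1, EKTAAL_BEATS + 1):
        if beat in HI_HAT_BEATS:
            cycle.append("x")   # hi-hat stroke
        elif moving_on and beat == FILL_BEAT:
            cycle.append("F")   # fill that signals the next section
        else:
            cycle.append(".")   # left open for improvisation
    return cycle


print(" ".join(vamp_cycle()))                # repeated vamp
print(" ".join(vamp_cycle(moving_on=True)))  # last cycle before moving on
```

The first line printed marks the four hi-hat beats in a repeated vamp, and the second adds the fill marker on beat 8 for the cycle that leads into the next section.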

History of Indian Percussion Instruments

DEPARTMENT OF MUSIC, DR. HARISINGH GOUR VISHWAVIDYALAYA, SAGAR (M.P.). M.A. Hindustani Music (Tabla), First Semester. MUT-CC-121: History of Indian Percussion Instruments. L: 4, T: 1, P: -, C: 5. Hours: 75 (15 hours per unit). Unit 1: (i) A brief study of percussion instruments as mentioned in Natyashastra, Sangeet Ratnakar and other Granthas (fourth century onwards): Mridanga, Panava, Mardal, Patah, Muraj, Dundubhi, Kartaal, Kansyataal, Damru, etc. (ii) A brief study of the playing style of ancient Ghan Vadyas as mentioned in various Granthas: Chimta, Jhanj, Manjeera, Tasha, Daf, Chipali, Ek tara, etc. Unit 2: (i) Evolution and historical development of Tabla. (ii) Evolution and historical development of Pakhawaj. Unit 3: Historical development of different Gharanas and Baaj of Tabla. Unit 4: A detailed knowledge of the Karnataka Taal System. Unit 5: Comparative study of the North Indian and Karnataka Taal Systems. Reference Books: 1. Pakhawaj aur Tabla ke gharane evam paramparaye by Dr. Aban Mishtri, Sangeet Sadan, Allahabad. 2. Indian Concept of Rhythm by Dr. A.K. Sen, Kanishka Publication, New Delhi. 3. Pakhawaj aur Tabla ke gharane evam parampara by Dr. Awan Mishtri, Sangeet Sadan, Allahabad. 4. Sangeet me tal vadyon ki upyogita by Dr. Chitra Gupta, Radha Publication, Delhi. 5. Tabla puran by Pt. Vijay Shankar Mishra, Kanishka Publication, New Delhi. 6. Tabla grunth by Pt. Chotelal Mishra, Kanishka Publication, New Delhi. 7. Tabla ki Paramparagat vadan shaili ka astitwa by Dr. Rahul Swarnkar, ND Publication (seminar proceeding). 8. Tabla, Arvindra Moolgaonkar, Luminus Publication. -

Music of INDIA

CARNEGIE HALL presents GLOBAL ENCOUNTERS: MUSIC OF INDIA. A Program of The Weill Music Institute at Carnegie Hall. TEACHER GUIDE. ACKNOWLEDGMENTS: Contributing Writer / Editor Daniel Levy; Consulting Writer Samita Sinha. Delivery of The Weill Music Institute’s programs to national audiences is funded in part by the US Department of Education and by an endowment grant from the Citi Foundation. The Weill Music Institute at Carnegie Hall, 881 Seventh Avenue, New York, NY 10019. 212-903-9670, 212-903-0925. weillmusicinstitute.org © 2009 The Carnegie Hall Corporation. All rights reserved. GUIDE TO THE TEXT FORMATTING: Throughout this curriculum, we have used different text formats to help simplify the directions for each lesson. Our hope is that this format will allow you to keep better track of your steps while you are on your feet in class teaching a lesson. There are two main formatting types to recognize. 1) Any “scripted” suggestions (especially all questions) appear in “blue” with quotation marks. 2) Basic action headings are set in bold italic. (Options are in parentheses.) Note: For CD tracks, we list the track number first, then the title. For example: MEET THE ARTIST • Read Sameer Gupta’s Meet the Artist handout (out loud). • Summarize what the artist has said (on paper). • “Based on what we know about Sameer, what might his music sound like?” • Listen to CD Track 1, Sameer Gupta’s “Yaman.” • “Now that you have heard the music, were your guesses right?” • Transition: Sameer Gupta is an expert at working with musical freedom and structure. To get to know his work, we will need to become experts on freedom and structure.