An Analysis of Defense Laboratory Patenting and Innovation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Hoopclinics Pack Line Defense E-Book

Presented by HoopClinics Copyright 2008 HoopClinics All Rights Reserved Pack Line Table of Contents Introduction Acknowledgements……………………………………………………………………….. 1 Section I Defensive Philosophy Defensive Beliefs .…………..……………………………………………………………. 2 Defensive Expectations…………………………………………………………………… 4 Section II Six Defensive Phases Phase #1 Conversion……………………………………………………………………… 8 Phase #2 Establish and Maintain Defensive Spacing ………………………………….....10 Phase #3 Pressure the Ball……………………………………………………………….. 16 Phase #4 Keep the Ball Out of the Lane ….…………………………………………….. 17 Phase #5 Contest every shot …………………………………………………………….. 24 Phase #6 Block out, secure rebounds, loose balls……………………………………….. 24 Section III Special Situations Helping from Basket, End of Quarter, Free Throws, In Bounds, ………………………. 26 Catch Up Ball Screens……………………………………………………………………28 Section IV Teaching Pack Line Through Repetition in Practice Individual Skills, Six Phases, Shell Drill…………………………………………………29 Guarding Specific Movements, Competitive Drills, Disadvantage Drills………………. 30 Recovery Drills, Toughness Drills ……………………………………………………….31 Section V Pre-game Decisions and In-game Adjustments Defensive Matchups…………………………………………………………………..….32 Conclusion Defensive Evaluation………………………………………………………………..……33 Resources…………..……………………………………………………………..………34 Appendices Appendix A Playing Hard on Defense………………………………………..………….35 Appendix B Teaching Defense in Practice………..………………………..…………….36 The HoopClinics Version of the Pack Line Defense Introduction Thank you for your interest in our version of the pack line defense. This e-book is meant to complement the screen cast that we have prepared, not to be a word for word transcription. Some of the concepts and drills will be better presented with the animations on the screen cast. My hope is that between the two mediums, you will be able to pull some ideas that will help your defense. 
We are presenting this information as the complete pack line defense that we have used and that we feel has fit our personnel best over the years we have used it.

Tenets of a Regional Defense Strategy Considerations for the Indo-Pacific

the national bureau of asian research nbr special report #72 | august 2018 tenets of a regional defense strategy Considerations for the Indo-Pacific By Jonathan W. Greenert cover 2 NBR Board of Directors Charles W. Brady Ryo Kubota Gordon Smith (Chairman) Chairman, President, and CEO Chief Operating Officer Chairman Emeritus Acucela Inc. Exact Staff, Inc. Invesco LLC Quentin W. Kuhrau Scott Stoll John V. Rindlaub Chief Executive Officer Partner (Vice Chairman and Treasurer) Unico Properties LLC Ernst & Young LLP President, Asia Pacific Wells Fargo Regina Mayor David K.Y. Tang Principal, Global Sector Head and U.S. Managing Partner, Asia George Davidson National Sector Leader of Energy and K&L Gates LLP (Vice Chairman) Natural Resources Vice Chairman, M&A, Asia-Pacific KPMG LLP Mitchell B. Waldman HSBC Holdings plc (Ret.) Executive Vice President, Government Melody Meyer and Customer Relations Thomas W. Albrecht President Huntington Ingalls Industries, Inc. Partner Melody Meyer Energy LLC Sidley Austin LLP Joseph M. Naylor Dennis Blair Vice President of Policy, Government Honorary Directors Chairman and Public Affairs Sasakawa Peace Foundation USA Chevron Corporation Lawrence W. Clarkson U.S. Navy (Ret.) Senior Vice President Kenneth B. Pyle The Boeing Company (Ret.) Maria Livanos Cattaui Professor; Founding President Secretary General (Ret.) University of Washington; NBR Thomas E. Fisher International Chamber of Commerce Senior Vice President Jonathan Roberts Unocal Corporation (Ret.) Norman D. Dicks Founder and Partner Senior Policy Advisor Ignition Partners Joachim Kempin Van Ness Feldman LLP Senior Vice President Tom Robertson Microsoft Corporation (Ret.) Richard J. Ellings Vice President and President Deputy General Counsel Clark S. -

The Chinese Navy: Expanding Capabilities, Evolving Roles

The Chinese Navy: Expanding Capabilities, Evolving Roles. Edited by Phillip C. Saunders, Christopher D. Yung, Michael Swaine, and Andrew Nien-Dzu Yang. Published by National Defense University Press for the Center for the Study of Chinese Military Affairs, Institute for National Strategic Studies, Washington, D.C., 2011. Opinions, conclusions, and recommendations expressed or implied within are solely those of the contributors and do not necessarily represent the views of the U.S. Department of Defense or any other agency of the Federal Government. Cleared for public release; distribution unlimited. Chapter 5 was originally published as an article of the same title in Asian Security 5, no. 2 (2009), 144–169. Copyright © Taylor & Francis Group, LLC. Used by permission. Library of Congress Cataloging-in-Publication Data: The Chinese Navy : expanding capabilities, evolving roles / edited by Phillip C. Saunders ... [et al.]. p. cm. Includes bibliographical references and index.

Drills to Build the Pack Line Defense -Chris Mack, Xavier [email protected]

Drills to Build The Pack Line Defense -Chris Mack, Xavier [email protected] -Practices their Pack Line every single day. Coaching Point: Have an identity. -Hard to build habits if you change your defense a lot. -They more or less keep the pack line no matter who they are playing. Their pack line D is not a soft one where you sit in the lane. -Their PLD is an aggressive defense, with incredible ball pressure, and you must be smart off the ball. The PLD helps give you a “system” to play defense. The PLD: 1) Gives players answers and accountability -Certain things players can/can’t do -They are given specific rules, ex. “You can’t play behind the post” vs “Play hard” 2) Simplifies scouting --> Their coaches watch a ton of film but do not give it all to the players. Instead they play certain actions in very specific ways and practice these every day. Ex. Practice defending staggered screens so when they play a team they already know how they will defend this and get better at it throughout the year. 3) Creates a culture your players can believe in. Varsity players “pass” down the defense by teaching your younger players without a lot of involvement from coaches. ****Mark the pack line in practice every day**** Think of it as a 16 foot 3 point line. (Figure 1a) GOLDEN RULE: When your man does not have the ball YOU MUST HAVE 2 FEET IN THE PACK LINE. Exceptions: If your man is cutting you must chase the cutter.

YOUTH BASKETBALL COACHES MANUAL 4-5Th Grade

YOUTH BASKETBALL COACHES MANUAL 4-5th Grade PRACTICE OUTLINE YMCA YOUTH SPORTS PRACTICE SESSION PLANS Warm-up (5 minutes) Fitness component (5 Minutes) Skills Drills (15 minutes) Game / Play (15 minutes) Team Circle (10 minutes) YMCA YOUTH SPORTS PRACTICE SESSION PLANS PRACTICE 1 Warm-Up (10 minutes) Begin each practice with 5 to 10 minutes of warm-up activities to get players loosened up and ready to go. Players dribble, jump stop, and shoot, traveling from one basket to the next. All shooting should be two to four feet from the basket. Fitness Component (5 minutes) Key Idea: Flexibility Gather the team into a circle. “Is there such a thing as stretching your muscles too far, or should you stretch as far as you can to improve your flexibility?” Listen to their responses. “Muscles should stretch only until you feel a slight pulling. You should never feel pain stretching. If you do, you’re stretching too far.” Choose a stretch for the team to try. “I want you to stretch until you feel the slight pulling. Everyone will have different levels of flexibility, but all of you need to stretch properly to stay flexible and prevent injuries.” PRACTICE 1 Skills Drills (15 minutes) 1. Introduce, demonstrate and explain creating passing lanes. 2. Practice providing support for teammates by creating passing lanes. Description Play 3 v 1 games. Offensive players move to open space. Defenders play cooperative to active defense. Use offensive positions on one or both sides of the basket. Players pass, then move to an offensive position (point, wing, baseline, or high or low post) adjacent to the ball.

Soccer Coaching Manual

The LA84 Foundation is the organization created to manage Southern California’s share of the surplus from the 1984 Olympic Games. Located in the historic Britt House since 1985, the LA84 Foundation has committed more than $160 million to create, support and expand existing youth sports programs, and develop the Paul Ziffren Sports Resource Center. The Sports Resource Center is a state-of-the-art learning and cultural center for sports which contains sports books, films, videos, photographs and memorabilia. To date, more than two million boys and girls and more than 1,000 youth sports organizations throughout Southern California have benefited from our endowment. The goal of the LA84 Foundation is to be an innovator in youth sports and coaching, and to increase opportunities for achieving athletic excellence at every level. The Foundation grants financial assistance to organizations providing youth sports opportunities, initiates and operates its own youth sports programs including Run For Fun, Summer Swim, and offers free coaching education workshops through the LA84 Foundation Coaching Program. For additional information regarding the LA84 Foundation please visit our web site at www.LA84Foundation.org. Peter V. Ueberroth, Chairman Anita L. DeFrantz, President Board of Directors: John Bryson Yvonne Burke Jae Min Chang Anita L. DeFrantz James Easton Janet Evans Priscilla Florence Bob Graziano Rafer Johnson Maureen Kindel Tom Larkin Charles D. Miller Peter O’Malley Joan Payden Amy Quinn Frank Sanchez Peter Ueberroth Gilbert Vasquez David L. Wolper, Chairman Emeritus John Ziffren LA84 FOUNDATION SOCCER COACHING MANUAL Edited By Stacey Chapman Edward Derse Jacqueline Hansen Contributing Writers Amy Allmann Martin McGrogan Orlando Brenes Billy McNicol Roger Bryant Bob Myers Stacey Chapman Jen Netherwood Ellen Coleman, R.D.

Basketball Pack Line Defense

Basketball Defense - the Pack Line Defense By James Gels, from the Coach’s Clipboard Basketball Playbook (http://www.coachesclipboard.net/Playbook.html), @ http://www.coachesclipboard.net The pack-line defense is a variation of man-to-man defense developed by Dick Bennett (http://www.basketballstore-coachesclipboard.net/bd-02429-dick-bennett-the-pack-line-pressure-defense.html) for the Washington State University Cougars. Something similar has been used by Sean Miller at University of Arizona, Tony Bennett at UVA, and Chris Mack at Xavier. First understand the basic tenets of pressure man-to-man defense (M2MDefense.html). I used to say that there is probably no reason to use the pack line defense if you have excellent, quick athletes who are good defenders... just keep the intense pressure on, using the standard pressure man-to-man defense. On the other hand, the pack line defense will perhaps help less talented teams "hang in there" with better teams, and can also help a team having difficulty preventing point guard dribble-penetration. However, since many teams nowadays use the dribble-drive to attack, it makes good sense to use the packline defense no matter what your team's skill level is. The packline will help clog those seams and stop the dribble-drive. The packline is a gap defense that still puts pressure on the ball. The packline defense also puts your players in better position for defensive rebounding, and may result in less fouling. The "pack line" The pack line man-to-man defense is also called a "sagging" man-to-man defense.

GUNS Magazine May 1960

SUPER BLACKHAWK® .44 MAGNUM WITH 7 1/2" BARREL Only RUGER ... standard. TINY NYLON (HYLOK) PLUG IN THE THREADS HOLDS FAST FOR LIFE ... COMPANY, INC. CONNECTICUT, U.S.A. The "99" is Arthur Savage's gift to big-game hunters the world over. Sportsmen first asked for this over 60 years ago ... seeking a versatile, fast-shooting, flat-shooting, dependable rifle. Over 60 years ago a young designer, Arthur Savage, answered this desire with a revolutionary new sporting arm ... the first of the 99 series. It utilizes a remarkably efficient rotary magazine in a precisely engineered loading system, a hammerless lever action that lets you shoot as fast as you can aim, and an exclusive cartridge indicator possible only with a rotary magazine. These innovations are still the backbone of the Savage 99. Chambered to handle today's powerful cartridges: .300, .250-3000, .358, .308, .243 ... the 99 is available in a famous family of models. Above, the new de luxe model 99-DL in the favored Monte Carlo version preferred for scope shooting. The 99-DL and a companion featherweight 99-F feature a new top-tang safety and lever lock, plus a new trigger-sear mechanism. Standard models 99-E and -R have regular safety and lever lock on lower tang. See these fine American-designed, American-made rifles at your sporting arms dealers now or write Savage Arms Corporation, Chicopee Falls 13, Mass. for a free illustrated book of

The Air Jordan Rules: Image Advertising Adds New Dimension to Right of Publicity–First Amendment Tension

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Fordham University School of Law Fordham Intellectual Property, Media and Entertainment Law Journal Volume 26 Volume XXVI Number 4 Volume XXVI Book 4 Article 3 2016 The Air Jordan Rules: Image Advertising Adds New Dimension to Right of Publicity–First Amendment Tension Stephen McKelvey University of Massachusetts Amherst Jonathan Goins Lewis Brisbois Bisgaard & Smith LLP Frederick Krauss William S. Boyd School of Law at University of Nevada, Las Vegas Follow this and additional works at: https://ir.lawnet.fordham.edu/iplj Part of the Intellectual Property Law Commons Recommended Citation Stephen McKelvey, Jonathan Goins, and Frederick Krauss, The Air Jordan Rules: Image Advertising Adds New Dimension to Right of Publicity–First Amendment Tension, 26 Fordham Intell. Prop. Media & Ent. L.J. 945 (2016). Available at: https://ir.lawnet.fordham.edu/iplj/vol26/iss4/3 This Article is brought to you for free and open access by FLASH: The Fordham Law Archive of Scholarship and History. It has been accepted for inclusion in Fordham Intellectual Property, Media and Entertainment Law Journal by an authorized editor of FLASH: The Fordham Law Archive of Scholarship and History. For more information, please contact [email protected]. The Air Jordan Rules: Image Advertising Adds New Dimension to Right of Publicity–First Amendment Tension Cover Page Footnote Associate Professor, Mark H. McCormack Department of Sport Management, Isenberg School of Management, University of Massachusetts Amherst; J.D., Seton Hall School of Law; B.S., American Studies, Amherst College. † Partner, Lewis Brisbois Bisgaard & Smith LLP; Adjunct Professor, Atlanta’s John Marshall Law School; J.D., Howard University School of Law; B.A., Political Science, University of Louisville. -

20-25 Min TRANSITION (Conditioning) Passing Layups

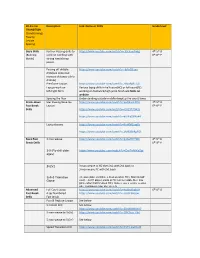

20-25 min Description Link: Demo or Drills Grade level TRANSITION (Conditioning) Passing Layups Spacing Basic Skills Partner Passing drills to https://www.youtube.com/watch?v=-9SOcopNykg 4th-5th D (Building work on catching with 6th-8th P blocks) strong hands/crisp passes Passing off dribble https://www.youtube.com/watch?v=-6jAsCBizqk drills(see demo but increase distance-add a dribble) Pre-Game Layups https://www.youtube.com/watch?v=hRxaBgBuC4I Layups-work on Various layup drills in half court(HC) or full court(FC) left/right form working on footwork/high jump finish-see Skills on website Spacing the floor Understanding outside-middle-deep(up the court) lanes Break-down Star Passing/Give-Go https://www.youtube.com/watch?v=qaQKiZsLNYU 4th-5th D Fast Break Layups 6th-8th P Drills https://www.youtube.com/watch?v=lGRZ3F7tW2c https://www.youtube.com/watch?v=aUWaZdiho44 Layup chasers https://www.youtube.com/watch?v=ParXMZbxgRc https://www.youtube.com/watch?v=dM83SbRgFQk Basic Fast 3 man weave https://www.youtube.com/watch?v=glc6aHFYHNk 4th-5th D Break Drills 6th-8th P 3-0 (Fly drill older https://www.youtube.com/watch?v=DwTnNIKz5gI ages) 3v2v1 3 man weave to HC then 3v2 with 2v1 back or 3 man weave FC with 2v1 back 3 teams (Blue vs White + Red on other HC). Start at half 3v3v3 Transition Game court…3v2(1 player starts at HC run to middle then into game when ball crosses HC). Make it take it to play vs other side. Continuous game play up to X Advanced Full Court Layup https://www.youtube.com/watch?v=kxdbukkqbLA 6th-8th D Fast Break 4 sec Numbered -

Coaching Manual (PDF)

2 Issaquah Parks & Recreation 301 Rainier Blvd S. / P.O. Box 1307 Issaquah, WA. 98027 Dear Volunteer Coach, On behalf of the Issaquah Parks and Recreation Department and the Issaquah Park Board, we thank you for volunteering your time and effort with the Issaquah Parks & Recreation Youth Basketball Program! Our program has grown steadily since its formation in 1973, and continues to be successful! This program should be a positive learning experience for the players, coaches, parents and staff. These are the primary objectives of the Issaquah Parks and Recreation Department. Please read and understand the goals of our program, and carry yourself in a manner that is consistent with the spirit of our league. As a volunteer coach, you are an integral part of our program. Your donation of time, effort, and leadership is much appreciated by our staff and community, and will greatly influence your players, parents and spectators. Sports programs are a fantastic way to learn important values of life, and with your guidance we believe everyone will have an exciting and pleasurable season! Sincerely, Jared Behl Lisa Echert Jared Behl Lisa Echert Recreation Supervisor Recreation Coordinator 425-837-3324 425-837-3308 [email protected] [email protected] 3 MANUAL OUTLINE • Mission, Philosophy and Program Goals ……………………………………………………………..…….4 • Coaches’ Expectations……………………………………………………………………………………..…….5 -8 • Facility Rules and Guidelines ………………………………………………………………….…………….…..9 • Teaching the game - Developmental Stages of learning basketball…….………….………. 10 FUNdamental Stage (K-3rd Grade)…………………………………………..………...…….11 Learning to train Stage (3rd –6th Grade)………….…….…………………….…………..14 Train to train Stage (6th-10th Grade…………………..…………………………………….18 • Grade Specific Tips and Expectations…………………………………………………………………..…..22 • Getting Started- Running Practices ……………………………..………………………………………. -

Dribble Drive Motion Offense

Notes on the Dribble-Drive Motion Offense - the Basics From the Coach’s Clipboard Basketball Playbook, @ http://www.coachesclipboard.net The "dribble-drive motion offense" is one of the latest, popular offenses seen at the college, pro, and high school levels. The original innovator is Vance Walberg of Fresno City College and Pepperdine, and now assistant coach at UMass, and he called it his "AASAA" offense... meaning "attack, attack, skip, attack, attack". At the college level, coach John Calipari has popularized it, modified it, and renamed it the "dribble-drive motion offense". Guard dribble-penetration is the key, and dribble-penetration is one of the most difficult things for a defense to stop... plus it exposes the defenders to picking up fouls, and gets us to the free-throw line. Keys are: spacing, creating gaps, and attacking with the dribble-drive. This is a guard-oriented offense that features spreading the floor and dribble-penetration to the rim for lay-ups or kick-out three-point shots. Coach Walberg uses the term "key or 3" meaning either a layup or a 3-point shot. This offense uses a 4-out set. O1 and O5 are like double point guards: their mentality is to attack with the dribble first, and they are outside shooters second. O2 and O3 are set deep in the corners (and must be patient); they are shooters first and dribble-attackers second. The post player does not look to post up, but rather plays on the opposite block, elbow or short corner... as this creates more open lanes for dribble-penetration.