Color Science and Color Appearance Models for CG, HDTV, and D-Cinema

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Models

Color Models Jian Huang CS456 Main Color Spaces • CIE XYZ, xyY • RGB, CMYK • HSV (Munsell, HSL, IHS) • Lab, UVW, YUV, YCrCb, Luv Differences in Color Spaces • What is the use? For display, editing, computation, compression, …? • Several key (very often conflicting) features may be sought after: – Additive (RGB) or subtractive (CMYK) – Separation of luminance and chromaticity – Equal distances between colors are equally perceivable CIE Standard • CIE: International Commission on Illumination (Commission Internationale de l’Éclairage). • Human-perception-based standard (1931), established with a color matching experiment • Standard observer: a composite of a group of 15 to 20 people CIE Experiment CIE Experiment Result • Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm. CIE Color Space • 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves • Y: roughly corresponds to the luminous efficiency characteristic of the human eye CIE xyY Space • The irregular 3D volume shape is difficult to understand • Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point) Color Gamut • The range of color representation of a display device RGB (monitors) • The de facto standard The RGB Cube • RGB color space is perceptually non-linear • RGB space is a subset of the colors humans can perceive • Con: what is ‘bloody red’ in RGB? CMY(K): printing • Cyan, Magenta, Yellow (Black) – CMY(K) • A subtractive color model • Dye color (absorbs; reflects): cyan (red; blue and green), magenta (green; blue and red), yellow (blue; red and green), black (all; none) RGB and CMY • Converting between RGB and CMY HSV • This color model is based on polar coordinates, not Cartesian coordinates.
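The excerpt above mentions converting between RGB and CMY without giving the formula. A minimal sketch of the usual idealized conversion follows, assuming components normalized to the range 0 to 1 and ideal dyes (each CMY component is the complement of one RGB component). The function names are hypothetical and do not come from the slides.

```python
def rgb_to_cmy(r, g, b):
    """Idealized additive-to-subtractive conversion.

    Assumes r, g, b are floats in [0, 1]. Cyan absorbs red, magenta
    absorbs green, and yellow absorbs blue, so each CMY component is
    one minus the matching RGB component.
    """
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c, m, y):
    """Inverse of rgb_to_cmy under the same idealized assumptions."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


if __name__ == "__main__":
    # Pure red needs no cyan dye, but full magenta and yellow.
    print(rgb_to_cmy(1.0, 0.0, 0.0))
```

Real printing inks are not ideal, so production workflows rely on measured device profiles; the complement rule only illustrates the additive/subtractive relationship described in the slides.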

Chapter 6 : Color Image Processing

Chapter 6 : Color Image Processing CCU, Taiwan Wen-Nung Lie Color Fundamentals Spectrum that covers visible colors : 400 ~ 700 nm Three basic quantities Radiance : energy that flows from the light source (measured in watts) Luminance : a measure of the energy an observer perceives from a light source (in lumens) Brightness : a subjective descriptor that is difficult to measure About human eyes Primary colors for standardization blue : 435.8 nm, green : 546.1 nm, red : 700 nm Not all visible colors can be produced by mixing these three primaries in various intensity proportions Cones in human eyes are divided into three sensing categories 65% are sensitive to red light, 33% sensitive to green light, 2% sensitive to blue (but most sensitive) The R, G, and B colors perceived by human eyes cover a range of the spectrum Primary and secondary colors of light and pigments Secondary colors of light magenta (R+B), cyan (G+B), yellow (R+G) R+G+B=white Primary colors of pigments magenta, cyan, and yellow M+C+Y=black Chromaticity Hue + saturation = chromaticity hue : an attribute associated with the dominant wavelength or dominant colors perceived by an observer saturation : relative purity or the amount of white light mixed with a hue (the degree of saturation is inversely proportional to the amount of added white light) Color = brightness + chromaticity Tristimulus values (the amount of R, G, and B needed to form any particular color : X, Y, Z trichromatic

Package 'Colorscience'

Package ‘colorscience’ October 29, 2019 Type Package Title Color Science Methods and Data Version 1.0.8 Encoding UTF-8 Date 2019-10-29 Maintainer Glenn Davis <[email protected]> Description Methods and data for color science - color conversions by observer, illuminant, and gamma. Color matching functions and chromaticity diagrams. Color indices, color differences, and spectral data conversion/analysis. License GPL (>= 3) Depends R (>= 2.10), Hmisc, pracma, sp Enhances png LazyData yes Author Jose Gama [aut], Glenn Davis [aut, cre] Repository CRAN NeedsCompilation no Date/Publication 2019-10-29 18:40:02 UTC R topics documented: ASTM.D1925.YellownessIndex, ASTM.E313.Whiteness, ASTM.E313.YellownessIndex, Berger59.Whiteness, BVR2XYZ, cccie31, cccie64, CCT2XYZ, CentralsISCCNBS, CheckColorLookup, ChromaticAdaptation, chromaticity.diagram, chromaticity.diagram.color, CIE.Whiteness, CIE1931xy2CIE1960uv, CIE1931xy2CIE1976uv, CIE1931XYZ2CIE1931xyz, CIE1931XYZ2CIE1960uv, CIE1931XYZ2CIE1976uv, CIE1960UCS2CIE1964, CIE1960UCS2xy, CIE1976chroma, CIE1976hueangle, CIE1976uv2CIE1931xy, CIE1976uv2CIE1960uv, CIE1976uvSaturation, CIELabtoDIN99, CIEluminanceY2NCSblackness, CIETint, ciexyz31, ciexyz64, CMY2CMYK, CMY2RGB, CMYK2CMY, ColorBlockFromMunsell, compuphaseDifferenceRGB, conversionIlluminance, conversionLuminance, createIsoTempLinesTable, daylightcomponents, deltaE1976

PANTONE® ColorWeb™ 1.0 User Manual

PANTONE® ColorWeb™ 1.0 User Manual Copyright Pantone, Inc., 1996. All rights reserved. PANTONE® Computer Video simulations used in this product may not match PANTONE®-identified solid color standards. Use current PANTONE Color Reference Manuals for accurate color. All trademarks noted herein are either the property of Pantone, Inc. or their respective companies. PANTONE® ColorWeb™, ColorWeb™, PANTONE Internet Color System™, PANTONE® ColorDrive®, PANTONE Hexachrome™† and Hexachrome™ are trademarks of Pantone, Inc. Macintosh, Power Macintosh, System 7.xx, Macintosh Drag and Drop, Apple ColorSync and Apple Script are registered trademarks of Apple® Computer, Inc. Adobe Photoshop™ and PageMill™ are trademarks of Adobe Systems Incorporated. Claris Home Page is a trademark of Claris Corporation. Netscape Navigator™ Gold is a trademark of Netscape Communications Corporation. HoTMetaL™ is a trademark of SoftQuad Inc. All other products are trademarks or registered trademarks of their respective owners. † Six-color Process System Patent Pending - Pantone, Inc. PANTONE ColorWeb Team: Mark Astmann, Al DiBernardo, Ithran Einhorn, Andrew Hatkoff, Richard Herbert, Rosemary Morretta, Stuart Naftel, Diane O’Brien, Ben Sanders, Linda Schulte, Ira Simon and Annmarie Williams. WELCOME Thank you for purchasing PANTONE® ColorWeb™. ColorWeb™ contains all of the resources necessary to ensure accurate, cross-platform, non-dithered and non-substituting colors when used in the creation of Web pages. ColorWeb works with any Web authoring program and makes it easy to choose colors for use within the design of Web pages. By using colors from the PANTONE Internet Color System™ (PICS) color palette, Web authors can be sure their page designs have rich, crisp, solid colors, no matter which computer platform these pages are created on or viewed.

An Improved SPSIM Index for Image Quality Assessment

Symmetry Article An Improved SPSIM Index for Image Quality Assessment Mariusz Frackiewicz *, Grzegorz Szolc and Henryk Palus Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland; [email protected] (G.S.); [email protected] (H.P.) * Correspondence: [email protected]; Tel.: +48-32-2371066 Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. For this purpose, the color space used by SPSIM was changed and the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI). The third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications. Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518 1. Introduction Quantitative domination of acquired color images over gray level images results in the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

CDOT Shaded Color and Grayscale Printing Reference File Management

CDOT Shaded Color and Grayscale Printing This document guides you through the set-up and printing process for shaded color and grayscale Sheet Files. This workflow may be used any time the user wants to highlight specific areas for things such as phasing plans, public meetings, ROW exhibits, etc. Please note that the use of raster images (jpg, bmp, tif, etc.) will dramatically increase the processing time during printing. Reference File Management Adjustments must be made to some of the reference file settings in order to achieve the desired print quality. The settings that need to be changed are the Slot Numbers and the Update Sequence. Slot Numbers are unique identifiers assigned to reference files and can be called out individually or in groups within a pen table for special processing during printing. The Update Sequence determines the order in which files are refreshed on the screen and printed on paper. Reference file Slot Number Categories: 0 = Sheet File (black) (this is the active dgn file) 1-99 = Proposed Primary Discipline (black) 100-199 = Existing Topo (light gray) 200-299 = Proposed Other Discipline (dark gray) 300-399 = Color Shaded Areas Note: All data placed in the Sheet File will be printed black. Items to be printed in color should be in their own file. Create a new file using the standard CDOT seed files and reference design elements to create color areas. If printing to a black and white printer, all colors will be printed grayscale. Files can still be printed using the default printer drivers and pen tables; however, shaded areas will lose their transparency and may print black depending on their level.

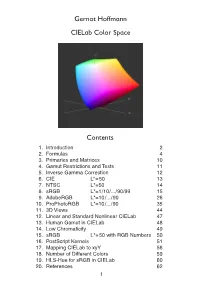

CIELab Color Space

Gernot Hoffmann CIELab Color Space Contents 1. Introduction 2. Formulas 3. Primaries and Matrices 4. Gamut Restrictions and Tests 5. Inverse Gamma Correction 6. CIE L*=50 7. NTSC L*=50 8. sRGB L*=1/10/.../90/99 9. AdobeRGB L*=10/.../90 10. ProPhotoRGB L*=10/.../90 11. 3D Views 12. Linear and Standard Nonlinear CIELab 13. Human Gamut in CIELab 14. Low Chromaticity 15. sRGB L*=50 with RGB Numbers 16. PostScript Kernels 17. Mapping CIELab to xyY 18. Number of Different Colors 19. HLS-Hue for sRGB in CIELab 20. References 1.1 Introduction CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X,Y,Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*,a*,b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*,a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
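Two statements in this excerpt lend themselves to a short illustration: the projection of XYZ onto the xy chromaticity plane, and deltaE as a Euclidean distance in CIELab. The sketch below assumes the standard definitions x = X/(X+Y+Z), y = Y/(X+Y+Z) and the CIE 1976 color difference; the function names are hypothetical and are not taken from Hoffmann's document.

```python
import math


def xyz_to_xy(X, Y, Z):
    """Project tristimulus values onto the xy chromaticity plane.

    Scaling X, Y, Z by a common factor leaves (x, y) unchanged, which
    is why the luminance is missing from the chromaticity diagram.
    """
    total = X + Y + Z
    if total == 0:
        raise ValueError("chromaticity is undefined when X + Y + Z is zero")
    return (X / total, Y / total)


def delta_e_1976(lab1, lab2):
    """Euclidean distance between two (L*, a*, b*) triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))


if __name__ == "__main__":
    print(xyz_to_xy(1.0, 1.0, 1.0))
    print(delta_e_1976((50.0, 0.0, 0.0), (50.0, 3.0, 4.0)))
```

Later color-difference formulas add perceptual weighting on top of this distance; the plain Euclidean form is the one the excerpt describes.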

Expanded Gamut Shoot-Out: Real Systems, Real Results

Expanded Gamut Shoot-Out: Real Systems, Real Results Abhay Sharma, Ryerson University, Toronto Advisors Roger Breton, Marc Levine, John Seymour, Bill Pope Comprehensive Report – 450+ downloads tinyurl.com/ExpandedGamut Agenda – Expanded Gamut § Why do we need Expanded Gamut? § What is Expanded Gamut? (CMYK-OGV) § Use cases – Spot Colors vs Images § Printing Spot Colors with Kodak Spotless (KSS) § Increased Accuracy § Using only 3 inks § Print all spot colors, without spot color inks § How do I implement EG? § Issues with Adobe and Pantone § Flexo testing in 2020 Vendors and Participants Software Solutions 1. Alwan – Toolbox, ColorHub 2. CGS ORIS – X GAMUT 3. ColorLogic – ColorAnt, CoPrA, ZePrA 4. GMG Color – OpenColor, ColorServer 5. Heidelberg – Prinect ColorToolbox 6. Kodak – Kodak Spotless Software, Prinergy PDF Editor § Hybrid Software - PACKZ (pronounced “packs”) RIP/DFE § efi Fiery XF (Command WorkStation) – Epson P9000 § SmartStream Production Pro – HP Indigo 7900 Color Management Solutions § X-Rite i1Profiler Expanded Gamut Tools § PANTONE Color Manager, Adobe Acrobat Pro, Adobe Photoshop Why do we need Expanded Gamut? - because imaging systems are imperfect Printing inks and dyes CMYK color gamut is small Color negative film What are the Use Cases for Expanded Gamut? ✓ 1. Spot Colors 2. Images PANTONE 301 C PANTONE 109 C Expanded gamut is most urgently needed in spot color reproduction for labels and package printing. Orange, Green, Violet - expands the colorspace

Harlequin RIP OEM Manual

0RIPMate for Windows operating systems Harlequin PLUS Server RIP v9.0 June 2011 AG12325 Rev. 13 Copyright and Trademarks Harlequin PLUS Server RIP June 2011 Part number: HK–9.0–OEM–WIN Document issue: 106 Copyright © 2011 Global Graphics Software Ltd. All rights reserved. Certificate of Computer Registration of Computer Software. Registration No. 2006SR05517 No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of Global Graphics Software Ltd. The information in this publication is provided for information only and is subject to change without notice. Global Graphics Software Ltd and its affiliates assume no responsibility or liability for any loss or damage that may arise from the use of any information in this publication. The software described in this book is furnished under license and may only be used or copied in accordance with the terms of that license. Harlequin is a registered trademark of Global Graphics Software Ltd.
The Global Graphics Software logo, the Harlequin at Heart Logo, Cortex, Harlequin RIP, Harlequin ColorPro, EasyTrap, FireWorks, FlatOut, Harlequin Color Management System (HCMS), Harlequin Color Production Solutions (HCPS), Harlequin Color Proofing (HCP), Harlequin Error Diffusion Screening Plugin 1-bit (HEDS1), Harlequin Error Diffusion Screening Plugin 2-bit (HEDS2), Harlequin Full Color System (HFCS), Harlequin ICC Profile Processor (HIPP), Harlequin Standard Color System (HSCS), Harlequin Chain Screening (HCS), Harlequin Display List Technology (HDLT), Harlequin Dispersed Screening (HDS), Harlequin Micro Screening (HMS), Harlequin Precision Screening (HPS), HQcrypt, Harlequin Screening Library (HSL), ProofReady, Scalable Open Architecture (SOAR), SetGold, SetGoldPro, TrapMaster, TrapWorks, TrapPro, TrapProLite, Harlequin RIP Eclipse Release and Harlequin RIP Genesis Release are all trademarks of Global Graphics Software Ltd. -

NEC Multisync® PA311D Wide Gamut Color Critical Display Designed for Photography and Video Production

NEC MultiSync® PA311D Wide gamut color critical display designed for photography and video production High resolution and incredible, predictable color accuracy. The 31” MultiSync PA311D is the ultimate desktop display for applications where precise color is essential. The innovative wide-gamut LED backlight provides 100% coverage of Adobe RGB color space and 98% coverage of DCI-P3, enabling more accurate colors to be displayed on screen. Utilizing a high performance IPS LCD panel and backed by a 4 year warranty with Advanced Exchange, the MultiSync PA311D delivers high quality, accurate images simply and beautifully. Impeccable Image Performance The wide-gamut LED LCD backlight combined with NEC’s exclusive SpectraView Engine deliver precise color in every environment. • True 4K resolution (4096 x 2160) offers a high pixel density • Up to 100% coverage of Adobe RGB color space and 98% coverage of DCI-P3 • 10-bit HDMI and DisplayPort inputs display up to 1.07 billion colors out of a palette of 4.3 trillion colors Ultimate Color Management The sophisticated SpectraView Engine provides extensive, intuitive control over color settings. • MultiProfiler software and on-screen controls provide access to thousands of color gamut, gamma, white point, brightness and contrast combinations • Internal 14-bit 3D lookup tables (LUTs) work with optional SpectraViewII color calibration solution for unparalleled color accuracy A Perfect Fit for Your Workspace A Better Workflow Future-proof connectivity, great ergonomics, and VESA mount Exclusive,

Medtronic Brand Color Chart

Medtronic Brand Color Chart Medtronic Visual Identity System: Color Ratios Navy Blue Medtronic Blue Cobalt Blue Charcoal Blue Gray Dark Gray Yellow Light Orange Gray Orange Medium Blue Sky Blue Light Blue Light Gray Pale Gray White Purple Green Turquoise Primary Blue Color Palette 70% Primary Neutral Color Palette 20% Accent Color Palette 10% The Medtronic Brand Color Palette was created for use in all material. Please use the CMYK, RGB, HEX, and LAB values as often as possible. If you are not able to use the LAB values, you can use the Pantone equivalents, but be aware the color output will vary from the other four color breakdowns. If you need a spot color, the preference is for you to use the LAB values. Primary Blue Color Palette Navy Blue: CMYK 100/94/47/43; RGB 0/30/70; LAB 15/2/-20; Web/HEX #001E46; Pantone 533 C. Medtronic Blue: CMYK 99/74/17/4; RGB 0/75/135; LAB 31/-2/-40; Web/HEX #004B87; Pantone 2154 C. Cobalt Blue: CMYK 81/35/0/0; RGB 0/133/202; LAB 52/-12/-45; Web/HEX #0085CA; Pantone 2382 C. Medium Blue: CMYK 73/12/0/0; RGB 0/169/224; LAB 64/-23/-39; Web/HEX #00A9E0; Pantone 2191 C. Sky Blue: CMYK 55/4/4/0; RGB 113/197/232; LAB 75/-20/-26; Web/HEX #71C5E8; Pantone 297 C. Light Blue: CMYK 29/5/5/0; RGB 185/217/235; LAB 85/-9/-13; Web/HEX #B9D9EB; Pantone 290 C. Primary Neutral Color Palette Charcoal Gray: C 0, M 0, Y 0; R 83, G 86; L 36, A 0; Web/HEX #53565a; Pantone Cool Gray Blue Gray: C 68, M 40, Y 28; R 91, G 127; L 51, A -9; Web/HEX #5B7F95; Pantone 5415
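The chart lists each color as both 8-bit RGB and Web/HEX, and the two are the same numbers in different notation. A minimal sketch of that relationship follows, assuming the usual `#RRGGBB` convention of two hexadecimal digits per channel; the helper names are hypothetical, and the sample values are the Navy Blue, Medtronic Blue, and Sky Blue entries from the excerpt.

```python
def rgb_to_hex(r, g, b):
    """Format 8-bit RGB components as a #RRGGBB string."""
    for value in (r, g, b):
        if not 0 <= value <= 255:
            raise ValueError("each component must be in the range 0..255")
    return "#{:02X}{:02X}{:02X}".format(r, g, b)


def hex_to_rgb(hex_str):
    """Parse a #RRGGBB string (case-insensitive) into an (r, g, b) tuple."""
    digits = hex_str.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected six hexadecimal digits")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


if __name__ == "__main__":
    print(rgb_to_hex(0, 30, 70))     # Navy Blue in the chart
    print(hex_to_rgb("#71C5E8"))     # Sky Blue in the chart
```

The CMYK, LAB, and Pantone columns cannot be derived this way; they depend on measured color data, which is why the chart supplies them separately.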

Adobe Photoshop 7.0 Design Professional

Adobe Photoshop CS Design Professional INCORPORATING COLOR TECHNIQUES Chapter Lessons Work with color to transform an image Use the Color Picker and the Swatches palette Place a border around an image Blend colors using the Gradient Tool Add color to a grayscale image Use filters, opacity, and blending modes Match colors Incorporating Color Techniques Chapter D 2 Using Color Develop an understanding of color theory and color terminology Identify how Photoshop measures, displays and prints color Learn which colors can be reproduced well and which ones cannot Color Modes Photoshop displays and prints images using specific color modes Color mode or image mode determines how colors combine based on the number of channels in a color model Different color modes result in different levels of color detail and file size – CMYK color mode used for images in a full-color print brochure – RGB color mode used for images in web or e-mail to reduce file size while maintaining color integrity Color Modes L*a*b Model – Based on human perception of color – Numeric values describe all colors seen by a person with normal vision Grayscale Model – Grayscale mode uses different shades of gray RGB (Red Green Blue) Mode used for online images – Assign intensity value to each pixel – Intensity values range from 0 (black) to 255 (white) for each of the RGB (red, green, blue) components in a color image • Bright red color has an R value of 246, a G value of 20, and a B value of 50.