A Finite-State Approach to Shallow Parsing and Grammatical Functions Annotation of German

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Deutscher Bundestag

Deutscher Bundestag 178. Sitzung des Deutschen Bundestages am Donnerstag, 10.Mai 2012 Endgültiges Ergebnis der Namentlichen Abstimmung Nr. 2 Beschlussempfehlung des Ausschusses für Umwelt, Naturschutz und Reaktorsicherheit (16. Ausschuss) zu dem Antrag der Abgeordneten Frank Schwabe, Ingrid Arndt-Brauer, Dirk Becker, weiterer Abgeordneter und der Fraktion der SPD über Leitlinien für Tranzparenz und Umweltverträglichkeit bei der Förderung von unkonventionellem Erdgas - Drucksachen 17/7612 und 17/9450 - Abgegebene Stimmen insgesamt: 553 Nicht abgegebene Stimmen: 67 Ja-Stimmen: 301 Nein-Stimmen: 192 Enthaltungen: 60 Ungültige: 0 Berlin, den 10. Mai. 12 Beginn: 19:26 Ende: 19:29 Seite: 1 Seite: 1 CDU/CSU Ja Nein Enthaltung Ungült. Nicht abg.Name Ilse Aigner X Peter Altmaier X Peter Aumer X Dorothee Bär X Thomas Bareiß X Norbert Barthle X Günter Baumann X Ernst-Reinhard Beck (Reutlingen) X Manfred Behrens (Börde) X Veronika Bellmann X Dr. Christoph Bergner X Peter Beyer X Steffen Bilger X Clemens Binninger X Peter Bleser X Dr. Maria Böhmer X Wolfgang Börnsen (Bönstrup) X Wolfgang Bosbach X Norbert Brackmann X Klaus Brähmig X Michael Brand X Dr. Reinhard Brandl X Helmut Brandt X Dr. Ralf Brauksiepe X Dr. Helge Braun X Heike Brehmer X Ralph Brinkhaus X Cajus Caesar X Gitta Connemann X Alexander Dobrindt X Thomas Dörflinger X Marie-Luise Dött X Dr. Thomas Feist X Enak Ferlemann X Ingrid Fischbach X Hartwig Fischer (Göttingen) X Dirk Fischer (Hamburg) X Axel E. Fischer (Karlsruhe-Land) X Dr. Maria Flachsbarth X Klaus-Peter Flosbach X Herbert Frankenhauser X Dr. Hans-Peter Friedrich (Hof) X Michael Frieser X Erich G. -

21.9 Parlamentarische Versammlung Der NATO (NATO PV) 24.10.2016

DHB Kapitel 21.9 Parlamentarische Versammlung der NATO (NATO PV) 24.10.2016 21.9 Parlamentarische Versammlung der NATO (NATO PV) Stand: 1.5.2016 Im Jahr 1955 erstmals als eine Konferenz bestehend aus Parlamentariern der NATO- Mitgliedstaaten zusammengetreten, hat sich die Versammlung im Laufe der Jahre zu einem euroatlantischen Parlament entwickelt, in dem Parlamentarier aus Europa und Nordamerika über Fragen und Probleme diskutieren, die die Nordatlantische Allianz betreffen. Die Versammlung erarbeitet zu allen das Bündnis betreffenden Fragen Berichte, Empfehlungen und Entschließungen. Diese werden auf Plenarsitzungen verabschiedet und richten sich an die Regierungen der NATO-Mitgliedstaaten und an den Nordatlantikrat. Der Generalsekretär der NATO und der Nordatlantikrat erstatten der Versammlung regelmäßig Bericht über die Arbeit und die aktuellen Aufgaben der Allianz. Aufgaben der Versammlung sind die Förderung der Kooperation in der Sicherheits- und Verteidigungspolitik, die Vermittlung von NATO-Entscheidungen auf die Ebene nationaler Politik und die Stärkung der transatlantischen Solidarität. Die Versammlung hat das Ziel, als Bindeglied zwischen den nationalen Parlamenten und der NATO zu wirken. 257 Parlamentarier aus 28 Mitgliedstaaten der NATO gehören der Versammlung als Vollmitglieder an. Hinzu kommen assoziierte Mitglieder, Partner und Beobachter. Die deutsche Delegation in der Versammlung besteht aus zwölf Abgeordneten des Deutschen Bundestages und sechs Mitgliedern des Bundesrates. Die Plenarsitzungen der Versammlung finden zweimal jährlich in Form einer Frühjahrs- und einer Jahrestagung im Wechsel in den Mitgliedsländern oder assoziierten Mitgliedsländern des Bündnisses statt. Die fünf Fachausschüsse und mehrere Sondergremien der Versammlung, die sich mit speziellen inhaltlichen Schwerpunkten beschäftigen, tagen während der Jahrestagungen sowie mehrmals jährlich in unterschiedlichen Mitgliedstaaten. Im Folgenden sind die seit der 13. -

Fraktion Intern Nr. 3/2017

fraktion intern* INFORMATIONSDIENST DER SPD-BUNDESTAGSFRAKTION www.spdfraktion.de nr. 03 . 12.07.2017 *Inhalt ................................................................................................................................................................................ 02 Völlige Gleichstellung: Ehe für alle gilt! 10 Mehr Transparenz über Sponsoring 03 Editorial bei Parteien 03 Bund investiert in Schulsanierung 11 Kinderehen werden verboten 04 Betriebsrente für mehr Beschäftigte 11 Paragraph 103 „Majestätsbeleidigung“ 05 Ab 2025: Gleiche Renten in Ost und West wird abgeschafft 05 Krankheit oder Unfall sollen nicht 12 Damit auch Mieter etwas von der arm machen Energiewende haben 06 Neuordnung der 12 Kosten der Energiewende Bund-Länder-Finanzbeziehungen gerechter verteilen 07 Privatisierung der Autobahnen verhindert 13 Pflegeausbildung wird reformiert 08 Einbruchdiebstahl 13 Bessere Pflege in Krankenhäusern soll effektiver bekämpft werden 14 24. Betriebs- und Personalrätekonferenz 08 Rechtssicherheit für WLAN-Hotspots 14 Afrika braucht nachhaltige Entwicklung 09 Rechtsdurchsetzung 15 33 SPD-Abgeordnete in sozialen Netzwerken wird verbessert verabschieden sich aus dem Bundestag 10 Keine staatliche Finanzierung 16 Verschiedenes für verfassungsfeindliche Parteien Mehr Informationen gibt es hier: www.spdfraktion.de www.spdfraktion.de/facebook www.spdfraktion.de/googleplus www.spdfraktion.de/twitter www.spdfraktion.de/youtube www.spdfraktion.de/flickr fraktion intern nr. 03 · 12.07.17 · rechtspolitik Völlige Gleichstellung: Ehe -

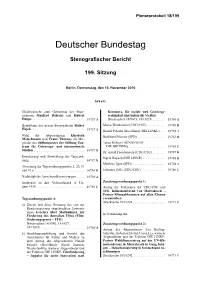

Plenarprotokoll 17/89

Plenarprotokoll 17/89 Deutscher Bundestag Stenografischer Bericht 89. Sitzung Berlin, Mittwoch, den 9. Februar 2011 Inhalt: Erweiterung und Abwicklung der Tagesord- Dr. Philipp Rösler, Bundesminister nung . 9963 A BMG . 9979 C Priska Hinz (Herborn) (BÜNDNIS 90/ DIE GRÜNEN) . Zusatztagesordnungspunkt 1: 9979 C Vereinbarte Debatte: zur Entwicklung in Dr. Philipp Rösler, Bundesminister BMG . Ägypten . 9963 B 9979 C Dr. Guido Westerwelle, Bundesminister Jens Ackermann (FDP) . 9979 D AA . 9963 B Dr. Philipp Rösler, Bundesminister Dr. Rolf Mützenich (SPD) . 9965 A BMG . 9980 A Volker Kauder (CDU/CSU) . 9966 C Britta Haßelmann (BÜNDNIS 90/ DIE GRÜNEN) . 9980 B Jan van Aken (DIE LINKE) . 9968 A Dr. Philipp Rösler, Bundesminister Kerstin Müller (Köln) (BÜNDNIS 90/ BMG . 9980 B DIE GRÜNEN) . 9969 D Dr. Ernst Dieter Rossmann (SPD) . 9980 D Dr. Rainer Stinner (FDP) . 9971 D Dr. Philipp Rösler, Bundesminister Dr. Andreas Schockenhoff (CDU/CSU) . 9973 A BMG . 9981 A Heidemarie Wieczorek-Zeul (SPD) . 9974 A Rudolf Henke (CDU/CSU) . 9981 A Holger Haibach (CDU/CSU) . 9975 D Dr. Philipp Rösler, Bundesminister Thomas Silberhorn (CDU/CSU) . 9977 A BMG . 9981 B Dr. Petra Sitte (DIE LINKE) . 9981 B Tagesordnungspunkt 1: Dr. Philipp Rösler, Bundesminister BMG . 9981 C Befragung der Bundesregierung: Vierter Er- fahrungsbericht der Bundesregierung über Priska Hinz (Herborn) (BÜNDNIS 90/ die Durchführung des Stammzellgesetzes; DIE GRÜNEN) . 9981 D sonstige Fragen zur Kabinettsitzung . 9978 B Dr. Philipp Rösler, Bundesminister Dr. Philipp Rösler, Bundesminister BMG . 9981 D BMG . 9978 B Dr. Ernst Dieter Rossmann (SPD) . 9982 A Dr. Petra Sitte (DIE LINKE) . 9978 D Dr. Philipp Rösler, Bundesminister Dr. Philipp Rösler, Bundesminister BMG . 9982 A BMG . -

Plenarprotokoll 18/199

Plenarprotokoll 18/199 Deutscher Bundestag Stenografischer Bericht 199. Sitzung Berlin, Donnerstag, den 10. November 2016 Inhalt: Glückwünsche zum Geburtstag der Abge- Kommerz, für soziale und Genderge- ordneten Manfred Behrens und Hubert rechtigkeit und kulturelle Vielfalt Hüppe .............................. 19757 A Drucksachen 18/8073, 18/10218 ....... 19760 A Begrüßung des neuen Abgeordneten Rainer Marco Wanderwitz (CDU/CSU) .......... 19760 B Hajek .............................. 19757 A Harald Petzold (Havelland) (DIE LINKE) .. 19762 A Wahl der Abgeordneten Elisabeth Burkhard Blienert (SPD) ................ 19763 B Motschmann und Franz Thönnes als Mit- glieder des Stiftungsrates der Stiftung Zen- Tabea Rößner (BÜNDNIS 90/ trum für Osteuropa- und internationale DIE GRÜNEN) ..................... 19765 C Studien ............................. 19757 B Dr. Astrid Freudenstein (CDU/CSU) ...... 19767 B Erweiterung und Abwicklung der Tagesord- Sigrid Hupach (DIE LINKE) ............ 19768 B nung. 19757 B Matthias Ilgen (SPD) .................. 19769 A Absetzung der Tagesordnungspunkte 5, 20, 31 und 41 a ............................. 19758 D Johannes Selle (CDU/CSU) ............. 19769 C Nachträgliche Ausschussüberweisungen ... 19759 A Gedenken an den Volksaufstand in Un- Zusatztagesordnungspunkt 1: garn 1956 ........................... 19759 C Antrag der Fraktionen der CDU/CSU und SPD: Klimakonferenz von Marrakesch – Pariser Klimaabkommen auf allen Ebenen Tagesordnungspunkt 4: vorantreiben Drucksache 18/10238 .................. 19771 C a) -

PROGRAMM.Doc

DEUTSCHER BUNDESTAG 11011 Berlin, den 2. April 2007 Delegation Platz der Republik 1 der Bundesrepublik Deutschland in der Parlamentarischen Versammlung Dienstgebäude: Tel.: (030) 227-33061/-33733 der OSZE Luisenstr. 32-34 Fax: (030) 227-36959/-36414 e-mail: - Sekretariat - [email protected] Referat WI 2 Bearbeiter/in: VA’e Martina Koerbel Q:\OSZE PV\16. Wahlperiode\2007\2007-02 Wintertagung Wien\PROGRAMM.doc SECHSTE WINTERTAGUNG DER PARLAMENTARISCHEN VERSAMMLUNG DER OSZE WIEN, 22. bis 23. FEBRUAR 2007 Programm (Stand: 2. April 2007) Zur Information: Alle Sitzungen des Ständigen Ausschusses und der drei Allgemeinen Ausschüsse finden im Kongress-Zentrum der Hofburg in Wien statt. Unterkunft der Delegation im Hotel: Vienna Marriott Hotel (alle Delegierten außer Abg. Willy Wimmer) Bestätigungs-Nr. 80 93 26 23, German delegation/NTBA Parkring 12a, 1010 Wien Frau Michele Zemansky (Reservation Sales Agent), Tel.: +43 1 515 18-53, Fax: +43 1 515 18-6722 Hotel „König von Ungarn“ (nur Abg. Willy Wimmer) Reservierungs-Nr. 3692 Schulerstr. 10, 1010 Wien Tel.: +43 1 515 84-0, Fax: +43 1 515-848 2 Teilnehmer: Mitglieder der deutschen Delegation in der OSZE PV: Abg. Willy Wimmer (CDU/CSU) Ordentliches Delegationsmitglied Amtierender Leiter der Delegation Abg. Doris Barnett (SPD) Ordentliches Delegationsmitglied Abg. Marieluise Beck (Bü90/Grü) Ordentliches Delegationsmitglied Abg. Uwe Beckmeyer (SPD) Stellvertretendes Delegationsmitglied Abg. Michael Link (FDP) Ordentliches Delegationsmitglied Abg. Hans Raidel (CDU/CSU) Ordentliches Delegationsmitglied Abg. Kurt J. Rossmanith (CDU/CSU) Stellvertretendes Delegationsmitglied Abg. Hedi Wegener (SPD) Ordentliches Delegationsmitglied Abg. Prof. Gert Weisskirchen (SPD) Ordentliches Delegationsmitglied Sonderbeauftragter der OSZE zur Bekämpfung des Antisemitismus Abg. Karl-Georg Wellmann (CDU/CSU) Ordentliches Delegationsmitglied Abg. -

Bundestagswahl 2013 Im Land Bremen: Endgültiges Ergebnis Steht Fest

Der Landeswahlleiter Pressemitteilung vom 2. Oktober 2013 Bundestagswahl 2013 im Land Bremen: Endgültiges Ergebnis steht fest BREMEN – Nachdem der Kreiswahlausschuss am 30. September 2013 das end- gültige Ergebnis für die Wahlkreise 54 und 55 und der Landeswahlaus- schuss am 2. Oktober 2013 für die Freie Hansestadt Bremen festgestellt haben, verkünden Landeswahlleiter Jürgen Wayand und Kreiswahlleiterin Carola Janssen das endgültige Ergebnis (siehe Tabelle). Zwischen dem in der Wahlnacht festgestellten vorläufigen und dem end- gültigen Ergebnis haben sich lediglich kleinere Veränderungen erge- ben. Der Kreiswahlausschuss hat bereits die beiden gewählten Wahlkreisab- geordneten festgestellt. Die übrigen, über die Landeslisten der Par- teien gewählten Bremer Abgeordneten stellt der Bundeswahlausschuss am 9. Oktober 2013 fest. Die neu gewählten Abgeordneten erwerben ihre Mitgliedschaft im Bun- destag mit dessen erster Sitzung. Weitere Auskünfte erteilt: Jan Morgenstern Telefon: (0421) 361 4159 E-Mail: [email protected] Herausgeber Kontakt Anschrift © Der Landeswahlleiter für Bre- Telefon: (0421) 361 4159 An der Weide 14 – 16 men Telefax: (0421) 361 2278 28195 Bremen Verbreitung mit Quellenangabe E-Mail: landeswahllei- erwünscht [email protected] www.wahlen.bremen.de Endgültiges Ergebnis der Wahl zum 18. Deutschen Bundestag am 22. September 2013 im Land Bremen Wahlkreis 54 Wahlkreis 55 Land Gegenstand der Nachweisung Bremen I Bremen II - Bremerhaven Freie Hansestadt Bremen Anzahl % Anzahl % Anzahl % Wahlberechtigte 256 547 x 227 276 x 483 823 x Wähler / Wahlbeteiligung 184 512 71,9 148 510 65,3 333 022 68,8 Ungültige Erststimmen 2 128 1,2 2 083 1,4 4 211 1,3 Gültige Erststimmen 182 384 98,8 146 427 98,6 328 811 98,7 davon entfielen auf die Bewerberin/den Bewerber 1 Sozialdemokratische Partei Deutschlands (SPD) 69 161 37,9 64 276 43,9 133 437 40,6 Name des Bewerbers/der Bewerberin Dr. -

227. Am: Donnerstag, 18. Juni 2009 Namentliche Abstimmung Nr.: 3

Beginn: 16:38 Ende: 16:40 227. Sitzung des Deutschen Bundestages am: Donnerstag, 18. Juni 2009 Namentliche Abstimmung Nr.: 3 zum Thema: Gesetzentwurf der Abgeordneten Wolfgang Bosbach, René Röspel, Katrin Göring-Eckardt, und weiterer Abgeordneter - Entwurf eines Gesetzes zur Verankerung der Patienenverfügung im Betreuungsrecht (Patientenverfügungsgesetz - PatVerfG); Drsn.: 16/11360 und 16/13314 Endgültiges Ergebnis: Abgegebene Stimmen insgesamt: 566 nicht abgegebene-Stimmen: 46 Ja-Stimmen: 220 Nein-Stimmen: 344 Enthaltungen: 2 ungültige: 0 Berlin, den 18. Jun. 09 Seite: 1 CDU/CSU Name Ja Nein Enthaltung Ungült. Nicht abg. Ulrich Adam X Ilse Aigner X Peter Albach X Peter Altmaier X Dorothee Bär X Thomas Bareiß X Norbert Barthle X Dr. Wolf Bauer X Günter Baumann X Ernst-Reinhard Beck (Reutlingen) X Veronika Bellmann X Dr. Christoph Bergner X Otto Bernhardt X Clemens Binninger X Renate Blank X Peter Bleser X Antje Blumenthal X Dr. Maria Böhmer X Jochen Borchert X Wolfgang Börnsen (Bönstrup) X Wolfgang Bosbach X Klaus Brähmig X Michael Brand X Helmut Brandt X Dr. Ralf Brauksiepe X Monika Brüning X Georg Brunnhuber X Cajus Caesar X Gitta Connemann X Leo Dautzenberg X Hubert Deittert X Alexander Dobrindt X Thomas Dörflinger X Marie-Luise Dött X Maria Eichhorn X Dr. Stephan Eisel X Anke Eymer (Lübeck) X Ilse Falk X Dr. Hans Georg Faust X Enak Ferlemann X Ingrid Fischbach X Hartwig Fischer (Göttingen) X Dirk Fischer (Hamburg) X Axel E. Fischer (Karlsruhe-Land) X Dr. Maria Flachsbarth X Klaus-Peter Flosbach X Herbert Frankenhauser X Dr. Hans-Peter Friedrich (Hof) X Erich G. Fritz X Jochen-Konrad Fromme X Dr. -

Plenarprotokoll 17/126

Plenarprotokoll 17/126 Deutscher Bundestag Stenografischer Bericht 126. Sitzung Berlin, Mittwoch, den 21. September 2011 Inhalt: Erweiterung der Tagesordnung . 14789 A Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14793 A Streichung der Tagesordnungspunkte 13 und 16 14789 C Nicole Maisch (BÜNDNIS 90/ DIE GRÜNEN) . 14793 A Tagesordnungspunkt 1: Dr. Gerd Müller, Parl. Staatssekretär Befragung der Bundesregierung: Waldstra- BMELV . 14793 B tegie 2020 – Internationales Jahr der Wäl- Georg Schirmbeck (CDU/CSU) . 14793 B der 2011 . 14789 C Dr. Gerd Müller, Parl. Staatssekretär Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14793 B BMELV . 14789 D Ulrich Kelber (SPD) . 14793 C Cajus Caesar (CDU/CSU) . 14790 D Dr. Gerd Müller, Parl. Staatssekretär Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14793 D BMELV . 14791 A Hartwig Fischer (Göttingen) (CDU/CSU) . 14793 D Cornelia Behm (BÜNDNIS 90/ DIE GRÜNEN) . 14791 A Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14794 A Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14791 B Alexander Süßmair (DIE LINKE) . 14794 A Dr. Christel Happach-Kasan (FDP) . 14791 C Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14794 B Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14791 D Cajus Caesar (CDU/CSU) . 14794 C Petra Crone (SPD) . 14792 A Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14794 C Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14792 A Harald Ebner (BÜNDNIS 90/ DIE GRÜNEN) . 14794 D Franz-Josef Holzenkamp (CDU/CSU) . 14792 B Dr. Gerd Müller, Parl. Staatssekretär Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14795 A BMELV . 14792 B Petra Crone (SPD) . 14795 B Dr. Kirsten Tackmann (DIE LINKE) . 14792 C Dr. Gerd Müller, Parl. Staatssekretär Dr. Gerd Müller, Parl. Staatssekretär BMELV . 14795 B BMELV . -

Deutscher Bundestag

Plenarprotokoll 16/105 Deutscher Bundestag Stenografischer Bericht 105. Sitzung Berlin, Donnerstag, den 21. Juni 2007 Inhalt: Glückwünsche zum Geburtstag des Abgeord- d) Beschlussempfehlung und Bericht des neten Wolfgang Zöller . 10701 A Ausschusses für Bildung, Forschung und Technikfolgenabschätzung Benennung des Abgeordneten Uwe Schummer in den Stiftungsrat der Stiftung – zu dem Antrag der Abgeordneten Uwe CAESAR . 10701 B Schummer, Ilse Aigner, Michael Kretschmer, weiterer Abgeordneter Erweiterung und Abwicklung der Tagesord- und der Fraktion der CDU/CSU sowie nung . 10701 B der Abgeordneten Willi Brase, Jörg Tauss, Nicolette Kressl, weiterer Ab- Absetzung der Tagesordnungspunkte 12, 16 b geordneter und der Fraktion der SPD: und 29 . 10702 A Weiterentwicklung der europäi- schen Berufsbildungspolitik – zu dem Antrag der Abgeordneten Tagesordnungspunkt 3: Priska Hinz (Herborn), Grietje Bettin, Ekin Deligöz, weiterer Abgeordneter a) Antrag der Abgeordneten Uwe und der Fraktion des BÜNDNIS- Schummer, Ilse Aigner, Michael SES 90/DIE GRÜNEN: Den Europäi- Kretschmer, weiterer Abgeordneter und schen Bildungsraum weiter gestal- der Fraktion der CDU/CSU sowie der Ab- ten – Transparenz und Durchlässig- geordneten Willi Brase, Nicolette Kressl, keit durch einen Europäischen Jörg Tauss, weiterer Abgeordneter und der Qualifikationsrahmen stärken Fraktion der SPD: Junge Menschen för- – zu dem Antrag der Abgeordneten dern – Ausbildung schaffen und Quali- Cornelia Hirsch, Dr. Petra Sitte, Volker fizierung sichern Schneider (Saarbrücken), weiterer Ab- (Drucksache 16/5730) . 10702 A geordneter und der Fraktion der LIN- KEN: Anforderungen an die Gestal- b) Antrag der Abgeordneten Priska Hinz tung eines europäischen und eines (Herborn), Britta Haßelmann, Brigitte nationalen Qualifikationsrahmens Pothmer, Josef Philip Winkler und der Fraktion des BÜNDNISSES 90/DIE (Drucksachen 16/2996, 16/1063, 16/1127, GRÜNEN: Perspektiven schaffen – An- 16/5760) . -

Plenarprotokoll 16/33

Plenarprotokoll 16/33 Deutscher Bundestag Stenografischer Bericht 33. Sitzung Berlin, Freitag, den 7. April 2006 Inhalt: Tagesordnungspunkt 22: Wahl . 2778 A Beschlussempfehlung und Bericht des Aus- Ergebnis . 2783 A wärtigen Ausschusses zu dem Antrag der Petra Pau (DIE LINKE) . 2783 B Bundesregierung: Fortsetzung der Beteili- gung deutscher Streitkräfte an der Frie- densmission der Vereinten Nationen im Tagesordnungspunkt 34: Sudan (UNMIS) auf Grundlage der Reso- lution 1663 (2006) des Sicherheitsrates der Beschlussempfehlung und Bericht des Aus- schusses für Wahlprüfung, Immunität und Ge- Vereinten Nationen vom 24. März 2006 schäftsordnung zu dem Antrag der Abgeord- (Drucksachen 16/1052, 16/1148, 16/1177) . 2769 A neten Jens Ackermann, Dr. Karl Addicks, Gernot Erler, Staatsminister AA . 2769 C Christian Ahrendt und weiterer Abgeordneter: Einsetzung eines Untersuchungsaus- Dr. Rainer Stinner (FDP) . 2770 C schusses (Drucksachen 16/990, 16/1179) . 2781 A Eckart von Klaeden (CDU/CSU) . 2771 D Monika Knoche (DIE LINKE) . 2773 B Tagesordnungspunkt 24: Winfried Nachtwei (BÜNDNIS 90/ Zweite und dritte Beratung des von der Bun- DIE GRÜNEN) . 2774 B desregierung eingebrachten Entwurfs eines Gesetzes zur Neuregelung der Flugsiche- Monika Knoche (DIE LINKE) . 2775 C rung (Drucksachen 16/240, 16/1161, 16/1178) . 2781 C Winfried Nachtwei (BÜNDNIS 90/ Uwe Beckmeyer (SPD) . 2781 D DIE GRÜNEN) . 2775 D Horst Friedrich (Bayreuth) (FDP) . 2783 C Brunhilde Irber (SPD) . 2776 C Norbert Königshofen (CDU/CSU) . 2784 D Hans Raidel (CDU/CSU) . 2777 C Dorothee Menzner (DIE LINKE) . 2786 D Winfried Hermann (BÜNDNIS 90/ Namentliche Abstimmung . 2777 D DIE GRÜNEN) . 2787 D Ergebnis . 2778 D Ulrich Kasparick, Parl. Staatssekretär BMVBS . 2789 B Dorothee Menzner (DIE LINKE) . -

11. Nationale Maritime Konferenz

Dokumentation 11. Nationale Maritime Konferenz 22. und 23. Mai 2019 – Friedrichshafen Deutschland maritim global · smart · green Impressum Herausgeber Bundesministerium für Wirtschaft und Energie (BMWi) Öffentlichkeitsarbeit 11019 Berlin www.bmwi.de Stand Oktober 2019 Gestaltung PRpetuum GmbH, 80801 München Bildnachweis BILDKRAFTWERK S. 4, 10, 13, 15, 17, 21, 26, 38, 44, 49, 50, 58 BMWi / Susanne Eriksson / S. 5 Diese und weitere Broschüren erhalten Sie bei: Bundesministerium für Wirtschaft und Energie Referat Öffentlichkeitsarbeit E-Mail: [email protected] www.bmwi.de Zentraler Bestellservice: Telefon: 030 182722721 Bestellfax: 030 18102722721 Diese Publikation wird vom Bundesministerium für Wirtschaft und Energie im Rahmen der Öffentlichkeitsarbeit herausgegeben. Die Publi- kation wird kostenlos abgegeben und ist nicht zum Verkauf bestimmt. Sie darf weder von Parteien noch von Wahlwerbern oder Wahlhelfern während eines Wahlkampfes zum Zwecke der Wahlwerbung verwendet werden. Dies gilt für Bundestags-, Landtags- und Kommunalwahlen sowie für Wahlen zum Europäischen Parlament. Dokumentation 11. Nationale Maritime Konferenz 22. und 23. Mai 2019 – Friedrichshafen Deutschland maritim global · smart · green 3 Inhaltsverzeichnis Vorwort Norbert Brackmann, MdB . 5. Koordinator der Bundesregierung für die maritime Wirtschaft 11. Nationale Maritime Konferenz Konferenzprogramm ......................................................................................................................................................................................................................................................8