On Symmetry and Multiple-View Geometry: Structure, Pose, and Calibration from a Single Image

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Molecular Symmetry

Symmetry helps us understand molecular structure, some chemical properties, and characteristics of physical properties (spectroscopy). It is used with group theory to predict vibrational spectra for the identification of molecular shape, and as a tool for understanding electronic structure and bonding.

Symmetrical: implies the species possesses a number of indistinguishable configurations.

Group theory: the mathematical treatment of symmetry.

Symmetry operation: an operation performed on an object which leaves it in a configuration that is indistinguishable from, and superimposable on, the original configuration.

Symmetry elements: the points, lines, or planes with respect to which a symmetry operation is carried out.

| Element | Operation | Symbol |
| --- | --- | --- |
| Identity | Identity | E |
| Symmetry plane | Reflection in the plane | σ |
| Inversion center | Inversion of a point x,y,z to -x,-y,-z | i |
| Proper axis | Rotation by (360/n)° | Cn |
| Improper axis | 1. Rotation by (360/n)°; 2. Reflection in the plane perpendicular to the rotation axis | Sn |

Proper axes of rotation (Cn): rotation with respect to a line (the axis of rotation).

- Cn is a rotation of (360/n)°.
- C2 = 180° rotation, C3 = 120° rotation, C4 = 90° rotation, C5 = 72° rotation, C6 = 60° rotation…
- Each rotation brings you to a state indistinguishable from the original.

However, rotation by 90° about the same axis does not give back the identical molecule. Therefore H2O does NOT possess a C4 symmetry axis.

XeF4 is square planar. It has four different C2 axes. A C4 axis out of the page is called the principal axis because it has the largest n. By convention, the principal axis is in the z-direction.

Reflection through a plane of symmetry (mirror plane): if reflection of all parts of a molecule through a plane produces an indistinguishable configuration, the symmetry element is called a mirror plane or plane of symmetry.
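The claim that square-planar XeF4 has a C4 axis while H2O does not can be checked numerically. The following is a minimal sketch, not taken from the excerpt: the coordinates are idealised assumptions (unit Xe-F bonds, a rough bent geometry for water, principal axis along z), and `rotate_z` and `is_symmetry` are hypothetical helper names.

```python
import math

def rotate_z(point, n):
    """Apply C_n: rotate a 3D point by 360/n degrees about the z-axis."""
    angle = 2 * math.pi / n
    x, y, z = point
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle),
            z)

def is_symmetry(atoms, n, tol=1e-9):
    """True if C_n maps every atom onto an atom of the same element."""
    def close(p, q):
        return all(abs(a - b) < tol for a, b in zip(p, q))
    return all(
        any(el == el2 and close(rotate_z(pos, n), pos2) for el2, pos2 in atoms)
        for el, pos in atoms
    )

# Assumed idealised geometries, principal axis along z.
xef4 = [("Xe", (0, 0, 0)), ("F", (1, 0, 0)), ("F", (0, 1, 0)),
        ("F", (-1, 0, 0)), ("F", (0, -1, 0))]
h2o = [("O", (0, 0, 0)), ("H", (0.76, 0, -0.59)), ("H", (-0.76, 0, -0.59))]

print(is_symmetry(xef4, 4))  # C4 is a symmetry of the square-planar model
print(is_symmetry(h2o, 2))   # C2 is a symmetry of the bent model
print(is_symmetry(h2o, 4))   # C4 is not
```

The check only compares atom positions after rotation, so it says nothing about mirror planes or improper axes.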

Chapter 1 – Symmetry of Molecules – P. 1

1. Symmetry of Molecules

1.1 Symmetry Elements

- Symmetry operation: an operation that transforms a molecule to an equivalent position and orientation, i.e. after the operation every point of the molecule is coincident with an equivalent point.
- Symmetry element: a geometrical entity (line, plane or point) with respect to which one or more symmetry operations can be carried out.

In molecules there are only four types of symmetry elements or operations:

- Mirror planes: reflection with respect to a plane; notation: σ
- Center of inversion: inversion of all atom positions with respect to the inversion center; notation: i
- Proper axis: rotation by 2π/n with respect to the axis; notation: Cn
- Improper axis: rotation by 2π/n with respect to the axis, followed by reflection with respect to a plane perpendicular to the axis; notation: Sn

Formally, this classification can be further simplified by expressing the inversion i as an improper rotation S2 and the reflection σ as an improper rotation S1. Thus, the only symmetry elements in molecules are Cn and Sn.

Important: successive execution of two symmetry operations corresponds to another symmetry operation of the molecule. In order to make this statement a general rule, we require one more symmetry operation, the identity E. (1.1: Symmetry elements in CH4, successive execution of symmetry operations)

1.2 Systematic classification by symmetry groups

According to their inherent symmetry elements, molecules can be classified systematically in so-called symmetry groups. We use the so-called Schoenflies notation to name the groups, which is the usual notation for molecules.
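The statement that inversion is S2 and reflection is S1 follows directly from the definition of an improper rotation. A small sketch, assuming the rotation axis is z and the reflection plane is the xy-plane (the function name `improper_rotation` is illustrative only):

```python
import math

def improper_rotation(point, n):
    """S_n: rotate by 2*pi/n about z, then reflect through the xy-plane."""
    angle = 2 * math.pi / n
    x, y, z = point
    xr = x * math.cos(angle) - y * math.sin(angle)
    yr = x * math.sin(angle) + y * math.cos(angle)
    return (xr, yr, -z)  # the reflection flips the sign of z

p = (0.3, -1.2, 2.0)
s1 = improper_rotation(p, 1)  # full turn, then reflection: acts like sigma
s2 = improper_rotation(p, 2)  # half turn, then reflection: acts like i

print(s1)  # approximately (x, y, -z)
print(s2)  # approximately (-x, -y, -z)
```

Floating-point rounding leaves tiny residuals, so comparisons should use a tolerance rather than exact equality.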

Symmetry of Graphs. Circles

What is Symmetry? Take some geometrical object. It is called symmetric if some geometric move preserves it.

Today we will be interested in reflection across the x-axis, reflection across the y-axis and reflection across the origin.

- Reflection across the y-axis sends (x,y) to (-x,y).
- Reflection across the x-axis sends (x,y) to (x,-y).
- Reflection across the origin (0,0) sends (x,y) to (-x,-y).

Examples with Symmetry
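The three reflections can be written as point maps and used to test whether a curve is preserved. This is an illustrative sketch: the unit circle and the cubic y = x³ are examples chosen here, not taken from the slides, and `preserved` is a hypothetical helper that only checks a finite sample of points.

```python
import math

def reflect_y(p):
    """Reflection across the y-axis: (x, y) -> (-x, y)."""
    return (-p[0], p[1])

def reflect_x(p):
    """Reflection across the x-axis: (x, y) -> (x, -y)."""
    return (p[0], -p[1])

def reflect_origin(p):
    """Reflection across the origin: (x, y) -> (-x, -y)."""
    return (-p[0], -p[1])

def preserved(on_curve, points, move):
    """True if every sampled point still lies on the curve after the move."""
    return all(on_curve(*move(p)) for p in points)

def on_circle(x, y):
    return abs(x * x + y * y - 1) < 1e-9

def on_cubic(x, y):
    return abs(y - x ** 3) < 1e-9

circle_pts = [(math.cos(t), math.sin(t)) for t in (0.1, 0.7, 2.0, 4.0)]
cubic_pts = [(x, x ** 3) for x in (-2, -1, 0.5, 1, 2)]

print(preserved(on_circle, circle_pts, reflect_y))      # True
print(preserved(on_cubic, cubic_pts, reflect_y))        # False
print(preserved(on_cubic, cubic_pts, reflect_origin))   # True
```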

Geometry Topics

GEOMETRY TOPICS

Transformations: Rotation (turn), Reflection (flip), Translation (slide). After any of these transformations, the shape still has the same size, so the shapes are congruent.

Rotations: rotation means turning around a center. The distance from the center to any point on the shape stays the same. The rotated shape has the same size as the original shape. Here a triangle is rotated around the point marked with a "+".

Translations: in geometry, "translation" simply means moving. The translated shape has the same size as the original shape. To translate a shape, every point of the shape must move the same distance in the same direction.

Reflections: a reflection is a flip over a line. In a reflection every point is the same distance from a central line as its image. The reflection has the same size as the original image. The central line is called the Mirror Line. Mirror Lines can be in any direction.

Reflection Symmetry: Reflection Symmetry (sometimes called Line Symmetry or Mirror Symmetry) is easy to see, because one half is the reflection of the other half. Here my dog "Flame" has her face made perfectly symmetrical with a bit of photo magic. The white line down the center is the Line of Symmetry (also called the "Mirror Line"). The reflection in this lake also has symmetry, but in this case:

- The Line of Symmetry runs left-to-right (horizontally).
- It is not perfect symmetry, because of the lake surface.

Line of Symmetry: the Line of Symmetry (also called the Mirror Line) can be in any direction. But there are four common directions, and they are named for the line they make on the standard XY graph.

Symmetry in Chemistry - Group Theory

Group Theory is one of the most powerful mathematical tools used in Quantum Chemistry and Spectroscopy. It allows the user to predict, interpret, rationalize, and often simplify complex theory and data. At its heart is the fact that the set of operations associated with the symmetry elements of a molecule constitutes a mathematical set called a Group. This allows the application of the mathematical theorems associated with such groups to the symmetry operations.

All symmetry operations associated with isolated molecules can be characterized as rotations:

(a) Proper rotations: $C_n^k$, $k = 1, \dots, n$. When $k = n$, $C_n^k = E$, the identity operation. Here $n$ indicates a rotation of $360/n$ degrees, where $n = 1, \dots$

(b) Improper rotations: $S_n^k$, $k = 1, \dots, n$. When $k = 1$, $n = 1$: $S_n^k = \sigma$, the reflection operation. When $k = 1$, $n = 2$: $S_n^k = i$, the inversion operation.

In general practice we distinguish five types of operation:

(i) $E$, identity operation
(ii) $C_n^k$, proper rotation about an axis
(iii) $\sigma$, reflection through a plane
(iv) $i$, inversion through a center
(v) $S_n^k$, rotation about an axis followed by reflection through a plane perpendicular to that axis

Each of these symmetry operations is associated with a symmetry element, which is a point, a line, or a plane about which the operation is performed such that the molecule's orientation and position before and after the operation are indistinguishable. The symmetry elements associated with a molecule are:

(i) A proper axis of rotation: $C_n$ where $n = 1, \dots$ This implies n-fold rotational symmetry about the axis.

Symmetry Groups

Symmetry plays an essential role in particle theory. If a theory is invariant under transformations by a symmetry group, one obtains a conservation law and quantum numbers. For example, invariance under rotation in space leads to angular momentum conservation and to the quantum numbers j, mj for the total angular momentum.

Gauge symmetries: these are local symmetries that act differently at each space-time point x^µ = (t, x). They create particularly stringent constraints on the structure of a theory. For example, gauge symmetry automatically determines the interaction between particles by introducing bosons that mediate the interaction. All current models of elementary particles incorporate gauge symmetry. (Details on p. 4)

Groups: a group G consists of elements a, inverse elements a⁻¹, a unit element 1, and a multiplication rule "⋅" with the properties:

1. If a, b are in G, then c = a ⋅ b is in G.
2. a ⋅ (b ⋅ c) = (a ⋅ b) ⋅ c
3. a ⋅ 1 = 1 ⋅ a = a
4. a ⋅ a⁻¹ = a⁻¹ ⋅ a = 1

The group is called Abelian if it obeys the additional commutation law:

5. a ⋅ b = b ⋅ a

Product of groups: the product group G×H of two groups G, H is defined by pairs of elements with the multiplication rule (g1, h1) ⋅ (g2, h2) = (g1 ⋅ g2, h1 ⋅ h2). A group is called simple if it cannot be decomposed into a product of two other groups. (Compare a prime number as analog.) If a group is not simple, consider the factor groups.

Examples: n×n matrices of complex numbers with matrix multiplication.
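The group properties and the product rule can be checked by brute force on small finite examples. The sketch below is an illustration under stated assumptions: the cyclic groups Z2 and Z3 (addition modulo 2 and 3) are examples chosen here, and `is_group` and `product_group` are hypothetical helper names.

```python
from itertools import product

def is_group(elements, op):
    """Brute-force check of closure, associativity, unit element and inverses."""
    elements = list(elements)
    closed = all(op(a, b) in elements for a in elements for b in elements)
    assoc = all(op(a, op(b, c)) == op(op(a, b), c)
                for a in elements for b in elements for c in elements)
    units = [e for e in elements
             if all(op(e, a) == a and op(a, e) == a for a in elements)]
    if not (closed and assoc and units):
        return False
    e = units[0]
    return all(any(op(a, b) == e and op(b, a) == e for b in elements)
               for a in elements)

def product_group(G, op_g, H, op_h):
    """Pairs (g, h) with the rule (g1, h1).(g2, h2) = (g1.g2, h1.h2)."""
    elements = list(product(G, H))
    def op(p, q):
        return (op_g(p[0], q[0]), op_h(p[1], q[1]))
    return elements, op

def add_mod2(a, b):
    return (a + b) % 2

def add_mod3(a, b):
    return (a + b) % 3

def mul_mod3(a, b):
    return (a * b) % 3

pairs, pair_op = product_group(range(2), add_mod2, range(3), add_mod3)

print(is_group(pairs, pair_op))      # Z2 x Z3 satisfies all four properties
print(is_group(range(3), mul_mod3))  # fails: 0 has no inverse
```

The associativity check is cubic in the number of elements, so this approach is only practical for very small sets.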

Symmetry in 2D

Symmetry in 2D. 4/24/2013, L. Viciu | AC II | Symmetry in 2D

Outlook

- Symmetry: definitions, unit cell choice
- Symmetry operations in 2D
- Symmetry combinations
- Plane point groups
- Plane (space) groups
- Finding the plane group: examples

Symmetry: symmetry is the preservation of form and configuration across a point, a line, or a plane. The techniques that are used to "take a shape and match it exactly to another" are called transformations. Inorganic crystals usually have a shape which reflects their internal symmetry.

Lattice = an array of points repeating periodically in space (2D or 3D).

Motif/Basis = the repeating unit of a pattern (e.g. an atom, a group of atoms, a molecule, etc.).

Unit cell = the smallest repetitive volume of the crystal, which when stacked together with replication reproduces the whole crystal.

Unit cell convention: by convention the unit cell is chosen so that it is as small as possible while reflecting the full symmetry of the lattice. (b) to (e) correct unit cell: choice of origin is arbitrary but the cells should be identical; (f) incorrect unit cell: not permissible to isolate unit cells from each other (1 and 2 are not identical). A. West: Solid state chemistry and its applications

Some Definitions

- Symmetry element: an imaginary geometric entity (line, point, plane) about which a symmetry operation takes place
- Symmetry operation: a permutation of atoms such that an object (molecule or crystal) is transformed into a state indistinguishable from the starting state
- Invariant point: a point that maps onto itself
- Asymmetric unit: the minimum unit from which the structure can be generated by symmetry operations

39 Symmetry of Plane Figures

39 Symmetry of Plane Figures

In this section, we are interested in the symmetric properties of plane figures. By a symmetry of a plane figure we mean a motion of the plane that moves the figure so that it falls back on itself. The two types of symmetry that we discuss are:

Reflection Symmetry: a plane figure is symmetric about a line if it is its own image when flipped across the line. We call the reflection line the line of symmetry. In other words, a figure has a line symmetry if it can be folded along the line so that one half of the figure matches the other half. Reflection symmetry is also known as mirror symmetry, since the line of symmetry acts like a double-sided mirror. Points on each side are reflected to the opposite side. Many plane figures have several line symmetries. Figure 39.1 shows some of the plane figures with their line symmetries shown dashed.

Figure 39.1

Remark 39.1: Using reflection symmetry we can establish properties for some plane figures. For example, since an isosceles triangle has one line of symmetry, the base angles, i.e. the angles opposite the congruent sides, are congruent. A similar property holds for isosceles trapezoids.

Rotational Symmetry: a plane figure has rotational symmetry if and only if it can be rotated more than 0° and less than or equal to 360° about a fixed point called the center of rotation so that its image coincides with its original position. Figure 39.2 shows the four different rotations of a square.
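Lines of symmetry can be found numerically for a polygon centred at the origin by reflecting its vertices and checking that they land on vertices again. The following is a rough sketch under stated assumptions: only lines through the origin at whole-degree angles are tested, the square and rectangle coordinates are examples chosen here, and both function names are hypothetical.

```python
import math

def reflect_across_line(point, angle_deg):
    """Reflect a point across the line through the origin at angle_deg to the x-axis."""
    t = math.radians(2 * angle_deg)
    x, y = point
    return (x * math.cos(t) + y * math.sin(t),
            x * math.sin(t) - y * math.cos(t))

def lines_of_symmetry(vertices, tol=1e-9):
    """Whole-degree angles in [0, 180) whose reflection maps the vertex set onto itself."""
    def is_vertex(p):
        return any(abs(p[0] - vx) < tol and abs(p[1] - vy) < tol
                   for vx, vy in vertices)
    return [a for a in range(180)
            if all(is_vertex(reflect_across_line(v, a)) for v in vertices)]

square = [(1, 1), (-1, 1), (-1, -1), (1, -1)]
rectangle = [(2, 1), (-2, 1), (-2, -1), (2, -1)]

print(lines_of_symmetry(square))     # [0, 45, 90, 135]
print(lines_of_symmetry(rectangle))  # [0, 90]
```

A figure whose symmetry lines fall at non-integer angles would need a finer angular step than this sketch uses.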

Finite Subgroups of the Group of 3D Rotations

FINITE SUBGROUPS OF THE 3D ROTATION GROUP

Student: Nathan Hayes. Mentor: Jacky Chong.

SYMMETRIES OF THE SPHERE

- Let's think about the rigid rotations of the sphere.
- Rotations can be composed together to form new rotations.
- Every rotation has an inverse rotation.
- There is a "non-rotation," or an identity rotation.
- For us, the distinct rotational symmetries of the sphere form a group, where each rotation is an element and our operation is composing our rotations.
- This group happens to be infinite, since you can continuously rotate the sphere.
- What if we wanted to study a smaller collection of symmetries, or in some sense a subgroup of our rotations?

THEOREM

- A finite subgroup of SO3 (the group of special rotations in 3 dimensions, or rotations in 3D space) is isomorphic to either a cyclic group, a dihedral group, or a rotational symmetry group of one of the platonic solids.
- These can be represented by the following solids: Cyclic, Dihedral, Tetrahedron, Cube, Dodecahedron.
- The rotational symmetries of the cube and octahedron are the same, as are those of the dodecahedron and icosahedron.

GROUP ACTIONS

- The interesting portion of study in group theory is not the study of groups, but the study of how they act on things.
- A group action is a form of mapping, where every element of a group G represents some permutation of a set X.
- For example, consider the group of permutations of the integers 1, 2, 3 acting on the numbers 1, 2, 3, 4. The permutation (1,2,3) -> (3,2,1) will swap 1 and 3.
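The group-action example can be made concrete with permutations stored as dictionaries. This is a minimal sketch with assumptions stated up front: permutations of 1, 2, 3 act on the set {1, 2, 3, 4} by leaving 4 fixed, and `act` and `compose` are hypothetical helper names.

```python
from itertools import permutations

def act(perm, x):
    """Apply a permutation (a dict) to x; points it does not mention stay fixed."""
    return perm.get(x, x)

def compose(g, h):
    """The permutation 'g after h'."""
    return {k: act(g, act(h, k)) for k in set(g) | set(h)}

# All six permutations of 1, 2, 3.
S3 = [dict(zip((1, 2, 3), image)) for image in permutations((1, 2, 3))]
X = [1, 2, 3, 4]

identity = {1: 1, 2: 2, 3: 3}
swap13 = {1: 3, 2: 2, 3: 1}  # (1,2,3) -> (3,2,1)

# The two action axioms: the identity fixes every point, and acting with
# a composition equals acting with h first and then with g.
identity_ok = all(act(identity, x) == x for x in X)
compatible = all(act(compose(g, h), x) == act(g, act(h, x))
                 for g in S3 for h in S3 for x in X)

print([act(swap13, x) for x in X])  # [3, 2, 1, 4]
print(identity_ok, compatible)      # True True
```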

Symmetry: Cut & Fold Square

Name: _____________________________ Symmetry Cut & Fold Shapes

Super Teacher Worksheets - www.superteacherworksheets.com

Symmetry: Cut & Fold Square. Cut out the square. There are 4 lines of symmetry on the square. Fold it on its lines of symmetry.

Symmetry: Cut & Fold Rectangle. Cut out the rectangle. Fold the rectangle to show the lines of symmetry. A rectangle only has two lines of symmetry.

Symmetry: Cut & Fold Heart. Cut out the heart. Fold the heart to show a line of symmetry. There is only one line of symmetry in this heart shape.

Symmetry: Cut & Fold Circle. Cut out the circle. Fold the circle to show lines of symmetry. How many lines of symmetry does a circle have? It actually has more than you can count. Any straight line that goes through the center is a line of symmetry.

Symmetry: Cut & Fold Octagon. Cut out the octagon. Fold the octagon to show lines of symmetry. How many are there?

Symmetry: Cut & Fold Shape. Cut out the shape. Fold the shape to show lines of symmetry. How many lines of symmetry does it have?

Symmetry: Cut & Fold Crescent. Cut out the crescent. Fold the shape to show lines of symmetry.

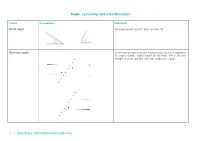

Angle, Symmetry and Transformation 1 | Numeracy and Mathematics Glossary

Angle, symmetry and transformation (Numeracy and mathematics glossary). Columns: Terms, Illustrations, Definition.

- Acute angle: An angle greater than 0° and less than 90°.
- Alternate angles: Where two straight lines are cut by a third, as in the diagrams, the angles d and e (also c and f) are alternate. Where the two straight lines are parallel, alternate angles are equal.
- Angle: An angle measures the amount of 'turning' between two straight lines that meet at a vertex (point). Angles are classified by their size, e.g. obtuse, acute, right angle, etc. They are measured in degrees (°) using a protractor.
- Axis: A fixed reference line from which locations, distances or angles are taken. Usually grids have an x axis and a y axis.
- Bearings: A bearing is used to represent the direction of one point relative to another point. It is the number of degrees in the angle measured in a clockwise direction from the north line. In this example, the bearing of NBA is 205°. Bearings are commonly used in ship navigation.
- Circumference: The distance around a circle (or other curved shape).
- Compass (in directions): An instrument containing a magnetised pointer which shows the direction of magnetic north and bearings from it. Used to help with finding location and directions.
- Compass points: Used to help with finding location and directions. North, South, East, West (N, S, E, W), North East (NE), South West (SW), North West (NW), South East (SE) as well as: NNE (north-north-east), ENE (east-north-east), ESE (east-south-east), SSE (south-south-east), SSW (south-south-west), WSW (west-south-west), WNW (west-north-west), NNW (north-north-west).
- Complementary angles: Two angles which add together to 90°.
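The glossary's definition of a bearing (degrees measured clockwise from the north line) translates into a short calculation. The sketch below assumes a flat grid with x pointing east and y pointing north; the function name `bearing` and the sample points are illustrative and do not come from the glossary.

```python
import math

def bearing(origin, target):
    """Bearing of target from origin, in degrees clockwise from north.

    Assumes a flat grid where x points east and y points north.
    """
    dx = target[0] - origin[0]
    dy = target[1] - origin[1]
    # atan2(dx, dy) measures the angle from the +y (north) axis, clockwise.
    return math.degrees(math.atan2(dx, dy)) % 360

print(bearing((0, 0), (0, 1)))    # due north
print(bearing((0, 0), (1, 0)))    # due east
print(bearing((0, 0), (-1, -1)))  # south-west
```

Swapping the usual argument order of `atan2` is what makes the angle run clockwise from north instead of counter-clockwise from east.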

Lecture 6: Introduction to Symmetry Operations

Crystallography: morphology, symmetry operations and crystal classification

Morphology: the study of external crystal form. A crystal is a regular geometric solid, bounded by smooth plane surfaces. Single crystals on the most basic level may be euhedral, subhedral or anhedral. Hedron (Greek: face); Eu and An (Greek: good and without); Sub (Latin: somewhat).

[Figure: euhedral garnet, euhedral to subhedral dolomite, anhedral garnet. Which is which in this sketch?]

External crystal form is an expression of internal order.

The Unit Cell: the smallest unit of a structure that can be indefinitely repeated to generate the whole structure. Irrespective of the external form (euhedral, subhedral, or anhedral), the properties and symmetry of every crystal can be condensed into the study of one single unit cell. Repetition of the unit cell creates a motif. In this example the unit cell is a cube. It is a constant. The arrangement and stacking differs between shapes. Elements of symmetry identified in the unit cell will be present in the crystal.

Elements without translation: mirror (reflection), center of symmetry (inversion), rotation. Glide is listed alongside them. These are all referred to as symmetry operations.

Translation: an operation (t) that generates a pattern at regular identical intervals. In 3-dimensional space the translations can be labelled x, y and z (or 1, 2 and 3), e.g. tx, tz.

Rotational symmetry is expressed as a whole number (n) between 1 and ∞. n refers to the number of times a motif is repeated during a complete 360° rotation.

[Figure: rotation axes with n = 1, 2, 3, 4, 6, ∞]

Rotation produces patterns where the original motif is RETAINED. 6 turns of rotation; each is 60°. Both original and rotation have the same "handedness".
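The remark that a rotated motif keeps its handedness can be illustrated with 2D transformation matrices: a rotation has determinant +1, while a mirror has determinant -1. This is a sketch added for illustration; the helper names are hypothetical and the mirror is assumed to lie along the x-axis.

```python
import math

def rotation_matrix(n):
    """2D matrix for one step of an n-fold rotation (360/n degrees)."""
    a = 2 * math.pi / n
    return [[math.cos(a), -math.sin(a)],
            [math.sin(a), math.cos(a)]]

def mirror_matrix():
    """2D matrix for reflection across the x-axis (assumed mirror line)."""
    return [[1, 0],
            [0, -1]]

def det2(m):
    """Determinant of a 2x2 matrix."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

step_angle = 360 / 6  # a 6-fold axis repeats the motif every 60 degrees

print(step_angle)                # 60.0
print(det2(rotation_matrix(6)))  # approximately +1: handedness retained
print(det2(mirror_matrix()))     # -1: handedness reversed
```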