Cryptography Basics

Recommended publications

Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber

Outline
• Chapter 19: Security (cont)
• A Method for Obtaining Digital Signatures and Public-Key Cryptosystems, Ronald L. Rivest, Adi Shamir, and Leonard M. Adleman. Communications of the ACM 21, 2 (Feb. 1978)
– RSA Algorithm
– First practical public key crypto system
• Authentication in Distributed Systems: Theory and Practice, Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber
– Butler Lampson (MSR): he was one of the designers of the SDS 940 time-sharing system, the Alto personal distributed computing system, the Xerox 9700 laser printer, two-phase commit protocols, the Autonet LAN, and several programming languages
– Martin Abadi (Bell Labs)
– Michael Burrows, Edward Wobber (DEC/Compaq/HP SRC)
Oct-21-03 CSE 542: Operating Systems

Encryption
• Properties of a good encryption technique:
– Relatively simple for authorized users to encrypt and decrypt data.
– The encryption scheme depends not on the secrecy of the algorithm but on a parameter of the algorithm called the encryption key.
– Extremely difficult for an intruder to determine the encryption key.

Strength
• The strength of a crypto system depends on the strength of its keys
• Computers get faster, so keys have to become harder to break to keep up
• If it takes more effort to break a code than the protected data is worth, it is okay
– Transferring money from my bank to my credit card and Citibank transferring billions of dollars with another bank should not have the same key strength

Encryption methods
• Symmetric cryptography
– Sender and receiver know the secret key (a priori)
• Fast encryption, but key exchange should happen outside the system
• Asymmetric cryptography
– Each person maintains two keys, public and private
• M ≡ PrivateKey(PublicKey(M))
• M ≡ PublicKey(PrivateKey(M))
– The public part is available to anyone; the private part is known only to its owner
– E.g. -
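The two identities on the asymmetric-cryptography slide, M ≡ PrivateKey(PublicKey(M)) and M ≡ PublicKey(PrivateKey(M)), can be checked numerically. The following is a minimal sketch, assuming textbook RSA with the toy parameters n = 61 × 53 = 3233, e = 17, d = 2753; these numbers and the function names are illustrative assumptions, not taken from the slides, and are far too small for real use.

```python
# Toy RSA key pair (illustrative assumption, not from the slides).
# N = 61 * 53, and 17 * 2753 = 46801 = 15 * 3120 + 1, so e*d ≡ 1 (mod 60*52).
N = 3233
PUBLIC_EXPONENT = 17
PRIVATE_EXPONENT = 2753


def public_key(m: int) -> int:
    """Apply the public-key operation: m^e mod N."""
    return pow(m, PUBLIC_EXPONENT, N)


def private_key(m: int) -> int:
    """Apply the private-key operation: m^d mod N."""
    return pow(m, PRIVATE_EXPONENT, N)


message = 65

# Encrypt with the public key, decrypt with the private key.
assert private_key(public_key(message)) == message

# Apply the private key first (as in signing), then the public key.
assert public_key(private_key(message)) == message
```

Both orders recover the message because the two exponentiations commute modulo N.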

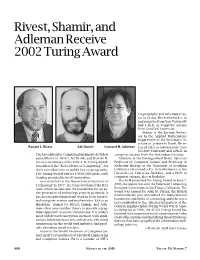

Rivest, Shamir, and Adleman Receive 2002 Turing Award, Volume 50

Rivest, Shamir, and Adleman Receive 2002 Turing Award

Ronald L. Rivest, Adi Shamir, Leonard M. Adleman

The Association for Computing Machinery (ACM) has named RONALD L. RIVEST, ADI SHAMIR, and LEONARD M. ADLEMAN as winners of the 2002 A. M. Turing Award, considered the “Nobel Prize of Computing”, for their contributions to public key cryptography. The Turing Award carries a $100,000 prize, with funding provided by Intel Corporation. As researchers at the Massachusetts Institute of Technology in 1977, the team developed the RSA code, which has become the foundation for an entire generation of technology security products. It has also inspired important work in both theoretical computer science and mathematics.

... Cryptography and Information Security Group. He received a B.A. in mathematics from Yale University and a Ph.D. in computer science from Stanford University. Shamir is the Borman Professor in the Applied Mathematics Department of the Weizmann Institute of Science in Israel. He received a B.S. in mathematics from Tel Aviv University and a Ph.D. in computer science from the Weizmann Institute. Adleman is the Distinguished Henry Salvatori Professor of Computer Science and Professor of Molecular Biology at the University of Southern California. He earned a B.S. in mathematics at the University of California, Berkeley, and a Ph.D. in computer science, also at Berkeley. The ACM presented the Turing Award on June 7, 2003, in conjunction with the Federated Computing Research Conference in San Diego, California. The award was named for Alan M. Turing, the British mathematician who articulated the mathematical foundation and limits of computing and who was a -

Cryptography: DH And

Key Exchange
Secure Software Systems, Fall 2018

Challenge – Exchanging Keys
$\text{Exchanges}_n = \frac{n(n-1)}{2} = \frac{6(6-1)}{2} = 15$
The more parties in communication, the more keys that need to be securely exchanged. Do we have to use out-of-band methods? (e.g., phone?)

Key Exchange
• Insecure communications channel
• Eve can see everything!
• Alice and Bob agree on a shared secret (“key”) that Eve doesn’t know
• Despite Eve seeing everything!
[Figure: Alice, Bob, and Eve]

Whitfield Diffie and Martin Hellman, “New directions in cryptography,” in IEEE Transactions on Information Theory, vol. 22, no. 6, Nov 1976. Proposed public key cryptography. Diffie-Hellman key exchange.

Diffie-Hellman Color Analogy
(1) It’s easy to mix two colors.
(2) Mixing two or more colors in a different order results in the same color.
(3) Mixing colors is one-way (impossible to determine which colors went in to produce the final result).
https://www.crypto101.io/

Diffie-Hellman Color Analogy
[Figure: Alice, Eve, and Bob each mixing colors]
(1) Start with public color ▇ – share across network
(2) Alice picks secret color ▇ and mixes it to get ▇
(3) Bob picks secret color ▇ and mixes it to get ▇

Diffie-Hellman Color Analogy
Eve can’t calculate ▇ !! (secret keys were never shared)
(4) Alice and Bob exchange their mixed colors (▇,▇)
(5) Eve will -
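The color-mixing steps above correspond to modular exponentiation in the actual Diffie-Hellman exchange: the public color is a pair (p, g), a secret color is a private exponent, and a mixed color is g raised to that exponent modulo p. The following is a minimal sketch under stated assumptions: the toy parameters p = 23 and g = 5 and the helper names `make_keypair` and `shared_secret` are illustrative and not taken from the slides; real deployments use much larger, standardized groups.

```python
import secrets

# Public parameters, visible to Eve (toy values assumed for illustration).
P = 23  # small prime modulus
G = 5   # generator of the multiplicative group modulo 23


def make_keypair():
    """Pick a secret exponent and derive the public value G^secret mod P."""
    private = secrets.randbelow(P - 3) + 2  # uniform in [2, P - 2]
    public = pow(G, private, P)
    return private, public


def shared_secret(own_private: int, peer_public: int) -> int:
    """Combine our secret with the peer's public value."""
    return pow(peer_public, own_private, P)


# Steps (2) and (3): each party mixes a secret into the public parameters.
alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()

# Step (4): only the public values cross the network.
alice_key = shared_secret(alice_private, bob_public)
bob_key = shared_secret(bob_private, alice_public)

# Both sides arrive at the same key, since (g^a)^b = (g^b)^a mod p.
assert alice_key == bob_key
```

Eve sees P, G, and both public values, but recovering the key from them requires solving a discrete logarithm, which is trivial at this toy size and believed hard for properly chosen large groups.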

Magnetic RSA

Magnetic RSA
Rémi Géraud-Stewart (1, 2) and David Naccache (2)
1 QPSI, Qualcomm Technologies Incorporated, USA [email protected]
2 ÉNS (DI), Information Security Group, CNRS, PSL Research University, 75005, Paris, France [email protected]

Abstract. In a recent paper Géraud-Stewart and Naccache [GSN21] (GSN) described a non-interactive process allowing a prover P to convince a verifier V that a modulus $n$ is the product of two randomly generated primes $(p, q)$ of about the same size. A heuristic argument conjectures that P cannot control $p, q$ to make $n$ easy to factor. GSN’s protocol relies upon elementary number-theoretic properties and can be implemented efficiently using very few operations. This contrasts with state-of-the-art zero-knowledge protocols for RSA modulus proper generation assessment. This paper proposes an alternative process applicable in settings where P co-generates a modulus $n = p_1 q_1 p_2 q_2$ with a certification authority V. If P honestly cooperates with V, then V will only learn the sub-products $n_1 = p_1 q_1$ and $n_2 = p_2 q_2$. A heuristic argument conjectures that at least two of the factors of $n$ are beyond P’s control. This makes $n$ appropriate for cryptographic use provided that at least one party (of P and V) is honest. This heuristic argument calls for further cryptanalysis.

1 Introduction
Several cryptographic protocols rely on the assumption that an integer $n = pq$ is hard to factor. This includes for instance RSA [RSA78], Rabin [Rab79], Paillier [Pai99] or Fiat–Shamir [FS86]. One way to ascertain that $n$ is such a product is to generate it oneself; however, this becomes a concern when $n$ is provided by a third party. -

Fall 2016 Dear Electrical Engineering Alumni and Friends, This Past

ABBAS EL GAMAL Fortinet Founders Chair of the Department of Electrical Engineering Hitachi America Professor Fall 2016 Dear Electrical Engineering Alumni and Friends, This past academic year was another very successful one for the department. We made great progress toward implementing the vision of our strategic plan (EE in the 21st Century, or EE21 for short), which I outlined in my letter to you last year. I am also proud to share some of the exciting research in the department and the significant recognitions our faculty have received. I will first briefly describe the progress we have made toward implementing our EE21 plan. Faculty hiring. The top priority in our strategic plan is hiring faculty with complementary vision and expertise and who enhance our faculty diversity. This past academic year, we conducted a junior faculty broad area search and participated in a School of Engineering wide search in the area of robotics. I am happy to report that Mary Wootters joined our faculty in September as an assistant professor jointly with Computer Science. Mary’s research focuses on applying probability to coding theory, signal processing, and randomized algorithms. She also explores quantum information theory and complexity theory. Mary was previously an NSF postdoctoral fellow in the CS department at Carnegie Mellon University. The robotics search yielded two top candidates. I will report on the final results of this search in my next year’s letter. Reinventing the undergraduate curriculum. We continue to innovate our undergraduate curriculum, introducing two new, exciting project-oriented courses: EE107: Embedded Networked Systems and EE267: Virtual Reality. -

Martin Hellman, Walker and Company, New York,1988, Pantheon Books, New York,1986

Risk Analysis of Nuclear Deterrence
by Dr. Martin E. Hellman, New York Epsilon ’66

The first fundamental canon of The Code of Ethics for Engineers adopted by Tau Beta Pi states that “Engineers shall hold paramount the safety, health, and welfare of the public in the performance of their professional duties.” When we design systems, we routinely use large safety factors to account for unforeseen circumstances. The Golden Gate Bridge was designed with a safety factor several times the anticipated load. This “over design” saved the bridge, along with the lives of the 300,000 people who thronged onto it in 1987 to celebrate its fiftieth anniversary. The weight of all those people presented a load that was several times the design load¹, visibly flattening the bridge’s arched roadway. Watching the roadway deform, bridge engineers feared that the span might collapse, but engineering conservatism saved the day.

A terrorist attack involving a nuclear weapon would be a catastrophe of immense proportions: “A 10-kiloton bomb detonated at Grand Central Station on a typical work day would likely kill some half a million people, and inflict over a trillion dollars in direct economic damage. America and its way of life would be changed forever.” [Bunn 2003, pages viii-ix]. The likelihood of such an attack is also significant. Former Secretary of Defense William Perry has estimated the chance of a nuclear terrorist incident within the next decade to be roughly 50 percent [Bunn 2007, page 15]. David Albright, a former weapons inspector in Iraq, estimates those odds at less than one percent, but notes, “We would never accept a situation where the chance of a major nuclear accident like Chernobyl would be anywhere -

The Impetus to Creativity in Technology

The Impetus to Creativity in Technology
Alan G. Konheim, Professor Emeritus, Department of Computer Science, University of California, Santa Barbara, California 93106 [email protected] [email protected]

Abstract: We describe the technical developments ensuing from two well-known publications in the 20th century containing significant and seminal results, a paper by Claude Shannon in 1948 and a patent by Horst Feistel in 1971. Near the beginning, Shannon’s paper sets the tone with the statement “the fundamental problem of communication is that of reproducing at one point either exactly or approximately a message selected [sent] at another point.” Shannon’s Coding Theorem established the relationship between the probability of error and the rate measuring the transmission efficiency. Shannon proved the existence of codes achieving optimal performance, but it required forty-five years to exhibit an actual code achieving it. These Shannon optimal-efficient codes are responsible for a wide range of communication technology we enjoy today, from GPS, to the NASA rovers Spirit and Opportunity on Mars, and lastly to worldwide communication over the Internet. US Patent #3798539A, filed by the IBM Corporation in 1971, described Horst Feistel’s Block Cipher Cryptographic System, a new paradigm for encryption systems. It was largely a departure from the current technology based on shift-register stream encryption for voice and many of the electro-mechanical cipher machines introduced nearly fifty years before. Horst’s vision was directed to its application to secure the privacy of computer files. Invented at a propitious moment in time and implemented by IBM in automated teller machines for the Lloyds Bank Cashpoint System. -

Diffie and Hellman Receive 2015 Turing Award

Diffie and Hellman Receive 2015 Turing Award

Whitfield Diffie, Martin E. Hellman (photo credits: Rod Searcey/Stanford University; Linda A. Cicero/Stanford News Service)

Whitfield Diffie, former chief security officer of Sun Microsystems, and Martin E. Hellman, professor emeritus of electrical engineering at Stanford University, have been awarded the 2015 A. M. Turing Award of the Association for Computing Machinery for their critical contributions to modern cryptography.

Citation
The ability for two parties to use encryption to communicate privately over an otherwise insecure channel is fundamental for billions of people around the world. On a daily basis, individuals establish secure online connections with banks, e-commerce sites, email servers, and the cloud. Diffie and Hellman’s groundbreaking 1976 paper, “New Directions in Cryptography,” introduced the ideas of public-key cryptography and digital signatures, which are the foundation for most regularly used security protocols on the Internet today.

...government–private sector relations, and attracts billions of dollars in research and development,” said ACM President Alexander L. Wolf. “In 1976, Diffie and Hellman imagined a future where people would regularly communicate through electronic networks and be vulnerable to having their communications stolen or altered. Now, after nearly forty years, we see that their forecasts were remarkably prescient.”

“Public-key cryptography is fundamental for our industry,” said Andrei Broder, Google Distinguished Scientist. “The ability to protect private data rests on protocols for confirming an owner’s identity and for ensuring the integrity and confidentiality of communications. These widely used protocols were made possible through the ideas and methods pioneered by Diffie and Hellman.”

Cryptography is a practice that facilitates communication between two parties so that the communication -

The Best Nurturers in Computer Science Research

The Best Nurturers in Computer Science Research
Bharath Kumar M., Y. N. Srikant
IISc-CSA-TR-2004-10 http://archive.csa.iisc.ernet.in/TR/2004/10/
Computer Science and Automation, Indian Institute of Science, India, October 2004

Abstract
The paper presents a heuristic for mining nurturers in temporally organized collaboration networks: people who facilitate the growth and success of the young ones. Specifically, this heuristic is applied to the computer science bibliographic data to find the best nurturers in computer science research. The measure of success is parameterized, and the paper demonstrates experiments and results with publication count and citations as success metrics. Rather than just the nurturer’s success, the heuristic captures the influence he has had in the independent success of the relatively young in the network. These results can hence be a useful resource to graduate students and post-doctoral candidates. The heuristic is extended to accurately yield ranked nurturers inside a particular time period. Interestingly, there is a recognizable deviation between the rankings of the most successful researchers and the best nurturers, which although is obvious from a social perspective has not been statistically demonstrated.

Keywords: Social Network Analysis, Bibliometrics, Temporal Data Mining.

1 Introduction
Consider a student Arjun, who has finished his under-graduate degree in Computer Science, and is seeking a PhD degree followed by a successful career in Computer Science research. How does he choose his research advisor? He has the following options with him:
1. Look up the rankings of various universities [1], and apply to any “reasonably good” professor in any of the top universities. -

The RSA Algorithm

The RSA Algorithm
Evgeny Milanov
3 June 2009

In 1978, Ron Rivest, Adi Shamir, and Leonard Adleman introduced a cryptographic algorithm, which was essentially to replace the less secure National Bureau of Standards (NBS) algorithm. Most importantly, RSA implements a public-key cryptosystem, as well as digital signatures. RSA is motivated by the published works of Diffie and Hellman from several years before, who described the idea of such an algorithm, but never truly developed it. Introduced at the time when the era of electronic email was expected to soon arise, RSA implemented two important ideas:

1. Public-key encryption. This idea omits the need for a “courier” to deliver keys to recipients over another secure channel before transmitting the originally-intended message. In RSA, encryption keys are public, while the decryption keys are not, so only the person with the correct decryption key can decipher an encrypted message. Everyone has their own encryption and decryption keys. The keys must be made in such a way that the decryption key may not be easily deduced from the public encryption key.

2. Digital signatures. The receiver may need to verify that a transmitted message actually originated from the sender (signature), and didn't just come from there (authentication). This is done using the sender's decryption key, and the signature can later be verified by anyone, using the corresponding public encryption key. Signatures therefore cannot be forged. Also, no signer can later deny having signed the message. This is not only useful for electronic mail, but for other electronic transactions and transmissions, such as fund transfers. -
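The two ideas above, public-key encryption and digital signatures, can be sketched with textbook RSA. The following is a minimal illustration under stated assumptions: the function names, the choice of the Mersenne primes 2^31 − 1 and 2^61 − 1, the exponent e = 65537, and the use of SHA-256 to reduce a message to a number are all assumptions made for the example, not details from the excerpt. Textbook RSA without padding is not secure, and these primes are far too small for real use.

```python
import hashlib
from math import gcd


def generate_keypair(p: int, q: int, e: int = 65537):
    """Build (public, private) keys from two primes (assumed prime by the caller)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1)(q - 1)")
    d = pow(e, -1, phi)  # modular inverse; needs Python 3.8 or later
    return (n, e), (n, d)


def encrypt(m: int, public) -> int:
    n, e = public
    return pow(m, e, n)


def decrypt(c: int, private) -> int:
    n, d = private
    return pow(c, d, n)


def _digest(message: bytes, n: int) -> int:
    """Reduce a message to an integer below n (illustrative, not a standard scheme)."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % n


def sign(message: bytes, private) -> int:
    """Sign with the sender's private (decryption) key."""
    n, d = private
    return pow(_digest(message, n), d, n)


def verify(message: bytes, signature: int, public) -> bool:
    """Anyone can verify with the sender's public (encryption) key."""
    n, e = public
    return pow(signature, e, n) == _digest(message, n)


public, private = generate_keypair(2**31 - 1, 2**61 - 1)

ciphertext = encrypt(42, public)
assert decrypt(ciphertext, private) == 42

signature = sign(b"transfer 10 units", private)
assert verify(b"transfer 10 units", signature, public)
```

Only the holder of the private exponent can produce a value that verifies under the public key, which is the property the excerpt relies on for non-forgeability.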

Cache Timing Side-Channel Vulnerability Checking With

Cache Timing Side-Channel Vulnerability Checking with Computation Tree Logic
Shuwen Deng, Wenjie Xiong and Jakub Szefer
Yale University, New Haven, Connecticut
{shuwen.deng,wenjie.xiong,jakub.szefer}@yale.edu

ABSTRACT
Caches are one of the key features of modern processors as they help to improve memory access timing through caching recently used data. However, due to the timing differences between cache hits and misses, numerous timing side-channels have been discovered and exploited in the past. In this paper, Computation Tree Logic is used to model execution paths of the processor cache logic, and to derive formulas for paths that can lead to timing side-channel vulnerabilities. In total, 28 types of cache attacks are presented: 20 types of which map to attacks previously categorized or discussed in literature, and 8 types are new. Furthermore, to enable practical vulnerability checking, we present a new approach that limits the ...

... can then be used to leak secrets of a victim program, and this has been exploited by the various cache timing side-channel attacks, e.g., [1, 5, 7, 21, 30].

To address the security issues of cache timing side-channel attacks, numerous secure processor caches have been designed and analyzed through simulation and probability analysis in attempt to prove that the proposed caches indeed prevent attacks [12, 13, 26, 27, 33–36, 39, 40]. Furthermore, to reason about the different attacks, researchers have classified cache timing side-channels on basis whether the attacks leverage cache hits or misses and whether they are access-based or reuse-based, and whether the attacks leverage internal or external interference [22, 41]. -

RSA and Rabin Functions: Certain Parts Are As Hard As the Whole*

SIAM J. COMPUT. (C) 1988 Society for Industrial and Applied Mathematics
Vol. 17, No. 2, April 1988

RSA AND RABIN FUNCTIONS: CERTAIN PARTS ARE AS HARD AS THE WHOLE*
WERNER ALEXI, BENNY CHOR, ODED GOLDREICH AND CLAUS P. SCHNORR

Abstract. The RSA and Rabin encryption functions $E_N(\cdot)$ are respectively defined by raising $x \in \mathbb{Z}_N$ to the power $e$ (where $e$ is relatively prime to $\varphi(N)$) and squaring modulo $N$ (i.e., $E_N(x) \equiv x^e \pmod{N}$, $E_N(x) \equiv x^2 \pmod{N}$, respectively). We prove that for both functions, the following problems are computationally equivalent (each is probabilistic polynomial-time reducible to the other):
(1) Given $E_N(x)$, find $x$.
(2) Given $E_N(x)$, guess the least-significant bit of $x$ with success probability $1/2 + 1/\mathrm{poly}(n)$ (where $n$ is the length of the modulus $N$).
This equivalence implies that an adversary, given the RSA/Rabin ciphertext, cannot have a non-negligible advantage (over a random coin flip) in guessing the least-significant bit of the plaintext, unless he can invert RSA/factor $N$. The proof techniques also yield the simultaneous security of the $\log n$ least-significant bits. Our results improve the efficiency of pseudorandom number generation and probabilistic encryption schemes based on the intractability of factoring.

Key words. cryptography, concrete complexity, RSA encryption, factoring integers, partial information, predicate reductions

AMS(MOS) subject classifications. 11A51, 11K45, 11T71, 11Y05, 11X16, 11Z50, 68Q99, 94A60

1. Introduction. One-way functions are the basis for modern cryptography [11] and have many applications to pseudorandomness and complexity theories [6], [29].
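The two functions named in the abstract, raising to the power e modulo N and squaring modulo N, together with the least-significant-bit predicate, can be written down directly. The following is a minimal sketch, assuming the toy modulus N = 61 × 53 = 3233 and exponent e = 17; the parameter values and function names are illustrative assumptions, not from the paper. The sketch only evaluates the functions and does not demonstrate the hardness result itself.

```python
from math import gcd


def rsa_function(x: int, e: int, n: int) -> int:
    """RSA function: x^e mod n, with e coprime to phi(n)."""
    return pow(x, e, n)


def rabin_function(x: int, n: int) -> int:
    """Rabin function: x^2 mod n."""
    return pow(x, 2, n)


def least_significant_bit(x: int) -> int:
    """The predicate whose value the paper shows is hard to guess from E_N(x)."""
    return x & 1


# Toy parameters (assumed for illustration; far too small for real use).
P_PRIME, Q_PRIME = 61, 53
N = P_PRIME * Q_PRIME
E = 17
assert gcd(E, (P_PRIME - 1) * (Q_PRIME - 1)) == 1

x = 65
rsa_value = rsa_function(x, E, N)
rabin_value = rabin_function(x, N)

# Squaring is many-to-one: x and N - x always share the same Rabin image.
assert rabin_function(N - x, N) == rabin_value

# An adversary is given rsa_value or rabin_value and asked for this bit.
hidden_bit = least_significant_bit(x)
```

At this size the bit is easy to recover by brute force; the paper's claim concerns moduli large enough that inverting RSA or factoring N is infeasible.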