A Memorable Trip
Abhisekh Sankaran, Research Scholar, IIT Bombay

Recommended publications

Efficient Algorithms with Asymmetric Read and Write Costs

Efficient Algorithms with Asymmetric Read and Write Costs. Guy E. Blelloch (1), Jeremy T. Fineman (2), Phillip B. Gibbons (1), Yan Gu (1), and Julian Shun (3). (1) Carnegie Mellon University, (2) Georgetown University, (3) University of California, Berkeley.

Abstract: In several emerging technologies for computer memory (main memory), the cost of reading is significantly cheaper than the cost of writing. Such asymmetry in memory costs poses a fundamentally different model from the RAM for algorithm design. In this paper we study lower and upper bounds for various problems under such asymmetric read and write costs. We consider both the case in which all but O(1) memory has asymmetric cost, and the case of a small cache of symmetric memory. We model both cases using the (M, ω)-ARAM, in which there is a small (symmetric) memory of size M and a large unbounded (asymmetric) memory, both random access, and where reading from the large memory has unit cost, but writing has cost ω ≫ 1. For FFT and sorting networks we show a lower bound cost of $\Omega(\omega n \log_{\omega M} n)$, which indicates that it is not possible to achieve asymptotic improvements with cheaper reads when ω is bounded by a polynomial in M. Moreover, there is an asymptotic gap (of $\min(\omega, \log n)/\log(\omega M)$) between the cost of sorting networks and comparison sorting in the model. This contrasts with the RAM, and most other models, in which the asymptotic costs are the same. We also show a lower bound for computations on an n × n diamond DAG of $\Omega(\omega n^2/M)$ cost, which indicates no asymptotic improvement is achievable with fast reads.

Tarjan Transcript Final with Timestamps

A.M. Turing Award Oral History Interview with Robert (Bob) Endre Tarjan by Roy Levin. San Mateo, California, July 12, 2017.

Levin: My name is Roy Levin. Today is July 12th, 2017, and I’m in San Mateo, California at the home of Robert Tarjan, where I’ll be interviewing him for the ACM Turing Award Winners project. Good afternoon, Bob, and thanks for spending the time to talk to me today.

Tarjan: You’re welcome.

Levin: I’d like to start by talking about your early technical interests and where they came from. When do you first recall being interested in what we might call technical things?

Tarjan: Well, the first thing I would say in that direction is my mom took me to the public library in Pomona, where I grew up, which opened up a huge world to me. I started reading science fiction books and stories. Originally, I wanted to be the first person on Mars, that was what I was thinking, and I got interested in astronomy, started reading a lot of science stuff. I got to junior high school and I had an amazing math teacher. His name was Mr. Wall. I had him two years, in the eighth and ninth grade. He was teaching the New Math to us before there was such a thing as “New Math.” He taught us Peano’s axioms and things like that. It was a wonderful thing for a kid like me who was really excited about science and mathematics and so on. The other thing that happened was I discovered Scientific American in the public library and started reading Martin Gardner’s columns on mathematical games and was completely fascinated.

Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber

Outline
• Chapter 19: Security (cont)
• A Method for Obtaining Digital Signatures and Public-Key Cryptosystems. Ronald L. Rivest, Adi Shamir, and Leonard M. Adleman. Communications of the ACM 21,2 (Feb. 1978)
– RSA Algorithm
– First practical public key crypto system
• Authentication in Distributed Systems: Theory and Practice. Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber
– Butler Lampson (MSR): He was one of the designers of the SDS 940 time-sharing system, the Alto personal distributed computing system, the Xerox 9700 laser printer, two-phase commit protocols, the Autonet LAN, and several programming languages
– Martin Abadi (Bell Labs)
– Michael Burrows, Edward Wobber (DEC/Compaq/HP SRC)
(Oct-21-03, CSE 542: Operating Systems)

Encryption
• Properties of good encryption technique:
– Relatively simple for authorized users to encrypt and decrypt data.
– Encryption scheme depends not on the secrecy of the algorithm but on a parameter of the algorithm called the encryption key.
– Extremely difficult for an intruder to determine the encryption key.

Strength
• Strength of crypto system depends on the strengths of the keys
• Computers get faster, so keys have to become harder to keep up
• If it takes more effort to break a code than it is worth, it is okay
– Transferring money from my bank to my credit card and Citibank transferring billions of dollars with another bank should not have the same key strength

Encryption methods
• Symmetric cryptography
– Sender and receiver know the secret key (a priori)
• Fast encryption, but key exchange should happen outside the system
• Asymmetric cryptography
– Each person maintains two keys, public and private
• M ≡ PrivateKey(PublicKey(M))
• M ≡ PublicKey(PrivateKey(M))
– Public part is available to anyone, private part is only known to the sender
– E.g.
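The two identities on the asymmetric-cryptography slide can be checked numerically. The sketch below is not taken from the slides: it uses textbook RSA with deliberately tiny primes, and the names `public_op` and `private_op` as well as the values of `p`, `q`, `e` and `message` are assumptions chosen for illustration only. Real systems use large random primes and padding.

```python
# Toy textbook RSA (illustrative assumption; insecure parameter sizes).
p, q = 61, 53                 # small primes, hypothetical example values
n = p * q                     # public modulus, 3233
phi = (p - 1) * (q - 1)       # Euler's totient of n, 3120
e = 17                        # public exponent, coprime to phi
d = pow(e, -1, phi)           # private exponent (modular inverse, Python 3.8+)


def public_op(m: int) -> int:
    """Apply the public key: m^e mod n."""
    return pow(m, e, n)


def private_op(m: int) -> int:
    """Apply the private key: m^d mod n."""
    return pow(m, d, n)


message = 65                  # any integer in [0, n)

# M == PrivateKey(PublicKey(M)): encrypt with the public key, decrypt with the private key.
assert private_op(public_op(message)) == message

# M == PublicKey(PrivateKey(M)): sign with the private key, verify with the public key.
assert public_op(private_op(message)) == message
```

Both assertions pass because the two exponentiations commute: raising to `e` and then `d` (or the reverse) raises `message` to `e*d`, which is congruent to 1 modulo `phi`.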

Great Ideas in Computing

Great Ideas in Computing. University of Toronto, CSC196, Winter/Spring 2019. Week 6: October 19-23 (2020).

Announcements (slide 1 / 17): I added one final question to Assignment 2. It concerns search engines. The question may need a little clarification. I have also clarified question 1, where I have defined (in a non-standard way) the meaning of a strict binary search tree, which is what I had in mind. Please answer the question for a strict binary search tree. If you answered the quiz question for a non-strict binary search tree with a proper explanation, you will get full credit. Quick poll: how many students feel that Q1 was a fair quiz? A1 has now been graded by Marta. I will scan over the assignments and hope to release the grades later today. If you plan to make a regrading request, you have up to one week to submit your request. You must specify clearly why you feel that a question may not have been graded fairly. In general, students did well, which is what I expected.

Agenda for the week (slide 2 / 17): We will continue to discuss search engines. We ended on what is slide 10 (in Week 5) on Friday and we will continue with where we left off. I was surprised that in our poll, most students felt that the people advocating the "AI view" of search "won the debate", whereas today I will try to argue that the people (e.g., Salton and others) advocating the "combinatorial, algebraic, statistical view" won the debate as to current search engines.

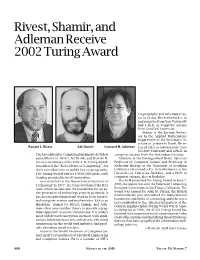

Rivest, Shamir, and Adleman Receive 2002 Turing Award, Volume 50

Rivest, Shamir, and Adleman Receive 2002 Turing Award

[Photos: Ronald L. Rivest, Adi Shamir, Leonard M. Adleman]

The Association for Computing Machinery (ACM) has named RONALD L. RIVEST, ADI SHAMIR, and LEONARD M. ADLEMAN as winners of the 2002 A. M. Turing Award, considered the “Nobel Prize of Computing”, for their contributions to public key cryptography. The Turing Award carries a $100,000 prize, with funding provided by Intel Corporation. As researchers at the Massachusetts Institute of Technology in 1977, the team developed the RSA code, which has become the foundation for an entire generation of technology security products. It has also inspired important work in both theoretical computer science and mathematics.

[...] Cryptography and Information Security Group. He received a B.A. in mathematics from Yale University and a Ph.D. in computer science from Stanford University. Shamir is the Borman Professor in the Applied Mathematics Department of the Weizmann Institute of Science in Israel. He received a B.S. in mathematics from Tel Aviv University and a Ph.D. in computer science from the Weizmann Institute. Adleman is the Distinguished Henry Salvatori Professor of Computer Science and Professor of Molecular Biology at the University of Southern California. He earned a B.S. in mathematics at the University of California, Berkeley, and a Ph.D. in computer science, also at Berkeley.

The ACM presented the Turing Award on June 7, 2003, in conjunction with the Federated Computing Research Conference in San Diego, California. The award was named for Alan M. Turing, the British mathematician who articulated the mathematical foundation and limits of computing and who was a

Towards a Secure Agent Society

Towards A Secure Agent Society

Qi He, The Robotics Institute, Carnegie Mellon University, Pittsburgh, PA 15213, U.S.A., [email protected]
Katia Sycara, The Robotics Institute, Carnegie Mellon University, Pittsburgh, PA 15213, U.S.A., [email protected]
March 23, 1998

Abstract: We present a general view of what a "secure agent society" should be and how to develop it rather than focus on any specific details or particular agent-based application. We believe that the main effort to achieve security in agent societies consists of the following three aspects: (1) agent authentication mechanisms that form the secure society's foundation, (2) a security architecture design within an agent that enables security policy making, security protocol generation and security operation execution, and (3) the extension of agent communication languages for agent secure communication and trust management. In this paper, all of the three main aspects are systematically discussed for agent security based on an overall understanding of modern cryptographic technology. One purpose of the paper is to give some answers to those questions resulting from absence of a complete picture.

Area: Software Agents
Keywords: security, agent architecture, agent-based public key infrastructure (PKI), public key cryptosystem (PKCS), confidentiality, authentication, integrity, nonrepudiation.

1 Introduction
If you are going to design and develop a software agent-based real application system for electronic commerce, you would immediately learn that there exists no such secure communication between agents, which is assumed by most agent model designers.

Computational Learning Theory: New Models and Algorithms

Computational Learning Theory: New Models and Algorithms, by Robert Hal Sloan. S.M. EECS, Massachusetts Institute of Technology (1986); B.S. Mathematics, Yale University (1983). Submitted to the Department of Electrical Engineering and Computer Science in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, June 1989. © Robert Hal Sloan, 1989. All rights reserved. The author hereby grants to MIT permission to reproduce and to distribute copies of this thesis document in whole or in part. Signature of Author: Department of Electrical Engineering and Computer Science, May 23, 1989. Certified by: Ronald L. Rivest, Professor of Computer Science, Thesis Supervisor. Accepted by: Arthur C. Smith, Chairman, Departmental Committee on Graduate Students.

Abstract: In the past several years, there has been a surge of interest in computational learning theory, the formal (as opposed to empirical) study of learning algorithms. One major cause for this interest was the model of probably approximately correct learning, or pac learning, introduced by Valiant in 1984. This thesis begins by presenting a new learning algorithm for a particular problem within that model: learning submodules of the free $\mathbb{Z}$-module $\mathbb{Z}^k$. We prove that this algorithm achieves probable approximate correctness, and indeed, that it is within a log log factor of optimal in a related, but more stringent model of learning, on-line mistake bounded learning. We then proceed to examine the influence of noisy data on pac learning algorithms in general. Previously it has been shown that it is possible to tolerate large amounts of random classification noise, but only a very small amount of a very malicious sort of noise.

1. Course Information Are Handed Out

6.826: Principles of Computer Systems, 2006

1. Course Information

Staff

Faculty
Butler Lampson, 32-G924, 425-703-5925, [email protected]
Daniel Jackson, 32-G704, 8-8471, [email protected]

Teaching Assistant
David Shin, [email protected]

Course Secretary
Maria Rebelo, 32-G715, 3-5895, [email protected]

Office Hours
Messrs.

[...] course secretary's desk. They normally cover the material discussed in class during the week they are handed out. Delayed submission of the solutions will be penalized, and no solutions will be accepted after Thursday 5:00PM. Students in the class will be asked to help grade the problem sets. Each week a team of students will work with the TA to grade the week’s problems. This takes about 3-4 hours. Each student will probably only have to do it once during the term. We will try to return the graded problem sets, with solutions, within a week after their due date.

Policy on collaboration
We encourage discussion of the issues in the lectures, readings, and problem sets. However, if you collaborate on problem sets, you must tell us who your collaborators are. And in any case, you must write up all solutions on your own.

Project
During the last half of the course there is a project in which students will work in groups of three or so to apply the methods of the course to their own research projects. Each group will pick a real system, preferably one that some member of the group is actually working on but possibly one from a published paper or from someone else’s research, and write:

Reducibility

REDUCIBILITY AMONG COMBINATORIAL PROBLEMS†
Richard M. Karp, University of California at Berkeley
In: R. E. Miller et al. (eds.), Complexity of Computer Computations, © Plenum Press, New York 1972

Abstract: A large class of computational problems involve the determination of properties of graphs, digraphs, integers, arrays of integers, finite families of finite sets, boolean formulas and elements of other countable domains. Through simple encodings from such domains into the set of words over a finite alphabet these problems can be converted into language recognition problems, and we can inquire into their computational complexity. It is reasonable to consider such a problem satisfactorily solved when an algorithm for its solution is found which terminates within a number of steps bounded by a polynomial in the length of the input. We show that a large number of classic unsolved problems of covering, matching, packing, routing, assignment and sequencing are equivalent, in the sense that either each of them possesses a polynomial-bounded algorithm or none of them does.

1. INTRODUCTION
All the general methods presently known for computing the chromatic number of a graph, deciding whether a graph has a Hamilton circuit, or solving a system of linear inequalities in which the variables are constrained to be 0 or 1, require a combinatorial search for which the worst case time requirement grows exponentially with the length of the input. In this paper we give theorems which strongly suggest, but do not imply, that these problems, as well as many others, will remain intractable perpetually.

† This research was partially supported by National Science Foundation Grant GJ-474.

Cryptography: DH And

Key Exchange (Secure Software Systems, Fall 2018)

Challenge: Exchanging Keys
With n parties (here n = 6), the number of pairwise key exchanges is $\text{exchanges} = \frac{n(n-1)}{2} = \frac{6(6-1)}{2} = 15$.
The more parties in communication, the more keys that need to be securely exchanged. Do we have to use out-of-band methods (e.g., phone)?

Key Exchange
• Insecure communications channel: Eve can see everything!
• Alice and Bob agree on a shared secret (“key”) that Eve doesn’t know, despite Eve seeing everything!

Whitfield Diffie and Martin Hellman, “New directions in cryptography,” in IEEE Transactions on Information Theory, vol. 22, no. 6, Nov 1976. Proposed public key cryptography. Diffie-Hellman key exchange.

Diffie-Hellman Color Analogy (https://www.crypto101.io/)
(1) It’s easy to mix two colors.
(2) Mixing two or more colors in a different order results in the same color.
(3) Mixing colors is one-way (impossible to determine which colors went in to produce the final result).

Diffie-Hellman Color Analogy, step by step (parties: Alice, Eve, Bob)
(1) Start with a public color and share it across the network.
(2) Alice picks a secret color and mixes it in to get her mixed color.
(3) Bob picks a secret color and mixes it in to get his mixed color.
(4) Alice and Bob exchange their mixed colors. Eve can’t calculate [color swatch]!! (secret keys were never shared)
(5) Eve will [...]
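The color analogy maps onto modular exponentiation: the public color corresponds to a public base and modulus, each secret color to a private exponent, and each mixture to a public value. The following is a minimal sketch of that mapping, not code from the slides. The constants `P` and `G` and the helpers `make_keypair` and `shared_secret` are hypothetical names, and the parameter sizes are far too small for real use.

```python
import secrets

# Toy public parameters (assumptions for illustration, not secure choices).
P = 2**127 - 1   # public prime modulus (a Mersenne prime)
G = 3            # public base, the "public color"


def make_keypair(p: int = P, g: int = G) -> tuple:
    """Pick a secret exponent (the "secret color") and derive g^secret mod p (the "mixture")."""
    private = secrets.randbelow(p - 3) + 2   # uniform in [2, p - 2]
    public = pow(g, private, p)
    return private, public


def shared_secret(own_private: int, peer_public: int, p: int = P) -> int:
    """Mix the peer's public value with our own secret exponent."""
    return pow(peer_public, own_private, p)


# Alice and Bob each create a key pair and exchange only the public halves.
alice_private, alice_public = make_keypair()
bob_private, bob_public = make_keypair()

# Both sides arrive at g^(a*b) mod p; the private exponents never cross the network.
alice_key = shared_secret(alice_private, bob_public)
bob_key = shared_secret(bob_private, alice_public)
assert alice_key == bob_key
```

Eve sees `P`, `G`, `alice_public` and `bob_public`. Recovering the shared key from those values is the computational Diffie-Hellman problem, which plays the role of "un-mixing" the colors in the analogy.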

Magnetic RSA

Magnetic RSA. Rémi Géraud-Stewart (1, 2) and David Naccache (2). (1) QPSI, Qualcomm Technologies Incorporated, USA, [email protected]; (2) ÉNS (DI), Information Security Group, CNRS, PSL Research University, 75005, Paris, France, [email protected]

Abstract. In a recent paper Géraud-Stewart and Naccache [GSN21] (GSN) described a non-interactive process allowing a prover P to convince a verifier V that a modulus n is the product of two randomly generated primes (p, q) of about the same size. A heuristic argument conjectures that P cannot control p, q to make n easy to factor. GSN’s protocol relies upon elementary number-theoretic properties and can be implemented efficiently using very few operations. This contrasts with state-of-the-art zero-knowledge protocols for RSA modulus proper generation assessment. This paper proposes an alternative process applicable in settings where P co-generates a modulus $n = p_1 q_1 p_2 q_2$ with a certification authority V. If P honestly cooperates with V, then V will only learn the sub-products $n_1 = p_1 q_1$ and $n_2 = p_2 q_2$. A heuristic argument conjectures that at least two of the factors of n are beyond P’s control. This makes n appropriate for cryptographic use provided that at least one party (of P and V) is honest. This heuristic argument calls for further cryptanalysis.

1 Introduction
Several cryptographic protocols rely on the assumption that an integer $n = pq$ is hard to factor. This includes for instance RSA [RSA78], Rabin [Rab79], Paillier [Pai99] or Fiat–Shamir [FS86]. One way to ascertain that n is such a product is to generate it oneself; however, this becomes a concern when n is provided by a third-party.