The Impetus to Creativity in Technology

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Chapter 3 – Block Ciphers and the Data Encryption Standard

Cryptography and Network Security, Fifth Edition, by William Stallings. Chapter 3 – Block Ciphers and the Data Encryption Standard. Lecture slides by Lawrie Brown.

"All the afternoon Mungo had been working on Stern's code, principally with the aid of the latest messages which he had copied down at the Nevin Square drop. Stern was very confident. He must be well aware London Central knew about that drop. It was obvious that they didn't care how often Mungo read their messages, so confident were they in the impenetrability of the code." (Talking to Strange Men, Ruth Rendell)

Modern Block Ciphers
• now look at modern block ciphers
• one of the most widely used types of cryptographic algorithms
• provide secrecy/authentication services
• focus on DES (Data Encryption Standard)
• to illustrate block cipher design principles

Block vs Stream Ciphers
• block ciphers process messages in blocks, each of which is then en/decrypted
• like a substitution on very big characters – 64 bits or more
• stream ciphers process messages a bit or byte at a time when en/decrypting
• many current ciphers are block ciphers – better analysed – broader range of applications

Block Cipher Principles
• most symmetric block ciphers are based on a Feistel Cipher Structure
• needed since must be able to decrypt ciphertext to recover messages efficiently
• block ciphers look like an extremely large substitution
• would need table of 2^64 entries for a 64-bit block
• instead create from smaller building blocks
• using idea of a product cipher

The Evolution of Ibm Research Looking Back at 50 Years of Scientific Achievements and Innovations

FEATURES. The Evolution of IBM Research: Looking Back at 50 Years of Scientific Achievements and Innovations. Chris Sciacca and Christophe Rossel – IBM Research – Zurich, Switzerland – DOI: 10.1051/epn/2014201. EPN 45/2. Article available at http://www.europhysicsnews.org or http://dx.doi.org/10.1051/epn/2014201

By the mid-1950s IBM had established laboratories in New York City and in San Jose, California, with San Jose being the first one apart from headquarters. This provided considerable freedom to the scientists and with its success IBM executives gained the confidence they needed to look beyond the United States for a third lab. The choice wasn't easy, but Switzerland was eventually selected based on the same blend of talent, skills and academia that IBM uses today, most recently for its decision to open new labs in Ireland, Brazil and Australia.

The Computing-Tabulating-Recording Company (C-T-R), the precursor to IBM, was founded on 16 June 1911. It was initially a merger of three manufacturing businesses, which were eventually molded into the $100 billion innovator in technology, science, management and culture known as IBM. With the success of C-T-R after World War I came […] sorting and disseminating information was going to be a big business, requiring investment in research and development.

He began hiring the country's top engineers, led by one of the world's most prolific inventors at the time: James Wares Bryce. Bryce was given the task to invent and build the best tabulating, sorting and keypunch machines.

IBM Research AI Residency Program

The IBM Research™ AI Residency Program is a 13-month program that provides opportunity for scientists, engineers, domain experts and entrepreneurs to conduct innovative research and development on important and emerging topics in Artificial Intelligence (AI). The program aims at creating and investigating novel approaches in AI that progress capabilities towards significant technical and real-world challenges. AI Residents work closely with IBM Research scientists and are expected to fully complete a project within the 13-month residency. The results of the project may include publications in top AI conferences and journals, development of prototypes demonstrating important new AI functionality and fielding of working AI systems.

As part of the selection process, candidates must submit a 500-word statement of research interest and goals.

Topics of focus include:
– Trust in AI (Causal modeling, fairness, explainability, robustness, transparency, AI ethics)
– Natural Language Processing and Understanding (Question and answering, reading comprehension, language embeddings, dialog, multi-lingual NLP)
– Knowledge and Reasoning (Knowledge/graph embeddings, neuro-symbolic reasoning)
– AI Automation, Scaling, and Management (Automated data science, neural architecture search, AutoML, transfer learning, few-shot/one-shot/meta learning, active learning, AI planning, parallel and distributed learning)
– AI and Software Engineering (Big code analysis and understanding, software life cycle including modernize, build, debug, test and manage, software synthesis including refactoring and automated programming)
– Human-Centered AI (HCI of AI, human-AI collaboration, AI interaction models and modalities, conversational AI, novel AI experiences, visual AI and data visualization)

Deadline to apply: January 31, 2021. Earliest start date: June 1, 2021. Duration: 13 months. Locations: IBM Thomas J.

Chapter 3 – Block Ciphers and the Data Encryption Standard

Symmetric Cryptography, Chapter 6

Block vs Stream Ciphers
• Block ciphers process messages into blocks, each of which is then en/decrypted
– Like a substitution on very big characters (64 bits or more)
• Stream ciphers process messages a bit or byte at a time when en/decrypting
• Many current ciphers are block ciphers
– Better analyzed
– Broader range of applications

Block Cipher Principles
• Block ciphers look like an extremely large substitution
• Would need table of 2^64 entries for a 64-bit block
• Arbitrary reversible substitution cipher for a large block size is not practical
– 64-bit general substitution block cipher, key size 2^64!
• Most symmetric block ciphers are based on a Feistel Cipher Structure
• Needed since must be able to decrypt ciphertext to recover messages efficiently

Substitution-Permutation Ciphers
• In 1949 Shannon introduced idea of substitution-permutation (S-P) networks
– modern substitution-transposition product cipher
• These form the basis of modern block ciphers
• S-P networks are based on the two primitive cryptographic operations we have seen before:
– substitution (S-box)
– permutation (P-box) (transposition)
• Provide confusion and diffusion of message

Diffusion and Confusion
• Introduced by Claude Shannon to thwart cryptanalysis based on statistical analysis
– Assume the attacker has some knowledge of the statistical characteristics of the plaintext
• Cipher needs to completely obscure statistical properties of original message
• A one-time pad does this
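The slides' claim that a Feistel structure makes decryption as cheap as encryption can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration: the 32-bit block, the round function `toy_round`, and the round keys are invented and have nothing to do with DES itself. The point is only that the round function does not need to be invertible for the cipher as a whole to be reversible.

```python
MASK16 = 0xFFFF  # each half of the toy 32-bit block is 16 bits wide


def toy_round(half, key):
    """Hypothetical round function F; deliberately not invertible."""
    return ((half * 31) ^ key ^ (half >> 3)) & MASK16


def feistel_encrypt(block, round_keys):
    """Apply one Feistel round per key: (L, R) -> (R, L xor F(R, k))."""
    left, right = (block >> 16) & MASK16, block & MASK16
    for key in round_keys:
        left, right = right, left ^ toy_round(right, key)
    return (left << 16) | right


def feistel_decrypt(block, round_keys):
    """Undo the rounds in reverse key order, reusing the same F."""
    left, right = (block >> 16) & MASK16, block & MASK16
    for key in reversed(round_keys):
        left, right = right ^ toy_round(left, key), left
    return (left << 16) | right


if __name__ == "__main__":
    keys = [0x1234, 0xBEEF, 0x0F0F, 0xA5A5]  # made-up round keys
    ciphertext = feistel_encrypt(0xDEADBEEF, keys)
    print(hex(ciphertext), hex(feistel_decrypt(ciphertext, keys)))
```

Decryption never calls an inverse of `toy_round`; it only replays the same function with the keys in reverse order, which is why the structure avoids the 2^64-entry table mentioned above.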

Cryptography: DH And

Key Exchange. Secure Software Systems, Fall 2018.

Challenge – Exchanging Keys
Exchanges = n(n − 1)/2 = 6(6 − 1)/2 = 15
The more parties in communication, the more keys that need to be securely exchanged. Do we have to use out-of-band methods? (e.g., phone?)

Key Exchange
• Insecure communications channel
• Eve can see everything!
• Alice and Bob agree on a shared secret ("key") that Eve doesn't know
• Despite Eve seeing everything!

Whitfield Diffie and Martin Hellman, "New directions in cryptography," in IEEE Transactions on Information Theory, vol. 22, no. 6, Nov 1976. Proposed public key cryptography. Diffie-Hellman key exchange.

Diffie-Hellman Color Analogy
(1) It's easy to mix two colors.
(2) Mixing two or more colors in a different order results in the same color.
(3) Mixing colors is one-way (impossible to determine which colors went in to produce final result).
https://www.crypto101.io/

Diffie-Hellman Color Analogy (Alice, Eve, Bob)
(1) Start with public color, shared across the network
(2) Alice picks a secret color and mixes it to get her mixed color
(3) Bob picks a secret color and mixes it to get his mixed color
(4) Alice and Bob exchange their mixed colors
Eve can't calculate the final mixed color!! (secret keys were never shared)
(5) Eve will
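The two ideas in these slides, the count of pairwise exchanges and the Diffie-Hellman agreement itself, can be sketched with small numbers. This is a toy under stated assumptions: the prime `P = 23`, generator `G = 5`, and the secrets 6 and 15 are textbook-sized illustration values, not parameters from the slides, and are far too small to be secure. In the color analogy, `P` and `G` play the role of the public color, and each secret exponent is a private color.

```python
def pairwise_keys(parties):
    """Number of distinct pairs among `parties` participants: n(n-1)/2."""
    return parties * (parties - 1) // 2


# Toy public parameters (assumed for illustration; insecure at this size).
P = 23  # public prime modulus
G = 5   # public generator


def public_value(secret):
    """The 'mixed color' sent in the clear: G^secret mod P."""
    return pow(G, secret, P)


def shared_secret(their_public, my_secret):
    """Combine the other party's public value with one's own secret."""
    return pow(their_public, my_secret, P)


alice_secret, bob_secret = 6, 15           # never transmitted
alice_public = public_value(alice_secret)  # Eve can see this
bob_public = public_value(bob_secret)      # Eve can see this

if __name__ == "__main__":
    print(pairwise_keys(6))                         # 15
    print(shared_secret(bob_public, alice_secret))  # Alice's view
    print(shared_secret(alice_public, bob_secret))  # Bob's view, same value
```

Both parties arrive at the same value because (G^a)^b and (G^b)^a are equal modulo P, which mirrors the slide's point that mixing order does not change the resulting color.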

Fall 2016 Dear Electrical Engineering Alumni and Friends, This Past

ABBAS EL GAMAL Fortinet Founders Chair of the Department of Electrical Engineering Hitachi America Professor Fall 2016 Dear Electrical Engineering Alumni and Friends, This past academic year was another very successful one for the department. We made great progress toward implementing the vision of our strategic plan (EE in the 21st Century, or EE21 for short), which I outlined in my letter to you last year. I am also proud to share some of the exciting research in the department and the significant recognitions our faculty have received. I will first briefly describe the progress we have made toward implementing our EE21 plan. Faculty hiring. The top priority in our strategic plan is hiring faculty with complementary vision and expertise and who enhance our faculty diversity. This past academic year, we conducted a junior faculty broad area search and participated in a School of Engineering wide search in the area of robotics. I am happy to report that Mary Wootters joined our faculty in September as an assistant professor jointly with Computer Science. Mary’s research focuses on applying probability to coding theory, signal processing, and randomized algorithms. She also explores quantum information theory and complexity theory. Mary was previously an NSF postdoctoral fellow in the CS department at Carnegie Mellon University. The robotics search yielded two top candidates. I will report on the final results of this search in my next year’s letter. Reinventing the undergraduate curriculum. We continue to innovate our undergraduate curriculum, introducing two new, exciting project-oriented courses: EE107: Embedded Networked Systems and EE267: Virtual Reality. -

Martin Hellman, Walker and Company, New York,1988, Pantheon Books, New York,1986

Risk Analysis of Nuclear Deterrence, by Dr. Martin E. Hellman, New York Epsilon '66

The first fundamental canon of The Code of Ethics for Engineers adopted by Tau Beta Pi states that "Engineers shall hold paramount the safety, health, and welfare of the public in the performance of their professional duties." When we design systems, we routinely use large safety factors to account for unforeseen circumstances. The Golden Gate Bridge was designed with a safety factor several times the anticipated load. This "over design" saved the bridge, along with the lives of the 300,000 people who thronged onto it in 1987 to celebrate its fiftieth anniversary. The weight of all those people presented a load that was several times the design load, visibly flattening the bridge's arched roadway. Watching the roadway deform, bridge engineers feared that the span might collapse, but engineering conservatism saved the day.

A terrorist attack involving a nuclear weapon would be a catastrophe of immense proportions: "A 10-kiloton bomb detonated at Grand Central Station on a typical work day would likely kill some half a million people, and inflict over a trillion dollars in direct economic damage. America and its way of life would be changed forever." [Bunn 2003, pages viii-ix].

The likelihood of such an attack is also significant. Former Secretary of Defense William Perry has estimated the chance of a nuclear terrorist incident within the next decade to be roughly 50 percent [Bunn 2007, page 15]. David Albright, a former weapons inspector in Iraq, estimates those odds at less than one percent, but notes, "We would never accept a situation where the chance of a major nuclear accident like Chernobyl would be anywhere

Treatment and Differential Diagnosis Insights for the Physician's

Treatment and differential diagnosis insights for the physician's consideration in the moments that matter most

The role of medical imaging in global health systems is literally fundamental. Like labs, medical images are used at one point or another in almost every high cost, high value episode of care. Echocardiograms, CT scans, mammograms, and x-rays, for example, "atlas" the body and help chart a course forward for a patient's care team. Imaging precision has improved as a result of technological advancements and breakthroughs in related medical research. Those advancements also bring with them exponential growth in medical imaging data.

There were roughly 800 million multi-slice exams performed in the United States in 2015 alone. Those studies generated approximately 60 billion medical images. At those volumes, each of the roughly 31,000 radiologists in the U.S. would have to view an image every two seconds of every working day for an entire year in order to extract potentially life-saving information from a handful of images hidden in a sea of data.

What's worse, medical images remain largely disconnected from the rest of the relevant data (lab results, patient-similar cases, medical research) inside medical records (and beyond them), making it difficult for physicians to place medical imaging in the context of patient histories that may unlock clues to previously unconsidered treatment pathways.

The capabilities referenced throughout this document are in the research and development phase and are not available for any use, commercial or non-commercial. Any statements and claims related to the capabilities referenced are aspirational only.

Chapter 2 the Data Encryption Standard (DES)

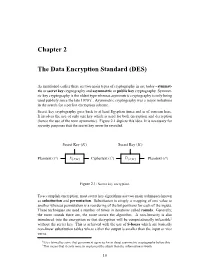

Chapter 2: The Data Encryption Standard (DES)

As mentioned earlier there are two main types of cryptography in use today: symmetric or secret key cryptography and asymmetric or public key cryptography. Symmetric key cryptography is the oldest type, whereas asymmetric cryptography has only been used publicly since the late 1970s [1]. Asymmetric cryptography was a major milestone in the search for a perfect encryption scheme.

Secret key cryptography goes back to at least Egyptian times and is of concern here. It involves the use of only one key which is used for both encryption and decryption (hence the use of the term symmetric). Figure 2.1 depicts this idea. It is necessary for security purposes that the secret key never be revealed.

[Figure 2.1: Secret key encryption. Plaintext (P) is encrypted as E{P,K} to give Ciphertext (C), which is decrypted as D{C,K} to recover Plaintext (P); the same Secret Key (K) feeds both steps.]

To accomplish encryption, most secret key algorithms use two main techniques known as substitution and permutation. Substitution is simply a mapping of one value to another, whereas permutation is a reordering of the bit positions for each of the inputs. These techniques are used a number of times in iterations called rounds. Generally, the more rounds there are, the more secure the algorithm. A non-linearity is also introduced into the encryption so that decryption will be computationally infeasible [2] without the secret key. This is achieved with the use of S-boxes, which are basically non-linear substitution tables where either the output is smaller than the input or vice versa.

[1] It is claimed by some that government agencies knew about asymmetric cryptography before this.
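The description of substitution, permutation, and rounds under a single secret key can be made concrete with a toy 8-bit cipher. This is a sketch under stated assumptions, not DES: the 4-bit table `SBOX`, the bit ordering `PERM`, and the round keys are illustration values, and the toy S-box is invertible so that the example can decrypt directly (the S-boxes described above, whose output and input sizes differ, rely on a surrounding structure for reversibility instead).

```python
# Illustration tables (assumed for this sketch, not a DES specification).
SBOX = [0xE, 0x4, 0xD, 0x1, 0x2, 0xF, 0xB, 0x8,
        0x3, 0xA, 0x6, 0xC, 0x5, 0x9, 0x0, 0x7]  # 4-bit substitution
PERM = [1, 5, 2, 0, 3, 7, 4, 6]  # output bit i takes input bit PERM[i]


def invert(table):
    """Inverse of a table that is a permutation of 0..len-1."""
    inverse = [0] * len(table)
    for index, value in enumerate(table):
        inverse[value] = index
    return inverse


def substitute(byte, box):
    """Map each 4-bit half of the byte through the substitution table."""
    return (box[byte >> 4] << 4) | box[byte & 0xF]


def permute(byte, order):
    """Reorder bit positions: output bit `dest` is input bit order[dest]."""
    out = 0
    for dest, src in enumerate(order):
        out |= ((byte >> src) & 1) << dest
    return out


def encrypt(byte, round_keys):
    """One round = mix in the key, substitute, then permute."""
    for key in round_keys:
        byte = permute(substitute(byte ^ key, SBOX), PERM)
    return byte


def decrypt(byte, round_keys):
    """Same secret keys, applied in reverse with the inverse tables."""
    inv_sbox, inv_perm = invert(SBOX), invert(PERM)
    for key in reversed(round_keys):
        byte = substitute(permute(byte, inv_perm), inv_sbox) ^ key
    return byte
```

The same list of round keys drives both directions, which is the sense in which the scheme is symmetric: anyone holding the key can both encrypt and decrypt.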

Diffie and Hellman Receive 2015 Turing Award Rod Searcey/Stanford University

Diffie and Hellman Receive 2015 Turing Award

[Photos of Whitfield Diffie and Martin E. Hellman. Credits: Rod Searcey/Stanford University; Linda A. Cicero/Stanford News Service.]

Whitfield Diffie, former chief security officer of Sun Microsystems, and Martin E. Hellman, professor emeritus of electrical engineering at Stanford University, have been awarded the 2015 A. M. Turing Award of the Association for Computing Machinery for their critical contributions to modern cryptography.

Citation

The ability for two parties to use encryption to communicate privately over an otherwise insecure channel is fundamental for billions of people around the world. On a daily basis, individuals establish secure online connections with banks, e-commerce sites, email servers, and the cloud. Diffie and Hellman's groundbreaking 1976 paper, "New Directions in Cryptography," introduced the ideas of public-key cryptography and digital signatures, which are the foundation for most regularly used security protocols on the Internet today.

[…] government–private sector relations, and attracts billions of dollars in research and development," said ACM President Alexander L. Wolf. "In 1976, Diffie and Hellman imagined a future where people would regularly communicate through electronic networks and be vulnerable to having their communications stolen or altered. Now, after nearly forty years, we see that their forecasts were remarkably prescient."

"Public-key cryptography is fundamental for our industry," said Andrei Broder, Google Distinguished Scientist. "The ability to protect private data rests on protocols for confirming an owner's identity and for ensuring the integrity and confidentiality of communications. These widely used protocols were made possible through the ideas and methods pioneered by Diffie and Hellman."

Cryptography is a practice that facilitates communication between two parties so that the communication

Introduction to the Cell Multiprocessor

Introduction to the Cell multiprocessor. J. A. Kahle, M. N. Day, H. P. Hofstee, C. R. Johns, T. R. Maeurer, D. Shippy.

This paper provides an introductory overview of the Cell multiprocessor. Cell represents a revolutionary extension of conventional microprocessor architecture and organization. The paper discusses the history of the project, the program objectives and challenges, the design concept, the architecture and programming models, and the implementation.

Introduction: History of the project

Initial discussion on the collaborative effort to develop Cell began with support from CEOs from the Sony and IBM companies: Sony as a content provider and IBM as a leading-edge technology and server company. Collaboration was initiated among SCEI (Sony Computer Entertainment Incorporated), IBM, for microprocessor development, and Toshiba, as a development and high-volume manufacturing technology partner. This led to high-level architectural discussions among the three companies during the summer of 2000. During a critical meeting in Tokyo, it was determined that traditional architectural organizations would not deliver the computational power that SCEI sought […] processors in order to provide the required computational density and power efficiency. After several months of architectural discussion and contract negotiations, the STI (SCEI–Toshiba–IBM) Design Center was formally opened in Austin, Texas, on March 9, 2001. The STI Design Center represented a joint investment in design of about $400,000,000. Separate joint collaborations were also set in place for process technology development.

A number of key elements were employed to drive the success of the Cell multiprocessor design. First, a holistic design approach was used, encompassing processor architecture, hardware implementation, system structures, and software programming models.

IBM Infosphere

Software. Steve Mills, Senior Vice President and Group Executive, Software Group.

Software Performance: A Decade of Growth
[Chart: Revenue of $12.6B (2000), $18.2B (2006, +$5.6B) and $21.4B (2009, +$3.2B). Pre-Tax Income of $2.8B (2000), $5.5B (2006) and $8.1B (2009), with margins of 21%, 27% and 34% (+6 pts, +7 pts) and CGR of 12% for '00–'06 and 14% for '06–'09. GAAP view.]
• Grew revenue 1.7X and profit 2.9X, expanding margins 13 points
• #1 Middleware Market Leader *
• Increased Key Branded Middleware from 38% to 59% of Software revenues
• Acquired 60+ companies
• Increased number of development labs globally from 15 to 42

2010 Roadmap Performance (Segment PTI Growth Model: 12% - 15%)
• Grew PTI to $8B at a 14% CGR
• Expanded PTI Margin by 7 points

Launched high growth initiatives
• Smarter Planet solutions
• Business Analytics & Optimization

© 2010 International Business Machines Corporation. * Source: IBM Market Insights 04/20/10

Software Will Help Deliver IBM's 2015 Roadmap
[Chart: IBM Roadmap to 2015; labels include base growth, revenue growth, operating leverage, portfolio mix, growth initiatives and future acquisitions.]

Continue to drive growth and share gain
Capitalize on market opportunity *
• Middleware opportunity growth of 5% CGR
– High growth products growing 2X faster than rest of middleware
– Growth markets growing 2X faster than major markets
– BAO opportunity growth of 7%

Accelerate shift to higher value middleware business
Invest for growth
• Developer population = 33K
Extend Global Reach
• 42 global development labs with skills in 31 countries
Acquisitions to extend