The Mathematics of the RSA Public-Key Cryptosystem Burt Kaliski RSA Laboratories

Recommended publications

Public-Key Cryptography

Public Key Cryptography, EJ Jung

Basic Public Key Cryptography
Given: everybody knows Bob’s public key (how is this achieved in practice?); only Bob knows the corresponding private key.
Goals: 1. Alice wants to send a secret message to Bob. 2. Bob wants to authenticate himself.

Requirements for Public-Key Crypto
• Key generation: computationally easy to generate a pair (public key PK, private key SK)
  – Computationally infeasible to determine private key SK given only public key PK
• Encryption: given plaintext M and public key PK, easy to compute ciphertext C = E_PK(M)
• Decryption: given ciphertext C = E_PK(M) and private key SK, easy to compute plaintext M
  – Infeasible to compute M from C without SK
  – Decrypt(SK, Encrypt(PK, M)) = M

Requirements for Public-Key Cryptography (Henric Johnson)
1. Computationally easy for a party B to generate a pair (public key KU_b, private key KR_b)
2. Easy for the sender to generate ciphertext: C = E_KUb(M)
3. Easy for the receiver to decrypt ciphertext using the private key: M = D_KRb(C) = D_KRb[E_KUb(M)]
4. Computationally infeasible to determine the private key (KR_b) knowing the public key (KU_b)
5. Computationally infeasible to recover message M, knowing KU_b and ciphertext C
6. Either of the two keys can be used for encryption, with the other used for decryption: M = D_KRb[E_KUb(M)] = D_KUb[E_KRb(M)]

Public-Key Cryptographic Algorithms
• RSA and Diffie-Hellman
• RSA: Ron Rivest, Adi Shamir and Len Adleman at MIT, in 1977.
  – RSA -
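The requirements listed above can be checked on a toy example. The following is a minimal sketch, assuming textbook RSA with no padding and deliberately tiny primes (61 and 53, chosen only for illustration and not taken from the slides); the function names `generate_keypair` and `apply_key` are hypothetical. It illustrates requirement 6: either key undoes the other.

```python
from math import gcd


def generate_keypair(p: int, q: int, e: int):
    """Build a textbook RSA key pair from two primes and a public exponent."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse; requires Python 3.8 or later
    return (e, n), (d, n)


def apply_key(key, value: int) -> int:
    """Raise value to the key's exponent modulo the key's modulus."""
    exponent, modulus = key
    return pow(value, exponent, modulus)


# Toy parameters for illustration only; real keys use primes of 1024+ bits.
public_key, private_key = generate_keypair(61, 53, 17)

message = 65
# Encrypt with the public key, decrypt with the private key.
ciphertext = apply_key(public_key, message)
recovered = apply_key(private_key, ciphertext)

# The keys are interchangeable: apply the private key first, then the public key.
signed = apply_key(private_key, message)
checked = apply_key(public_key, signed)
```

Here `recovered` and `checked` both equal `message`. Without padding this scheme is not secure; the sketch only shows the algebra behind the requirements.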

500 Natural Sciences and Mathematics

500 Natural sciences and mathematics

Natural sciences: sciences that deal with matter and energy, or with objects and processes observable in nature. Class here interdisciplinary works on natural and applied sciences. Class natural history in 508. Class scientific principles of a subject with the subject, plus notation 01 from Table 1, e.g., scientific principles of photography 770.1. For government policy on science, see 338.9; for applied sciences, see 600. See Manual at 231.7 vs. 213, 500, 576.8; also at 338.9 vs. 352.7, 500; also at 500 vs. 001.

SUMMARY
500.2–.8 [Physical sciences, space sciences, groups of people]
501–509 Standard subdivisions and natural history
510 Mathematics
520 Astronomy and allied sciences
530 Physics
540 Chemistry and allied sciences
550 Earth sciences
560 Paleontology
570 Biology
580 Plants
590 Animals

.2 Physical sciences. For astronomy and allied sciences, see 520; for physics, see 530; for chemistry and allied sciences, see 540; for earth sciences, see 550.
.5 Space sciences. For astronomy, see 520; for earth sciences in other worlds, see 550. For space sciences aspects of a specific subject, see the subject, plus notation 091 from Table 1, e.g., chemical reactions in space 541.390919. See Manual at 520 vs. 500.5, 523.1, 530.1, 919.9.
.8 Groups of people. Add to base number 500.8 the numbers following —08 in notation 081–089 from Table 1, e.g., women in science 500.82.

501 Philosophy and theory. Class scientific method as a general research technique in 001.4; class scientific method applied in the natural sciences in 507.2.

502 Miscellany
.8 Auxiliary techniques and procedures; apparatus, equipment, materials. Including microscopy; microscopes; interdisciplinary works on microscopy. Class stereology with compound microscopes, stereology with electron microscopes in 502; class interdisciplinary works on photomicrography in 778.3. For manufacture of microscopes, see 681. -

Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber

Outline
• Chapter 19: Security (cont)
• A Method for Obtaining Digital Signatures and Public-Key Cryptosystems, Ronald L. Rivest, Adi Shamir, and Leonard M. Adleman. Communications of the ACM 21, 2 (Feb. 1978)
  – RSA Algorithm
  – First practical public key crypto system
• Authentication in Distributed Systems: Theory and Practice, Butler Lampson, Martin Abadi, Michael Burrows, Edward Wobber
  – Butler Lampson (MSR): one of the designers of the SDS 940 time-sharing system, the Alto personal distributed computing system, the Xerox 9700 laser printer, two-phase commit protocols, the Autonet LAN, and several programming languages
  – Martin Abadi (Bell Labs)
  – Michael Burrows, Edward Wobber (DEC/Compaq/HP SRC)
Oct-21-03, CSE 542: Operating Systems

Encryption
• Properties of a good encryption technique:
  – Relatively simple for authorized users to encrypt and decrypt data.
  – The encryption scheme depends not on the secrecy of the algorithm but on a parameter of the algorithm called the encryption key.
  – Extremely difficult for an intruder to determine the encryption key.

Strength
• The strength of a crypto system depends on the strength of its keys
• Computers get faster, so keys have to become harder to keep up
• If breaking a code takes more effort than the protected data is worth, the code is adequate
  – Transferring money from my bank to my credit card and Citibank transferring billions of dollars to another bank should not have the same key strength

Encryption methods
• Symmetric cryptography
  – Sender and receiver know the secret key (a priori)
  – Fast encryption, but key exchange should happen outside the system
• Asymmetric cryptography
  – Each person maintains two keys, public and private
    • M ≡ PrivateKey(PublicKey(M))
    • M ≡ PublicKey(PrivateKey(M))
  – The public part is available to anyone; the private part is known only to its owner
  – E.g. -

Making Sense of Snowden, Part II: What's Significant in the NSA

Web extra. Making Sense of Snowden, Part II: What’s Significant in the NSA Revelations. Susan Landau, Google.

When The Guardian began publishing leaked documents from the National Security Agency (NSA) on 6 June 2013, each day brought startling news. From the NSA’s collection of metadata records of all calls made within the US [1] to programs that collected and stored data of “non-US” persons [2] to the UK Government Communications Headquarters’ (GCHQ’s) interception of 200 transatlantic fiberoptic cables at the point where they reached Britain [3] to the NSA’s penetration of communications by leaders at the G20 summit, the pot was boiling over. But by late June, things had slowed to a simmer. The summer carried news that the NSA was partially funding the GCHQ’s surveillance efforts, [4] and The Guardian ...

... a widely used cryptographic standard has vastly wider impact, especially because industry relies so heavily on secure Internet commerce. Then The Guardian and Der Spiegel revealed extensive, directed US eavesdropping on European leaders [7] as well as broad surveillance by its fellow members of the “Five Eyes”: Australia, Canada, New Zealand, and the UK. Finally, there were documents showing the NSA targeting Google and Yahoo’s inter-datacenter communications. [8,9]

I summarized the initial revelations in the July/August issue of IEEE Security & Privacy. [10] This installment, written in late December, examines the more recent ones; as a Web extra, it offers more details on the teaser I wrote for the January/February 2014 issue of IEEE Security & Privacy magazine (www.computer.org/security). -

Public Key Cryptography And

Public Key Cryptography and RSA. Raj Jain, Washington University in Saint Louis, Saint Louis, MO 63130. Audio/Video recordings of this lecture are available at: http://www.cse.wustl.edu/~jain/cse571-11/ (Washington University in St. Louis, CSE571S, ©2011 Raj Jain)

Overview
1. Public Key Encryption
2. Symmetric vs. Public-Key
3. RSA Public Key Encryption
4. RSA Key Construction
5. Optimizing Private Key Operations
6. RSA Security
These slides are based partly on Lawrie Brown’s slides supplied with William Stallings’s book “Cryptography and Network Security: Principles and Practice,” 5th Ed, 2011.

Public Key Encryption
• Invented in 1975 by Diffie and Hellman at Stanford
• Encrypted_Message = Encrypt(Key1, Message)
• Message = Decrypt(Key2, Encrypted_Message)
• Text → (Key1) → Ciphertext → (Key2) → Text
• Keys are interchangeable: Text → (Key2) → Ciphertext → (Key1) → Text
• One key is made public while the other is kept private
• Sender knows only the public key of the receiver
• Asymmetric

Public Key Encryption Example
• Rivest, Shamir, and Adleman at MIT
• RSA: Encrypted_Message = m^3 mod 187
• Message = Encrypted_Message^107 mod 187
• Key1 = <3, 187>, Key2 = <107, 187>
• Message = 5
• Encrypted Message = 5^3 = 125
• Message = 125^107 mod 187 = 5
  = 125^(64+32+8+2+1) mod 187
  = {(125^64 mod 187)(125^32 mod 187)...(125^2 mod 187)(125 mod 187)} mod 187
Washington University in -
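The worked example on the slide can be reproduced directly. The sketch below uses the slide's keys <3, 187> and <107, 187>; the helper name `square_and_multiply` is an assumption introduced here to mirror the decomposition 107 = 64 + 32 + 8 + 2 + 1, and Python's built-in three-argument `pow` performs the same computation.

```python
N = 187   # modulus from the slide (187 = 11 * 17)
E = 3     # Key1 exponent
D = 107   # Key2 exponent


def square_and_multiply(base: int, exponent: int, modulus: int) -> int:
    """Modular exponentiation by scanning the exponent's bits, low to high."""
    result = 1
    base %= modulus
    while exponent > 0:
        if exponent & 1:                    # this power of two is in the exponent
            result = (result * base) % modulus
        base = (base * base) % modulus      # base^1, base^2, base^4, ...
        exponent >>= 1
    return result


message = 5
encrypted = pow(message, E, N)                    # 5^3 mod 187
decrypted = square_and_multiply(encrypted, D, N)  # 125^107 mod 187

print(encrypted, decrypted)  # prints "125 5"
```

Reducing modulo 187 after every multiplication keeps the intermediate values small, which is why the slide splits the exponent into powers of two instead of computing 125^107 outright.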

Rivest, Shamir, and Adleman Receive 2002 Turing Award, Volume 50

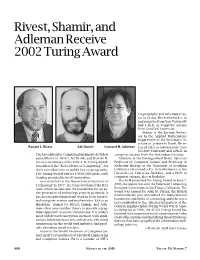

Rivest, Shamir, and Adleman Receive 2002 Turing Award

[Photographs: Ronald L. Rivest, Adi Shamir, Leonard M. Adleman]

The Association for Computing Machinery (ACM) has named RONALD L. RIVEST, ADI SHAMIR, and LEONARD M. ADLEMAN as winners of the 2002 A. M. Turing Award, considered the “Nobel Prize of Computing”, for their contributions to public key cryptography. The Turing Award carries a $100,000 prize, with funding provided by Intel Corporation.

As researchers at the Massachusetts Institute of Technology in 1977, the team developed the RSA code, which has become the foundation for an entire generation of technology security products. It has also inspired important work in both theoretical computer science and mathematics.

... Cryptography and Information Security Group. He received a B.A. in mathematics from Yale University and a Ph.D. in computer science from Stanford University.

Shamir is the Borman Professor in the Applied Mathematics Department of the Weizmann Institute of Science in Israel. He received a B.S. in mathematics from Tel Aviv University and a Ph.D. in computer science from the Weizmann Institute.

Adleman is the Distinguished Henry Salvatori Professor of Computer Science and Professor of Molecular Biology at the University of Southern California. He earned a B.S. in mathematics at the University of California, Berkeley, and a Ph.D. in computer science, also at Berkeley.

The ACM presented the Turing Award on June 7, 2003, in conjunction with the Federated Computing Research Conference in San Diego, California. The award was named for Alan M. Turing, the British mathematician who articulated the mathematical foundation and limits of computing and who was a -

Math 788M: Computational Number Theory (Instructor’s Notes)

Math 788M: Computational Number Theory (Instructor’s Notes)

These notes are for a course taught by Michael Filaseta in the Spring of 1996 and being updated for Fall of 2007.

The Very Beginning:
• A positive integer n can be written in n steps.
• The role of numerals (now O(log n) steps)
• Can we do better? (Example: The largest known prime contains 258716 digits and doesn’t take long to write down. It’s 2^859433 − 1.)

Running Time of Algorithms:
• A positive integer n in base b contains ⌊log_b n⌋ + 1 digits.
• Big-Oh & Little-Oh Notation (as well as ≪, ≫, ∼, ≍)
  Example 1: log(1 + (1/n)) = O(1/n)
  Example 2: ⌊log_b n⌋ + 1 ≍ log n
  Example 3: 1 + 2 + ··· + n ≪ n^2
  Example 4: f a polynomial of degree k ⟹ f(n) = O(n^k)
  Example 5: (r + 1)^π ∼ r^π
• We will want algorithms to run quickly (in a small number of steps) in comparison to the length of the input. For example, we may ask, “How quickly can we factor a positive integer n?” One considers the length of the input n to be of order log n (corresponding to the number of binary digits n has). An algorithm runs in polynomial time if the number of steps it takes is bounded above by a polynomial in the length of the input. An algorithm to factor n in polynomial time would require that it take O((log n)^k) steps (and that it factor n).

Addition and Subtraction (of n and m):
• We are taught how to do binary addition and subtraction in O(log n + log m) steps. -
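The digit-count formula in these notes is easy to check numerically. The sketch below is an illustration, not part of the notes: `digit_count` counts digits by repeated division (which matches ⌊log_b n⌋ + 1 without floating-point rounding), and `mersenne_digit_count` is a hypothetical helper that applies the formula to 2^p − 1 using logarithms, so the number itself never has to be printed.

```python
import math


def digit_count(n: int, base: int = 10) -> int:
    """Number of digits of a positive integer n in the given base."""
    if n <= 0 or base < 2:
        raise ValueError("n must be positive and base at least 2")
    count = 0
    while n > 0:
        n //= base   # drop the lowest digit
        count += 1
    return count


def mersenne_digit_count(p: int) -> int:
    """Decimal digits of 2**p - 1, via floor(p * log10(2)) + 1.

    2**p is never a power of 10, so 2**p - 1 has as many digits as 2**p.
    """
    return math.floor(p * math.log10(2)) + 1


print(digit_count(255, 2))           # 8 binary digits
print(mersenne_digit_count(859433))  # 258716, the figure quoted in the notes
```

The second call shows why the length of the input is taken to be of order log n: the exponent 859433 is six digits long, while the number it describes has 258716.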

Number Theory

4 Number Theory

Number theory is the study of the integers. Why anyone would want to study the integers is not immediately obvious. First of all, what’s to know? There’s 0, there’s 1, 2, 3, and so on, and, oh yeah, -1, -2, .... Which one don’t you understand? Second, what practical value is there in it? The mathematician G. H. Hardy expressed pleasure in its impracticality when he wrote:

[Number theorists] may be justified in rejoicing that there is one science, at any rate, and that their own, whose very remoteness from ordinary human activities should keep it gentle and clean.

Hardy was specially concerned that number theory not be used in warfare; he was a pacifist. You may applaud his sentiments, but he got it wrong: Number Theory underlies modern cryptography, which is what makes secure online communication possible. Secure communication is of course crucial in war, which may leave poor Hardy spinning in his grave. It’s also central to online commerce. Every time you buy a book from Amazon, check your grades on WebSIS, or use a PayPal account, you are relying on number theoretic algorithms. Number theory also provides an excellent environment for us to practice and apply the proof techniques that we developed in Chapters 2 and 3. Since we’ll be focusing on properties of the integers, we’ll adopt the default convention in this chapter that variables range over the set of integers, Z.

4.1 Divisibility

The nature of number theory emerges as soon as we consider the divides relation:

a divides b iff ak = b for some k.

The notation, a | b, is an abbreviation for “a divides b.” If a | b, then we also say that b is a multiple of a. -
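The definition of divisibility translates into a one-line test. The following is a small sketch; the function name `divides` is introduced here for illustration. The zero case follows the definition literally: 0 · k = b has a solution only when b = 0, while every integer a divides 0 (take k = 0).

```python
def divides(a: int, b: int) -> bool:
    """Return True iff a * k == b for some integer k, i.e. a | b."""
    if a == 0:
        return b == 0      # 0 * k is always 0, so 0 divides only 0
    return b % a == 0      # a nonzero a divides b exactly when the remainder is 0


print(divides(7, 63))    # True, since 7 * 9 = 63
print(divides(7, 64))    # False
print(divides(-3, 12))   # True, since -3 * -4 = 12
print(divides(5, 0))     # True, since 5 * 0 = 0
```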

Crypto Wars of the 1990s

Danielle Kehl, Andi Wilson, and Kevin Bankston. DOOMED TO REPEAT HISTORY? LESSONS FROM THE CRYPTO WARS OF THE 1990S. Cybersecurity Initiative, June 2015.

© 2015 NEW AMERICA. This report carries a Creative Commons license, which permits non-commercial re-use of New America content when proper attribution is provided. This means you are free to copy, display and distribute New America’s work, or include our content in derivative works, under the following conditions:

ATTRIBUTION. You must clearly attribute the work to New America, and provide a link back to www.newamerica.org.
NONCOMMERCIAL. You may not use this work for commercial purposes without explicit prior permission from New America.
SHARE ALIKE. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one.

For the full legal code of this Creative Commons license, please visit creativecommons.org. If you have any questions about citing or reusing New America content, please contact us.

AUTHORS
Danielle Kehl, Senior Policy Analyst, Open Technology Institute
Andi Wilson, Program Associate, Open Technology Institute
Kevin Bankston, Director, Open Technology Institute

ABOUT THE OPEN TECHNOLOGY INSTITUTE
The Open Technology Institute at New America is committed to freedom and social justice in the digital age. To achieve these goals, it intervenes in traditional policy debates, builds technology, and deploys tools with communities. OTI brings together a unique mix of technologists, policy experts, lawyers, community organizers, and urban planners to examine the impacts of technology and policy on people, commerce, and communities.

ACKNOWLEDGEMENTS
The authors would like to thank Hal Abelson, Steven Bellovin, Jerry Berman, Matt Blaze, Alan Davidson, Joseph Hall, Lance Hoffman, Seth Schoen, and Danny Weitzner -

Ch 13 Digital Signature

CH 13 DIGITAL SIGNATURE. Cryptography and Network Security. HanJung Mason Yun

Index
13.1 Digital Signatures
13.2 Elgamal Digital Signature Scheme
13.3 Schnorr Digital Signature Scheme
13.4 NIST Digital Signature Algorithm
13.6 RSA-PSS Digital Signature Algorithm

13.1 Digital Signature - Properties
• It must verify the author and the date and time of the signature.
• It must authenticate the contents at the time of the signature.
• It must be verifiable by third parties, to resolve disputes.
• The digital signature function includes authentication.

Attacks and Forgeries
• Key-only attack
• Known message attack
• Generic chosen message attack
• Directed chosen message attack
• Adaptive chosen message attack

Attacks and Forgeries
• Total break
• Universal forgery
• Selective forgery
• Existential forgery

Digital Signature Requirements
• It must be a bit pattern that depends on the message.
• It must use some information unique to the sender to prevent both forgery and denial.
• It must be relatively easy to produce the digital signature.
• It must be relatively easy to recognize and verify the digital signature.
• It must be computationally infeasible to forge a digital signature, either by constructing a new message for an existing digital signature or by constructing a fraudulent digital signature for a given message.
• It must be practical to retain a copy of the digital signature in storage.

Direct Digital Signature
• Digital signature scheme that involves only the communicating parties.
• It must authenticate the contents at the time of the signature.
• It must be verifiable by third parties, to resolve disputes.
• Thus, the digital signature function includes the authentication function. -

What Is a Cryptosystem?

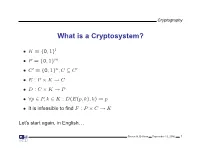

Cryptography. Steven M. Bellovin, September 13, 2006

What is a Cryptosystem?
• K = {0, 1}^l
• P = {0, 1}^m
• C′ = {0, 1}^n, C ⊆ C′
• E : P × K → C
• D : C × K → P
• ∀p ∈ P, k ∈ K : D(E(p, k), k) = p
• It is infeasible to find F : P × C → K
Let’s start again, in English.

What is a Cryptosystem?
A cryptosystem is a pair of algorithms that take a key and convert plaintext to ciphertext and back. Plaintext is what you want to protect; ciphertext should appear to be random gibberish. The design and analysis of today’s cryptographic algorithms is highly mathematical. Do not try to design your own algorithms.

A Tiny Bit of History
• Encryption goes back thousands of years
• Classical ciphers encrypted letters (and perhaps digits), and yielded all sorts of bizarre outputs.
• The advent of military telegraphy led to ciphers that produced only letters.

Codes vs. Ciphers
• Ciphers operate syntactically, on letters or groups of letters: A → D, B → E, etc.
• Codes operate semantically, on words, phrases, or sentences, per this 1910 codebook

A 1910 Commercial Codebook
[Figure: page from a 1910 commercial codebook]

Commercial Telegraph Codes
• Most were aimed at economy
• Secrecy from casual snoopers was a useful side-effect, but not the primary motivation
• That said, a few such codes were intended for secrecy; I have some in my collection, including one intended for union use -
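The formal definition at the start of these slides (a pair E, D with D(E(p, k), k) = p) can be made concrete with a toy instance. The sketch below is an illustration only, assuming the special case l = m = n with E and D both implemented as byte-wise XOR (the one-time pad); the function names are hypothetical, and the scheme is only sound when each key is random, as long as the message, and never reused. It demonstrates the interface and is not a design to deploy.

```python
import secrets


def encrypt(plaintext: bytes, key: bytes) -> bytes:
    """E : P x K -> C, here XOR of plaintext and key of equal length."""
    if len(key) != len(plaintext):
        raise ValueError("key must be exactly as long as the plaintext")
    return bytes(p ^ k for p, k in zip(plaintext, key))


def decrypt(ciphertext: bytes, key: bytes) -> bytes:
    """D : C x K -> P; XOR is its own inverse, so D reuses E."""
    return encrypt(ciphertext, key)


plaintext = b"attack at dawn"
key = secrets.token_bytes(len(plaintext))   # fresh random key, used once
ciphertext = encrypt(plaintext, key)

# The correctness condition from the slide: D(E(p, k), k) = p.
assert decrypt(ciphertext, key) == plaintext
```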

CS 255: Intro to Cryptography 1 Introduction 2 End-To-End

CS 255: Intro to Cryptography, Winter 2021. Prof. Dan Boneh. Programming Assignment 2. Due Monday, March 1st, 11:59pm.

1 Introduction

In this assignment, you are tasked with implementing a secure and efficient end-to-end encrypted chat client using the Double Ratchet Algorithm, a popular session setup protocol that powers real-world chat systems such as Signal and WhatsApp. As an additional challenge, assume you live in a country with government surveillance. Therefore, all messages sent are required to include the session key encrypted with a fixed public key issued by the government. In your implementation, you will make use of various cryptographic primitives we have discussed in class: notably, key exchange, public key encryption, digital signatures, and authenticated encryption. Because it is ill-advised to implement your own primitives in cryptography, you should use an established library: in this case, the Stanford Javascript Crypto Library (SJCL). We will provide starter code that contains a basic template, which you will be able to fill in to satisfy the functionality and security properties described below.

2 End-to-end Encrypted Chat Client

2.1 Implementation Details

Your chat client will use the Double Ratchet Algorithm to provide end-to-end encrypted communications with other clients. To evaluate your messaging client, we will check that two or more instances of your implementation can communicate with each other properly. We feel that it is best to understand the Double Ratchet Algorithm straight from the source, so we ask that you read Sections 1, 2, and 3 of Signal’s published specification here: https://signal.