Choosing Key Sizes for Cryptography

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Public-Key Cryptography

Public Key Cryptography
EJ Jung

Basic Public Key Cryptography
[Figure: Alice, holding Bob's public key, and Bob, holding the matching private key]
Given:
• Everybody knows Bob’s public key (how is this achieved in practice?)
• Only Bob knows the corresponding private key
Goals:
1. Alice wants to send a secret message to Bob
2. Bob wants to authenticate himself

Requirements for Public-Key Crypto
• Key generation: computationally easy to generate a pair (public key PK, private key SK)
  • Computationally infeasible to determine private key SK given only public key PK
• Encryption: given plaintext M and public key PK, easy to compute ciphertext C = E_PK(M)
• Decryption: given ciphertext C = E_PK(M) and private key SK, easy to compute plaintext M
  • Infeasible to compute M from C without SK
  • Decrypt(SK, Encrypt(PK, M)) = M

Requirements for Public-Key Cryptography (Henric Johnson)
1. Computationally easy for a party B to generate a pair (public key KUb, private key KRb)
2. Easy for sender to generate ciphertext: C = E_KUb(M)
3. Easy for the receiver to decrypt ciphertext using private key: M = D_KRb(C) = D_KRb[E_KUb(M)]
4. Computationally infeasible to determine private key (KRb) knowing public key (KUb)
5. Computationally infeasible to recover message M, knowing KUb and ciphertext C
6. Either of the two keys can be used for encryption, with the other used for decryption: M = D_KRb[E_KUb(M)] = D_KUb[E_KRb(M)]

Public-Key Cryptographic Algorithms
• RSA and Diffie-Hellman
• RSA: Ron Rivest, Adi Shamir and Len Adleman at MIT, in 1977.
  • RSA -

Public Key Cryptography And

Public Key Cryptography and RSA
Raj Jain, Washington University in Saint Louis, Saint Louis, MO 63130, [email protected]
Audio/Video recordings of this lecture are available at: http://www.cse.wustl.edu/~jain/cse571-11/
Washington University in St. Louis, CSE571S, ©2011 Raj Jain

Overview
1. Public Key Encryption
2. Symmetric vs. Public-Key
3. RSA Public Key Encryption
4. RSA Key Construction
5. Optimizing Private Key Operations
6. RSA Security
These slides are based partly on Lawrie Brown’s slides supplied with William Stallings’s book “Cryptography and Network Security: Principles and Practice,” 5th Ed, 2011.

Public Key Encryption
• Invented in 1975 by Diffie and Hellman at Stanford
• Encrypted_Message = Encrypt(Key1, Message)
• Message = Decrypt(Key2, Encrypted_Message)
  [Figure: Text → (Key1) → Ciphertext → (Key2) → Text]
• Keys are interchangeable:
  [Figure: Text → (Key2) → Ciphertext → (Key1) → Text]
• One key is made public while the other is kept private
• Sender knows only public key of the receiver
• Asymmetric

Public Key Encryption Example
• Rivest, Shamir, and Adleman at MIT
• RSA: Encrypted_Message = m^3 mod 187
• Message = Encrypted_Message^107 mod 187
• Key1 = <3,187>, Key2 = <107,187>
• Message = 5
• Encrypted Message = 5^3 = 125
• Message = 125^107 mod 187 = 5
  = 125^(64+32+8+2+1) mod 187
  = {(125^64 mod 187)(125^32 mod 187)...(125^2 mod 187)(125 mod 187)} mod 187
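The small RSA example in this excerpt (keys <3,187> and <107,187>, message 5) can be checked with a few lines of Python. This is only a sketch of textbook RSA with square-and-multiply exponentiation; the function names `modexp`, `encrypt` and `decrypt` are illustrative and not taken from the slides, and keys this small offer no security.

```python
def modexp(base: int, exponent: int, modulus: int) -> int:
    """Square-and-multiply: walk the exponent's bits from least significant up."""
    result = 1
    base %= modulus
    while exponent > 0:
        if exponent & 1:                  # this bit of the exponent is set
            result = (result * base) % modulus
        base = (base * base) % modulus    # base^(2^k) for the next bit
        exponent >>= 1
    return result


# Toy key pair from the excerpt: modulus 187 = 11 * 17.
KEY1 = (3, 187)    # made public
KEY2 = (107, 187)  # kept private


def encrypt(message: int, key: tuple) -> int:
    exponent, modulus = key
    return modexp(message, exponent, modulus)


def decrypt(ciphertext: int, key: tuple) -> int:
    exponent, modulus = key
    return modexp(ciphertext, exponent, modulus)


if __name__ == "__main__":
    c = encrypt(5, KEY1)
    print(c, decrypt(c, KEY2))
```

The loop mirrors the decomposition 107 = 64 + 32 + 8 + 2 + 1 used in the excerpt: each set bit of the exponent contributes one factor of the form 125^(2^k) mod 187.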

Cryptography in Modern World

Cryptography in Modern World
Julius O. Olwenyi, Aby Tino Thomas, Ayad Barsoum*
St. Mary’s University, San Antonio, TX (USA)
Emails: [email protected], [email protected], [email protected]

Abstract: Cryptography and encryption have been used for secure communication. In the modern world, cryptography is a very important tool for protecting information in computer systems. With the invention of the World Wide Web or Internet, computer systems are highly interconnected and accessible from any part of the world. As more systems get interconnected, more threat actors try to gain access to critical information stored on the network. It is the responsibility of data owners or organizations to keep this data secure, and encryption is the main tool used to secure information. In this paper, we will focus on different techniques of cryptography and their modern applications.

Keywords: Cryptography, Encryption, Decryption, Data security, Hybrid Encryption

I. INTRODUCTION
Back in the day, cryptography was not all about hiding messages or secret communication; in ancient Egypt, where it began, it was carved into the walls of ...

... where a letter in plaintext is simply shifted 3 places down the alphabet [4,5].

ABCDEFGHIJKLMNOPQRSTUVWXYZ
DEFGHIJKLMNOPQRSTUVWXYZABC

The ciphertext of the plaintext “CRYPTOGRAPHY” will be “FUBSWRJUDSKB” in a Caesar cipher. A more recent derivative of the Caesar cipher is Rot13, which shifts 13 places down the alphabet instead of 3. Rot13 was not about data protection; it was used on online forums where members could share inappropriate language or nasty jokes without necessarily being offensive, since those interested in the “jokes” had to shift characters 13 places to read the message, and those not interested did not need to go through the hassle of converting the cipher.

In the 16th century, the French cryptographer Blaise de Vigenere [4,5] developed the first polyalphabetic substitution cipher, based on the Caesar cipher but more difficult to crack. -
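The shift cipher described in this excerpt is easy to reproduce. The sketch below assumes uppercase A to Z input and leaves every other character unchanged; the function name `caesar` is illustrative and does not come from the paper.

```python
import string

ALPHABET = string.ascii_uppercase  # "ABCDEFGHIJKLMNOPQRSTUVWXYZ"


def caesar(text: str, shift: int) -> str:
    """Shift each uppercase letter `shift` places down the alphabet, wrapping at Z."""
    shifted = []
    for ch in text:
        if ch in ALPHABET:
            shifted.append(ALPHABET[(ALPHABET.index(ch) + shift) % 26])
        else:
            shifted.append(ch)  # leave spaces, digits and punctuation as they are
    return "".join(shifted)


if __name__ == "__main__":
    print(caesar(ALPHABET, 3))         # the shifted alphabet row
    print(caesar("CRYPTOGRAPHY", 3))   # Caesar cipher, shift of 3
    print(caesar("CRYPTOGRAPHY", 13))  # Rot13
```

Because 13 is half of 26, applying Rot13 twice returns the original text, which is why forum readers could decode a message with the same operation that encoded it.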

On the NIST Lightweight Cryptography Standardization

On the NIST Lightweight Cryptography Standardization
Meltem Sönmez Turan, NIST Lightweight Cryptography Team
ECC 2019: 23rd Workshop on Elliptic Curve Cryptography, December 2, 2019

Outline
• NIST's Cryptography Standards
• Overview - Lightweight Cryptography
• NIST Lightweight Cryptography Standardization Process
• Announcements

NIST's Cryptography Standards

National Institute of Standards and Technology
• Non-regulatory federal agency within U.S. Department of Commerce.
• Founded in 1901, known as the National Bureau of Standards (NBS) prior to 1988.
• Headquarters in Gaithersburg, Maryland, and laboratories in Boulder, Colorado.
• Employs around 6,000 employees and associates.
NIST's Mission: to promote U.S. innovation and industrial competitiveness by advancing measurement science, standards, and technology in ways that enhance economic security and improve our quality of life.

NIST Organization Chart
Laboratory Programs
• Center for Nanoscale Science and Technology
• Communications Technology Lab.
• Engineering Lab.
• Information Technology Lab.
• Material Measurement Lab.
• NIST Center for Neutron Research
• Physical Measurement Lab.
Computer Security Division
• Cryptographic Technology
• Secure Systems and Applications
• Security Outreach and Integration
• Security Components and Mechanisms
• Security Test, Validation and Measurements
Information Technology Lab.
• Advanced Network Technologies
• Applied and Computational Mathematics
• Applied Cybersecurity
• Computer Security
• Information Access
• Software and Systems
• Statistical -

CS 255: Intro to Cryptography 1 Introduction 2 End-To-End

Programming Assignment 2, Winter 2021
CS 255: Intro to Cryptography, Prof. Dan Boneh
Due Monday, March 1st, 11:59pm

1 Introduction
In this assignment, you are tasked with implementing a secure and efficient end-to-end encrypted chat client using the Double Ratchet Algorithm, a popular session setup protocol that powers real-world chat systems such as Signal and WhatsApp. As an additional challenge, assume you live in a country with government surveillance. Thereby, all messages sent are required to include the session key encrypted with a fixed public key issued by the government. In your implementation, you will make use of various cryptographic primitives we have discussed in class, notably key exchange, public key encryption, digital signatures, and authenticated encryption. Because it is ill-advised to implement your own primitives in cryptography, you should use an established library: in this case, the Stanford Javascript Crypto Library (SJCL). We will provide starter code that contains a basic template, which you will be able to fill in to satisfy the functionality and security properties described below.

2 End-to-end Encrypted Chat Client
2.1 Implementation Details
Your chat client will use the Double Ratchet Algorithm to provide end-to-end encrypted communications with other clients. To evaluate your messaging client, we will check that two or more instances of your implementation can communicate with each other properly. We feel that it is best to understand the Double Ratchet Algorithm straight from the source, so we ask that you read Sections 1, 2, and 3 of Signal’s published specification here: https://signal. -
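To give a feel for what "ratcheting" means, here is a rough Python sketch of a single symmetric-key ratchet step (a KDF chain). It is not the assignment's starter code and does not use SJCL; it assumes HMAC-SHA256 with the one-byte constants 0x01 and 0x02 as inputs, one of the options suggested in Signal's specification, and the name `kdf_chain_step` is hypothetical. The Diffie-Hellman ratchet, headers and message encryption are all omitted.

```python
import hashlib
import hmac
from typing import Tuple


def kdf_chain_step(chain_key: bytes) -> Tuple[bytes, bytes]:
    """Advance the chain once: return (next_chain_key, message_key)."""
    # Distinct constants keep the two derived keys independent of each other.
    message_key = hmac.new(chain_key, b"\x01", hashlib.sha256).digest()
    next_chain_key = hmac.new(chain_key, b"\x02", hashlib.sha256).digest()
    return next_chain_key, message_key


if __name__ == "__main__":
    # Both parties are assumed to already share this 32-byte starting chain key.
    sender_ck = receiver_ck = b"\x00" * 32
    for _ in range(3):
        sender_ck, sender_mk = kdf_chain_step(sender_ck)
        receiver_ck, receiver_mk = kdf_chain_step(receiver_ck)
        print(sender_mk == receiver_mk)
```

Each message gets a fresh key, and because HMAC is one-way, learning a later chain key does not reveal the message keys derived before it.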

A Review on Elliptic Curve Cryptography for Embedded Systems

International Journal of Computer Science & Information Technology (IJCSIT), Vol 3, No 3, June 2011

A REVIEW ON ELLIPTIC CURVE CRYPTOGRAPHY FOR EMBEDDED SYSTEMS
Rahat Afreen 1 and S.C. Mehrotra 2
1 Tom Patrick Institute of Computer & I.T, Dr. Rafiq Zakaria Campus, Rauza Bagh, Aurangabad. (Maharashtra) INDIA [email protected]
2 Department of C.S. & I.T., Dr. B.A.M. University, Aurangabad. (Maharashtra) INDIA [email protected]

ABSTRACT
The use of elliptic curves in cryptography was independently proposed by Neal Koblitz and Victor Miller in 1985. Since then, elliptic curve cryptography (ECC) has evolved into a vast field for public key cryptography (PKC) systems. In a PKC system, we use separate keys to encode and decode the data. Since one of the keys is distributed publicly in PKC systems, the strength of security depends on a large key size. The mathematical problems of prime factorization and discrete logarithm were previously used in PKC systems. ECC has been shown to provide the same level of security with relatively small key sizes. Research in the field of ECC is mostly focused on its implementation on application-specific systems. Such systems have restricted resources like storage, processing speed and domain-specific CPU architecture.

KEYWORDS
Elliptic curve cryptography, Public Key Cryptography, embedded systems, Elliptic Curve Digital Signature Algorithm (ECDSA), Elliptic Curve Diffie Hellman Key Exchange (ECDH)

1. INTRODUCTION
The changing global scenario shows an elegant merging of computing and communication in such a way that computers with wired communication are being rapidly replaced by smaller handheld embedded computers using wireless communication in almost every field. This has increased data privacy and security requirements. -
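The claim that ECC reaches the same security level with smaller keys can be made concrete with the comparable-strength figures published in NIST SP 800-57 Part 1. The numbers below come from that NIST table rather than from this paper, the ECC column uses the lower bound of each NIST range, and the names `COMPARABLE_KEY_SIZES` and `size_ratio` are illustrative.

```python
# Security strength in bits -> (RSA modulus bits, minimum ECC key bits),
# following the comparable-strength table in NIST SP 800-57 Part 1.
COMPARABLE_KEY_SIZES = {
    80: (1024, 160),
    112: (2048, 224),
    128: (3072, 256),
    192: (7680, 384),
    256: (15360, 512),
}


def size_ratio(strength_bits: int) -> float:
    """How many times larger the RSA key is than the ECC key at one strength."""
    rsa_bits, ecc_bits = COMPARABLE_KEY_SIZES[strength_bits]
    return rsa_bits / ecc_bits


if __name__ == "__main__":
    for strength, (rsa_bits, ecc_bits) in COMPARABLE_KEY_SIZES.items():
        print(strength, rsa_bits, ecc_bits, round(size_ratio(strength), 1))
```

The ratio grows with the target strength, which is one reason ECC is attractive on devices with limited storage and bandwidth.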

Cryptographic Control Standard, Version

Nuclear Regulatory Commission
Office of the Chief Information Officer
Computer Security Standard

Office Instruction: OCIO-CS-STD-2009
Office Instruction Title: Cryptographic Control Standard
Revision Number: 2.0
Issuance: Date of last signature below
Effective Date: October 1, 2017
Primary Contacts: Kathy Lyons-Burke, Senior Level Advisor for Information Security
Responsible Organization: OCIO
Summary of Changes: OCIO-CS-STD-2009, “Cryptographic Control Standard,” provides the minimum security requirements that must be applied to the Nuclear Regulatory Commission (NRC) systems which utilize cryptographic algorithms, protocols, and cryptographic modules to provide secure communication services. This update is based on the latest versions of the National Institute of Standards and Technology (NIST) Guidance and Federal Information Processing Standards (FIPS) publications, Committee on National Security Systems (CNSS) issuances, and National Security Agency (NSA) requirements.
Training: Upon request
ADAMS Accession No.: ML17024A095

Approvals
Primary Office Owner: Office of the Chief Information Officer
Enterprise Security Architecture Working Group Chair: Kathy Lyons-Burke, 09/26/17
CIO: David Nelson /RA/, 09/26/17
CISO: Jonathan Feibus, 09/26/17

TABLE OF CONTENTS
1 PURPOSE
2 INTRODUCTION -

Basics of Digital Signatures &

Basics of Digital Signatures & PKI

This document provides a quick background to PKI-based digital signatures and an overview of how the signature creation and verification processes work. It also describes how the cryptographic keys used for creating and verifying digital signatures are managed.

1. Background to Digital Signatures
Digital signatures are essentially “enciphered data” created using cryptographic algorithms. The algorithms define how the enciphered data is created for a particular document or message. Standard digital signature algorithms exist so that no one needs to create these from scratch. Digital signature algorithms were first invented in the 1970s and are based on a type of cryptography referred to as “Public Key Cryptography”. By far the most common digital signature algorithm is RSA (named after the inventors Rivest, Shamir and Adleman in 1978); by our estimates it is used in over 80% of the digital signatures in use around the world. This algorithm has been standardised (ISO, ANSI, IETF etc.) and extensively analysed by the cryptographic research community, and it has withstood the test of time: no one has been able to find an efficient way of cracking the RSA algorithm. Another, more recent algorithm is ECDSA (Elliptic Curve Digital Signature Algorithm), which is likely to become popular over time. Digital signatures are used everywhere, even when we are not aware of it. Example uses include retail payment systems like MasterCard/Visa chip and pin, high-value interbank payment systems (CHAPS, BACS, SWIFT etc.), e-Passports and e-ID cards, and logging on to SSL-enabled websites or connecting with corporate VPNs. -
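As a rough illustration of signature creation and verification, the sketch below uses textbook "hash, then apply the private exponent" RSA. The toy parameters n = 187, e = 3, d = 107 are chosen purely for illustration and are not from this document; real RSA signatures use moduli of 2048 bits or more together with a padding scheme such as PSS, both of which are omitted here. The function names are hypothetical.

```python
import hashlib

# Toy parameters for illustration only: n = 11 * 17.
N = 187
E = 3    # public exponent, used to verify
D = 107  # private exponent, used to sign


def digest_mod_n(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """The signer applies the private exponent to the message digest."""
    return pow(digest_mod_n(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone applies the public exponent and compares with a fresh digest."""
    return pow(signature, E, N) == digest_mod_n(message)


if __name__ == "__main__":
    doc = b"I agree to the terms."
    sig = sign(doc)
    print(verify(doc, sig))
```

With a modulus this small, many different messages share a digest, so the sketch only demonstrates the data flow, not any real resistance to forgery.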

Quantum Computing Threat: How to Keep Ahead

Think openly, build securely
White paper: Quantum Computing Threat: How to Keep Ahead
PQShield, February 2021
© PQShield Ltd | www.pqshield.com | PQShield Ltd, Oxford, OX2 7HT, UK

Cryptographic agility and a clear roadmap to the upcoming NIST standards are key to a smooth and secure transition.

1 Background
1.1 New Cryptography Standards
The NIST (U.S. National Institute of Standards and Technology) Post-Quantum Cryptography (PQC) Project has been running since 2016 and is now in its third, final evaluation round. This project standardizes new key establishment and digital signature algorithms that have been designed to be resistant against attacks by quantum computers. These new algorithms are intended to replace current classical-security RSA and Elliptic Curve Cryptography (ECDH, ECDSA) standards in applications. The particular mathematical problems that RSA and Elliptic Curve Cryptography are based on are easy (polynomial-time solvable) for quantum computers. Unless complemented with quantum-safe cryptography, this will allow for the forgery of digital signatures (integrity compromise) and decryption of previously encrypted data (confidentiality compromise) in the future. This is a heightened risk for organizations that need to secure data for long periods of time; government organizations that handle and secure classified information have largely been the drivers of the post-quantum standardization and its adoption in the field, but banks, financial services, healthcare providers, those developing intellectual property and many others increasingly feel the need to ensure that they can protect their customers and IP, now and in the future.

1.2 Quantum Threat and Post-Quantum Cryptography
The current position of NCSC (UK’s National Cyber Security Centre) and NSA (USA’s National Security Agency) is that the best mitigation against the threat of quantum computers is quantum-safe cryptography, also known as post-quantum cryptography (PQC). -

SHA-3 and the Hash Function Keccak

Christof Paar, Jan Pelzl
SHA-3 and The Hash Function Keccak
An extension chapter for “Understanding Cryptography: A Textbook for Students and Practitioners”
www.crypto-textbook.com
Springer

Table of Contents
1 The Hash Function Keccak and the Upcoming SHA-3 Standard
1.1 Brief History of the SHA Family of Hash Functions
1.2 High-level Description of Keccak
1.3 Input Padding and Generating of Output
1.4 The Function Keccak-f (or the Keccak-f Permutation)
1.4.1 Theta (θ) Step
1.4.2 Steps Rho (ρ) and Pi (π)
1.4.3 Chi (χ) Step
1.4.4 Iota (ι) Step
1.5 Implementation in Software and Hardware
1.6 Discussion and Further Reading
1.7 Lessons Learned
Problems
References

Chapter 1 The Hash Function Keccak and the Upcoming SHA-3 Standard
This document [1] is a stand-alone description of the Keccak hash function, which is the basis of the upcoming SHA-3 standard. The description is consistent with the approach used in our book Understanding Cryptography: A Textbook for Students and Practitioners [11]. If you own the book, this document can be considered “Chapter 11b”. However, the book is most certainly not necessary for using the SHA-3 description in this document. You may want to check the companion web site of Understanding Cryptography for more information on Keccak: www.crypto-textbook.com.

In this chapter you will learn:
• A brief history of the SHA-3 selection process
• A high-level description of SHA-3
• The internal structure of SHA-3
• A discussion of the software and hardware implementation of SHA-3
• A problem set and recommended further readings

[1] We would like to thank the Keccak designers as well as Pawel Swierczynski and Christian Zenger for their extremely helpful input to this document. -

Chapter 2 the Data Encryption Standard (DES)

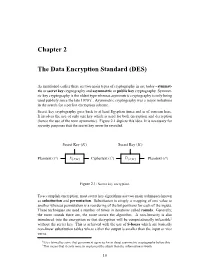

Chapter 2 The Data Encryption Standard (DES)

As mentioned earlier, there are two main types of cryptography in use today: symmetric or secret key cryptography, and asymmetric or public key cryptography. Symmetric key cryptography is the oldest type, whereas asymmetric cryptography has only been used publicly since the late 1970s [1]. Asymmetric cryptography was a major milestone in the search for a perfect encryption scheme. Secret key cryptography goes back to at least Egyptian times and is of concern here. It involves the use of only one key, which is used for both encryption and decryption (hence the use of the term symmetric). Figure 2.1 depicts this idea. It is necessary for security purposes that the secret key never be revealed.

[Figure 2.1: Secret key encryption. Plaintext (P) → E{P,K} → Ciphertext (C) → D{C,K} → Plaintext (P), with the same secret key (K) supplied to both E and D.]

To accomplish encryption, most secret key algorithms use two main techniques known as substitution and permutation. Substitution is simply a mapping of one value to another, whereas permutation is a reordering of the bit positions for each of the inputs. These techniques are used a number of times in iterations called rounds. Generally, the more rounds there are, the more secure the algorithm. A non-linearity is also introduced into the encryption so that decryption will be computationally infeasible [2] without the secret key. This is achieved with the use of S-boxes, which are basically non-linear substitution tables where either the output is smaller than the input or vice versa.

[1] It is claimed by some that government agencies knew about asymmetric cryptography before this. -
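The idea of one secret key driving many rounds can be sketched with a toy Feistel network, the general structure that DES is built on. This is not DES: the block is 64 bits but the round function is a truncated SHA-256 hash standing in for DES's expansion, S-boxes and permutation, the key schedule is equally made up, and all names are hypothetical. It only shows that running the same rounds with the round keys reversed undoes the encryption.

```python
import hashlib

MASK32 = 0xFFFFFFFF


def round_function(half: int, round_key: bytes) -> int:
    """Stand-in non-linear round function (DES uses S-boxes here instead)."""
    data = half.to_bytes(4, "big") + round_key
    return int.from_bytes(hashlib.sha256(data).digest()[:4], "big")


def round_keys(key: bytes, rounds: int) -> list:
    """Made-up key schedule: one derived key per round from the single secret key."""
    return [hashlib.sha256(key + bytes([i])).digest() for i in range(rounds)]


def feistel(block: int, keys: list) -> int:
    left, right = (block >> 32) & MASK32, block & MASK32
    for k in keys:
        # Each round swaps the halves and mixes one half into the other.
        left, right = right, left ^ round_function(right, k)
    return (right << 32) | left  # final swap of the two halves


def encrypt(block: int, key: bytes, rounds: int = 16) -> int:
    return feistel(block, round_keys(key, rounds))


def decrypt(block: int, key: bytes, rounds: int = 16) -> int:
    # Same network, same secret key, round keys applied in reverse order.
    return feistel(block, round_keys(key, rounds)[::-1])


if __name__ == "__main__":
    c = encrypt(0x0123456789ABCDEF, b"secret key")
    print(hex(c), hex(decrypt(c, b"secret key")))
```

A useful property of the Feistel structure is that the round function itself never has to be inverted, which is why a one-way function can stand in for it in this sketch.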

Cryptographic Algorithms and Key Sizes for Personal Identity Verification

Archived NIST Technical Series Publication

The attached publication has been archived (withdrawn), and is provided solely for historical purposes. It may have been superseded by another publication (indicated below).

Archived Publication
Series/Number: NIST Special Publication 800-78-2
Title: Cryptographic Algorithms and Key Sizes for Personal Identity Verification
Publication Date(s): February 2010
Withdrawal Date: December 2010
Withdrawal Note: SP 800-78-2 is superseded in its entirety by the publication of SP 800-78-3 (December 2010).

Superseding Publication(s)
The attached publication has been superseded by the following publication(s):
Series/Number: NIST Special Publication 800-78-3
Title: Cryptographic Algorithms and Key Sizes for Personal Identity Verification
Author(s): W. Timothy Polk, Donna F. Dodson, William E. Burr, Hildegard Ferraiolo, David Cooper
Publication Date(s): December 2010
URL/DOI: http://dx.doi.org/10.6028/NIST.SP.800-78-3

Additional Information (if applicable)
Contact: Computer Security Division (Information Technology Lab)
Latest revision of the attached publication: SP 800-78-4 (as of August 7, 2015)
Related information: http://csrc.nist.gov/groups/SNS/piv/
Withdrawal announcement (link): N/A
Date updated: August 7, 2015

NIST Special Publication 800-78-2
Cryptographic Algorithms and Key Sizes for Personal Identity Verification
W. Timothy Polk, Donna F. Dodson, William E. Burr
INFORMATION SECURITY
Computer Security Division, Information Technology Laboratory, National Institute of Standards and Technology, Gaithersburg, MD, 20899-8930
February 2010
U.S. Department of Commerce, Gary Locke, Secretary
National Institute of Standards and Technology, Dr.