Copyright by Azadeh Mostofi 2018

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Regional Plan for Vassforvaltning for Møre Og Romsdal Vassregion» Samsvarer Med Desse Prinsippa

[Skriv inn tekst] Regional plan for vassforvaltning i Vassregion Møre og Romsdal 2016±2021 20.10.2015 Innhald Forord ......................................................................................................................................... 4 Samandrag .................................................................................................................................. 6 1 Planbeskriving ..................................................................................................................... 8 1.1 Formålet med planen ................................................................................................... 8 1.2 Verknaden av planen ................................................................................................. 10 1.3 Hovudinnhald i planen ............................................................................................... 11 1.4 Forhold til konsekvensutgreiingsforskrifta ................................................................ 11 1.5 Handlingsprogram ..................................................................................................... 11 1.6 Forhold til rammer og retningslinjer som gjeld for området ..................................... 12 1.6.1 Nasjonale føringar .............................................................................................. 12 1.6.2 Om statlege planretningslinjer ........................................................................... 13 1.6.3 Forhold til fornybardirektivet (2009/28/EF) ..................................................... -

Dovrefjell Reinheimen 2008 Lesja - Dovre

Dovrefjell Reinheimen 2008 Lesja - Dovre 6 www.nasjonalparkriket.no Mogop Velkommen Kjære gjest! Velkommen til Bjorli-Lesja og Dovre. Dovrefjell - Sunndalsfjella og Reinheimen nasjonalpark strekker seg utover begge kommunene, med mektige fjelltinder. Snøhetta (2286 moh) ruver majestetisk i landskapet, og er et kjent og kjært turmål. Vi har et mangfold av aktiviteter å tilby dere. Bjorli og Dombås har begge alpinbakker og Reinheimen langrennsløyper, og Dombås har også et flott skiskytteranlegg. Hos oss har du muligheten til å fiske i både fjellvann og elver, du kan jakte på både små- og storvilt, kjøre kanefart, spise god mat på en gammel seter, gå fine turer eller bare ligge i lyngen og se på moskusen… Vi ønsker deg hjertelig velkommen som gjest til vårt rike! Welcome Dear guest! Welcome to Borli-Lesja and Dovre. The Dovrefjell - Sunndalsfjella and Reinheimen National Park, with its mighty mountain peaks, extends over both districts. Snøhetta (2286 metres) rises majestetically in the skyline and is a well-known and popular destination. We can offer you a range of activities. Both Bjorli and Dombås have alpine slopes and cross country tracks, and Dombås has a good ski-shooting range. You can fish in mountain lakes and rivers, you can hunt small and big game, and Reinheimen do canoe- tours, eat good food in historic mountain farms, having nice hiking tours or just lie in the heather and watch the musk ox grazing … A warm welcome to you as a guest in our kingdom! Herzlich Willkommen Lieber Gast! Herzlich Willkommen in Bjorli-Lesja und Dovre. Der Dovrefjell-Sunndalsfjella og Reinheimen Nationalpark erstreckt sich mit seinen mächtigen Berggipfeln über die beiden Gemeinden. -

WEST NORWEGIAN FJORDS UNESCO World Heritage

GEOLOGICAL GUIDES 3 - 2014 RESEARCH WEST NORWEGIAN FJORDS UNESCO World Heritage. Guide to geological excursion from Nærøyfjord to Geirangerfjord By: Inge Aarseth, Atle Nesje and Ola Fredin 2 ‐ West Norwegian Fjords GEOLOGIAL SOCIETY OF NORWAY—GEOLOGICAL GUIDE S 2014‐3 © Geological Society of Norway (NGF) , 2014 ISBN: 978‐82‐92‐39491‐5 NGF Geological guides Editorial committee: Tom Heldal, NGU Ole Lutro, NGU Hans Arne Nakrem, NHM Atle Nesje, UiB Editor: Ann Mari Husås, NGF Front cover illustrations: Atle Nesje View of the outer part of the Nærøyfjord from Bakkanosi mountain (1398m asl.) just above the village Bakka. The picture shows the contrast between the preglacial mountain plateau and the deep intersected fjord. Levels geological guides: The geological guides from NGF, is divided in three leves. Level 1—Schools and the public Level 2—Students Level 3—Research and professional geologists This is a level 3 guide. Published by: Norsk Geologisk Forening c/o Norges Geologiske Undersøkelse N‐7491 Trondheim, Norway E‐mail: [email protected] www.geologi.no GEOLOGICALSOCIETY OF NORWAY —GEOLOGICAL GUIDES 2014‐3 West Norwegian Fjords‐ 3 WEST NORWEGIAN FJORDS: UNESCO World Heritage GUIDE TO GEOLOGICAL EXCURSION FROM NÆRØYFJORD TO GEIRANGERFJORD By Inge Aarseth, University of Bergen Atle Nesje, University of Bergen and Bjerkenes Research Centre, Bergen Ola Fredin, Geological Survey of Norway, Trondheim Abstract Acknowledgements Brian Robins has corrected parts of the text and Eva In addition to magnificent scenery, fjords may display a Bjørseth has assisted in making the final version of the wide variety of geological subjects such as bedrock geol‐ figures . We also thank several colleagues for inputs from ogy, geomorphology, glacial geology, glaciology and sedi‐ their special fields: Haakon Fossen, Jan Mangerud, Eiliv mentology. -

Informasjon Og Priser

INFORMASJON OG PRISER 2020/2021 Veltdalshytta. Foto: Marius Dalseg Sætre Informasjonshefte for DNT Sunnmøres hytter Norsk Engelsk Innhold - Content Innhold - Content .................................................................................................................................... 1 PRAKTISK INFORMASJON COVID-19 ................................................................................................... 2 PRAKTISK INFORMASJON ........................................................................................................................ 5 BETALING FOR HYTTEOPPHOLD .............................................................................................................. 8 PRISER ...................................................................................................................................................... 9 GLUTENFRI MAT PÅ VÅRE HYTTER ........................................................................................................ 10 MIDTUKETILBUD .................................................................................................................................... 11 HYTTENE OG ÅPNINGSTIDER ................................................................................................................. 12 NØKLER .................................................................................................................................................. 12 PROGRAM REINDALSETER .................................................................................................................... -

Kyrkjeblad 1

16 HVAD SOLSKIN ER FOR ... KYRKJEBLAD 1. Hvad solskin er for det sorte muld, er sand oplysning for muldets frænde. Langt mere værd end det røde guld det er sin Gud og sig selv at kende. for NORDDAL Trods mørkets harme, i strålearme af lys og varme Nr 2.2016 47 årgang er lykken klar! 2 Som solen skinner i forårstid, 3. Som urter blomstre og kornet gror og som den varmer i sommerdage, i varme dage og lyse nætter, al sand oplysning er mild og blid, så livs-oplysning i høje Nord så den vort hjerte må vel behage. vor ungdom blomster og frugt forjætter. Trods mørkets harme, Trods mørkets harme, i strålearme i strålearme af lys og varme af lys og varme er hjertensfryd! er frugtbarhed! 4. Som fuglesangen i grønne lund, der liflig klinger i vår og sommer, 5. Vorherre vidner, at lys er godt, vort modersmål i vor ungdoms mund som sandhed elskes, så lyset yndes, skal liflig klinge, når lyset kommer. og med Vorherre, som ler ad spot, Trods mørkets harme, skal værket lykkes, som her begyndes. i strålearme Trods mørkets harme, af lys og varme i strålearme er røsten klar! af lys og varme vor skole stå! Det er den danske presten Grundtvig som har skrive denne songen. Grundtvig var m.a. forfattar, historikar og filosof. Ut frå tanken «menneske først—så kristen» skapte han folkehøyskolen. Den første skulen vart etablert i 1844, og Sagatun folkehøyskole starta i 1864, som den første i Noreg. Grundtvig omtalast ofte som folkehøyskolen sin far, og denne songen som folkehøyskolesangen. -

VIF 8 Lay Eng.Indd

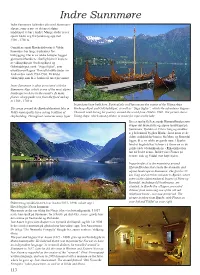

Indre Sunnmøre Indre Sunnmøre forbindes ofte med Sunnmørs- alpene, som er noe av det mest alpine landskapet vi har i landet. Mange steder reiser spisse tinder seg fra fjorden og opp mot 1500 - 1700 m. Områdene rundt Bjørkedalsvatnet i Volda kommune har lange tradisjoner for båtbygging. Her er en rekke båttyper bygget gjennom århundrene. Særlig kjent er kopiene av vikingskipene Osebergskipet og Gokstadskipet, samt “Saga Siglar”, som eventyreren Ragnar Thorseth brukte under sin ferd verden rundt 1984-1986. På bildet vikingskip som bl.a. brukes til turer på vannet. Inner Sunnmøre is often associated with the Sunnmøre Alps, which is one of the most alpine landscape we have in the country. In many places, sharp peaks rise from the fjord and up to 1500 - 1700 m. boats have been built here. Particularly well known are the copies of the Viking ships The areas around the Bjørkedalsvatnet lake in Oseberg-skipet and Gokstadskipet, as well as “Saga Siglar”, which the adventurer Ragnar Volda municipality have a long tradition of Thorseth used during his journey around the world from 1984 to 1986. The picture shows shipbuilding. Throughout centuries many types Viking ships, which,among others, is in use for trips on the lake. Det er særlig fjellene rundt Hjørundfjorden som skaper det dramatiske og alpine landskapet på Sunnmøre. Fjorden er 33 km. lang og strekker seg helt inn til bygden Bjørke, hvor noen av de eldste middelalder-husene fra Møre og Romsdal ligger, bl.a. en rekke av gamle nøst. I Bjørke hindret bygdefolket Telenor i å fjerne en av de gamle røde telefonkioskene - Riksantikvaren har nå fredet denne. -

Konsesjonssøknad

Konsesj onssøknad For 22kV landstrøm til 4 oppdrettsanlegg Innhold 1. Sammendrag..................................................................................................4 2. Kort beskrivelse av søker..................................................................................5 3. Hva søkes det konsesjon for ..............................................................................5 4. Lovverk som er vurdert....................................................................................5 5. Allerede innhentede tillatelser ..........................................................................6 6. Urdaneset .....................................................................................................7 6.1. Overordnet beskrivelse av tiltaket ................................................................7 6.2. Teknisk beskrivelse av tiltaket .....................................................................7 6.3. Grensesnitt mot områdekonsesjonær og bakgrunn for valg................................... 7 6.4. Alternat ive løsninger for valg av grensesnit t .................................................... 8 6.5. Kapasit et i overliggende net t ...................................................................... 8 6.6. Forlegging av kabel ..................................................................................8 6.7. Henvisning t il andre konsesj oner som berøres av t ilt aket (NVEs referansesystem).....................................................................................8 6.8. Begrunnelse -

Norddal 2011

Forord På oppdrag frå fylkesmannen i Møre og Romsdal, har biolog Dag Holtan utført supplerande kartlegging av naturtypar i Norddal kommune. Oppdraget har omfatta kartlegging, verdisetting og avgrensing av naturtypar med artsinformasjon (unntatt vilt), ved både eigne feltundersøkingar og innsamling og systematisering av eksisterande informasjon, og er ei vidareføring av det tilsvarande arbeidet i 1999. Sidan John Bjarne Jordal hadde eige prosjekt med kartlegging av kulturlandskapet i Storfjorden i 2009 og 2010, har det meste av feltarbeidet gått med til nykartlegging i skog og kvalitetssikring av svært viktige område. Bakgrunnen for kartlegginga av naturtypar er mellom anna den politiske målsetjinga, uttrykt i Stortingsmelding 58 (1996-97), om at alle kommunar i landet skal kartlegge og ha oversikt over viktige område for biologisk mangfald på sitt areal. Noreg har òg, saman med fleire andre land, slutta seg til ei internasjonal målsetjing om å stanse tap av biologisk mangfald innan 2010, det såkalla 2010- målet (”Countdown 2010”, no justert til 2020). For å kunne ta vare på biologiske verdiar må ein vite kva verdiar ein har og kor desse finst. Den føreliggjande oversikta over verdifulle naturtypar i Norddal er nok eit viktig steg på vegen i å få betre kunnskap om dei biologiske naturverdiane i kommunen. Underteikna takkar for eit godt samarbeid med Kjell Lyse ved fylkesmannen si miljøvernavdeling. Feltarbeidet er utført av Dag Holtan i perioden mai til oktober 2010. Perry Larsen (Skodje) har delteke i mykje av feltarbeidet, både i 2010 og tidlegare. Resultata frå talrike feltturar på fritida i perioden 2001 – 2009 er no inkludert i omtalen av dei respektive lokalitetane. -

Naturarven I Møre Og Romsdal

Fylkesmannen Forvaltning i Møre og Romsdal Verneformål Nasjonalparkar og Framtidig verneplanarbeid i Møre og Romsdal Desse har forvaltningsansvaret på plass for alle dei fire store verneområda (Troll- Den eine av kolonnane i tabellen gir informasjon om mune) og Remman (Smøla kommune). Etter ei forskrift heimen, Geiranger-Herdalen, Dovrefjell-Sunndalsfjella landskapsvernområde Marint vern Forvaltning av eit verneområde inneber utøving av det viktigaste formålet med kvart einskild naturreser- om fiske i sjøen, er eit korallrev i Breisunddjupet utafor og Reinheimen) i løpet av 2012. Tabellen på kartsida Marine verneområde vert oppretta for å ta vare på mynde i form av sakshandsaming etter verneforskrif- vat. Dei tidlegare vedtekne verneplanane har sikra eit Ålesund verna mot reiskap som kan skade revet. informerer om kven som har ansvaret for kvart einskild Trollheimen landskapsvernområde ligg i fleire kom- marine verneverdiar. Verneformålet kan gjelde anten ter, forvaltnings-/skjøtselsplanlegging og rapporte- mangfald av verneverdige naturtypar i fylket. Verne- verneområde. munar, medan Innerdalen landskapsvernområde som sjøbotnen, vassøyla eller havoverflata eller ein kombi- formåla spenner frå varmekjær og artsrik edellauvskog . ring. Ansvaret inneber også praktiske oppgåver som grenser inn til Trollheimen ligg i Sunndal kommune. nasjon av desse. Det er eit nasjonalt politisk mål å sikre Skogvern til havstrender og skjer i havgapet. I fleire av verne- informasjon, overvaking, registreringar og eventuelt Dei er viktige som friluftsområde og har mange ulike ørset nokre av sjøområda i fylket ein eller annan form for vern På både nasjonalt- og fylkespolitisk hald er det eit Forvaltningsplanar områda er det behov for ulike skjøtselstiltak for å sikre fysiske tiltak som er naudsynt for å fremme føremålet plante- og dyreartar. -

Biologisk Mangfald Innafor Geiranger-Herdalen Landskapsvernområde

Fylkesmannen i Møre og Romsdal Miljøvernavdelinga Biologisk mangfald innafor Geiranger-Herdalen landskapsvernområde RAPPORT 2001:03 FRAMSIDE: Lengst til venstre: Nyklekt mnemosynesommerfugl Til venstre: Beitemarksopp Lengst til venstre: Fjellmarinøkkel i Vinsåsskredene Til venstre: Stivsildre, Djupdalen Alle foto Dag Holtan FYLKESMANNEN I MØRE OG ROMSDAL Miljøvernavdelinga Fylkeshuset, 6404 Molde Rapport nr.: 2001:03: T ilgjenge Open Tittel: Dato: Biologisk mangfald innafor Geiranger-Herdalen landskapsvernområde. 01.12.2001 Forfattarar: Sidetal: Geir Gaarder, Dag Holt an og Jon Bjarne Jordal 82 Sam an drag: På oppdrag frå Fylkesmannen har Miljøfaglig Utredning, saman med Dag Holtan og John Bjarne Jordal, stilt saman kjent kunnskap og utført eigne biologiske undersøkingar innafor det planlagde Geiranger- Herdalen landskapsvernområde. Utgreiinga tek både for seg kvalitetane til heile området og lokalitetar og artar som reknast for spesielt interessante. Resultata syner at det planlagde landskapsvernområdet har ein variert og spanande natur, med mange biologisk viktige miljø. I alt er 68 verdfulle lokalitetar omtala, fordelt på ei havstrand, 23 kulturlandskap, ei myr, 4 ferskvassområde, 24 skogsmiljø, 4 berg, rasmark og kantkratt og 11 fjellområde. Rapporten inneheld 10 hovuddelar: samandrag, innleiing, materiale og metodar, naturgrunnlaget, flora og vegetasjon, dyreliv, raudlisteartar, verdfulle einskildlokalitetar, diskusjon og til sist litteraturoversikt. Emneord: ISBN 82-7430-124-2 Biologisk mangfald ISSN 0801-9363 Registrering Landskapsvernområde Fagansvarleg: For administrasjonen: _____________________________ __________________________ Ulf Lucasen (seksjonsleiar) Per Fredrik Brun (fylkesmiljøvernsjef) FØREORD FRÅ FORFATTARANE På oppdrag frå Fylkesmannen i M øre og Romsdal, miljøvernavdelinga, har Miljøfaglig Utredning ANS v/Geir Gaarder i samarbeid med Dag Holtan og John Bjarne Jordal skildra dei kjende biologiske verdiane innafor det føreslegne Geiranger-Herdal landskap svernområde. -

From an Unstable Rock Slope at Åkerneset to Tsunami Risk in Western Norway

Coastal Engineering 88 (2014) 101–122 Contents lists available at ScienceDirect Coastal Engineering journal homepage: www.elsevier.com/locate/coastaleng Rockslide tsunamis in complex fjords: From an unstable rock slope at Åkerneset to tsunami risk in western Norway C.B. Harbitz a,b,c,⁎, S. Glimsdal a,b,c, F. Løvholt a,b,c,V.Kveldsvikb,G.K.Pedersena,c, A. Jensen a a Department of Mathematics, University of Oslo, PO Box 1053 Blindern, 0316 Oslo, Norway b Norwegian Geotechnical Institute (NGI), PO Box 3930 Ullevaal Stadion, N-0806 OSLO, Norway c International Centre for Geohazards (ICG), c/o NGI, PO Box 3930 Ullevaal Stadion, N-0806 OSLO, Norway article info abstract Article history: An unstable rock volume of more than 50 million m3 has been detected in the Åkerneset rock slope in the narrow Received 17 December 2012 fjord, Storfjorden, Møre & Romsdal County, Western Norway. If large portions of the volume are released as a Received in revised form 2 February 2014 whole, the rockslide will generate a tsunami that may be devastating to several settlements and numerous vis- Accepted 5 February 2014 iting tourists along the fjord. The threat is analysed by a multidisciplinary approach spanning from rock-slope sta- Available online xxxx bility via rockslide and wave mechanics to hazard zoning and risk assessment. The rockslide tsunami hazard and the tsunami early-warning system related to the two unstable rock slopes at Keywords: Rockslide Åkerneset and Hegguraksla in the complex fjord system are managed by Åknes/Tafjord Beredskap IKS (previous- Tsunami ly the Åknes/Tafjord project). The present paper focuses on the tsunami analyses performed for this company to Risk better understand the effects of rockslide-generated tsunamis from Åkerneset and Hegguraksla. -

Vedtak I Saker Om Grensejustering Mellom Stranda, Norddal Og Stordal Kommunar Eg Viser Til Brev Av 23

Statsråden Ifølgje liste Dykkar ref Vår ref Dato 17/1212-171 2. juli 2018 Vedtak i saker om grensejustering mellom Stranda, Norddal og Stordal kommunar Eg viser til brev av 23. mars frå Fylkesmannen i Møre og Romsdal med fylkesmannens utgreiing og tilråding i sakene om grensejustering mellom kommunane Stranda, Norddal og Stordal, for områda Liabygda og Norddal/Eidsdal. Bakgrunn 8. juni 2017 vedtok Stortinget samanslåing av Norddal og Stordal kommunar. 28. september 2017 gav departementet fylkesmannen i oppdrag å greie ut grensejustering mellom kommunane Stranda og Norddal og Stordal, for områdane Liabygda og Norddal/Eidsdal. Oppdraget for Norddal/Eidsdal, frå Norddal kommune til Stranda, blei gitt etter initiativ frå innbyggarar i Norddal, som fremja søknaden til fylkesmannen i juni 2016. Oppdraget for Liabygda, frå Stranda til den nye kommunen av Norddal og Stordal, blei gitt etter at fylkesmannen tidlegare hadde tilrådd ei utgreiing av dette området, og det var naturleg å sjå dei to sakene i samanheng. Norddal og Eidsdal Det er 488 innbyggarar i bygdene Norddal og Eidsdal, noko som utgjer 29 prosent av innbyggartalet i dagens Norddal kommune. Bygdene utgjer om lag 21 prosent av arealet i dagens Norddal. Det er barnehage og barneskule i bygdene, andre kommunale tenester blir gitt av Norddal kommune med utgangspunkt i kommunesenteret. Det er litt ulik reisetid frå ulike delar av bygdene til kommunale tenester i Stranda og Norddal, men det er kortare veg til kommunesenteret i Norddal kommune enn til kommunesenteret i Stranda. Postadresse: Postboks 8112 Dep 0032 Oslo Kontoradresse: Akersg. 59 Telefon* 22 24 90 90 Org no.: 972 417 858 Ei spørjeundersøking blant innbyggarane i bygdene viser at 57 prosent ønsker å høyre til Stranda kommune, og at 26 prosent ønsker å høyre til Norddal kommune.