A Hybrid Deep Learning Architecture for the Classification of Superhero

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Pdfs/Ent Malaysia.Pdf Bagozzi, R., Baumgartner, H., and Yi, Y

Editorial Board

- Balakrishnan Parasuraman Universiti Malaysia Sabah, Malaysia
- Benjamin James. Inyang University of Calabar, Nigeria
- Cyril Foropon University of Manitoba, Canada
- David H. Kent Golden Gate University, USA
- Donald Henry Ah Pak Xi’an Jiaotong-Liverpool University, China
- Fulford Richard Edith Cowan University, Australia
- Goi Chai Lee Curtin University of Technology, Malaysia
- Hafizi Muhamad Ali Universiti Utara Malaysia, Malaysia
- Kevin James Daly University of Western Sydney, Australia
- K. G. Viswanadhan NSS College of Engineering, India
- Lisa Wang Canadian Center of Science and Education, Canada
- Mahdi Salehi Zanjan University, Iran
- Matthew M. Chew Hong Kong Baptist University, Hong Kong
- Muhammad Aslam Khan HITEC University Taxila, Pakistan
- Muhammad Madi Bin Abdullah Universiti Teknologi Malaysia, Malaysia
- Roberto Bergami Vitoria University, Australia
- Sam C. Okoroafo University of Toledo, USA
- Sathya Swaroop Debasish Fakir Mohan University, India
- Tobias Basse University of Applied Sciences, Germany
- Wen-Hsien Tsai National Central University, Taiwan

International Journal of Business and Management, October 2009

Contents

- The Impact of the Marketing Activities of Family Owned Businesses on Consumer Purchase Intentions. Sam C. Okoroafo & Anthony Koh
- The Review of Empirical Researches on IT Investment Announcements on the Market Value of Firms. Lu Zhang & Jinghua Huang
- The Value Relevance of Book Values, Earnings and Cash Flows: Evidence from Korea. Gee-Jung, Kwon
- The Distinguishing Background, the Path and the Pattern--Analysis on -

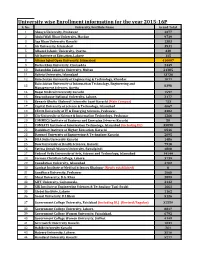

University Wise Enrollment Information for the Year 2015-16P S

University wise Enrollment information for the year 2015-16P

| S. No. | University/Institute Name | Grand Total |
|---|---|---|
| 1 | Abasyn University, Peshawar | 4377 |
| 2 | Abdul Wali Khan University, Mardan | 9739 |
| 3 | Aga Khan University Karachi | 1383 |
| 4 | Air University, Islamabad | 3531 |
| 5 | Alhamd Islamic University, Quetta | 338 |
| 6 | Ali Institute of Education, Lahore | 115 |
| 8 | Allama Iqbal Open University, Islamabad | 416607 |
| 9 | Bacha Khan University, Charsadda | 2449 |
| 10 | Bahauddin Zakariya University, Multan | 21385 |
| 11 | Bahria University, Islamabad | 13736 |
| 12 | Balochistan University of Engineering & Technology, Khuzdar | 1071 |
| 13 | Balochistan University of Information Technology, Engineering and Management Sciences, Quetta | 8398 |
| 14 | Baqai Medical University Karachi | 1597 |
| 15 | Beaconhouse National University, Lahore | 2177 |
| 16 | Benazir Bhutto Shaheed University Lyari Karachi (Main Campus) | 753 |
| 17 | Capital University of Science & Technology, Islamabad | 4067 |
| 18 | CECOS University of IT & Emerging Sciences, Peshawar | 3382 |
| 19 | City University of Science & Information Technology, Peshawar | 1266 |
| 20 | COMMECS Institute of Business and Emerging Sciences Karachi | 50 |
| 21 | COMSATS Institute of Information Technology, Islamabad (including DL) | 35890 |
| 22 | Dadabhoy Institute of Higher Education, Karachi | 6546 |
| 23 | Dawood University of Engineering & Technology Karachi | 2095 |
| 24 | DHA Suffa University Karachi | 1486 |
| 25 | Dow University of Health Sciences, Karachi | 7918 |
| 26 | Fatima Jinnah Women University, Rawalpindi | 4808 |
| 27 | Federal Urdu University of Arts, Science and Technology, Islamabad | 14144 |
| 28 | Forman Christian College, Lahore | 3739 |
| 29 | Foundation University, Islamabad | 4702 |
| 30 | Gambat Institute of Medical Sciences Khairpur (Newly established) | 0 |
| 31 | Gandhara University, Peshawar | 1068 |
| 32 | Ghazi University, D.G. Khan | 2899 |
| 33 | GIFT University, Gujranwala | 2132 |
| 34 | GIK Institute of Engineering Sciences & Technology Topi-Swabi | 1661 |
| 35 | Global Institute, Lahore | 1162 |
| 36 | Gomal University, D.I.Khan | 5126 |
| 37 | Government College University, Faislabad (including DL) (Revised/Regular) | 32559 |

38 Government College University, Lahore. -

Sports Directory for Public and Private Universities Public Sector Universities Federal

Sports Directory for Public and Private Universities

Public Sector Universities

Federal:

1. Allama Iqbal Open University, Islamabad
   - Name of Sports Officer: There is no sports officer in the said university, as per information received from the university.
   - Designation:
   - Office Phone No:
   - Cell No:
   - E-mail:
   - Mailing Address:
2. Bahria University, Islamabad
   - Name of Sports Officer: Malik Bashir Ahmad
   - Designation: Dy. Director Sports
   - Office Phone No: 051-9261279
   - Cell No: 0331-2221333
   - E-mail: [email protected]
   - Mailing Address: Bahria University, Naval Complex, E-8, Shangrila Road, Islamabad
   - Name of Sports Officer: Mirza Tasleem
   - Designation: Sports Officer
   - Office Phone No: 051-9261279
   - Cell No:
   - E-mail: [email protected]
   - Mailing Address: as above
3. International Islamic University, Islamabad
   - Name of Sports Officer: Muhammad Khalid Chaudhry
   - Designation: Sports Officer
   - Office Phone No: 051-9019656
   - Cell No: 0333-5120533
   - E-mail: [email protected]
   - Mailing Address: Sports Officer, IIUI, H-12, Islamabad
4. National University of Modern Languages (NUML), Islamabad
   - Name of Sports Officer: No Sports Officer
   - Designation:
   - Office Phone No: 051-9257646
   - Cell No:
   - E-mail:
   - Mailing Address: Sector H-9, Islamabad
   - Name of Sports Officer: Saeed Ahmed
   - Designation: Demonstrator
   - Office Phone No: 051-9257646
   - Cell No: 0335-5794434
   - E-mail: [email protected]
   - Mailing Address: as above
5. Quaid-e-Azam University, Islamabad
   - Name of Sports Officer: M. Safdar Ali
   - Designation: Dy. Director Sports
   - Office Phone No: 051-90642173
   - Cell No: 0333-6359863
   - E-mail: [email protected]
   - Mailing Address: Quaid-e-Azam University, Islamabad
6. National University of Sciences and Technology, Islamabad
   - Name of Sports Officer: Mrs. -

Wrinkling Prediction of Aluminum 5456-H116 Sheet Metals Under Uni-Axial and Bi-Axial Loading Through FE Simulations

INTERNATIONAL JOURNAL OF MULTIDISCIPLINARY SCIENCES AND ENGINEERING, VOL. 2, NO. 5, AUGUST 2011

Wrinkling Prediction of Aluminum 5456-H116 Sheet Metals under Uni-Axial and Bi-Axial Loading through FE Simulations

Amer Sattar 1 and Irfan A. Manarvi 2

1,2 Department of Mechanical Engineering, HITEC University, Taxila, Pakistan

Abstract: Wrinkling is one of the most undesirable defects in forming processes, especially when dealing with thin sheets. Methods have to be devised to control the extent of damage. This defect if left un-attended can cause irretrievable loss. Remedial measures include proper constraining of the thin sheet as well as appropriate loading techniques. FEM software can be effectively used not only to predict the extent of damage, but damage control measures can also be simulated to suggest appropriate action to be taken at forming stage. This would drastically cut the cost incurred to remove the “Wrinkles” at later stage. Removal of this defect after the forming process may be not only expensive but sometimes impossible, as has been observed in die making.

[...] properties of thin sheets are different from the bulk materials, the fact which has come to limelight due to better measuring tools now available to engineers. The miniaturization of the forming geometry causes the so called “scaling effect”, which leads to a different material behaviour in the micro scale compared to the macro scale [8]. Thus it has become imperative to study material properties and their behavior when small dimensions are encountered during manufacturing processes. In this paper focus is on thin sheet of .2mm thickness. There are likely chances that deformation would -

Molybdenum and Cobalt Silicide Field Emitter Arrays

Experimental characterization of gasoline sprays under highly evaporating conditions

Muhammad Mahabat Khan 1,2,3*, Nadeem Ahmed Sheikh 3, Azfar Khalid 1, Waqas Akbar Lughmani 1

1 Department of Mechanical Engineering, Capital University of Science and Technology, Islamabad, Pakistan
2 Laboratoire de Mécanique des Fluides et d’Acoustique, Ecole Centrale de Lyon, France
3 Department of Mechanical Engineering, HITEC University, Taxila, Pakistan
* Corresponding Author email: [email protected]

Abstract

An experimental investigation of multistream gasoline sprays under highly evaporating conditions is carried out in this paper. A temperature increase of the fuel and low engine pressure could lead to flash boiling. The spray shape is normally modified significantly under flash boiling conditions. The expansion of the spray plumes, along with a reduction in the axial momentum, causes the jets to merge and creates a low-pressure area below the injector’s nozzle. These effects initiate the collapse of the spray cone and lead to the formation of a single jet plume or a big cluster-like structure. The collapsing sprays reduce the exposed surface and therefore last longer and subsequently penetrate more. Spray plume momentum increase, jet plume reduction and spray target widening could delay or prevent the closure condition and limit the penetration (delayed formation of the cluster promotes evaporation). These spray characteristics are investigated experimentally using shadowgraphy, for five and six hole injectors, under various boundary conditions. Six hole injectors produce more collapsing sprays in comparison to the five hole injector due to enhanced jet to jet interactions. The spray collapse tendency reduces with an increase in injection pressure due to the high axial momentum of the spray plumes. -

Contemporary Issues Relating to Labour Relations and Human Resources Practices in the Lumber Industry in Quebec

Global Journal of Management And Business Research Volume 11 Issue 1 Version 1.0 February 2011 Type: Double Blind Peer Reviewed International Research Journal Publisher: Global Journals Inc. (USA) ISSN: 0975-5853

Analyzing the Terrorist Activities and Their Implications in Pakistan through Datamining

By Shan Majeed Khan, Dr. Irfan Manarvi, HITEC University, Taxila

Abstract: The events of September 11, 2001 changed the global political scenario fundamentally. The U.S. traced the terrorist outrages in New York and Washington to Al-Qaeda and the Taliban regime in Afghanistan. As a result, the U.S. declared war against international terrorism, targeting Afghanistan, for which Pakistan's support was imperative. The cooperation with the U.S. required withdrawing support to the Taliban and starting a crackdown on the militant Jihadi and sectarian outfits, which had close links with the Taliban and Al-Qaeda, for which Pakistan had to pay a high price. In 2008, suicide attacks in Pakistan reached an unprecedented level in the history of modern terrorism. It has been the scene of horrific acts of terrorist violence and suicide bombings in different areas of Pakistan, most notably in NWFP and FATA. The deteriorating law and order situation in the NWFP and FATA resulted in many deaths and casualties of the security forces and civilians.

Keywords: Pakistan, War on Terror, Terrorism, Civilian, Suicide Bombing, Federally Administered Tribal Areas.

Classification: GJMBR-A FOR Classification: 080109

Contemporary Issues Relating to Labour Relations and Human Resources Practices in the Lumber Industry in Quebec

Strictly as per the compliance and regulations of: © 2011 Shan Majeed Khan, Dr. -

University of Wah Annual Report 2015

UNIVERSITY OF WAH ANNUAL REPORT 2015

Bismillah ir-Rahman ir-Rahim: “(I start) in the name of Allah, the Most Beneficent, the Most Merciful”

Table of Contents

- Message from the Chancellor
- Message from the Chairman Board of Governors
- Message from the Vice Chancellor
- Brief History of the University
- Year in Review
- University Management
- Academic Activities
- Academic Seminars / Conferences / Workshops
- Research and Development
- Quality Assurance
- Faculty Development
- Students Enrolment
- University Building Economies -

Kashif Imdad Electrical Engineer

IJENS-RPG [IJENS Researchers Promotion Group] ID: IJENS-1125-Kashif Kashif Imdad Electrical Engineer Address: House # 13-B, Street # 4, Rehber Coloney Mohallah Awanabad HMC Taxila, Pakistan Phone: +92-314-5320579 Cell: +92-314-5320579 Email: [email protected] SUMMARY: Engineer with experience in Power Systems, High voltage Engineering, Electrical drives. Sustainable energy devices, Ability to formulate and implement new ideas. AWARD: Won Best Technical Design Award with cash prize of Rs. 20,000 worth 695 Euro in National Power Engineering Contest 2005 Won Presentation skill Award from NWFP University of Engineering Peshawar EDUCATION: Start Finish Awarding Qualification Institution Year Year Date PhD (Experience Based) 2012 Corllins University USA MS Electrical Engineering University of Engineering & 2007 2010 2011 Specialization in Power Technology Taxila NWFP University of Engineering BE Electrical Engineering 2002 2006 2007 &Technology HSSC (Pre Engineering) 2000 2002 2002 Gandhara college, Wah Cantt SSC (Science) 1998 2000 2000 FG School NO-7 , Wah Cantt FIELD EXPERIENCE Executive Engineer Construction Electricity (Sep 2011-March 2012) (A senior engineer slot) i. To design and construct new 11KV and low voltage lines ii. To prepare annual budget, arrange stores and manage logistics for the entire division iii. Electrical estimations, valuations and its specifications for Electrical overhead and under Ground system Assistant Manager, HMC, Taxila (July 2009 – Aug 2010) . In charge Electrical Power Systems, High Voltage engineering etc . Instructional material preparation and delivery Sale’s Manager, Akhtar solars Pakistan www.akhtersolar.com (June 2006/08) . Marketing, and delivery . Labs preparation and supervision International Journals of Engineering & Sciences IJENS www.ijens.org IJENS-RPG [IJENS Researchers Promotion Group] ID: IJENS-1125-Kashif CERTIFICATIONS . -

Table of Contents

Table of Contents

International Journal of Manufacturing, Materials, and Mechanical Engineering
Volume 6 • Issue 1 • January-March 2016 • ISSN: 2156-1680 • eISSN: 2156-1672
An official publication of the Information Resources Management Association

Research Articles

- Cutting Speed in Nano-Cutting as MD Modelling Parameter
  - Nikolaos E. Karkalos, National Technical University of Athens, Athens, Greece
  - Angelos P. Markopoulos, National Technical University of Athens, Athens, Greece
  - Dimitrios E. Manolakos, National Technical University of Athens, Athens, Greece
- Electroless Nickel-Phosphorus Composite Coatings: A Review
  - Prasanna Gadhari, Jadavpur University, Kolkata, India
  - Prasanta Sahoo, Jadavpur University, Kolkata, India
- FEA Simulation Based Performance Study of Multi Speed Transmission Gearbox
  - Ashwani Kumar, Graphic Era University, Dehradun, India
  - Pravin P Patil, Graphic Era University, Dehradun, India
- Improvement in Power Efficiency of Injection Molding Machine by Reduction in Plasticization Losses
  - Muhammad Khan, Mechanical Engineering Department, HITEC University, Taxila, Pakistan
  - Nizar Ullah Khan, Department of Mechanical Engineering, Oakland University, Rochester, MI, USA
  - Muhammad Junaid Aziz, Mechanical Engineering Department, University of Engineering & Technology, Taxila, Pakistan
  - Hafiz Kaleem Ullah, Mechanical Engineering Department, HITEC University, Taxila, Pakistan
  - Muhammad Ali Shahid, Ripah International University, Islamabad, Pakistan

Copyright

The International Journal of Manufacturing, Materials, and Mechanical Engineering (IJMMME) (ISSN 2156-1680; eISSN 2156-1672), Copyright © 2016 IGI Global. All rights, including translation into other languages reserved by the publisher. No part of this journal may be reproduced or used in any form or by any means without written permission from the publisher, except for noncommercial, educational use including classroom teaching purposes. Product or company names used in this journal are for identification purposes only. -

5th Ranking of Pakistani Higher Education Institutions (HEIs) 2015

HIGHER EDUCATION COMMISSION

5th Ranking of Pakistani Higher Education Institutions (HEIs) 2015

Announced: 23.2.2016

Objective

To create a culture of competition among the Higher Education Institutions (HEIs); to encourage HEIs to compete at the international level and improve the quality and standards of education; and to serve as a tool for HEIs' self-assessment of their performance for improvement.

Introduction

Rankings provide a complete picture to stakeholders such as researchers, students, the business community, parents and industry, so that they can compare institutions according to the parameters they need, such as quality and research. For the development and progress of any country, the quality of higher education is the key factor. HEIs are considered to be the originators of change and progress of nations. In order to strengthen the quality of higher education in Pakistan, the Higher Education Commission (HEC) has taken various initiatives to bring the HEIs of Pakistan on par with international standards. Ranking is one of the measures used to gauge the success of the HEIs' efforts to achieve international competitiveness in education, research and innovation. Rankings are a debatable subject all over the world, in spite of the difficulties associated with ranking. This is the 5th ranking of Pakistani universities announced by the Higher Education Commission of Pakistan. The previous four rankings were announced in 2006, 2012, 2013 and 2015.

Methodology

The ranking methodology has been improved over time in light of feedback received from HEIs and to make HEC's ranking more compatible with global rankings. There is no change in the ranking criteria for the current year (2015); the criteria are the same as those used for the 2014 ranking. -

Participating Institutions

LIST OF PARTICIPATING INSTITUTES

| S.No | Name | Main Campus | City | Province |
|---|---|---|---|---|
| 1 | Abasyn University | Islamabad | Islamabad | Khyber Pakhtunkhwa |
| 2 | Abasyn University | Peshawar | Peshawar | Khyber Pakhtunkhwa |
| 3 | Abbottabad University of Science and Technology | Havelian | Abbottabad | Khyber Pakhtunkhwa |
| 4 | Abdul Wali Khan University | Mardan | Mardan | Khyber Pakhtunkhwa |
| 5 | Air University | Islamabad | Islamabad | Federal Capital |
| 6 | Air University | Multan | Multan | Federal Capital |
| 7 | Al-Hamd Islamic University | Quetta | Quetta | Balochistan |
| 8 | Allama Iqbal Open University | Main | Islamabad | Federal Capital |
| 9 | Bacha Khan University | Charsada | Charsada | Khyber Pakhtunkhwa |
| 10 | Bahauddin Zakariya University | Main | Multan | Punjab |
| 11 | Bahria University | Islamabad | Islamabad | Federal Capital |
| 12 | Bahria University | Karachi | Karachi | Federal Capital |
| 13 | Bahria University | Lahore | Lahore | Federal Capital |
| 14 | Balochistan University of Engineering and Information Technology | Khuzdar | Khuzdar | Balochistan |
| 15 | Balochistan University of Information Technology, Engineering & Management Sciences | Quetta | Quetta | Balochistan |
| 16 | Barret Hodgson University | The Salim Habib | Karachi | Sindh |
| 17 | Beaconhouse National University | Main | Lahore | Punjab |
| 18 | Benazir Bhutto Shaheed University Lyari | Karachi | Karachi | Sindh |
| 19 | Bilquis Postgraduate College For Women | PAF Base Nur Khan | Rawalpindi | Punjab |
| 20 | Brains Institute | Peshawar | Peshawar | Khyber Pakhtunkhwa |
| 21 | Capital University of Science and Technology | Main | Islamabad | Federal Capital |

CECOS University of Information Technology & Khyber -

August 11, 2021

3rd Conference on Sustainability in Civil Engineering

ISBN: 978-969-23344-2-6

SECOND CALL FOR PAPERS

August 11, 2021

Department of Civil Engineering, Capital University of Science & Technology, Islamabad Expressway, Kahuta Road, Zone-V Islamabad. Tel: +92-51-111-555-666 EXT 359 & 401

- Dr. Rabee Shamass London South Bank University, UK
- Dr. Shunde Qin WSP, UK
- Dr. Hamid Mehmood Transport Dept. Victoria, Australia
- Dr. Mizan Ahmed Monash University, Australia
- Dr. Wajiha Mohsin Shehzad Massey University, New Zealand
- Dr. Mohsin Shehzad Mott MacDonald, New Zealand
- Dr. Anas Bin Ibrahim UiTM Cawangan Pulau Pinang, Malaysia
- Dr. Khairunisa Binti Muthusamy Universiti Malaysia Pahang, Malaysia
- Dr. Waris Ali Khan Universiti Putra Malaysia
- Dr. Wesam Salah Alaloul Universiti Teknologi PETRONAS (UTP), Malaysia
- Dr Teh Hee Min Universiti Teknologi PETRONAS (UTP), Malaysia
- Dr. Cao Mingli Dalian University of Technology (DUT), China
- Dr. Li Li Northwest A&F University, China
- Dr. Fenyvesi Oliver University in Budapest, Hungary
- Dr. Libo Yan Technische Universität Braunschweig, Germany
- Dr. Piotr Smarzewski Lublin University of Technology, Poland
- Dr. You Dong The Hong Kong Polytechnic University, Hong Kong
- Dr. Mehran Khan The Hong Kong Polytechnic University, Hong Kong
- Dr. Claudio Oyarzo Vera Universidad Católica de la Santísima Concepción, Chile
- Dr. Munir Ahmed DAR Engineering, Kingdom of Saudi Arabia
- Dr. Umar Farooq Islamic University of Madinah, Kingdom of Saudi Arabia
- Dr. Mohsin Usman Qureshi University in Sohar, Oman
- Dr. M Zia ur Rehman Hashmi Global Change Impact Studies Centre, Pakistan
- Dr. Irfan Yousuf National Electric Power Regulatory Authority, Pakistan
- Dr. Sher Jamal Khan NUST, Islamabad, Pakistan
- Dr.