Solving High-Speed Memory Interface Challenges with Low-Cost FPGAs


Recommended publications

Memory & Devices

Memory & Devices

Memory • Random Access Memory (vs. Serial Access Memory) • Different flavors at different levels – Physical Makeup (CMOS, DRAM) – Low Level Architectures (FPM, EDO, BEDO, SDRAM, DDR) • Cache uses SRAM: Static Random Access Memory – No refresh (6 transistors/bit vs. 1 transistor) • Main Memory is DRAM: Dynamic Random Access Memory – Dynamic since it needs to be refreshed periodically (1% time) – Addresses divided into 2 halves (Memory as a 2D matrix): • RAS or Row Access Strobe • CAS or Column Access Strobe

Random-Access Memory (RAM) Key features – RAM is packaged as a chip. – Basic storage unit is a cell (one bit per cell). – Multiple RAM chips form a memory.

Static RAM (SRAM) – Each cell stores a bit with a six-transistor circuit. – Retains value indefinitely, as long as it is kept powered. – Relatively insensitive to disturbances such as electrical noise. – Faster and more expensive than DRAM.

Dynamic RAM (DRAM) – Each cell stores a bit with a capacitor and transistor. – Value must be refreshed every 10-100 ms. – Sensitive to disturbances. – Slower and cheaper than SRAM.

Semiconductor Memory Types: Static RAM • Bits stored in transistor “latches” → no capacitors! – no charge leak, no refresh needed • Pro: no refresh circuits, faster • Con: more complex construction, larger per bit, more expensive. Transistors “switch” faster than capacitors charge! • Cache

Static RAM Structure [Figure: six transistors per bit (“flip flop”), with example 0/1 states]

Static RAM Operation • Transistor arrangement (flip flop) has 2 stable logic states • Write 1. signal bit line: High → 1, Low → 0 2. address line active → “switch” flip flop to stable state matching bit line • Read no need 1. -
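The "addresses divided into 2 halves" point in the snippet above can be illustrated with a short sketch. It assumes the upper bits form the row half (presented with RAS) and the lower bits form the column half (presented with CAS); the function name and the 32 x 32 array size are hypothetical and chosen only for illustration.

```python
def split_dram_address(addr: int, row_bits: int, col_bits: int) -> tuple[int, int]:
    """Split a flat cell address into (row, column) halves.

    Assumption: the row is taken from the upper bits and the column
    from the lower bits; real parts may map the bits differently.
    """
    total_bits = row_bits + col_bits
    if not 0 <= addr < (1 << total_bits):
        raise ValueError(f"address {addr:#x} does not fit in {total_bits} bits")
    row = addr >> col_bits               # half presented with RAS
    col = addr & ((1 << col_bits) - 1)   # half presented with CAS
    return row, col


# A 10-bit address on a hypothetical 32 x 32 cell array.
print(split_dram_address(0x2A5, row_bits=5, col_bits=5))  # → (21, 5)
```

Sending the two halves one after the other lets a chip use roughly half as many address pins as a flat address would need.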

DDR and DDR2 SDRAM Controller Compiler User Guide

DDR and DDR2 SDRAM Controller Compiler User Guide. 101 Innovation Drive, San Jose, CA 95134, www.altera.com. Software Version: 9.0. Document Date: March 2009.

Copyright © 2009 Altera Corporation. All rights reserved. Altera, The Programmable Solutions Company, the stylized Altera logo, specific device designations, and all other words and logos that are identified as trademarks and/or service marks are, unless noted otherwise, the trademarks and service marks of Altera Corporation in the U.S. and other countries. All other product or service names are the property of their respective holders. Altera products are protected under numerous U.S. and foreign patents and pending applications, maskwork rights, and copyrights. Altera warrants performance of its semiconductor products to current specifications in accordance with Altera's standard warranty, but reserves the right to make changes to any products and services at any time without notice. Altera assumes no responsibility or liability arising out of the application or use of any information, product, or service described herein except as expressly agreed to in writing by Altera Corporation. Altera customers are advised to obtain the latest version of device specifications before relying on any published information and before placing orders for products or services. UG-DDRSDRAM-10.0

Contents
Chapter 1. About This Compiler: Release Information; Device Family Support; Features; General Description; Performance and Resource Utilization; Installation and Licensing; OpenCore Plus Evaluation
Chapter 2. Getting Started: Design Flow; SOPC Builder Design Flow; DDR & DDR2 SDRAM Controller Walkthrough . -

AXP Internal 2-Apr-20 1

2-Apr-20 AXP Internal. Class 6. Subject: Computer Science. Title of the Book: IT Planet Petabyte. Chapter 2: Computer Memory.

GENERAL INSTRUCTIONS: • Exercises to be written in the book. • Assignment questions to be done in ruled sheets. • The YouTube link is for the explanation of Primary and Secondary Memory. YouTube Link: ➢ https://youtu.be/aOgvgHiazQA

INTRODUCTION: ➢ Computers can store a large amount of data safely in their memory for future use. ➢ A computer’s memory is measured either in bits or bytes. ➢ The memory of a computer is divided into two categories: Primary Memory and Secondary Memory. ➢ There are two types of Primary Memory: ROM and RAM. ➢ Cache Memory is used to store programs and instructions that are frequently used.

EXPLANATION: Computer Memory: Memory plays a very important role in a computer. It is the basic unit where data and instructions are stored temporarily. Memory usually consists of one or more chips on the motherboard; that is, it consists of electronic components that store instructions waiting to be executed by the processor, data needed by those instructions, and the results of processing the data. Memory Units: Computer memory is measured in bits and bytes. A bit is the smallest unit of information that a computer can process and store. A group of 4 bits is known as a nibble, and a group of 8 bits is called a byte. -

Semiconductor Memories

Semiconductor Memories. Prof. MacDonald

Types of Memories
• Volatile Memories – require power supply to retain information
– dynamic memories: use charge to store information and require refreshing
– static memories: use feedback (latch) to store information – no refresh required
• Non-Volatile Memories
– ROM (Mask)
– EEPROM
– FLASH – NAND or NOR
– MRAM

Memory Hierarchy [Figure: RF, 100 ps, 100’s of bytes; L1 SRAM, 1 ns, 10’s of Kbytes; L2 SRAM, 10 ns, 100’s of Kbytes; L3 DRAM, 100 ns, 100’s of Mbytes; Disks / Flash, 1 us, Gbytes]

Memory Hierarchy
• Large memories are slow
• Fast memories are small
• Memory hierarchy gives us illusion of large memory space with speed of small memory.
– temporal locality
– spatial locality

Register Files
• Fastest and most robust memory array
• Largest bit cell size
• Basically an array of large latches
• No sense amps – bits provide full rail data out
• Often multi-ported (i.e. 8 read ports, 2 write ports)
• Often used with ALUs in the CPU as source/destination
• Typically less than 10,000 bits
– 32 32-bit fixed point registers
– 32 60-bit floating point registers

SRAM
• Same process as logic so often combined on one die
• Smaller bit cell than register file – more dense but slower
• Uses sense amp to detect small bit cell output
• Fastest for reads and writes after register file
• Large per bit area costs
– six transistors (single port), eight transistors (dual port)
• L1 and L2 Cache on CPU is always SRAM
• On-chip Buffers – (Ethernet buffer, LCD buffer)
• Typical sizes 16k by 32

Static Memory -

AN-371, Interfacing RC32438 with DDR SDRAM Memory

Interfacing the RC32438 with DDR SDRAM Memory. Application Note AN-371. By Kasi Chopperla and Harold Gomard.

Revision History. July 3, 2003: Initial publication. October 23, 2003: Added DDR Loading section. October 29, 2003: Added Passive DDR Termination Scheme for Lightly Loaded Systems section.

Introduction. The RC32438 is a high performance, general-purpose, integrated processor that incorporates a 32-bit CPU core with a number of peripherals including: – A memory controller supporting DDR SDRAM – A separate memory/peripheral controller interfacing to EPROM, flash and I/O peripherals – Two Ethernet MACs – 32-bit v.2.2 compatible PCI interface – Other generic modules, such as two serial ports, I2C, SPI, interrupt controller, GPIO, and DMA.

Internally, the RC32438 features a dual bus system. The CPU bus interfaces directly to the memory controller over a 32-bit bus running at CPU pipeline speed. Since it is not possible to run an external bus at the speed of the CPU, the only way to provide data fast enough to keep the CPU from starving is to use either a wider DRAM or a double data rate DRAM. As the memory technology used on PC motherboards transitions from SDRAM to DDR, the steep ramp-up in the volume of DDR memory being shipped is driving pricing to the point where DDR-based memory subsystems are primed to drop below those of traditional SDRAM modules. Anticipating this, IDT opted to incorporate a double data rate DRAM interface on its RC32438 integrated processor. DDR memory requires a VDD/2 reference voltage and combination series and parallel terminations that SDRAM does not have. -

Design and Implementation of High Speed DDR SDRAM Controller on FPGA

International Journal of Engineering Research & Technology (IJERT), ISSN: 2278-0181, Vol. 4 Issue 07, July-2015. Design and Implementation of High Speed DDR SDRAM Controller on FPGA. Veena H K (M.Tech Student, Department of ECE, Sambhram Institute of Technology, Bangalore, Karnataka, India) and Dr. A H Masthan Ali (Associate Professor, Department of ECE, Sambhram Institute of Technology, Bangalore, Karnataka, India).

Abstract: A dedicated memory controller is important in high-end applications that do not contain a microprocessor. The memory controller provides the command signals for memory refresh, read and write operations, and SDRAM initialisation. Our work focuses on the FPGA implementation of a Double Data Rate (DDR) SDRAM controller. The DDR SDRAM controller is located between the DDR SDRAM and the bus master. Its role is to simplify the SDRAM command interface to the standard system read/write interface and to optimize the access time of the read/write cycle. The proposed design will offer effective power utilization, reduce the gate

[Second column of the page] and column. To point to a location in the memory these three are mandatory. The ACTIVE command, along with the registered address bits, points to the specific bank and row to be accessed. The column is given by the READ/WRITE command, along with the registered address bits, which point to a location for burst access. The DDR SDRAM interface makes higher transfer rates possible compared to single data rate (SDR) SDRAM through tighter control of the timing of the data and clock signals. Implementations frequently have to use schemes such as phase-locked loops (PLL) and self-calibration to reach the required timing precision [1][2]. -
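The bank, row, and column addressing described in this excerpt can be sketched as a small command generator. This is only an illustration under stated assumptions: the field widths (2 bank bits, 13 row bits, 10 column bits), the row:bank:column bit ordering, and the function name are hypothetical and do not come from the paper, and the sketch ignores precharge, refresh, and timing.

```python
def access_commands(addr: int, write: bool = False,
                    bank_bits: int = 2, row_bits: int = 13,
                    col_bits: int = 10) -> list[tuple[str, int, int]]:
    """Return the two-command sequence for one burst access.

    Assumed layout (hypothetical): [ row | bank | column ], column in
    the least significant bits.
    """
    col = addr & ((1 << col_bits) - 1)
    bank = (addr >> col_bits) & ((1 << bank_bits) - 1)
    row = (addr >> (col_bits + bank_bits)) & ((1 << row_bits) - 1)
    return [
        ("ACTIVE", bank, row),                       # opens the bank and row
        ("WRITE" if write else "READ", bank, col),   # selects the burst start column
    ]


# Row 5, bank 2, column 7 packed into one flat address.
addr = (5 << 12) | (2 << 10) | 7
print(access_commands(addr))
```

Keeping the column in the low bits means consecutive addresses stay within one open row, which is one common reason for choosing this kind of layout.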

Bioshock Infinite

SOUTH AFRICA’S LEADING GAMING, COMPUTER & TECHNOLOGY MAGAZINE, VOL 15 ISSUE 10. PC / PLAYSTATION / XBOX / NINTENDO + MORE. Reviews: Call of Duty: Black Ops II, ZombiU, Hitman: Absolution. The best and worst of 2012: We give awards to things – not in a traditional way… BioShock Infinite: Look! Up in the sky!

Editor: Michael “RedTide” James. Assistant editor: Geoff “GeometriX” Burrows. Staff writer: Dane “Barkskin” Remendes. Contributing editor: Lauren “Guardi3n” Das Neves. Technical writer: Neo “ShockG” Sibeko. International correspondent: Miktar “Miktar” Dracon. Contributors: Rodain “Nandrew” Joubert, Walt “Shryke” Pretorius, Miklós “Mikit0707” Szecsei, Pippa -

Contents. Regulars: Ed’s Note; Inbox; Bytes; home_coded; Everything else. Opinion: I, Gamer; The Game Stalker; The Indie Investigator; Miktar’s Meanderings; Hardwired; Game Over.

Features. THE BEST AND WORST OF 2012: We like to think we’re totally non-conformist, maaaaan. Screw the corporations. Maaaaan, etc. So when we do a “Best of [Year X]” list, we like to do it our way. Here are the best, the worst, the weirdest and, most importantly, the most memorable of all our gaming experiences in 2012. Here’s to 2013 being an equally memorable year in gaming! BIOSHOCK INFINITE: How do you take one of the most influential, most evocative experiences of this generation and make it even more so? You take to the skies, of course. Miktar’s played a few hours of Irrational’s BioShock Infinite, and it’s left him breathless – but filled with beautiful, descriptive words. Go read them.

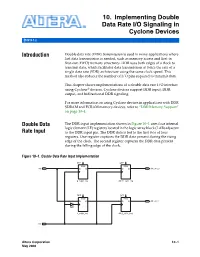

10. Implementing Double Data Rate I/O Signaling in Cyclone Devices

10. Implementing Double Data Rate I/O Signaling in Cyclone Devices. C51010-1.2. (Altera Corporation, Cyclone Device Handbook, Volume 1, May 2008, Preliminary.)

Introduction. Double data rate (DDR) transmission is used in many applications where fast data transmission is needed, such as memory access and first-in first-out (FIFO) memory structures. DDR uses both edges of a clock to transmit data, which facilitates data transmission at twice the rate of a single data rate (SDR) architecture using the same clock speed. This method also reduces the number of I/O pins required to transmit data. This chapter shows implementations of a double data rate I/O interface using Cyclone® devices. Cyclone devices support DDR input, DDR output, and bidirectional DDR signaling. For more information on using Cyclone devices in applications with DDR SDRAM and FCRAM memory devices, refer to “DDR Memory Support” on page 10–4.

Double Data Rate Input. The DDR input implementation shown in Figure 10–1 uses four internal logic element (LE) registers located in the logic array block (LAB) adjacent to the DDR input pin. The DDR data is fed to the first two of four registers. One register captures the DDR data present during the rising edge of the clock. The second register captures the DDR data present during the falling edge of the clock.

Figure 10–1. Double Data Rate Input Implementation [Figure: input ddr and clock clk; registers p_edge_reg, n_edge_reg (clocked through NOT), ddr_h_sync_reg, ddr_l_sync_reg; outputs ddr_out_h, ddr_out_l]

The third and fourth registers synchronize the two data streams to the rising edge of the clock. -
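The capture scheme in this excerpt can be modeled behaviorally in a few lines. This is a simplified sketch, not the handbook's implementation: it assumes the pin is sampled once per clock edge starting with a rising edge, ignores register latency and setup/hold timing, and the function name is hypothetical. The returned pairs stand in for the ddr_out_h and ddr_out_l streams after the synchronizing registers.

```python
def ddr_capture(edge_samples: list[int]) -> list[tuple[int, int]]:
    """Split a DDR sample stream into per-clock (high, low) pairs.

    edge_samples holds the pin value at alternating clock edges:
    index 0 is a rising edge, index 1 the following falling edge, etc.
    """
    rising = edge_samples[0::2]    # values the rising-edge register would capture
    falling = edge_samples[1::2]   # values the falling-edge register would capture
    # Pairing the streams models re-synchronizing both to the rising edge:
    # one (high, low) pair becomes available per full clock cycle.
    return list(zip(rising, falling))


# Six edge samples arrive in three clock cycles.
print(ddr_capture([1, 0, 1, 1, 0, 0]))  # → [(1, 0), (1, 1), (0, 0)]
```

Six samples arriving in three clock cycles shows the doubling: two data values per clock instead of one.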

Memory Devices

Memory Devices. CEN433, King Saud University. Dr. Mohammed Amer Arafah

Types of Memory Devices. Two main types of memory:
ROM (Read Only Memory): non-volatile data storage (remains valid after power off). For permanent storage of system software and data. Can be PROM, EPROM or EEPROM (Flash) memory.
RAM (Random Access Memory): volatile data storage (data disappears after power off). For temporary storage of application software and data. Can be SRAM (static) or DRAM (dynamic).

[Figure: memory device with address connections A0–AN, output or input/output connections O0–OM, and control pins CE/, OE/, WE/]

Memory Pin Connections. Address Inputs: Select the required location in memory. Address lines are numbered from A0 to as many as required to address all memory locations. Example: 12-bit address: A0-A11 → 2^12 = 4K memory locations. Today’s memory devices range in capacities up to 1G locations (30 address lines). Example: 4K memory: 12 bits: 000H-FFFH, e.g. from 40000H to 40FFFH. Decode this part for CS.

Memory Pin Connections. Data Inputs/Outputs (RAM), Data Outputs (ROM): Number of lines = width of data storage, usually a byte, D0-D7 (M=7). Wider processor data buses use multiples of such byte-wide memory devices, e.g. 64-bit → 8 x 8-bit devices. Sometimes the total memory capacity is expressed in bits, e.g. a 64K x 8-bit = 512 Kbit.

Memory Pin Connections. Control Inputs: Chip Enable (CE/), or Chip Select (CS/), or simply Select (S/): Select the memory device for READ or WRITE operations. -
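The arithmetic in these slides (address lines to locations, capacity in bits, and decoding the upper address bits for chip select) can be checked with a short sketch. The helper names are hypothetical; the 40000H base and the 12 address lines are taken from the slide's own example, and the decode assumes that the bits above A11 must match the base exactly.

```python
def locations(address_lines: int) -> int:
    """Number of addressable locations for a given count of address lines."""
    return 1 << address_lines


def capacity_bits(num_locations: int, data_width: int) -> int:
    """Total capacity in bits of a num_locations x data_width device."""
    return num_locations * data_width


def chip_selected(addr: int, base: int = 0x40000, address_lines: int = 12) -> bool:
    """True when the bits above the device's own address lines match the base."""
    return (addr >> address_lines) == (base >> address_lines)


print(locations(12))                          # 4096 locations, i.e. 4K
print(capacity_bits(64 * 1024, 8) // 1024)    # 512, i.e. 64K x 8-bit = 512 Kbit
print(chip_selected(0x40FFF), chip_selected(0x41000))  # True False
```

The low 12 bits go straight to the device's A0-A11 pins, so only the upper bits take part in the chip-select decode.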

Introduction to Advanced Semiconductor Memories

CHAPTER 1. INTRODUCTION TO ADVANCED SEMICONDUCTOR MEMORIES

1.1. SEMICONDUCTOR MEMORIES OVERVIEW

The goal of Advanced Semiconductor Memories is to complement the material already covered in Semiconductor Memories. The earlier book covered the following topics: random access memory technologies (SRAMs and DRAMs) and their application to specific architectures; nonvolatile technologies such as the read-only memories (ROMs), programmable read-only memories (PROMs), and erasable PROMs in both ultraviolet erasable (UVPROM) and electrically erasable (EEPROM) versions; memory fault modeling and testing; memory design for testability and fault tolerance; semiconductor memory reliability; semiconductor memories radiation effects; advanced memory technologies; and high-density memory packaging technologies [1]. This section provides a general overview of the semiconductor memories topics that are covered in Semiconductor Memories.

In the last three decades of semiconductor memories' phenomenal growth, the DRAMs have been the largest volume volatile memory produced for use as main computer memories because of their high density and low cost per bit advantage. SRAM densities have generally lagged a generation behind the DRAM. However, the SRAMs offer low-power consumption and high-performance features, which makes them practical alternatives to the DRAMs. Nowadays, a vast majority of SRAMs are being fabricated in the NMOS and CMOS technologies (and a combination of two technologies, also referred to as the mixed-MOS) for commodity SRAMs.

[Figure: MOS Memory Market ($M), Non-Memory IC Market ($M), and Memory % of Total IC Market, by year]

| | 96 | 97 | 98 | 99 | 00 | 01* | 02* | 03* | 04* | 05* |
|---|---|---|---|---|---|---|---|---|---|---|
| MOS Memory Market ($M) | 36,019 | 29,335 | 22,994 | 32,288 | 49,112 | 51,646 | 56,541 | 70,958 | 94,541 | 132,007 |
| Non-Memory IC Market ($M) | 78,923 | 90,198 | 86,078 | 97,930 | 126,551 | 135,969 | 148,512 | 172,396 | 207,430 | 262,172 |

Memory % of Total IC Market 31% . -

DDR Industrial SO-DIMM

DDR Industrial SO-DIMM

DDR Industrial SO-DIMM is a high-speed, low-power memory module that uses DDR SDRAM and a 2048-bit serial EEPROM on a 200-pin printed circuit board. DDR Industrial SO-DIMM is a Dual In-Line Memory Module and is intended for mounting into 200-pin edge connector sockets. Synchronous design allows precise cycle control with the use of the system clock. Data I/O transactions are possible on both edges of DQS. The range of operating frequencies and programmable latencies allows the same device to be useful for a variety of high bandwidth, high performance memory system applications.

Features
- RoHS compliant products.
- 400MHz
- Power supply: VDD: 2.6V ± 0.1V, VDDQ: 2.6V ± 0.1V
- Clock Freq: 200MHz for 400Mb/s/Pin.
- MRS cycle with address key programs: CAS Latency: 3; Burst Length: 2, 4, 8; Data Sequence (Sequential & Interleave)
- Burst Mode Operation
- Auto and Self Refresh.
- Data I/O transaction on both edges of data strobe.
- Edge aligned data output, center aligned data input.
- SSTL-2 compatible inputs and outputs.
- Serial presence detect with EEPROM

Pin Identification

| Symbol | Function |
|---|---|
| A0~A12, BA0~BA1 | Address/Bank input |
| DQ0~DQ63 | Bi-direction data bus |
| DQS0~DQS7 | Data strobes |
| CK0, /CK0, CK1, /CK1, CK2, /CK2 | Clock Input (Differential pair) |
| CKE0, CKE1 | Clock Enable Input |
| /CS0, /CS1 | DIMM rank select lines |
| /RAS | Row address strobe |
| /CAS | Column address strobe |
| /WE | Write Enable |
| DM0~DM7 | Data masks/high data strobes |
| VDD | Power supply |
| VREF | Power Supply for Reference |
| VDDSPD | SPD EEPROM power supply |
| SA0~SA2 | Address select for EEPROM |
| SCL | Clock for EEPROM |
| SDA | Data for EEPROM |
| VSS | Ground |
| NC | No Connection |

Dimensions

| Placement Side | Millimeters | Inches |
|---|---|---|
| A | 67.60 | 2.661 |
| B | 47.40 | 1.866 |
| C | 11.40 | 0.449 |
| D | 4.20 | 0.165 |
| E | 2.15 | 0.085 |
| F | 1.80 | 0.071 |
| G | 4.00 | 0.157 |
| H | 6.00 | 0.236 |
| I | 20.00 | 0.787 |
| J | 31.75 | 1.250 |
| K | 1.00 ± 0.10 | 0.039 ± 0.004 |

Note: 1. -
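The figures quoted for this module (a 200 MHz clock giving 400 Mb/s per pin, with data pins DQ0~DQ63) imply a peak transfer rate that can be worked out as follows. This is a rough sketch: it counts only the 64 data pins, assumes two transfers per clock as stated for DDR, and gives a theoretical peak rather than sustained throughput. The function name is hypothetical.

```python
def peak_bandwidth_bytes_per_s(clock_hz: int, bus_width_bits: int,
                               transfers_per_clock: int = 2) -> int:
    """Theoretical peak bandwidth of a data bus in bytes per second."""
    bits_per_second = clock_hz * transfers_per_clock * bus_width_bits
    return bits_per_second // 8


per_pin_bits = 200_000_000 * 2                          # 400 Mb/s on each data pin
module_bytes = peak_bandwidth_bytes_per_s(200_000_000, 64)
print(per_pin_bits)    # 400000000
print(module_bytes)    # 3200000000, i.e. 3.2 GB/s peak across DQ0~DQ63
```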

Dynamic Random Access Memory Topics

Dynamic Random Access Memory

Topics: Simple DRAM • Fast Page Mode (FPM) DRAM • Extended Data Out (EDO) DRAM • Burst EDO (BEDO) DRAM • Synchronous DRAM (SDRAM) • Rambus DRAM (RDRAM) • Double Data Rate (DDR) SDRAM

Simple DRAM [Figure: one capacitor and transistor; leaks a small amount of power, so the capacitor slowly discharges; simplicity; requires refresh; used in desktops; volatile]

General DRAM Formats
• DRAM is produced as integrated circuits (ICs) bonded and mounted into plastic packages with metal pins for connection to control signals and buses
• In early use, individual DRAM ICs were usually installed either directly on the motherboard or on ISA expansion cards
• Later they were assembled into multi-chip plug-in modules (DIMMs, SIMMs, etc.)

General DRAM Formats
• Some standard module types:
• DRAM chips (Integrated Circuit or IC): Dual In-line Package (DIP)
• DRAM (memory) modules: Single In-line Pin Package (SIPP), Single In-line Memory Module (SIMM), Dual In-line Memory Module (DIMM), Rambus In-line Memory Module (RIMM), Small Outline DIMM (SO-DIMM)

Dual In-line Package (DIP) • An electronic component package with a rectangular housing and two parallel rows of electrical connecting pins • 14 pins

Single In-line Pin Package (SIPP) • It consisted of a small printed circuit board upon which were mounted a number of memory chips. • It had 30 pins along one edge which mated with matching holes in the motherboard of the computer.

Single In-line Memory Module (SIMM): A SIMM can be a 30-pin or a 72-pin memory module.

Dual In-line Memory Module (DIMM): Two types of DIMMs: a 168-pin SDRAM module and a 184-pin DDR SDRAM module.