Uses and Abuses of Logarithmic Axes

Recommended publications

Black Hills State University

Black Hills State University MATH 341 Concepts addressed: Mathematics Number sense and numeration: meaning and use of numbers; the standard algorithms of the four basic operations; appropriate computation strategies and reasonableness of results; methods of mathematical investigation; number patterns; place value; equivalence; factors and multiples; ratio, proportion, percent; representations; calculator strategies; and number lines. Students will be able to demonstrate proficiency in utilizing the traditional algorithms for the four basic operations and to analyze non-traditional student-generated algorithms to perform these calculations. Students will be able to use non-decimal bases and a variety of numeration systems, such as Mayan, Roman, etc., to perform routine calculations. Students will be able to identify prime and composite numbers, compute the prime factorization of a composite number, determine whether a given number is prime or composite, and classify the counting numbers from 1 to 200 into prime or composite using the Sieve of Eratosthenes. A prime number is a number with exactly two factors. For example, 13 is a prime number because its only factors are 1 and itself. A composite number has more than two factors. For example, the number 6 is composite because its factors are 1, 2, 3, and 6. To determine if a larger number is prime, try all prime numbers less than or equal to the square root of the number. If any of those primes divides the original number, then the number is composite; otherwise, the number is prime. The number 1 is neither prime nor composite. It is not prime because it does not have two factors. It is not composite because, otherwise, it would nullify the Fundamental Theorem of Arithmetic, which states that every composite number has a unique prime factorization. -
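The trial-division test and the Sieve of Eratosthenes described in this excerpt can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `is_prime` and `sieve` are hypothetical, and for simplicity the trial division tries every integer up to the square root rather than only the primes, which gives the same answer.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division by every candidate up to and including floor(sqrt(n))."""
    if n < 2:
        return False  # 0 and 1 are neither prime nor composite
    for d in range(2, math.isqrt(n) + 1):
        if n % d == 0:
            return False  # a divisor was found, so n is composite
    return True


def sieve(limit: int) -> list[int]:
    """Sieve of Eratosthenes: return all primes less than or equal to limit."""
    flags = [True] * (limit + 1)
    flags[0:2] = [False, False]  # 0 and 1 are not prime
    for p in range(2, math.isqrt(limit) + 1):
        if flags[p]:
            # Cross out every multiple of p, starting at p * p.
            for multiple in range(p * p, limit + 1, p):
                flags[multiple] = False
    return [i for i, flag in enumerate(flags) if flag]


if __name__ == "__main__":
    print(is_prime(13), is_prime(6), is_prime(1))
    print(sieve(200))
```

Including the square root itself in the range matters for perfect squares such as 49, whose only prime divisor is exactly its square root.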

Saxon Course 1 Reteachings Lessons 21-30

Name Reteaching 21 Math Course 1, Lesson 21 • Divisibility Last-Digit Tests: Inspect the last digit of the number. A number is divisible by: 2 if the last digit is even; 5 if the last digit is 0 or 5; 10 if the last digit is 0. Sum-of-Digits Tests: Add the digits of the number and inspect the total. A number is divisible by: 3 if the sum of the digits is divisible by 3; 9 if the sum of the digits is divisible by 9. Practice: 1. Which of these numbers is divisible by 2? A. 2612 B. 1541 C. 4263 2. Which of these numbers is divisible by 5? A. 1399 B. 1395 C. 1392 3. Which of these numbers is divisible by 3? A. 3456 B. 5678 C. 9124 4. Which of these numbers is divisible by 9? A. 6754 B. 8124 C. 7938 Saxon Math Course 1 © Harcourt Achieve Inc. and Stephen Hake. All rights reserved. Name Reteaching 22 Math Course 1, Lesson 22 • "Equal Groups" Word Problems with Fractions Example: What number is 3/4 of 12? 1. Divide the total by the denominator (bottom number). 12 ÷ 4 = 3, so 1/4 of 12 is 3. 2. Multiply your answer by the numerator (top number). 3 × 3 = 9, so 3/4 of 12 is 9. Practice: 1. If 1/3 of the 18 eggs were cracked, how many were not cracked? 2. What number is 2/3 of 15? 3. What number is 3/8 of 72? 4. How much is 5/6 of two dozen? 5. -
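The last-digit and sum-of-digits tests, and the two-step "divide by the denominator, multiply by the numerator" rule, can be sketched as follows. The function names are hypothetical and chosen only for this illustration; the code applies the digit rules themselves rather than dividing directly.

```python
def last_digit(n: int) -> int:
    """Return the final decimal digit of n."""
    return abs(n) % 10


def digit_sum(n: int) -> int:
    """Return the sum of the decimal digits of n."""
    return sum(int(ch) for ch in str(abs(n)))


def divisible_by(n: int, d: int) -> bool:
    """Apply the last-digit test (2, 5, 10) or the sum-of-digits test (3, 9)."""
    if d == 2:
        return last_digit(n) % 2 == 0
    if d == 5:
        return last_digit(n) in (0, 5)
    if d == 10:
        return last_digit(n) == 0
    if d in (3, 9):
        return digit_sum(n) % d == 0
    raise ValueError("only the tests for 2, 3, 5, 9 and 10 are sketched here")


def fraction_of(numerator: int, denominator: int, total: int) -> int:
    """Divide the total by the denominator, then multiply by the numerator."""
    one_part = total // denominator  # assumes the total divides evenly
    return one_part * numerator


if __name__ == "__main__":
    print(divisible_by(2612, 2), divisible_by(1395, 5))
    print(fraction_of(3, 4, 12))
```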

RATIO and PERCENT Grade Level: Fifth Grade Written By: Susan Pope, Bean Elementary, Lubbock, TX Length of Unit: Two/Three Weeks

RATIO AND PERCENT Grade Level: Fifth Grade Written by: Susan Pope, Bean Elementary, Lubbock, TX Length of Unit: Two/Three Weeks I. ABSTRACT A. This unit introduces the relationships in ratios and percentages as found in the Fifth Grade section of the Core Knowledge Sequence. This study will include the relationship between percentages, fractions, and decimals. Finally, this study will include finding averages and compiling data into various graphs. II. OVERVIEW A. Concept Objectives for this unit: 1. Students will understand and apply basic and advanced properties of the concept of ratios and percents. 2. Students will understand the general nature and uses of mathematics. B. Content from the Core Knowledge Sequence: 1. Ratio and Percent a. Ratio (p. 123) • determine and express simple ratios, • use ratio to create a simple scale drawing. • Ratio and rate: solve problems on speed as a ratio, using formula S = D/T (or D = R x T). b. Percent (p. 123) • recognize the percent sign (%) and understand percent as "per hundred" • express equivalences between fractions, decimals, and percents, and know common equivalences: 1/10 = 10%, ¼ = 25%, ½ = 50%, ¾ = 75% • find the given percent of a number. C. Skill Objectives 1. Mathematics a. Compare two fractional quantities in problem-solving situations using a variety of methods, including common denominators b. Use models to relate decimals to fractions that name tenths, hundredths, and thousandths c. Use fractions to describe the results of an experiment d. Use experimental results to make predictions e. Use table of related number pairs to make predictions f. Graph a given set of data using an appropriate graphical representation such as a picture or line g. -

Frequency Response and Bode Plots

1 Frequency Response and Bode Plots 1.1 Preliminaries The steady-state sinusoidal frequency-response of a circuit is described by the phasor transfer function $H(j\omega)$. A Bode plot is a graph of the magnitude (in dB) or phase of the transfer function versus frequency. Of course we can easily program the transfer function into a computer to make such plots, and for very complicated transfer functions this may be our only recourse. But in many cases the key features of the plot can be quickly sketched by hand using some simple rules that identify the impact of the poles and zeroes in shaping the frequency response. The advantage of this approach is the insight it provides on how the circuit elements influence the frequency response. This is especially important in the design of frequency-selective circuits. We will first consider how to generate Bode plots for simple poles, and then discuss how to handle the general second-order response. Before doing this, however, it may be helpful to review some properties of transfer functions, the decibel scale, and properties of the log function. Poles, Zeroes, and Stability The s-domain transfer function is always a rational polynomial function of the form
$$H(s) = K\,\frac{N(s)}{D(s)} = K\,\frac{s^{m} + a_{m-1}s^{m-1} + a_{m-2}s^{m-2} + \cdots + a_{1}s + a_{0}}{s^{n} + b_{n-1}s^{n-1} + b_{n-2}s^{n-2} + \cdots + b_{1}s + b_{0}} \qquad (1.1)$$
As we have seen already, the polynomials in the numerator and denominator are factored to find the poles and zeroes; these are the values of s that make the numerator or denominator zero. If we write the zeroes as $z_1, z_2, z_3$, etc., and similarly write the poles as $p_1, p_2, p_3$, then $H(s)$ can be written in factored form as
$$H(s) = K\,\frac{(s - z_1)(s - z_2)\cdots(s - z_m)}{(s - p_1)(s - p_2)\cdots(s - p_n)} \qquad (1.2)$$
The pole and zero locations can be real or complex. © Bob York 2009 -
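As the excerpt notes, the transfer function can simply be programmed into a computer. A minimal sketch, assuming the gain-times-zeroes-over-poles factored form of equation (1.2), evaluates $H(s)$ at $s = j\omega$ and converts the magnitude to decibels. The function names and the single-pole example values are hypothetical, chosen only for illustration.

```python
import cmath
import math


def transfer_function(s: complex, gain: float, zeros: list, poles: list) -> complex:
    """Evaluate H(s) = K * prod(s - z_i) / prod(s - p_k) in factored form."""
    h = complex(gain)
    for z in zeros:
        h *= (s - z)
    for p in poles:
        h /= (s - p)
    return h


def magnitude_db(omega: float, gain: float, zeros: list, poles: list) -> float:
    """Bode magnitude 20*log10|H(j*omega)|, in decibels."""
    return 20.0 * math.log10(abs(transfer_function(1j * omega, gain, zeros, poles)))


def phase_deg(omega: float, gain: float, zeros: list, poles: list) -> float:
    """Bode phase of H(j*omega), in degrees."""
    return math.degrees(cmath.phase(transfer_function(1j * omega, gain, zeros, poles)))


if __name__ == "__main__":
    # Assumed example: one real pole at s = -1000 rad/s with unity DC gain,
    # i.e. H(s) = 1000 / (s + 1000).
    for omega in (10.0, 100.0, 1000.0, 10000.0, 100000.0):
        mag = magnitude_db(omega, 1000.0, [], [-1000.0])
        ph = phase_deg(omega, 1000.0, [], [-1000.0])
        print(f"{omega:>9.0f} rad/s  {mag:8.2f} dB  {ph:8.2f} deg")
```

Sampling the frequencies a decade apart, as in the loop, matches the logarithmic frequency axis on which Bode plots are usually drawn.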

Computational Entropy and Information Leakage∗

Computational Entropy and Information Leakage∗ Benjamin Fuller Leonid Reyzin Boston University February 10, 2011 Abstract We investigate how information leakage reduces computational entropy of a random variable X. Recall that HILL and metric computational entropy are parameterized by quality (how distinguishable is X from a variable Z that has true entropy) and quantity (how much true entropy is there in Z). We prove an intuitively natural result: conditioning on an event of probability p reduces the quality of metric entropy by a factor of p and the quantity of metric entropy by $\log_2(1/p)$ (note that this means that the reduction in quantity and quality is the same, because the quantity of entropy is measured on logarithmic scale). Our result improves previous bounds of Dziembowski and Pietrzak (FOCS 2008), where the loss in the quantity of entropy was related to its original quality. The use of metric entropy simplifies the analogous result of Reingold et al. (FOCS 2008) for HILL entropy. Further, we simplify dealing with information leakage by investigating conditional metric entropy. We show that, conditioned on leakage of λ bits, metric entropy gets reduced by a factor $2^\lambda$ in quality and λ in quantity. ∗Most of the results of this paper have been incorporated into [FOR12a] (conference version in [FOR12b]), where they are applied to the problem of building deterministic encryption. This paper contains a more focused exposition of the results on computational entropy, including some results that do not appear in [FOR12a]: namely, Theorem 3.6, Theorem 3.10, proof of Theorem 3.2, and results in Appendix A. -

A Weakly Informative Default Prior Distribution for Logistic and Other

The Annals of Applied Statistics 2008, Vol. 2, No. 4, 1360–1383 DOI: 10.1214/08-AOAS191 © Institute of Mathematical Statistics, 2008 A WEAKLY INFORMATIVE DEFAULT PRIOR DISTRIBUTION FOR LOGISTIC AND OTHER REGRESSION MODELS By Andrew Gelman, Aleks Jakulin, Maria Grazia Pittau and Yu-Sung Su Columbia University, Columbia University, University of Rome, and City University of New York We propose a new prior distribution for classical (nonhierarchical) logistic regression models, constructed by first scaling all nonbinary variables to have mean 0 and standard deviation 0.5, and then placing independent Student-t prior distributions on the coefficients. As a default choice, we recommend the Cauchy distribution with center 0 and scale 2.5, which in the simplest setting is a longer-tailed version of the distribution attained by assuming one-half additional success and one-half additional failure in a logistic regression. Cross-validation on a corpus of datasets shows the Cauchy class of prior distributions to outperform existing implementations of Gaussian and Laplace priors. We recommend this prior distribution as a default choice for routine applied use. It has the advantage of always giving answers, even when there is complete separation in logistic regression (a common problem, even when the sample size is large and the number of predictors is small), and also automatically applying more shrinkage to higher-order interactions. This can be useful in routine data analysis as well as in automated procedures such as chained equations for missing-data imputation. We implement a procedure to fit generalized linear models in R with the Student-t prior distribution by incorporating an approximate EM algorithm into the usual iteratively weighted least squares. -

11. Data Transformations

Archives of Disease in Childhood 1993; 69: 260-264 STATISTICS FROM THE INSIDE Arch Dis Child: first published as 10.1136/adc.69.2.260 on 1 August 1993. 11. Data transformations M J R Healy Additive and multiplicative effects In the statistical analyses for comparing two groups of continuous observations which I have so far considered, certain assumptions have been made about the data being analysed. One of these is Normality of distribution; in both the paired and the unpaired situation, the mathematical theory underlying the significance probabilities attached to different values of t is based on the assumption that the observations are drawn from Normal distributions. In the unpaired situation, we make the further assumption that the distributions in the two groups which are being compared have equal standard deviations - this assumption allows us to simplify the analysis and to gain a certain [...] adding a constant quantity to the corresponding before readings. Exactly the same is true of the unpaired situation, as when we compare independent samples of treated and control patients. Here the assumption is that we can derive the distribution of patient readings from that of control readings by shifting the latter bodily along the axis, and this again amounts to adding a constant amount to each of the control variate values (fig 1). This is not the only way in which two groups of readings can be related in practice. Suppose I asked you to guess the size of the effect of some treatment for (say) increasing forced expiratory volume in one second in asthmatic children. -

Random Denominators and the Analysis of Ratio Data

Environmental and Ecological Statistics 11, 55-71, 2004 Corrected version of manuscript, prepared by the authors. Random denominators and the analysis of ratio data MARTIN LIERMANN [1]*, ASHLEY STEEL [1], MICHAEL ROSING [2] and PETER GUTTORP [3] [1] Watershed Program, NW Fisheries Science Center, 2725 Montlake Blvd. East, Seattle, WA 98112 [2] Greenland Institute of Natural Resources [3] National Research Center for Statistics and the Environment, Box 354323, University of Washington, Seattle, WA 98195 Ratio data, observations in which one random value is divided by another random value, present unique analytical challenges. The best statistical technique varies depending on the unit on which the inference is based. We present three environmental case studies where ratios are used to compare two groups, and we provide three parametric models from which to simulate ratio data. The models describe situations in which (1) the numerator variance and mean are proportional to the denominator, (2) the numerator mean is proportional to the denominator but its variance is proportional to a quadratic function of the denominator and (3) the numerator and denominator are independent. We compared standard approaches for drawing inference about differences between two distributions of ratios: t-tests, t-tests with transformations, permutation tests, the Wilcoxon rank test, and ANCOVA-based tests. Comparisons between tests were based both on achieving the specified alpha-level and on statistical power. The tests performed comparably with a few notable exceptions. We developed simple guidelines for choosing a test based on the unit of inference and relationship between the numerator and denominator. Keywords: ANCOVA, catch per-unit effort, fish density, indices, per-capita, per-unit, randomization tests, t-tests, waste composition 1352-8505 © 2004 Kluwer Academic Publishers 1. -

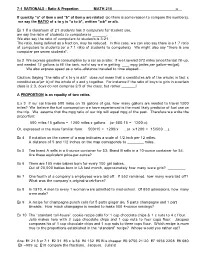

7-1 RATIONALS - Ratio & Proportion MATH 210 F8

7-1 RATIONALS - Ratio & Proportion MATH 210 F8 If quantity "a" of item x and "b" of item y are related (so there is some reason to compare the numbers), we say the RATIO of x to y is "a to b", written "a:b" or a/b. Ex 1 If a classroom of 21 students has 3 computers for student use, we say the ratio of students to computers is 21:3. We also say the ratio of computers to students is 3:21. The ratio, being defined as a fraction, may be reduced. In this case, we can also say there is a 1:7 ratio of computers to students (or a 7:1 ratio of students to computers). We might also say "there is one computer per seven students". Ex 2 We express gasoline consumption by a car as a ratio: if we traveled 372 miles since the last fill-up, and needed 12 gallons to fill the tank, we'd say we're getting 372/12 = 31 mpg (miles per gallon, mi/gal). We also express speed as a ratio: distance traveled to time elapsed... Caution: Saying "the ratio of x to y is a:b" does not mean that x constitutes a/b of the whole; in fact x constitutes a/(a+b) of the whole of x and y together. For instance, if the ratio of boys to girls in a certain class is 2:3, boys do not comprise 2/3 of the class, but rather 2/5! A PROPORTION is an equality of two ratios. Ex 3 If our car travels 500 miles on 15 gallons of gas, how many gallons are needed to travel 1200 miles? We believe the fuel consumption we have experienced is the most likely predictor of fuel use on the trip. -
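The arithmetic behind these examples can be checked with exact fractions. The sketch below is only illustrative: `solve_proportion` and `share_of_whole` are hypothetical helper names, and Example 3 is set up as the proportion 500 : 15 = 1200 : x, assuming fuel use stays proportional to distance.

```python
from fractions import Fraction


def solve_proportion(a, b, c) -> Fraction:
    """Return x such that a : b = c : x, i.e. x = b * c / a."""
    return Fraction(b) * Fraction(c) / Fraction(a)


def share_of_whole(a: int, b: int) -> Fraction:
    """If x : y = a : b, then x makes up a / (a + b) of x and y together."""
    return Fraction(a, a + b)


if __name__ == "__main__":
    print(Fraction(3, 21))                    # computers to students, reduced
    print(Fraction(372, 12))                  # miles per gallon in Ex 2
    print(share_of_whole(2, 3))               # boys' share of the class
    print(solve_proportion(500, 15, 1200))    # gallons needed in Ex 3
```

`Fraction` reduces ratios automatically, which mirrors the remark that a ratio, being defined as a fraction, may be reduced.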

Measurement & Transformations

Chapter 3 Measurement & Transformations 3.1 Measurement Scales: Traditional Classification Statisticians call an attribute on which observations differ a variable. The type of unit on which a variable is measured is called a scale. Traditionally, statisticians talk of four types of measurement scales: (1) nominal, (2) ordinal, (3) interval, and (4) ratio. 3.1.1 Nominal Scales The word nominal is derived from nomen, the Latin word for name. Nominal scales merely name differences and are used most often for qualitative variables in which observations are classified into discrete groups. The key attribute for a nominal scale is that there is no inherent quantitative difference among the categories. Sex, religion, and race are three classic nominal scales used in the behavioral sciences. Taxonomic categories (rodent, primate, canine) are nominal scales in biology. Variables on a nominal scale are often called categorical variables. For the neuroscientist, the best criterion for determining whether a variable is on a nominal scale is the "plotting" criterion. If you can plot a bar chart of, say, means for the groups and order the groups in any way possible without making the graph "stupid," then the variable is nominal or categorical. For example, were the variable strains of mice, then the order of the means is not material. Hence, "strain" is nominal or categorical. On the other hand, if the groups were 0 mgs, 10 mgs, and 15 mgs of active drug, then having a bar chart with 15 mgs first, then 0 mgs, and finally 10 mgs is stupid. Here "milligrams of drug" is not a nominal or categorical variable. 3.1.2 Ordinal Scales Ordinal scales rank-order observations. -

C-17Bpages Pdf It

SPECIFICATION DEFINITIONS FOR LOGARITHMIC AMPLIFIERS This application note is presented to engineers who may use logarithmic amplifiers in a variety of system applications. It is intended to help engineers understand logarithmic amplifiers, how to specify them, and how the logarithmic amplifiers perform in a system environment. A similar paper addressing the accuracy and error contributing elements in logarithmic amplifiers will follow this application note. INTRODUCTION The need to process high-density pulses with narrow pulse widths and large amplitude variations necessitates the use of logarithmic amplifiers in modern receiving systems. In general, the purpose of this class of amplifier is to condense a large input dynamic range into a much smaller, manageable one through a logarithmic transfer function. As a result of this transfer function, the output voltage swing of a logarithmic amplifier is proportional to the input signal power range in dB. In most cases, logarithmic amplifiers are used as amplitude detectors. Since output voltage (in mV) is proportional to the input signal power (in dB), the amplitude information is displayed in a much more usable format than accomplished by so-called linear detectors. [Figure: Logarithmic transfer function, input RF power vs. detected output voltage] There are three basic types of logarithmic amplifiers. These are: • Detector Log Video Amplifiers • Successive Detection Logarithmic Amplifiers • True Log Amplifiers DETECTOR LOG VIDEO AMPLIFIER (DLVA) is a type of logarithmic amplifier in which the envelope of the input RF signal is detected with a standard "linear" diode detector. -

Linear and Logarithmic Scales

Linear and logarithmic scales. Defining Scale You may have thought of a scale as something to weigh yourself with or the outer layer on the bodies of fish and reptiles. For this lesson, we're using a different definition of a scale. A scale, in this sense, is a leveled range of values/numbers from lowest to highest that measures something at regular intervals. A great example is the classic number line that has numbers lined up at consistent intervals along a line. What Is a Linear Scale? A linear scale is much like the number line described above. The key to this type of scale is that the difference in value between two consecutive points on the line does not change no matter how high or low you are on it. For instance, on the number line, the distance between the numbers 0 and 1 is 1 unit. The same distance of one unit is between the numbers 100 and 101, or -100 and -101. However you look at it, the distance between the points is constant (unchanging) regardless of the location on the line. A great way to visualize this is by looking at one of those old school intro to Geometry or mid-level Algebra examples of how to graph a line. One of the properties of a line is that it is the shortest distance between two points. Another is that it has a constant slope. Having a constant slope means that the ratio of the change in y to the change in x from one point to another point on the line doesn't change.
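The constant-spacing property of a linear scale can be contrasted with a logarithmic scale in a short sketch. The excerpt breaks off before it defines the logarithmic case, so the base-10 mapping below is an assumption based on the usual convention, and both function names are hypothetical.

```python
import math


def linear_position(value: float, lo: float, hi: float) -> float:
    """Fraction of the axis length at which value sits on a linear scale."""
    return (value - lo) / (hi - lo)


def log_position(value: float, lo: float, hi: float) -> float:
    """Fraction of the axis length at which value sits on a base-10 log scale."""
    if value <= 0 or lo <= 0 or hi <= 0:
        raise ValueError("a logarithmic scale cannot show zero or negative values")
    return (math.log10(value) - math.log10(lo)) / (math.log10(hi) - math.log10(lo))


if __name__ == "__main__":
    # Linear: the step from 0 to 1 is as long as the step from 100 to 101.
    print(linear_position(1, 0, 101) - linear_position(0, 0, 101))
    print(linear_position(101, 0, 101) - linear_position(100, 0, 101))
    # Logarithmic (assumed convention): equal ratios take equal lengths,
    # so 1 -> 10, 10 -> 100 and 100 -> 1000 are each one third of the axis.
    for value in (1, 10, 100, 1000):
        print(value, log_position(value, 1, 1000))
```

On the linear axis equal differences occupy equal lengths, while on the logarithmic axis equal ratios do, which is why the latter cannot place zero or negative values.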