Random Denominators and the Analysis of Ratio Data

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Black Hills State University

Black Hills State University MATH 341 Concepts addressed: Mathematics Number sense and numeration: meaning and use of numbers; the standard algorithms of the four basic operations; appropriate computation strategies and reasonableness of results; methods of mathematical investigation; number patterns; place value; equivalence; factors and multiples; ratio, proportion, percent; representations; calculator strategies; and number lines. Students will be able to demonstrate proficiency in utilizing the traditional algorithms for the four basic operations and to analyze non-traditional student-generated algorithms to perform these calculations. Students will be able to use non-decimal bases and a variety of numeration systems, such as Mayan, Roman, etc., to perform routine calculations. Students will be able to identify prime and composite numbers, compute the prime factorization of a composite number, determine whether a given number is prime or composite, and classify the counting numbers from 1 to 200 into prime or composite using the Sieve of Eratosthenes. A prime number is a number with exactly two factors. For example, 13 is a prime number because its only factors are 1 and itself. A composite number has more than two factors. For example, the number 6 is composite because its factors are 1, 2, 3, and 6. To determine if a larger number is prime, try all prime numbers less than or equal to the square root of the number. If any of those primes divides the original number, then the number is composite; otherwise, the number is prime. The number 1 is neither prime nor composite. It is not prime because it does not have two factors. It is not composite because, otherwise, it would nullify the Fundamental Theorem of Arithmetic, which states that every composite number has a unique prime factorization.
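The primality test and the Sieve of Eratosthenes mentioned in this excerpt can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `is_prime` and `sieve` are chosen here and do not come from the course material, and the trial division checks every integer up to the square root rather than only the primes, which gives the same answer with a little extra work.

```python
from math import isqrt


def is_prime(n: int) -> bool:
    """Trial division by every candidate up to the integer square root of n."""
    if n < 2:
        return False  # 0 and 1 are neither prime nor composite
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return False  # a divisor was found, so n is composite
    return True  # no divisor up to sqrt(n), so n is prime


def sieve(limit: int) -> list[int]:
    """Sieve of Eratosthenes: all primes from 2 to limit (assumes limit >= 2)."""
    flags = [True] * (limit + 1)
    flags[0] = flags[1] = False
    for p in range(2, isqrt(limit) + 1):
        if flags[p]:
            # Cross out every multiple of p, starting at p * p.
            for multiple in range(p * p, limit + 1, p):
                flags[multiple] = False
    return [i for i, flag in enumerate(flags) if flag]


primes_to_200 = sieve(200)
```

Every number from 2 to 200 that is missing from `primes_to_200` is composite, which gives the classification the excerpt describes.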

Saxon Course 1 Reteachings Lessons 21-30

Reteaching 21, Math Course 1, Lesson 21 • Divisibility

Last-Digit Tests: Inspect the last digit of the number. A number is divisible by:
2 if the last digit is even.
5 if the last digit is 0 or 5.
10 if the last digit is 0.

Sum-of-Digits Tests: Add the digits of the number and inspect the total. A number is divisible by:
3 if the sum of the digits is divisible by 3.
9 if the sum of the digits is divisible by 9.

Practice:
1. Which of these numbers is divisible by 2? A. 2612 B. 1541 C. 4263
2. Which of these numbers is divisible by 5? A. 1399 B. 1395 C. 1392
3. Which of these numbers is divisible by 3? A. 3456 B. 5678 C. 9124
4. Which of these numbers is divisible by 9? A. 6754 B. 8124 C. 7938

Saxon Math Course 1 © Harcourt Achieve Inc. and Stephen Hake. All rights reserved.

Reteaching 22, Math Course 1, Lesson 22 • “Equal Groups” Word Problems with Fractions

Example: What number is 3/4 of 12?
1. Divide the total by the denominator (bottom number). 12 ÷ 4 = 3, so 1/4 of 12 is 3.
2. Multiply your answer by the numerator (top number). 3 × 3 = 9. So, 3/4 of 12 is 9.

Practice:
1. If 1/3 of the 18 eggs were cracked, how many were not cracked?
2. What number is 2/3 of 15?
3. What number is 3/8 of 72?
4. How much is 5/6 of two dozen?
5.
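The last-digit and sum-of-digits tests, and the two-step "divide, then multiply" rule for a fraction of a total, can be written out as a short Python sketch. The helper names `digit_sum`, `passes_divisibility_test`, and `fraction_of` are hypothetical and not part of the worksheet.

```python
from fractions import Fraction


def digit_sum(n: int) -> int:
    """Add the decimal digits of n."""
    return sum(int(ch) for ch in str(abs(n)))


def passes_divisibility_test(n: int, d: int) -> bool:
    """Apply the worksheet's test for d in {2, 3, 5, 9, 10}."""
    last_digit = abs(n) % 10
    if d == 2:
        return last_digit % 2 == 0       # last digit is even
    if d == 5:
        return last_digit in (0, 5)      # last digit is 0 or 5
    if d == 10:
        return last_digit == 0           # last digit is 0
    if d in (3, 9):
        return digit_sum(n) % d == 0     # sum of digits divisible by d
    raise ValueError("no test is defined here for this divisor")


def fraction_of(numerator: int, denominator: int, total: int) -> Fraction:
    """Step 1: divide the total by the denominator. Step 2: multiply by the numerator."""
    one_part = Fraction(total, denominator)
    return one_part * numerator
```

For the worked example, `fraction_of(3, 4, 12)` follows the same two steps as the lesson: 12 ÷ 4 = 3, then 3 × 3 = 9.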

A Parametric Bayesian Approach in Density Ratio Estimation

Article A Parametric Bayesian Approach in Density Ratio Estimation Abdolnasser Sadeghkhani 1,* , Yingwei Peng 2 and Chunfang Devon Lin 3 1 Department of Mathematics & Statistics, Brock University, St. Catharines, ON L2S 3A1, Canada 2 Departments of Public Health Sciences, Queen’s University, Kingston, ON K7L 3N6, Canada; [email protected] 3 Department of Mathematics & Statistics, Queen’s University, Kingston, ON K7L 3N6, Canada; [email protected] * Correspondence: [email protected] Received: 7 March 2019; Accepted: 26 March 2019; Published: 30 March 2019 Abstract: This paper is concerned with estimating the ratio of two distributions with different parameters and common supports. We consider a Bayesian approach based on the log–Huber loss function, which is resistant to outliers and useful for finding robust M-estimators. We propose two different types of Bayesian density ratio estimators and compare their performance in terms of frequentist risk function. Some applications, such as classification and divergence function estimation, are addressed. Keywords: Bayes estimator; Bregman divergence; density ratio; exponential family; log–Huber loss 1. Introduction The problem of estimating the ratio of two densities appears in many areas of statistical and computer science. Density ratio estimation (DRE) is widely considered to be the most important factor in machine learning and information theory (Sugiyama et al. [1], and Kanamori et al. [2]). In a series of papers, Sugiyama et al. [3,4] developed DRE in different statistical data analysis problems. Some useful applications of DRE are as follows: non-stationary adaptation (Sugiyama and Müller [5] and Quiñonero-Candela et al. [6]), variable selection (Suzuki et al. -

RATIO and PERCENT Grade Level: Fifth Grade Written By: Susan Pope, Bean Elementary, Lubbock, TX Length of Unit: Two/Three Weeks

RATIO AND PERCENT
Grade Level: Fifth Grade
Written by: Susan Pope, Bean Elementary, Lubbock, TX
Length of Unit: Two/Three Weeks

I. ABSTRACT
A. This unit introduces the relationships in ratios and percentages as found in the Fifth Grade section of the Core Knowledge Sequence. This study will include the relationship between percentages to fractions and decimals. Finally, this study will include finding averages and compiling data into various graphs.

II. OVERVIEW
A. Concept Objectives for this unit:
1. Students will understand and apply basic and advanced properties of the concept of ratios and percents.
2. Students will understand the general nature and uses of mathematics.
B. Content from the Core Knowledge Sequence:
1. Ratio and Percent
a. Ratio (p. 123)
• determine and express simple ratios
• use ratio to create a simple scale drawing
• ratio and rate: solve problems on speed as a ratio, using formula S = D/T (or D = R x T)
b. Percent (p. 123)
• recognize the percent sign (%) and understand percent as “per hundred”
• express equivalences between fractions, decimals, and percents, and know common equivalences: 1/10 = 10%, ¼ = 25%, ½ = 50%, ¾ = 75%
• find the given percent of a number
C. Skill Objectives
1. Mathematics
a. Compare two fractional quantities in problem-solving situations using a variety of methods, including common denominators
b. Use models to relate decimals to fractions that name tenths, hundredths, and thousandths
c. Use fractions to describe the results of an experiment
d. Use experimental results to make predictions
e. Use table of related number pairs to make predictions
f. Graph a given set of data using an appropriate graphical representation such as a picture or line
g.

ESTIMATING POPULATION MEAN USING KNOWN MEDIAN of the STUDY VARIABLE Dharmendra Kumar Yadav 1, Ravendra Kumar *2, Prof Sheela Misra3 & S.K

ISSN: 2277-9655 [Kumar* et al., 6(7): July, 2017] Impact Factor: 4.116 IC™ Value: 3.00 CODEN: IJESS7 IJESRT INTERNATIONAL JOURNAL OF ENGINEERING SCIENCES & RESEARCH TECHNOLOGY ESTIMATING POPULATION MEAN USING KNOWN MEDIAN OF THE STUDY VARIABLE Dharmendra Kumar Yadav 1, Ravendra Kumar *2, Prof Sheela Misra3 & S.K. Yadav4 *1Department of Mathematics, Dr Ram Manohar Lohiya Government Degree College, Aonla, Bareilly, U.P., India 2&3Department of Statistics, University of Lucknow, Lucknow-226007, U.P., INDIA 4Department of Mathematics and Statistics (A Centre of Excellence), Dr RML Avadh University, Faizabad-224001, U.P., INDIA DOI: 10.5281/zenodo.822944 ABSTRACT The present paper is concerned with the estimation of the population mean of the study variable by utilizing the known median of the study variable. A generalized ratio type estimator has been proposed for this purpose. The expressions for the bias and mean squared error of the proposed estimator have been derived up to the first order of approximation. The optimum value of the characterizing scalar has also been obtained. The minimum value of the proposed estimator for this optimum value of the characterizing scalar is obtained. A theoretical efficiency comparison of the proposed estimator has been made with the mean per unit estimator, the usual ratio estimator of Cochran (1940), the usual regression estimator of Watson (1937), and the Bahl and Tuteja (1991), Kadilar (2016) and Subramani (2016) estimators. Through the numerical study, the theoretical findings are validated, and it has been found that the proposed estimator performs better than the existing estimators. KEYWORDS: Study variable, Bias, Ratio estimator, Mean squared error, Simple random sampling, Efficiency.

Ratio Estimators in Simple Random Sampling Using Information on Auxiliary Attribute

Ratio Estimators in Simple Random Sampling Using Information on Auxiliary Attribute Rajesh Singh Department of Statistics, Banaras Hindu University Varanasi (U.P.), India Email: [email protected] Pankaj Chauhan School of Statistics, DAVV, Indore (M.P.), India Nirmala Sawan School of Statistics, DAVV, Indore (M.P.), India Florentin Smarandache Department of Mathematics, University of New Mexico, Gallup, USA Email: [email protected] Abstract Some ratio estimators for estimating the population mean of the variable under study, which make use of information regarding the population proportion possessing certain attribute, are proposed. Under simple random sampling without replacement (SRSWOR) scheme, the expressions of bias and mean-squared error (MSE) up to the first order of approximation are derived. The results obtained have been illustrated numerically by taking some empirical population considered in the literature. Key words: Proportion, bias, MSE, ratio estimator. 1. Introduction The use of auxiliary information can increase the precision of an estimator when study variable y is highly correlated with auxiliary variable x. There exist situations when information is available in the form of attribute φ, which is highly correlated with y. For example a) Sex and height of the persons, b) Amount of milk produced and a particular breed of the cow, c) Amount of yield of wheat crop and a particular variety of wheat etc. (see Jhajj et al. [1]). Consider a sample of size n drawn by SRSWOR from a population of size N. Let y_i and φ_i denote the observations on variables y and φ, respectively, for the i-th unit (i = 1, 2, ..., N).

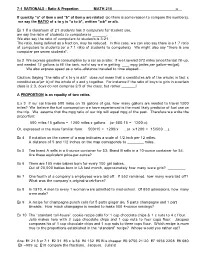

7-1 RATIONALS - Ratio & Proportion MATH 210 F8

7-1 RATIONALS - Ratio & Proportion MATH 210 F8 If quantity "a" of item x and "b" of item y are related (so there is some reason to compare the numbers), we say the RATIO of x to y is "a to b", written "a:b" or a/b. Ex 1 If a classroom of 21 students has 3 computers for student use, we say the ratio of students to computers is 21:3. We also say the ratio of computers to students is 3:21. The ratio, being defined as a fraction, may be reduced. In this case, we can also say there is a 1:7 ratio of computers to students (or a 7:1 ratio of students to computers). We might also say "there is one computer per seven students". Ex 2 We express gasoline consumption by a car as a ratio: if we traveled 372 miles since the last fill-up, and needed 12 gallons to fill the tank, we'd say we're getting 372/12 = 31 mpg (miles per gallon, mi/gal). We also express speed as a ratio: distance traveled to time elapsed. Caution: Saying "the ratio of x to y is a:b" does not mean that x constitutes a/b of the whole; in fact x constitutes a/(a+b) of the whole of x and y together. For instance, if the ratio of boys to girls in a certain class is 2:3, boys do not comprise 2/3 of the class, but rather 2/5! A PROPORTION is an equality of two ratios. Ex 3 If our car travels 500 miles on 15 gallons of gas, how many gallons are needed to travel 1200 miles? We believe the fuel consumption we have experienced is the most likely predictor of fuel use on the trip.
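The arithmetic in these examples can be checked with a brief Python sketch using exact fractions. The helper names `share_of_whole` and `solve_proportion` are hypothetical, and the treatment of Ex 3 assumes, as the excerpt suggests, that fuel use stays proportional to distance.

```python
from fractions import Fraction


def share_of_whole(a: int, b: int) -> Fraction:
    """If x and y are in the ratio a:b, x makes up a/(a+b) of the whole."""
    return Fraction(a, a + b)


def solve_proportion(a: int, b: int, c: int) -> Fraction:
    """Solve a/b = c/x for x by cross-multiplying: a * x = b * c."""
    return Fraction(b * c, a)


# Ex 1: 21 students to 3 computers reduces to 7 to 1.
students_per_computer = Fraction(21, 3)

# Ex 2: 372 miles on 12 gallons, in miles per gallon.
miles_per_gallon = Fraction(372, 12)

# Caution: boys to girls is 2:3, so boys are 2/(2+3) of the class.
boys_share = share_of_whole(2, 3)

# Ex 3: 500 miles / 15 gallons = 1200 miles / x gallons.
gallons_needed = solve_proportion(500, 15, 1200)
```

Using `Fraction` keeps every ratio in reduced form, so the 21:3 ratio is stored directly as 7/1 without any rounding.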

Measurement & Transformations

Chapter 3 Measurement & Transformations

3.1 Measurement Scales: Traditional Classification

Statisticians call an attribute on which observations differ a variable. The type of unit on which a variable is measured is called a scale. Traditionally, statisticians talk of four types of measurement scales: (1) nominal, (2) ordinal, (3) interval, and (4) ratio.

3.1.1 Nominal Scales

The word nominal is derived from nomen, the Latin word for name. Nominal scales merely name differences and are used most often for qualitative variables in which observations are classified into discrete groups. The key attribute for a nominal scale is that there is no inherent quantitative difference among the categories. Sex, religion, and race are three classic nominal scales used in the behavioral sciences. Taxonomic categories (rodent, primate, canine) are nominal scales in biology. Variables on a nominal scale are often called categorical variables. For the neuroscientist, the best criterion for determining whether a variable is on a nominal scale is the “plotting” criterion. If you plot a bar chart of, say, means for the groups and order the groups in any way possible without making the graph “stupid,” then the variable is nominal or categorical. For example, were the variable strains of mice, then the order of the means is not material. Hence, “strain” is nominal or categorical. On the other hand, if the groups were 0 mg, 10 mg, and 15 mg of active drug, then having a bar chart with 15 mg first, then 0 mg, and finally 10 mg is stupid. Here “milligrams of drug” is not a nominal or categorical variable.

3.1.2 Ordinal Scales

Ordinal scales rank-order observations.

Mean and Variance of Ratio Estimators Used in Fluorescence Ratio Imaging

© 2000 Wiley-Liss, Inc. Cytometry 39:300–305 (2000) Mean and Variance of Ratio Estimators Used in Fluorescence Ratio Imaging G.M.P. van Kempen1* and L.J. van Vliet2 1Central Analytical Sciences, Unilever Research Vlaardingen, Vlaardingen, The Netherlands 2Pattern Recognition Group, Faculty of Applied Sciences, Delft University of Technology, Delft, The Netherlands Received 7 April 1999; Revision Received 14 October 1999; Accepted 26 October 1999

Background: The ratio of two measured fluorescence signals (called x and y) is used in different applications in fluorescence microscopy. Multiple instances of both signals can be combined in different ways to construct different ratio estimators.

Methods: The mean and variance of three estimators for the ratio between two random variables, x and y, are discussed. Given n samples of x and y, we can intuitively construct two different estimators: the mean of the ratio of each x and y and the ratio between the mean of x and the mean of y. The former is biased and the latter is only asymptotically unbiased. Using the statistical characteristics of this estimator, a third, unbiased estimator can be constructed.

Results: We tested the three estimators on simulated data, real-world fluorescence test images, and comparative genome hybridization (CGH) data. The results on the simulated and real-world test images confirm the presented theory. The CGH experiments show that our new estimator performs better than the existing estimators.

Conclusions: We have derived an unbiased ratio estimator that outperforms intuitive ratio estimators. Cytometry 39:300–305, 2000. © 2000 Wiley-Liss, Inc.

Key terms: ratio imaging; CGH; ratio estimation; mean;
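The two intuitive estimators named in the abstract, the mean of the ratios and the ratio of the means, can be compared with a small Python sketch. The function names and the simulation setup (independent uniform signals with a true ratio of means equal to 2) are assumptions made here for illustration; the paper's third, unbiased estimator is not reproduced because the excerpt does not give its formula.

```python
import random
from statistics import fmean


def mean_of_ratios(xs, ys):
    """Average the per-sample ratios x_i / y_i."""
    return fmean([x / y for x, y in zip(xs, ys)])


def ratio_of_means(xs, ys):
    """Divide the sample mean of x by the sample mean of y."""
    return fmean(xs) / fmean(ys)


def simulate(n=5, trials=2000, seed=0):
    """Average both estimators over many small samples (illustrative setup)."""
    rng = random.Random(seed)
    mor, rom = [], []
    for _ in range(trials):
        xs = [rng.uniform(15.0, 25.0) for _ in range(n)]  # E[x] = 20
        ys = [rng.uniform(5.0, 15.0) for _ in range(n)]   # E[y] = 10
        mor.append(mean_of_ratios(xs, ys))
        rom.append(ratio_of_means(xs, ys))
    return fmean(mor), fmean(rom)


avg_mean_of_ratios, avg_ratio_of_means = simulate()
print(avg_mean_of_ratios, avg_ratio_of_means)
```

In this setup the averaged mean of ratios should sit noticeably further from 2 than the averaged ratio of means, which is in line with the abstract's remark that the former is biased while the latter is only asymptotically unbiased.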

A Comparison of Two Estimates of Standard Error for a Ratio-Of-Means Estimator for a Mapped-Plot Sample Design in Southeast Alas

United States Department of Agriculture, Forest Service, Pacific Northwest Research Station, Research Note PNW-RN-532, June 2002. A Comparison of Two Estimates of Standard Error for a Ratio-of-Means Estimator for a Mapped-Plot Sample Design in Southeast Alaska. Willem W.S. van Hees.

Abstract: Comparisons of estimated standard error for a ratio-of-means (ROM) estimator are presented for forest resource inventories conducted in southeast Alaska between 1995 and 2000. Estimated standard errors for the ROM were generated by using a traditional variance estimator and also approximated by bootstrap methods. Estimates of standard error generated by both traditional and bootstrap methods were similar. Percentage differences between the traditional and bootstrap estimates of standard error for productive forest acres and for gross cubic-foot growth were generally greater than respective differences for nonproductive forest acres, net cubic volume, or nonforest acres.

Keywords: Sampling, inventory (forest), error estimation.

Introduction: Between 1995 and 2000, the Pacific Northwest Research Station (PNW) Forest Inventory and Analysis (FIA) Program in Anchorage, Alaska, conducted a land cover resource inventory of southeast Alaska (fig. 1). Land cover attribute estimates derived from this sample describe such features as area, timber volume, and understory vegetation. Each of these estimates is subject to measurement and sampling error. Measurement error is minimized through training and a program of quality control. Sampling error must be estimated mathematically. This paper presents a comparison of bootstrap estimation of standard error for a ratio-of-means estimator with a traditional estimation method. Bootstrap estimation techniques can be used when a complex sampling strategy hinders development of unbiased or reliable variance estimators (Schreuder and others 1993). Bootstrap techniques are resampling methods that treat the sample as if it were the whole population.
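The idea of treating the sample as if it were the whole population can be illustrated with a minimal bootstrap sketch in Python. This is a plain resampling of (x, y) pairs with replacement, not the research note's actual procedure for the mapped-plot design; the function names and the plot data below are made up for illustration.

```python
import random
from statistics import fmean, stdev


def ratio_of_means(pairs):
    """Ratio-of-means estimate: mean of y divided by mean of x."""
    xs = [x for x, _ in pairs]
    ys = [y for _, y in pairs]
    return fmean(ys) / fmean(xs)


def bootstrap_se(pairs, n_boot=1000, seed=0):
    """Standard deviation of the estimate over resamples drawn with replacement."""
    rng = random.Random(seed)
    n = len(pairs)
    estimates = []
    for _ in range(n_boot):
        # Draw n plots with replacement from the observed sample.
        resample = [pairs[rng.randrange(n)] for _ in range(n)]
        estimates.append(ratio_of_means(resample))
    return stdev(estimates)


# Hypothetical (x, y) values per plot, with x assumed positive.
plots = [(4.0, 9.1), (6.0, 11.8), (5.0, 10.5), (8.0, 15.2), (7.0, 14.9), (3.0, 5.7)]
print(ratio_of_means(plots), bootstrap_se(plots))
```

The spread of the resampled estimates serves as the standard error estimate, which can then be set beside a formula-based variance estimate in the way the note describes.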

5 RATIO and REGRESSION ESTIMATION 5.1 Ratio Estimation

5 RATIO AND REGRESSION ESTIMATION

5.1 Ratio Estimation

• Suppose the researcher believes an auxiliary variable (or covariate) x is associated with the variable of interest y. Examples:
- Variable of interest: the amount of lumber (in board feet) produced by a tree. Auxiliary variable: the diameter of the tree.
- Variable of interest: the current number of farms per county in the United States. Auxiliary variable: the number of farms per county taken from the previous census.
- Variable of interest: the income level of a person who is 40 years old. Auxiliary variable: the number of years of education completed by the person.

• Situation: we have bivariate (X, Y) data and assume there is a positive proportional relationship between X and Y. That is, on every sampling unit we take a pair of measurements and assume that $Y \approx BX$ for some constant $B > 0$.

• There are two cases that may be of interest to the researcher:
1. To estimate the ratio of two population characteristics. The most common case is the population ratio B of means or totals: $B = \bar{y}_U / \bar{x}_U = t_y / t_x$.
2. To use the relationship between X and Y to improve estimation of $t_y$ or $\bar{y}_U$.

• The sampling plan will be to take a SRS of n pairs $(x_1, y_1), \ldots, (x_n, y_n)$ from the population of N pairs. We will use the following notation:
$\bar{x}_U = \left(\sum_{i=1}^{N} x_i\right)/N$, $t_x = \sum_{i=1}^{N} x_i$, $\bar{y}_U = \left(\sum_{i=1}^{N} y_i\right)/N$, $t_y = \sum_{i=1}^{N} y_i$,
$\bar{x} = \sum_{i=1}^{n} x_i / n$ = the sample mean of the x's.
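The two uses of the ratio listed above can be sketched in Python. The excerpt stops before giving the estimators, so the formulas here are the usual textbook ones and should be read as an assumption: the sample ratio is the sample mean of y over the sample mean of x, the estimated total of y is that ratio times the known total of x, and the estimated mean of y is that ratio times the known population mean of x. The function name and the sample values are hypothetical.

```python
from statistics import fmean


def ratio_estimates(sample, t_x, N):
    """Return (B_hat, estimated total of y, estimated mean of y).

    sample: list of (x_i, y_i) pairs from a simple random sample
    t_x:    known population total of the auxiliary variable x
    N:      population size
    """
    x_bar = fmean([x for x, _ in sample])
    y_bar = fmean([y for _, y in sample])
    b_hat = y_bar / x_bar          # case 1: estimate of the ratio B
    t_y_hat = b_hat * t_x          # case 2: ratio estimate of the total of y
    y_bar_hat = b_hat * (t_x / N)  # case 2: ratio estimate of the mean of y
    return b_hat, t_y_hat, y_bar_hat


# Hypothetical data: 3 sampled units from a population of N = 40 with t_x = 1000.
sample = [(10.0, 20.0), (20.0, 42.0), (30.0, 58.0)]
b_hat, t_y_hat, y_bar_hat = ratio_estimates(sample, t_x=1000.0, N=40)
```

With these made-up numbers the sample means are 20 for x and 40 for y, so the sample ratio is 2, the estimated total of y is 2000, and the estimated mean of y is 50.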

Proportions: a Ratio Is the Quotient of Two Numbers. for Example, 2 3 Is A

Proportions:

A ratio is the quotient of two numbers. For example, 2/3 is a ratio of 2 and 3.

An equality of two ratios is a proportion. For example, 3/7 = 15/35 is a proportion.

If two sets of numbers (none of which is 0) are in a proportion, then we say that the numbers are proportional. For example, if a/b = c/d, then a and b are proportional to c and d.

Remember from algebra that, if a/b = c/d, then ad = bc.

The following proportions are equivalent: a/b = c/d, a/c = b/d, b/a = d/c, c/a = d/b.

Theorem: Three or more parallel lines divide transversals into proportion.

[Figure: parallel lines l, m, and n cutting two transversals into segments a, b and c, d]

In the picture above, lines l, m, and n are parallel to each other. This means that they divide the two transversals into proportion. In other words, a/b = c/d, a/c = b/d, b/a = d/c, c/a = d/b.

Theorem: In any triangle, a line that is parallel to one of the sides divides the other two sides in a proportion.

[Figure: triangle AEB with FG parallel to AB, cutting EA into segments a, b and EB into segments c, d]

In the picture above, FG is parallel to AB, so it divides the other two sides of △AEB, EA and EB, into proportions. In particular, a/b = c/d, b/a = d/c, a/c = b/d, c/a = d/b.

Theorem: The bisector of an angle in a triangle divides the opposite side into segments that are proportional to the adjacent sides.