Manifesto for a Musebot Ensemble: a Platform for Live Interactive Performance Between Multiple Autonomous Musical Agents

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

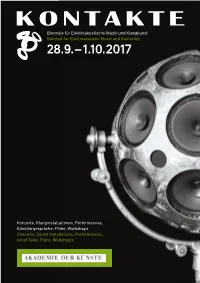

Konzerte, Klanginstallationen, Performances, Künstlergespräche, Filme, Workshops Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops

KONTAKTE '17: Biennale für Elektroakustische Musik und Klangkunst (Biennial for Electroacoustic Music and Sound Art), 28 September to 1 October 2017, Akademie der Künste, Berlin. Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops. A festival presented by the Studio for Electroacoustic Music of the Akademie der Künste, in collaboration with Deutsche Gesellschaft für Elektroakustische Musik, Berliner Künstlerprogramm des DAAD, Universität der Künste Berlin, Hochschule für Musik Hanns Eisler Berlin, Technische Universität Berlin, Klangzeitort, Helmholtz Zentrum Berlin, Ensemble ascolta, Musik der Jahrhunderte (Stuttgart), Institut für Elektronische Musik und Akustik der Kunstuniversität Graz, Laboratorio Nacional de Música Electroacústica de Cuba, singuhr – projekte, Heroines of Sound, Lebenshilfe Berlin, Deutschlandfunk Kultur, and France Culture. Contents: Programme Overview, Concerts, Installations, Forum, Exhibition, Workshop, Biographies, Partners, Site Plan, Tickets and Information. Studio für Elektroakustische Musik der Akademie der Künste, Hanseatenweg 10, 10557 Berlin. Phone: +49 (0) 30 200572236. www.adk.de/sem. EMail: [email protected]. www.adk.de/kontakte17, #kontakte17.
The two years that have passed since the first edition of KONTAKTE in 2015 were an eventful time for the Studio for Electroacoustic Music. In mid-2015 the studio received a generous in-kind donation of decommissioned studio equipment from Deutsche Telekom, which, after the necessary planning and maintenance work, has opened up new production possibilities since 2016.

An AI Collaborator for Gamified Live Coding Music Performances

Autopia: An AI Collaborator for Gamified Live Coding Music Performances. Norah Lorway,1 Matthew Jarvis,1 Arthur Wilson,1 Edward J. Powley,2 and John Speakman2. Abstract. Live coding is "the activity of writing (parts of) a program while it runs" [20]. One significant application of live coding is in algorithmic music, where the performer modifies the code generating the music in a live context. Utopia is a software tool for collaborative live coding performances, allowing several performers (each with their own laptop producing its own sound) to communicate and share code during a performance. We propose an AI bot, Autopia, which can participate in such performances, communicating with human performers through Utopia. This form of human-AI collaboration allows us to explore the implications of computational creativity from the perspective of live coding. 1 Background [...] regards to this dynamic between the performer and audience, where the level of risk involved in the performance is made explicit. However, in the context of the system proposed in this paper, we are more concerned with the effect that this has on the performer themselves. Any performer at an algorave must be prepared to share their code publicly, which inherently encourages a mindset of collaboration and communal learning with live coders. 1.2 Collaborative live coding. Collaborative live coding takes its roots from laptop orchestra/ensemble such as the Princeton Laptop Orchestra (PLOrk), an ensemble of computer based instruments formed at Princeton University [19].

Spaces to Fail In: Negotiating Gender, Community and Technology in Algorave

Spaces to Fail in: Negotiating Gender, Community and Technology in Algorave. Feature Article. Joanne Armitage, University of Leeds (UK). Abstract: Algorave presents itself as a community that is open and accessible to all, yet historically, there has been a lack of diversity on both the stage and dance floor. Through women-only workshops, mentoring and other efforts at widening participation, the number of women performing at algorave events has increased. Grounded in existing research in feminist technology studies, computing education and gender and electronic music, this article unpacks how techno, social and cultural structures have gendered algorave. These ideas will be elucidated through a series of interviews with women participating in the algorave community, to centrally argue that gender significantly impacts an individual’s ability to engage and interact within the algorave community. I will also consider how live coding, as an embodied techno-social form, is represented at events and hypothesise as to how it could grow further as an inclusive and feminist practice. Keywords: gender; algorave; embodiment; performance; electronic music. Joanne Armitage lectures in Digital Media at the School of Media and Communication, University of Leeds. Her work covers areas such as physical computing, digital methods and critical computing. Currently, her research focuses on coding practices, gender and embodiment. In 2017 she was awarded the British Science Association’s Daphne Oram award for digital innovation. She is a current recipient of Sound and Music’s Composer-Curator fund. Outside of academia she regularly leads community workshops in physical computing, live coding and experimental music making. Joanne is an internationally recognised live coder and contributes to projects including laptop ensemble, OFFAL and algo-pop duo ALGOBABEZ.

International Computer Music Conference (ICMC/SMC)

Conference Program: 40th International Computer Music Conference joint with the 11th Sound and Music Computing conference. Music Technology Meets Philosophy: From digital echos to virtual ethos. ICMC|SMC|2014, 14-20 September 2014, Athens, Greece. Editor: Kostas Moschos. Published by: The National and Kapodistrian University of Athens, Music Department and Department of Informatics & Telecommunications, Panepistimioupolis, Ilissia, GR-15784, Athens, Greece; The Institute for Research on Music & Acoustics, http://www.iema.gr/, Adrianou 105, GR-10558, Athens, Greece. IEMA ISBN: 978-960-7313-25-6. UOA ISBN: 978-960-466-133-6. © September 2014. All copyrights reserved. Contents: Sponsors, Preface, Summer School

Combining Live Coding and VJing for Live Visual Performance

CJing: Combining Live Coding and VJing for Live Visual Performance, by Jack Voldemars Purvis. A thesis submitted to the Victoria University of Wellington in fulfilment of the requirements for the degree of Master of Science in Computer Graphics. Victoria University of Wellington, 2019. Abstract: Live coding focuses on improvising content by coding in textual interfaces, but this reliance on low level text editing impairs usability by not allowing for high level manipulation of content. VJing focuses on remixing existing content with graphical user interfaces and hardware controllers, but this focus on high level manipulation does not allow for fine-grained control where content can be improvised from scratch or manipulated at a low level. This thesis proposes the code jockey practice (CJing), a new hybrid practice that combines aspects of live coding and VJing practice. In CJing, a performer known as a code jockey (CJ) interacts with code, graphical user interfaces and hardware controllers to create or manipulate real-time visuals. CJing harnesses the strengths of live coding and VJing to enable flexible performances where content can be controlled at both low and high levels. Live coding provides fine-grained control where content can be improvised from scratch or manipulated at a low level while VJing provides high level manipulation where content can be organised, remixed and interacted with. To illustrate CJing, this thesis contributes Visor, a new environment for live visual performance that embodies the practice. Visor's design is based on key ideas of CJing and a study of live coders and VJs in practice.

Improv: Live Coding for Robot Motion Design

Improv: Live Coding for Robot Motion Design. Alexandra Q. Nilles, Chase Gladish and Mattox Beckman (Department of Computer Science, University of Illinois at Urbana-Champaign); Amy LaViers (Mechanical Science and Engineering Department, University of Illinois at Urbana-Champaign). ABSTRACT: Often, people such as educators, artists, and researchers wish to quickly generate robot motion. However, current toolchains for programming robots can be difficult to learn, especially for people without technical training. This paper presents the Improv system, a programming language for high-level description of robot motion with immediate visualization of the resulting motion on a physical or simulated robot. Improv includes a "live coding" wrapper for ROS ("Robot Operating System", an open-source robot software framework which is widely used in academia and industry, and integrated with many commercially available robots). Commands in Improv are compiled to ROS messages. The language is inspired by choreographic techniques, and allows the user to compose and transform movements in space and time. In this paper, we present our work on Improv so far, as well as the design decisions made throughout its creation. Permission to make digital or hard copies of part or all of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page.

AIMC2021 Paper

Autopia: An AI collaborator for live networked computer music performance. Norah Lorway (Academy of Music & Theatre Arts, Falmouth University, UK), Edward J. Powley (Games Academy, Falmouth University, UK) and Arthur Wilson (School of Communication, Royal College of Art, UK). [email protected], [email protected], [email protected]. Abstract. This paper describes an AI system, Autopia, designed to participate in collaborative live coding music performances using the Utopia software tool for SuperCollider. This form of human-AI collaboration allows us to explore the implications of mixed-initiative computational creativity from the perspective of live coding. As well as collaboration with human performers, one of our motivations with Autopia is to explore audience collaboration through a gamified mode of interaction, namely voting through a web-based interface accessed by the audience on their smartphones. The results of this are often emergent, chaotic, and surprising to performers and audience alike. Keywords: 1 Introduction. 1.1 Live Coding. Live coding is the activity of manipulating, interacting and writing parts of a program whilst it runs (Ward et al., 2004). Whilst live coding can be used in a variety of contexts, it is most commonly used to create improvised computer music and visual art. The diversity of musical and artistic output achievable with live coding techniques has seen practitioners perform in many different settings, including jazz bars, festivals and algoraves, events in which performers use algorithms to create both music and visuals that can be performed in the context of a rave. What began as a niche practice has evolved into an international community of artists, programmers, and researchers.

Fun and Software

Fun and Software: Exploring Pleasure, Paradox and Pain in Computing. Edited by Olga Goriunova. Bloomsbury Academic, an imprint of Bloomsbury Publishing Inc. 1385 Broadway, New York, NY 10018, USA; 50 Bedford Square, London WC1B 3DP, UK. www.bloomsbury.com. Bloomsbury is a registered trade mark of Bloomsbury Publishing Plc. First published 2014. © Olga Goriunova and contributors, 2014. All rights reserved. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage or retrieval system, without prior permission in writing from the publishers. No responsibility for loss caused to any individual or organization acting on or refraining from action as a result of the material in this publication can be accepted by Bloomsbury or the author. Library of Congress Cataloging-in-Publication Data: A catalog record for this book is available from the Library of Congress. ISBN: HB: 978-1-6235-6094-2, ePub: 978-1-6235-6756-9, ePDF: 978-1-6235-6887-0. Typeset by Fakenham Prepress Solutions, Fakenham, Norfolk NR21 8NN. Printed and bound in the United States of America. Contents: Acknowledgements; Introduction (Olga Goriunova); 1 Technology, Logistics and Logic: Rethinking the Problem of Fun in Software (Andrew Goffey); 2 Bend Sinister: Monstrosity and Normative Effect in Computational Practice (Simon Yuill); 3 Always One Bit More, Computing and the Experience of Ambiguity (Matthew Fuller); 4 Do Algorithms Have Fun? On Completion, Indeterminacy

Dancecult 10(1) Programming Technique

Algorithmic Electronic Dance Music. Guest Editors: Shelly Knotts and Nick Collins. Volume 10 Number 1 2018. Dancecult: Journal of Electronic Dance Music Culture, Issue 10(1) 2018, ISSN 1947-5403. ©2018 Dancecult. Published yearly at <http://dj.dancecult.net>. In memory of Ed Montano, Dancecult Reviews Editor, Production Editor, Production Assistant, Operations Director 2009-2018. Dancecult: Journal of Electronic Dance Music Culture is a peer-reviewed, open-access e-journal for the study of electronic dance music culture (EDMC). Launched in 2009, as a platform for interdisciplinary scholarship on the shifting terrain of EDMCs worldwide, Dancecult houses research exploring the sites, technologies, sounds and cultures of electronic music in historical and contemporary perspectives. Playing host to studies of emergent forms of electronic music production, performance, distribution, and reception, as a portal for cutting-edge research on the relation between bodies, technologies, and cyberspace, as a medium through which the cultural politics of dance is critically investigated, and as a venue for innovative multimedia projects, Dancecult is the leading venue for research on EDMC. Executive Editor: Graham St John (University of Fribourg, CH). From the floor Editors: Alice O’Grady (University of Leeds, UK), Dave Payling (Staffordshire University, UK). Reviews Editor: Toby Young (University of Oxford, UK). Cover: The Yorkshire Programming Ensemble (TYPE) live at Open Data Institute in 2017. Image shows laptop band members Lucy Cheesman, Ryan Kirkbride, and Laurie

Reflections on the Creative and Technological Development of the Audiovisual Duo—The Rebel Scum

Rogue Two Reflections on the Creative and Technological Development of the Audiovisual Duo—The Rebel Scum Feature Article Ryan Ross Smith and Shawn Lawson Monash University (Australia) / Rensselaer Polytechnic Institute (US) Abstract This paper examines the development of the audiovisual duo Obi-Wan Codenobi and The Wookie (authors Shawn Lawson and Ryan Ross Smith respectively). The authors trace a now four-year trajectory of technological and artistic development, while highlighting the impact that a more recent physical displacement has had on the creative and collaborative aspects of the project. We seek to reflect upon the creative and technological journey through our collaboration, including Lawson’s development of The Force, an OpenGL shader-based live-coding environment for generative visuals, while illuminating our experiences with, and takeaways from, live coding in practice and performance, EDM in general and algorave culture specifically. Keywords: live coding; collaboration; EDM; audiovisual; Star Wars Ryan Ross Smith is a composer, performer and educator based in Melbourne, Australia. Smith has performed and had his music performed in North America, Iceland, Denmark, Australia and the UK, and has presented his work and research at conferences including NIME, ISEA, ICLI, ICLC, SMF and TENOR. Smith is also known for his work with Animated Notation, and his Ph.D. research website is archived at animatednotation.com. He is a Lecturer in composition and creative music technology at Monash University in Melbourne, Australia. Email: ryanrosssmith [@] gmail [.] com . Web: <http://www.ryanrosssmith.com/> Shawn Lawson is a visual media artist creating the computational sublime. As Obi-Wan Codenobi, he live-codes, real-time computer graphics with his software: The Force & The Dark Side. -

In Kepler's Gardens

In Kepler’s Gardens: A global journey mapping the ‘new’ world. ARS ELECTRONICA 2020, Festival for Art, Technology & Society. Cover themes: AUTONOMY, UNCERTAINTY, ECOLOGY, DEMOCRACY, TECHNOLOGY, HUMANITY. Cover locations: Helsinki, Jerusalem, Cairo, Bangkok, Esch, Tallinn, Nicosia, Grenoble, Melbourne, Linz, Paris, London, Oslo, Moscow, Auckland, Vilnius, Amsterdam, Tokyo, Johannesburg, Munich, Bucharest, Belgrade, San Sebastian, Riga, Dublin, Daejeon, Utrecht, Vienna, Los Angeles, Ljubljana, Buenos Aires, Barcelona, Montréal, Chicago, Seoul, Prague, Hong Kong, Athens, Bergen, Berlin, Leiden, Milan, New York, Brussels, Taipei, Jakarta. Edited by Gerfried Stocker / Christine Schöpf / Hannes Leopoldseder. Ars Electronica 2020, Festival for Art, Technology, and Society, September 9–13, 2020, Ars Electronica, Linz. Editors: Hannes Leopoldseder, Christine Schöpf, Gerfried Stocker. Editing: Veronika Liebl, Anna Grubauer, Maria Koller, Alexander Wöran. Translations (German–English): Douglas Deitemyer, Daniel Benedek. Copyediting: Laura Freeburn, Mónica Belevan. Graphic design and production: Main layout: Cornelia Prokop, Lunart Werbeagentur. Cover: Gerhard Kirchschläger. Minion Typeface: IBM Plex Sans. Printed by: Gutenberg-Werbering Gesellschaft m.b.H., Linz. Paper: MagnoEN Bulk 1,1 Vol., 115 g/m², 300 g/m². PEFC Certified: This product is from sustainably managed forests and controlled sources, www.pefc.org. © 2020 Ars Electronica. © 2020 for the reproduced works by the artists,

The Social Mediation of Electronic Music Production

People, Platforms, Practice: The Social Mediation of Electronic Music Production. Paul Chambers. Thesis submitted for the degree of Doctor of Philosophy, Faculty of Arts, School of Social Sciences, Discipline of Anthropology, The University of Adelaide, May 2019. Contents: Abstract; Thesis Declaration; Acknowledgements; List of Figures. Introduction: Electronic Music: Material, Aesthetics and Distinction; Electronic Music: Born’s Four Planes of Social Mediation; Methodology; Thesis Overview. Section 1: The Subject. Chapter 1, Music & Identity in the ‘Post-Internet’: Music, Gender and the Self; Val: Clubbing in ‘Digitopia’; Sacha: Pasifika Futurism; Nico & Alex: ‘Digital Queering’; Travis & Moe: ‘Persona Empires’; Conclusion. Chapter 2, Politics of the Personal: Music, Freedom & Modernity; Brother Lucid & Kyle: The Studio as Autonomous Zone; Nix & Bloodbottler: Transposing Alienation; Klaus, Clifford & Red Robin: Frequency Response; Ali & Miles: Laptop Lyricists; Conclusion. Section 2: The Object. Chapter 3, Anyone Can Make It: The DAW & the Future of Pedagogy; Ableton: Meet the Superstar; Learning the Tropes: The Role of the Institution; Jamming with Myself: Who Needs a Band?; Code Composers: Who Needs a Human?; Conclusion. Chapter 4, Eurorack Revolution & the Maker Movement: Towards a Theory of the Modular; Sound Affects: How Low Can I Go?; Patching into the Field: Interacting with a Sonic Ecology; Glad Obsession: Capitalism and Audiophilia