Decomposition Theory: a Practice-Based Study of Popular Music Composition Strategies

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications

-

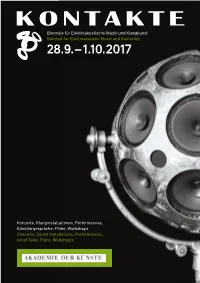

Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops

KONTAKTE '17: Biennial for Electroacoustic Music and Sound Art, 28 September to 1 October 2017, Akademie der Künste, Berlin. Concerts, sound installations, performances, artist talks, films, workshops.

A festival presented by the Studio for Electroacoustic Music of the Akademie der Künste, in collaboration with Deutsche Gesellschaft für Elektroakustische Musik, Berliner Künstlerprogramm des DAAD, Universität der Künste Berlin, Hochschule für Musik Hanns Eisler Berlin, Technische Universität Berlin, Klangzeitort, Helmholtz Zentrum Berlin, Ensemble ascolta, Musik der Jahrhunderte (Stuttgart), Institut für Elektronische Musik und Akustik der Kunstuniversität Graz, Laboratorio Nacional de Música Electroacústica de Cuba, singuhr – projekte, Heroines of Sound, Lebenshilfe Berlin, Deutschlandfunk Kultur, and France Culture.

Contents: Programme overview; Concerts; Installations; Forum; Exhibition; Workshop; Biographies; Partners; Site plan; Tickets and information.

Studio für Elektroakustische Musik der Akademie der Künste, Hanseatenweg 10, 10557 Berlin. Phone: +49 (0) 30 200572236. www.adk.de/sem. Email: [email protected]. www.adk.de/kontakte17 #kontakte17

The two years since the first edition of KONTAKTE in 2015 have been an eventful time for the Studio for Electroacoustic Music. In mid-2015 the studio received a generous in-kind donation of decommissioned studio equipment from Deutsche Telekom, which, after the necessary planning and maintenance work, has opened up new production possibilities since 2016.

Autechre Confield Mp3, Flac, Wma

Autechre, Confield (mp3, flac, wma). Genre: Electronic. Album: Confield. Country: UK. Released: 2001. Style: Abstract, IDM, Experimental. MP3 version RAR size: 1635 mb. FLAC version RAR size: 1586 mb. WMA version RAR size: 1308 mb. Rating: 4.3. Votes: 182. Other formats: AU, DXD, AC3, MPC, MP4, TTA, MMF.

Tracklist: 1. VI Scose Poise (6:56); 2. Cfern (6:41); 3. Pen Expers (7:08); 4. Sim Gishel (7:14); 5. Parhelic Triangle (6:03); 6. Bine (4:41); 7. Eidetic Casein (6:12); 8. Uviol (8:35); 9. Lentic Catachresis (8:30).

Companies, etc.: Phonographic Copyright (p): Warp Records Limited. Copyright (c): Warp Records Limited. Published By: Warp Music; Electric And Musical Industries. Made By: Universal M & L, UK.

Credits: Mastered By: Frank Arkwright. Producer [AE Production]: Brown*, Booth*.

Notes: Published by Warp Music / Electric and Musical Industries. (p) and (c) 2001 Warp Records Limited. Made in England. Packaging: white tray jewel case with four-page booklet. As with some other Autechre releases on Warp, this album was assigned a catalogue number that was significantly ahead of the normal sequence (i.e. WARPCD127 and WARPCD129 weren't released until February and March 2005 respectively). Some copies came with miniature postcards with a sheet of stickers on the front that say 'autechre' in the Confield font.

Barcode and Other Identifiers: Barcode (Sticker): 5 021603 128125. Barcode (Printed): 5021603128125. Matrix / Runout (Variant 1 to 3): WARPCD128 03 5. Matrix / Runout (Variant 1 to 3, Etched In Inner Plastic Hub): MADE IN THE UK BY UNIVERSAL M&L. Mastering SID Code

French Underground Raves of the Nineties. Aesthetic Politics of Affect and Autonomy Jean-Christophe Sevin

French underground raves of the nineties. Aesthetic politics of affect and autonomy. Jean-Christophe Sevin.

To cite this version: Jean-Christophe Sevin. French underground raves of the nineties. Aesthetic politics of affect and autonomy. Political Aesthetics: Culture, Critique and the Everyday, Arundhati Virmani, pp.71-86, 2016, 978-0-415-72884-3. halshs-01954321

HAL Id: halshs-01954321. https://halshs.archives-ouvertes.fr/halshs-01954321. Submitted on 13 Dec 2018.

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

In Arundhati Virmani (ed.), Political Aesthetics: Culture, Critique and the Everyday, London, Routledge, 2016, p.71-86.

The emergence of techno music – the term commonly used in France for electronic dance music – in the early 1990s is inseparable from rave parties as a form of spatiotemporal deployment. This means that live diffusion through a sound system powerful enough to convey not only the music's volume but also its full frequency spectrum, including infrabass, is an integral part of the techno experience. In other words, listening on domestic equipment is not a sufficient condition to experience this music.

An AI Collaborator for Gamified Live Coding Music Performances

Autopia: An AI Collaborator for Gamified Live Coding Music Performances. Norah Lorway, Matthew Jarvis, Arthur Wilson, Edward J. Powley, and John Speakman. Provided by Falmouth University Research Repository (FURR) via CORE (core.ac.uk).

Abstract. Live coding is "the activity of writing (parts of) a program while it runs" [20]. One significant application of live coding is in algorithmic music, where the performer modifies the code generating the music in a live context. Utopia is a software tool for collaborative live coding performances, allowing several performers (each with their own laptop producing its own sound) to communicate and share code during a performance. We propose an AI bot, Autopia, which can participate in such performances, communicating with human performers through Utopia. This form of human-AI collaboration allows us to explore the implications of computational creativity from the perspective of live coding.

1 Background

[...] regards to this dynamic between the performer and audience, where the level of risk involved in the performance is made explicit. However, in the context of the system proposed in this paper, we are more concerned with the effect that this has on the performer themselves. Any performer at an algorave must be prepared to share their code publicly, which inherently encourages a mindset of collaboration and communal learning with live coders.

1.2 Collaborative live coding

Collaborative live coding takes its roots from laptop orchestra/ensemble such as the Princeton Laptop Orchestra (PLOrk), an ensemble of computer based instruments formed at Princeton University [19].

2018 Celebrity Birthday Book!

Contents. 1 2018. 1.1 January: January 1 - Verne Troyer gets the start of a project (2018-01-01 00:02); January 2 - Jack Hanna gets animal considerations (2018-01-02 09:00); January 3 - Dan Harmon gets pestered (2018-01-03 09:00); January 4 - Dave Foley gets an outdoor slumber (2018-01-04 09:00); January 5 - deadmau5 gets a restructured week (2018-01-05 09:00); January 6 - Julie Chen gets variations on a dining invitation (2018-01-06 09:00); January 7 - Katie Couric gets a baristo’s indolence (2018-01-07 09:00); January 8 - Jenny Lewis gets a young Peter Pan (2018-01-08 09:00); January 9 - Joan Baez gets Mickey Brennan’d (2018-01-09 09:00); January 10 - Jemaine Clement gets incremental name dropping (2018-01-10 09:00); January 11 - Mary J. Blige gets transferable Bop-It skills (2018-01-11 09:00); January 12 - Raekwon gets world leader factoids (2018-01-12 09:00); January 13 - Julia Louis-Dreyfus gets a painful hallumination (2018-01-13 09:00); January 14 - Jason Bateman gets a squirrel’s revenge (2018-01-14 09:00); January 15 - Charo gets an avian alarm (2018-01-15 09:00); January 16 - Lin-Manuel Miranda gets an alternate path to a coveted award (2018-01-16 09:00); January 17 - Joshua Malina gets a Baader-Meinhof’d rice pudding (2018-01-17 09:00); January 18 - Jason Segel gets a body donation (2018-01-18 09:00).

Spaces to Fail In: Negotiating Gender, Community and Technology in Algorave

Spaces to Fail in: Negotiating Gender, Community and Technology in Algorave. Feature Article. Joanne Armitage, University of Leeds (UK).

Abstract: Algorave presents itself as a community that is open and accessible to all, yet historically, there has been a lack of diversity on both the stage and dance floor. Through women-only workshops, mentoring and other efforts at widening participation, the number of women performing at algorave events has increased. Grounded in existing research in feminist technology studies, computing education and gender and electronic music, this article unpacks how techno, social and cultural structures have gendered algorave. These ideas will be elucidated through a series of interviews with women participating in the algorave community, to centrally argue that gender significantly impacts an individual’s ability to engage and interact within the algorave community. I will also consider how live coding, as an embodied techno-social form, is represented at events and hypothesise as to how it could grow further as an inclusive and feminist practice.

Keywords: gender; algorave; embodiment; performance; electronic music

Joanne Armitage lectures in Digital Media at the School of Media and Communication, University of Leeds. Her work covers areas such as physical computing, digital methods and critical computing. Currently, her research focuses on coding practices, gender and embodiment. In 2017 she was awarded the British Science Association’s Daphne Oram award for digital innovation. She is a current recipient of Sound and Music’s Composer-Curator fund. Outside of academia she regularly leads community workshops in physical computing, live coding and experimental music making. Joanne is an internationally recognised live coder and contributes to projects including laptop ensemble, OFFAL and algo-pop duo ALGOBABEZ.

Exposing Corruption in Progressive Rock: a Semiotic Analysis of Gentle Giant’s the Power and the Glory

University of Kentucky, UKnowledge. Theses and Dissertations--Music. Music. 2019.

EXPOSING CORRUPTION IN PROGRESSIVE ROCK: A SEMIOTIC ANALYSIS OF GENTLE GIANT’S THE POWER AND THE GLORY. Robert Jacob Sivy, University of Kentucky, [email protected]. Digital Object Identifier: https://doi.org/10.13023/etd.2019.459

Recommended Citation: Sivy, Robert Jacob, "EXPOSING CORRUPTION IN PROGRESSIVE ROCK: A SEMIOTIC ANALYSIS OF GENTLE GIANT’S THE POWER AND THE GLORY" (2019). Theses and Dissertations--Music. 149. https://uknowledge.uky.edu/music_etds/149

This Doctoral Dissertation is brought to you for free and open access by the Music at UKnowledge. It has been accepted for inclusion in Theses and Dissertations--Music by an authorized administrator of UKnowledge. For more information, please contact [email protected].

STUDENT AGREEMENT: I represent that my thesis or dissertation and abstract are my original work. Proper attribution has been given to all outside sources. I understand that I am solely responsible for obtaining any needed copyright permissions. I have obtained needed written permission statement(s) from the owner(s) of each third-party copyrighted matter to be included in my work, allowing electronic distribution (if such use is not permitted by the fair use doctrine) which will be submitted to UKnowledge as Additional File. I hereby grant to The University of Kentucky and its agents the irrevocable, non-exclusive, and royalty-free license to archive and make accessible my work in whole or in part in all forms of media, now or hereafter known.

Festival 30000 LP SERIES 1961-1989

AUSTRALIAN RECORD LABELS: FESTIVAL 30,000 LP SERIES 1961-1989. COMPILED BY MICHAEL DE LOOPER, AUGUST 2020.

FESTIVAL LP LABEL ABBREVIATIONS, 1961 TO 1973

| Prefix | Label |
| --- | --- |
| AML, SAML, SML, SAM | A&M |
| SODL | A&M - ODE |
| SASL | A&M - SUSSEX |
| SARL | AMARET |
| ML, SML | AMPAR, ABC PARAMOUNT, GRAND AWARD |
| SAT, SATAL | ATA |
| AL, SAL | ATLANTIC |
| SAVL | AVCO EMBASSY |
| SBNL | BANNER |
| BCL, SBCL | BARCLAY |
| BBC | BBC |
| SBTL | BLUE THUMB |
| BL | BRUNSWICK |
| CBYL, SCBYL | CARNABY |
| SCHL | CHART |
| SCYL | CHRYSALIS |
| MCL | CLARION |
| NDL, SNDL, SNC | COMMAND |
| SCUL | COMMONWEALTH UNITED |
| CML, CML, CMC | CONCERT-DISC |
| CL, SCL | CORAL |
| DDL, SDDL | DAFFODIL |
| SDJL | DJM |
| ZL, SZL | DOT |
| DML, SDML | DU MONDE |
| SDRL | DURIUM |
| EL | EMBER |
| EC, SEC, EL, SEL | EVEREST |
| SFYL | FANTASY |
| DL, SDL | FESTIVAL |
| FC | FESTIVAL |
| FL, SFL | FESTIVAL |
| GNPL, SGNPL | GNP CRESCENDO |
| HVL, SHVL | HISPAVOX |
| SHWL | HOT WAX |
| IRL, SIRL | IMPERIAL |
| IL | IMPULSE |
| SINL | INFINITY |
| SITFL | INTERFUSION |
| SIVL | INVICTUS |
| SIL | ISLAND |
| KL | KOMMOTION |
| LL | LEEDON |
| SLHL | LEE HAZLEWOOD INTERNATIONAL |
| LYL, SLYL, SLY | LIBERTY |
| DL | LINDA LEE |
| SML, SMML | METROMEDIA |
| PL, SPL | MONUMENT |
| MRL | MUSHROOM |
| SPGL | PAGE ONE |
| PML, SPML | PARAMOUNT |
| SPFL | PENNY FARTHING |
| PJL, SPJL | PROJECT 3 |
| RGL | REG GRUNDY |
| RL | REX |
| JL, SJL | SCEPTER |
| SKL | STAX |
| SBL | STEADY |
| NL, SNL | SUN |
| QL, SQL | SUNSHINE |
| EL, SEL | SPIN |
| TRL, STRL | TOP RANK |
| TAL, STAL | TRANSATLANTIC |
| TL, STL | 20TH CENTURY-FOX |
| UAL, SUAL, SUL | UNITED ARTISTS |
| SVHL | VIOLETS HOLIDAY |
| VL | VOCALION |
| SVL | VOGUE |
| APL | VOX |
| WA | WALLIS |
| APC, WC, SWC | WESTMINSTER |
| SWWL | WHITE WHALE |

| Catalogue no. | Title | Artist |
| --- | --- | --- |
| FL 30,001 | THE BEST OF THE TRAPP FAMILY SINGERS, RECORD 1 | TRAPP FAMILY SINGERS |
| FL 30,002 | THE BEST OF THE TRAPP FAMILY SINGERS, RECORD 2 | TRAPP FAMILY SINGERS |
| SFL 930,003 | BRAZAN BRASS | HENRY JEROME ORCHESTRA |
| SEC 930,004 | THE LITTLE TRAIN OF THE CAIPIRA | LONDON SYMPHONY ORCHESTRA |
| SFL 930,005 | CONCERTO FLAMENCO | VINCENTE GOMEZ |
| SFL 930,006 | IRISH SING-ALONG | BILL SHEPHERD SINGERS |
| FL 30,007 | FACE TO FACE, RECORD 1 | INTERVIEWS BY PETE MARTIN |
| FL 30,008 | FACE TO FACE, RECORD 2 | INTERVIEWS BY PETE MARTIN |
| SCL 930,009 | LIBERACE AT THE PALLADIUM | LIBERACE |

RL 30,010 RENDEZVOUS WITH NOELINE BATLEY AUS NOELEEN BATLEY 6.61; 30,011; 30,012; RL 30,013 MORIAH COLLEGE JUNIOR CHOIR AUS ARR.

Neotrance and the Psychedelic Festival

Neotrance and the Psychedelic Festival. GRAHAM ST JOHN, UNIVERSITY OF REGINA, UNIVERSITY OF QUEENSLAND.

Abstract: This article explores the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of what I call neotrance apparent within the contemporary trance event, the countercultural inheritance of the “tribal” psytrance festival, and the dramatizing of participants’ “ultimate concerns” within the festival framework. An exploration of the psychedelic festival offers insights on ecstatic (self-transcendent), performative (self-expressive) and reflexive (conscious alternative) trajectories within psytrance music culture. I address this dynamic with reference to Portugal’s Boom Festival.

Keywords: psytrance, neotrance, psychedelic festival, trance states, religion, new spirituality, liminality, neotribe

Figure 1: Main Floor, Boom Festival 2008, Portugal. Photo by jakob kolar, www.jacomedia.net

As electronic dance music cultures (EDMCs) flourish in the global present, their religious and/or spiritual character has become a common subject of exploration for scholars of religion, music and culture. This article addresses the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of the contemporary trance event which I call neotrance, the countercultural inheritance of the “tribal” psytrance festival, and the dramatizing of participants’ “ultimate concerns” within the framework of the “visionary” music festival.

Dancecult: Journal of Electronic Dance Music Culture 1(1) 2009, 35-64. ISSN 1947-5403. ©2009 Dancecult. http://www.dancecult.net/ DOI 10.12801/1947-5403.2009.01.01.03

Development of Musical Ideas in Compositions by Tortoise

DEVELOPMENT OF MUSICAL IDEAS IN COMPOSITIONS BY TORTOISE. Reiner Krämer.

Prologue

For more than 25 years, the Chicago-based experimental rock band Tortoise has crossed over multiple popular music genres (ambient music, krautrock, dub, electronica, drums and bass, reggae, etc.) as well as different types of jazz music, and has made use of minimalist Western art music. The group is often labeled a pioneer of the »post-rock« genre. Allegedly, Simon Reynolds first used the term in his review of the album Hex by the English east London band Bark Psychosis (Reynolds 1994a) and shortly afterwards in his article »Shaking the Rock Narcotic« (Reynolds 1994b: 28-32). In connection to Tortoise he used »post-rock« in his review article of their 1996 album Millions Now Living Will Never Die (Reynolds 1996). Reynolds describes music he labels as ›post-rock‹ where bands use guitars »in [non-rock] ways« to manipulate »timbre and texture rather than riff[s]« and »augment rock's basic guitar-bass-drums lineup with digital technology such as samplers and sequencers« (Reynolds 2017: 509). Most members of Tortoise act as multi-instrumentalists that play different combinations of instruments at different times. As Jeanette Leech (2017: 16) has stated, a typical Tortoise stage setup can feature vibraphones, marimbas, two drum sets, and a multitude of synthesizers. With regards to the instrumental setup Leech draws up parallels with progressive rock of the 1970s but points to one decisive difference within the genres: »for Tortoise, the range of instrumentation [is] about creating mood, not showboating« (ibid.).

From the History of “Popular Music”, Sinkó Dániel, Table of Contents

14 Outstanding Years from the History of “Popular Music”. Sinkó Dániel.

Table of Contents: Foreword; 1979 - Pink Floyd, The Wall; 1980 - Genesis, Duke; 1981 - Szabó Gábor, Femme Fatale; 1981 - King Crimson, Discipline; 1982 - Miles Davis, We want Miles; 1983 - Yes, 90125; 1983 - Manfred Mann Earth Band, Somewhere in Africa; 1984 - Pat Metheny, First circle; 1985 - Dire Straits, Brothers in Arms; 1986 - Peter Gabriel, So; 1986 - Paul Simon, Graceland; 1987 - U2, The Joshua tree; 1987 - Sting, Nothing Like the sun; 1988 - Pink Floyd, Delicate Sound of thunder; 1989 - Jeff Beck, Guitar shop; 1990 - Phish, Lawn Boy; 1991 - U2, Achtung Baby; 1992 - R.E.M., Automatic for the people; 1992 - Mike Oldfield, Tubular

Newslist Drone Records 31. January 2009

MUSIC for the INNER EXPANSION, EC-STASIS, ELEVATION !

Dear Droners! This NEWSLIST offers you a SELECTION of our mailorder programme, with a clear focus on droney, atmospheric, ambient music. With this list you have the chance to know more about the highlights & interesting newcomers. It's our wish to support this special kind of electronic and experimental music, as we think it's much more than "just music": the "Drone"-genre is a way to work with your own mind, perception, and (un)-consciousness-processes.

DR-90: NOISE DREAMS MACHINA - IN / OUT (Spain; great electro-acoustic drones of high complexity)

DR-91: MOLJEBKA PVLSE - lvde dings (Sweden; mesmerizing magneto-drones from Sweden's drone-star, so dense and impervious)

DR-92: XABEC - Feuerstern (Germany; long planned, finally out: two wonderful new tracks by the prolific German artist, comes in cardboard-box with golden print / lettering!)

DR-93: OVRO - Horizontal / Vertical (Finland; intense subconscious landscapes & surrealistic schizophrenia-drones by this female Finnish artist, the "wondergirl" of Finnish exp. music)

DR-94: ARTEFACTUM - Sub Rosa (Poland; alchemistic beauty-drones, a record filled with sonic magic)

DR-95: INFANT CYCLE - Secret Hidden Message (Canada; long-time active Canadian project with intelligently made hypnotic drone-circles)

SECOND EDITIONS (price € 6.00)

DR-10: TAM QUAM TABULA RASA - Cotidie morimur (Italy; outerworlds brain-wave-music, monotonous and hypnotizing loops & rhythms)

DR-29: AMON - Aura (Italy; haunting & shimmering magique as coming from an ancient culture)

DR-34: TARKATAK - Skärva / Oroa (Germany; atmospheric drones with a special touch from this newcomer from North-Germany)

DR-39: DUAL - Klanik / 4 tH (U.K.; mighty guitar drones & massive sub bass undertones that evoke feelings of total transcendence and