Combining Live Coding and VJing for Live Visual Performance

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications


Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops

KONTAKTE '17: Biennial for Electroacoustic Music and Sound Art, 28 September to 1 October 2017, Akademie der Künste, Berlin. Concerts, sound installations, performances, artist talks, films, workshops. A festival presented by the Studio for Electroacoustic Music of the Akademie der Künste, in collaboration with Deutsche Gesellschaft für Elektroakustische Musik, Berliner Künstlerprogramm des DAAD, Universität der Künste Berlin, Hochschule für Musik Hanns Eisler Berlin, Technische Universität Berlin, Klangzeitort, Helmholtz Zentrum Berlin, Ensemble ascolta, Musik der Jahrhunderte (Stuttgart), Institut für Elektronische Musik und Akustik der Kunstuniversität Graz, Laboratorio Nacional de Música Electroacústica de Cuba, singuhr – projekte, Heroines of Sound, Lebenshilfe Berlin, Deutschlandfunk Kultur and France Culture. Contents: Programme overview; Concerts; Installations; Forum; Exhibition; Workshop; Biographies; Partners; Site plan; Tickets and information. Studio für Elektroakustische Musik der Akademie der Künste, Hanseatenweg 10, 10557 Berlin. Phone: +49 (0) 30 200572236. www.adk.de/sem, www.adk.de/kontakte17, #kontakte17. The two years that have passed since the first edition of KONTAKTE in 2015 have been an eventful time for the Studio for Electroacoustic Music. In mid-2015 the studio received a generous in-kind donation of decommissioned studio equipment from Deutsche Telekom, which, following the necessary planning and maintenance work, has opened up new production possibilities since 2016.

An AI Collaborator for Gamified Live Coding Music Performances

Autopia: An AI Collaborator for Gamified Live Coding Music Performances. Norah Lorway, Matthew Jarvis, Arthur Wilson, Edward J. Powley, and John Speakman. Provided by Falmouth University Research Repository (FURR). Abstract. Live coding is "the activity of writing (parts of) a program while it runs" [20]. One significant application of live coding is in algorithmic music, where the performer modifies the code generating the music in a live context. Utopia is a software tool for collaborative live coding performances, allowing several performers (each with their own laptop producing its own sound) to communicate and share code during a performance. We propose an AI bot, Autopia, which can participate in such performances, communicating with human performers through Utopia. This form of human-AI collaboration allows us to explore the implications of computational creativity from the perspective of live coding. 1 Background. [...] regards to this dynamic between the performer and audience, where the level of risk involved in the performance is made explicit. However, in the context of the system proposed in this paper, we are more concerned with the effect that this has on the performer themselves. Any performer at an algorave must be prepared to share their code publicly, which inherently encourages a mindset of collaboration and communal learning with live coders. 1.2 Collaborative live coding. Collaborative live coding takes its roots from laptop orchestra/ensemble such as the Princeton Laptop Orchestra (PLOrk), an ensemble of computer-based instruments formed at Princeton University [19].

Special Festivals Agenda: Free / July - August - September 2017 / Special Summer Issue

WWW.TECKYO.COM. Jr RODRIGUEZ: photo by Felicia Malecha. Interview: Jr RODRIGUEZ. CITIZEN KAIN, 20SYL, DOLMA REC. Special festivals agenda. Free / July - August - September 2017 / Special summer issue. Editorial. For this special summer 2017 edition, I am not going to write a classic editorial. Instead, I want to pay tribute to Kaosspat, a driving force from the very first hour behind the crazy project that is Teckyo, who left us last December. For the first time in almost 20 years, I cannot count on his invaluable help. I am alone in the face of this absence, and I remember his good humour, his unfailing support, his wit and his very particular madness. When, in 1999, Lucie Association proposed that we publish a fanzine specialising in electronic music, he wanted to take up the challenge without hesitation. He gave of himself unstintingly, spent precious time developing the magazine, and when various difficulties had to be faced, he naturally offered to create the site www.teckyo.com. Those who knew him or crossed his path at parties or festivals could see his love of partying and music, and all of them keep a fond memory of him. He liked to say: "the show must go on". So let us keep living and make the most of the good moments that life offers us... In this new issue you will find the interview with Rodriguez Jr, whom we have followed since the start of his rise. Citizen Kain and 20syl agreed to tell us about their respective projects in detail, both being ubiquitous at this summer's parties and festivals.

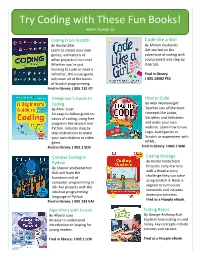

Try Coding with These Fun Books!

www.mywpl.ca

Coding From Scratch, by Rachel Ziter. Learn to create your own games, animations or other projects in no time! Whether you're just learning to code or need a refresher, this visual guide will cover all of the basics of Scratch programming. Find in library: J 005.133 ZIT

Code like a Girl, by Miriam Peskowitz. Get started on the adventure of coding with cool projects and step by step tips. Find in library: J 005.13082 PES

How to Code, by Max Wainewright. Teaches you all the basic concepts like Loops, Variables and Selections, and how to make your own website. Learn how to use Lego, build games in Scratch or experiment with HTML. Find in library: J 000.1 WAI

A Beginner's Guide to Coding, by Marc Scott. An easy-to-follow guide to the basics of coding, using free programs like Scratch and Python. Includes step by step instructions to make your own chatbot or video game. Find in library: J 005.1 SCO

Coding Onstage, by Kristin Fontichiaro. Presents early learners with a theatre story challenge they can solve using Scratch 3. Book is aligned to curriculum standards and includes extension activities. Find as a Hoopla eBook.

Creative Coding in Python, by Sheena Vaidyanathan. Kids will learn the fundamentals of computer programming in 30+ fun projects with the intuitive programming language of Python. Find in library: J 005.133 VAI

Algorithms with Frozen, by Allyssa Loya. An easy to understand introduction to looping for young readers.

Coding Basics, by George Anthony Kulz. Explains how coding is used today; key concepts include ...

Music Tourism: Researching the Impact of the Outlook Festival in the Context of Local Culture

Music Tourism: Researching the Impact of the Outlook Festival in the Context of Local Culture (Glazbeni turizam: istraživanje utjecaja festivala Outlook u kontekstu lokalne kulture). Kariko, Helena. Master's thesis, 2019. Degree grantor: University of Rijeka, Faculty of Humanities and Social Sciences (Sveučilište u Rijeci, Filozofski fakultet), Department of Cultural Studies. Mentor: Dr. sc. Diana Grgurić. Rijeka, 2019. Permanent link: https://urn.nsk.hr/urn:nbn:hr:186:865870. Rights: In copyright. Download date: 2021-10-08. Repository: Repository of the University of Rijeka, Faculty of Humanities and Social Sciences - FHSSRI Repository. Contents: 1. Introduction; 2. Theoretical framework for considering the issue of music tourism; 2.1. On music tourism; 2.2. Music festivals ...

Spaces to Fail In: Negotiating Gender, Community and Technology in Algorave

Spaces to Fail in: Negotiating Gender, Community and Technology in Algorave. Feature Article. Joanne Armitage, University of Leeds (UK). Abstract: Algorave presents itself as a community that is open and accessible to all, yet historically, there has been a lack of diversity on both the stage and dance floor. Through women-only workshops, mentoring and other efforts at widening participation, the number of women performing at algorave events has increased. Grounded in existing research in feminist technology studies, computing education and gender and electronic music, this article unpacks how techno, social and cultural structures have gendered algorave. These ideas will be elucidated through a series of interviews with women participating in the algorave community, to centrally argue that gender significantly impacts an individual's ability to engage and interact within the algorave community. I will also consider how live coding, as an embodied techno-social form, is represented at events and hypothesise as to how it could grow further as an inclusive and feminist practice. Keywords: gender; algorave; embodiment; performance; electronic music. Joanne Armitage lectures in Digital Media at the School of Media and Communication, University of Leeds. Her work covers areas such as physical computing, digital methods and critical computing. Currently, her research focuses on coding practices, gender and embodiment. In 2017 she was awarded the British Science Association's Daphne Oram award for digital innovation. She is a current recipient of Sound and Music's Composer-Curator fund. Outside of academia she regularly leads community workshops in physical computing, live coding and experimental music making. Joanne is an internationally recognised live coder and contributes to projects including laptop ensemble, OFFAL and algo-pop duo ALGOBABEZ.

Travel and Drug Use in Europe: a Short Review 1

Travel and drug use in Europe: a short review. Thematic papers. ISSN 1725-5767. emcdda.europa.eu. Contents: 1. Introduction; 2. Travelling and using drugs (Young people; Problem drug users); 3. Examples of drug-related destinations; 4. Prevalence of drug use among young travellers (Young holidaymakers in Europe; Young backpackers; Young clubbers and partygoers); 5. Risks associated with drug use while travelling (Health risks; Risk related to personal safety; Legal risks; Risks related to injecting drug use; Risks to local communities); 6. Potential for prevention interventions; 7. Conclusions; Acknowledgements; References. 1. Introduction. Recent decades have seen a growth in travel and tourism abroad because of cheap air fares and holiday packages. This has been accompanied by a relaxation of border controls, especially within parts of Europe participating in the Schengen Agreement. As some people may be more inclined to use illicit substances during holiday periods and some may even choose to travel to destinations that are associated with drug use (a phenomenon sometimes referred to as 'drug tourism'), this means that from a European drug policy perspective the issue of drug use and travel has become more important. This Thematic paper examines travellers and drug use, with a focus on Europeans travelling within Europe, although some other relevant destinations are also included.

Neotrance and the Psychedelic Festival

Neotrance and the Psychedelic Festival. Graham St John, University of Regina, University of Queensland. Dancecult: Journal of Electronic Dance Music Culture 1(1) 2009, 35-64. ISSN 1947-5403. ©2009 Dancecult, http://www.dancecult.net/. DOI 10.12801/1947-5403.2009.01.01.03. Abstract: This article explores the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of what I call neotrance apparent within the contemporary trance event, the countercultural inheritance of the "tribal" psytrance festival, and the dramatizing of participants' "ultimate concerns" within the festival framework. An exploration of the psychedelic festival offers insights on ecstatic (self-transcendent), performative (self-expressive) and reflexive (conscious alternative) trajectories within psytrance music culture. I address this dynamic with reference to Portugal's Boom Festival. Keywords: psytrance, neotrance, psychedelic festival, trance states, religion, new spirituality, liminality, neotribe. Figure 1: Main Floor, Boom Festival 2008, Portugal. Photo by jakob kolar, www.jacomedia.net. As electronic dance music cultures (EDMCs) flourish in the global present, their religious and/or spiritual character have become common subjects of exploration for scholars of religion, music and culture. This article addresses the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of the contemporary trance event which I call neotrance, the countercultural inheritance of the "tribal" psytrance festival, and the dramatizing of participants' "ultimate concerns" within the framework of the "visionary" music festival.

The Politicization of the Czech Freetekno Subculture

MASARYK UNIVERSITY, FACULTY OF SOCIAL STUDIES, Department of Sociology. THE POLITICIZATION OF THE CZECH FREETEKNO SUBCULTURE (Politizace české freetekno subkultury). Master's thesis. Jan Segeš. Supervisor: doc. PhDr. Csaba Szaló, Ph.D. UČO: 137526. Field of study: Sociology. Year of matriculation: 2009. Brno, 2011. Declaration: I declare that I wrote the master's thesis "Politizace české freetekno subkultury" independently and using only the sources listed in the bibliography. Brno, 15 May 2011, Jan Segeš. Acknowledgements: I thank doc. PhDr. Csaba Szaló, Ph.D. for his expert supervision of the thesis and for the stimulating comments he gave me. I also thank RaveBoy for his willingness to answer my questions and for access to the archive of documents. Last but not least, I thank my parents and grandparents for their support throughout my studies. ANNOTATION: The presented work focuses on the politicization of the freetekno subculture in the Czech Republic. The politicization is perceived in two dimensions. The first dimension is the explicit politicization, which could be represented as the active political participation. The second dimension is politicization at the level of everyday life. This is uncovered through a re-definition of subculture using post-subcultural theories, in particular Maffesoli's concept of neotribalism and Hakim Bey's theory of the temporary autonomous zone. The thesis seeks to uncover both levels of politicization of the freetekno subculture in the Czech context through an analysis of available documents concerning the teknivals CzechTek and CzaroTek and the street festival DIY Karneval. Length of the thesis: the main text plus footnotes, title page, table of contents, index, annotation and bibliography comes to 138,866 characters. Keywords: freetekno, politicization, temporary autonomous zone, TAZ, neo-tribe, neotribalism, CzechTek, subculture, post-subcultures.

Code-Bending: a New Creative Coding Practice

Code-bending: a new creative coding practice. Ilias Bergstrom, EventLAB, Universitat de Barcelona, Campus de Mundet - Edifici Teatre, Passeig de la Vall d'Hebron 171, 08035 Barcelona, Spain. R. Beau Lotto, Lottolab Studio, University College London, 11-43 Bath Street, London, EC1V 9EL. Keywords: creative coding, programming as art, new media, parameter mapping. Abstract: Creative coding, artistic creation through the medium of program instructions, is constantly gaining traction, a steady stream of new resources emerging to support it. The question of how creative coding is carried out however still deserves increased attention: in what ways may the act of program development be rendered conducive to artistic creativity? As one possible answer to this question, we here present and discuss a new creative coding practice, that of Code-Bending, alongside examples and considerations regarding its application. Introduction: With the creative coding movement, artists have transcended the dependence on using only pre-existing software for creating computer art. In this ever-expanding body of work, the medium is programming itself, where the piece of Software Art is not made using a program; instead it is the program [1]. Its composing material is not paint on paper or collections of pixels, but program instructions. From being an uncommon practice, creative coding is today increasingly gaining traction. Schools of visual art, music, design and architecture teach courses on creatively harnessing the medium. The number of programming environments designed to make creative coding approachable by practitioners without formal software engineering training has expanded, reflecting creative coding's widened user base.

International Computer Music Conference (ICMC/SMC)

Conference Program. 40th International Computer Music Conference joint with the 11th Sound and Music Computing conference. Music Technology Meets Philosophy: From digital echos to virtual ethos. ICMC|SMC|2014, 14-20 September 2014, Athens, Greece. Editor: Kostas Moschos. Published by: The National and Kapodistrian University of Athens, Music Department and Department of Informatics & Telecommunications, Panepistimioupolis, Ilissia, GR-15784, Athens, Greece; The Institute for Research on Music & Acoustics, http://www.iema.gr/, Adrianou 105, GR-10558, Athens, Greece. IEMA ISBN: 978-960-7313-25-6. UOA ISBN: 978-960-466-133-6. © September 2014. All copyrights reserved. Contents: Sponsors; Preface; Summer School ...

64 BELL Woodson Renick Full.Pdf

Affect-Based Aesthetic Evaluation and Development of Abstractions for Rhythm in Live Coding. Renick Bell, Tama Art University. February 27, 2015. Contents: 1 Preface; 2 Summary; 3 Introduction; 3.1 An Outline of this Document; 3.2 Definition of Live Coding; 3.3 How Live Coding Is Done; 3.3.1 Abstractions; 3.3.2 Other Tools; 3.3.3 Analogies to Traditional Music; 3.4 Justification for Live Coding; 3.5 Problem Statement; 4 Background; 4.1 A Brief History of Live Coding; 4.2 Abstractions; 4.2.1 Definition of Abstraction; 4.2.2 Layers of Abstraction; 4.3 Generators; 4.4 Interaction; 4.5 Rhythm in Live Coding; 4.6 Approaches to Evaluating Live Coding; 5 My Works; 5.1 Music Theory; 5.1.1 Rhythm Patterns; 5.1.2 Density; 5.2 Conductive; 5.3 Performances; 6 Discussion; 6.1 A Pragmatic Aesthetic Theory; 6.1.1 Justification for Considering Live Coding from the Viewpoint of Aesthetics; 6.1.2 Dewey's Pragmatic Aesthetics; 6.1.3 Dewey and Valuation; 6.1.4 A Revised Pragmatic Aesthetics; 6.1.5 Affect; 6.1.6 Experience Phase ...