From Live Coding to Virtual Being


Recommended publications

-

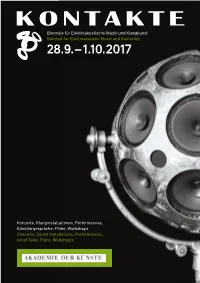

Concerts, Sound Installations, Performances, Artist Talks, Films, Workshops

KONTAKTE '17: Biennial for Electroacoustic Music and Sound Art. Concerts, sound installations, performances, artist talks, films, workshops. 28 September to 1 October 2017, Akademie der Künste, Berlin.

A festival presented by the Studio for Electroacoustic Music of the Akademie der Künste, in collaboration with: Deutsche Gesellschaft für Elektroakustische Musik; Berliner Künstlerprogramm des DAAD; Universität der Künste Berlin; Hochschule für Musik Hanns Eisler Berlin; Technische Universität Berlin; Klangzeitort; Helmholtz Zentrum Berlin; Ensemble ascolta; Musik der Jahrhunderte, Stuttgart; Institut für Elektronische Musik und Akustik der Kunstuniversität Graz; Laboratorio Nacional de Música Electroacústica de Cuba; singuhr – projekte; Heroines of Sound; Lebenshilfe Berlin; Deutschlandfunk Kultur; France Culture.

Contents: Programme overview, Concerts, Installations, Forum, Exhibition, Workshop, Biographies, Partners, Site plan, Tickets and information.

Contact: Studio für Elektroakustische Musik der Akademie der Künste, Hanseatenweg 10, 10557 Berlin. Phone: +49 (0) 30 200572236. www.adk.de/sem. Email: [email protected]. www.adk.de/kontakte17, #kontakte17.

The two years that have passed since the first edition of KONTAKTE in 2015 were an eventful time for the Studio for Electroacoustic Music. In mid-2015 the studio received a generous in-kind donation of decommissioned studio equipment from Deutsche Telekom, which, after the necessary planning and maintenance work, has opened up new production possibilities since 2016.

French Underground Raves of the Nineties. Aesthetic Politics of Affect and Autonomy Jean-Christophe Sevin

Jean-Christophe Sevin. French underground raves of the nineties. Aesthetic politics of affect and autonomy. In Arundhati Virmani (ed.), Political Aesthetics: Culture, Critique and the Everyday, London, Routledge, 2016, pp. 71-86. ISBN 978-0-415-72884-3. HAL Id: halshs-01954321, https://halshs.archives-ouvertes.fr/halshs-01954321, submitted on 13 Dec 2018.

The emergence of techno music (the term commonly used in France for electronic dance music) in the early 1990s is inseparable from rave parties as a form of spatiotemporal deployment. This means that live diffusion through a sound system powerful enough to convey not only the volume but also the full frequency spectrum, including infrabass, is an integral part of the techno experience. In other words, listening on domestic equipment is not a sufficient condition for experiencing this music.

An AI Collaborator for Gamified Live Coding Music Performances

Autopia: An AI Collaborator for Gamified Live Coding Music Performances. Norah Lorway, Matthew Jarvis, Arthur Wilson, Edward J. Powley, and John Speakman. Falmouth University Research Repository (FURR).

Abstract. Live coding is "the activity of writing (parts of) a program while it runs" [20]. One significant application of live coding is in algorithmic music, where the performer modifies the code generating the music in a live context. Utopia is a software tool for collaborative live coding performances, allowing several performers (each with their own laptop producing its own sound) to communicate and share code during a performance. We propose an AI bot, Autopia, which can participate in such performances, communicating with human performers through Utopia. This form of human-AI collaboration allows us to explore the implications of computational creativity from the perspective of live coding.

1 Background

[...] regards to this dynamic between the performer and audience, where the level of risk involved in the performance is made explicit. However, in the context of the system proposed in this paper, we are more concerned with the effect that this has on the performer themselves. Any performer at an algorave must be prepared to share their code publicly, which inherently encourages a mindset of collaboration and communal learning with live coders.

1.2 Collaborative live coding

Collaborative live coding takes its roots from laptop orchestra/ensemble such as the Princeton Laptop Orchestra (PLOrk), an ensemble of computer based instruments formed at Princeton University [19].

Confessions of a Live Coder

Proceedings of the International Computer Music Conference 2011, University of Huddersfield, UK, 31 July - 5 August 2011

CONFESSIONS OF A LIVE CODER. Thor Magnusson, ixi audio & Faculty of Arts and Media, University of Brighton, Grand Parade, BN2 0JY, UK

ABSTRACT

This paper describes the process involved when a live coder decides to learn a new musical programming language of another paradigm. The paper introduces the problems of running comparative experiments, or user studies, within the field of live coding. It suggests that an autoethnographic account of the process can be helpful for understanding the technological conditioning of contemporary musical tools. The author is conducting a larger research project on this theme: the part presented in this paper describes the adoption of a new musical programming environment, Impromptu [35], and how this affects the author's musical practice.

1. INTRODUCTION

[...] upon writing music with programming languages for very specific reasons and those are rarely comparable.

At ICMC 2007, in Copenhagen, I met Andrew Sorensen, the author of Impromptu and member of the aa-cell ensemble that performed at the conference. We discussed how one would explore and analyse the process of learning a new programming environment for music. One of the prominent questions here is how a functional programming language like Impromptu would influence the thinking of a computer musician with background in an object orientated programming language, such as SuperCollider? Being an avid user of SuperCollider, I was intrigued by the perplexing code structure and work patterns demonstrated in the aa-cell performances using Impromptu [36]. I subsequently decided to embark upon studying this environment and perform a reflexive study of the process.

FAUST Tutorial for Functional Programmers

FAUST Tutorial for Functional Programmers. Y. Orlarey, S. Letz, D. Fober, R. Michon. ICFP 2017 / FARM 2017.

What is Faust? A programming language (DSL) to build electronic music instruments.

Some Music Programming Languages: 4CED, Adagio, AML, AMPLE, Antescofo, Arctic, Autoklang, Bang, Canon, CHANT, Chuck, CLCE, CMIX, Cmusic, CMUSIC, Common Lisp Music, Common Music, Common Music Notation, Csound, CyberBand, DARMS, DCMP, DMIX, Elody, EsAC, Euterpea, Extempore, Faust, Flavors Band, Fluxus, FOIL, FORMES, FORMULA, Fugue, Gibber, GROOVE, GUIDO, HARP, Haskore, HMSL, INV, invokator, KERN, Keynote, Kyma, LOCO, LPC, Mars, MASC, Max, MCL, MidiLisp, MidiLogo, MODE, MOM, Moxc, MSX, MUS10, MUS8, MUSCMP, MuseData, MusES, MUSIC 10, MUSIC 11, MUSIC 360, MUSIC 4B, MUSIC 4BF, MUSIC 4F, MUSIC 6, MUSIC III/IV/V, Music1000, MUSIC7, MusicLogo, Musictex, MusicXML, MUSIGOL, Musixtex, NIFF, NOTELIST, Nyquist, OPAL, OpenMusic, Organum1, Outperform, Overtone, Patchwork, PE, PILE, Pla, PLACOMP, PLAY1, PLAY2, PMX, POCO, POD6, POD7, PROD, Puredata, PWGL, Ravel, SALIERI, SCORE, ScoreFile, SCRIPT, SIREN, SMDL, SMOKE, SSP, SSSP, ST, Supercollider, Symbolic Composer, Tidal.

Brief Overview to Faust. Faust offers end-users a high-level alternative to C to develop audio applications for a large variety of platforms. The role of the Faust compiler is to synthesize the most efficient implementations for the target language (C, C++, LLVM, Javascript, etc.). Faust is used on stage for concerts and artistic productions, for education and research, and for open-source projects and commercial applications.

What Is Faust Used For?

Artistic Applications: Sonik Cube (Trafik/Orlarey), Smartfaust (Gracia), etc.

Open-Source projects: Guitarix (Hermann Meyer).

WebAudio Applications: YC20 Emulator. Thanks to the HTML5/WebAudio API and Asm.js it is now possible to run synthesizers and audio effects from a simple web page.

Sound Spatialization: Ambitools (Pierre Lecomte, CNAM; Faust Award 2016), 3-D sound spatialization using Ambisonic techniques.

New Releases: Click on the Cover for the Single (If Available)

LEONARD COHEN – You Want It Darker (CD/LP). The title track of 'You Want It Darker' was released online a few weeks ago and I was sold immediately. I already thought the previous record, 'Popular Problems', was fantastic, but 'You Want It Darker' surpasses it on every front. It is a dark record with many references to death, for example, which is not strange in itself given his age (82). A beautiful and moving masterpiece from this living legend! It is getting crowded at the top of the year's top ten albums.

KENSINGTON – Control (CD/LP). 'Control' is the new album by Kensington, the four-piece from Utrecht that has grown in recent years into one of the biggest bands in the country. They have done so not only through the successful album 'Rivals' but also through their live reputation, with which they have conquered festival and concert stages (and no fewer than four times our shop!). The first single from the album 'Control' is 'Do I Ever'. The album is available in a limited edition as a CD digipack including a 20-page booklet, and on vinyl (the first pressing is numbered and on coloured vinyl).

KORN – Serenity of Suffering (CD/CD-Deluxe/LP/LP-Deluxe). 'Serenity of Suffering' is the 12th studio album by the nu-metal band Korn. According to guitarist Brian Welch, this record is a good deal heavier and more intense than anything anyone has heard in a long time. The album is the follow-up to 'The Paradigm Shift' from 2013 and was produced by Grammy Award-winning producer Nick Raskulinecz (Foo Fighters, Deftones, Mastodon). The deluxe version contains two extra tracks.

Spaces to Fail In: Negotiating Gender, Community and Technology in Algorave

Feature Article. Joanne Armitage, University of Leeds (UK).

Abstract: Algorave presents itself as a community that is open and accessible to all, yet historically, there has been a lack of diversity on both the stage and dance floor. Through women-only workshops, mentoring and other efforts at widening participation, the number of women performing at algorave events has increased. Grounded in existing research in feminist technology studies, computing education and gender and electronic music, this article unpacks how techno, social and cultural structures have gendered algorave. These ideas will be elucidated through a series of interviews with women participating in the algorave community, to centrally argue that gender significantly impacts an individual's ability to engage and interact within the algorave community. I will also consider how live coding, as an embodied techno-social form, is represented at events and hypothesise as to how it could grow further as an inclusive and feminist practice.

Keywords: gender; algorave; embodiment; performance; electronic music

Joanne Armitage lectures in Digital Media at the School of Media and Communication, University of Leeds. Her work covers areas such as physical computing, digital methods and critical computing. Currently, her research focuses on coding practices, gender and embodiment. In 2017 she was awarded the British Science Association's Daphne Oram award for digital innovation. She is a current recipient of Sound and Music's Composer-Curator fund. Outside of academia she regularly leads community workshops in physical computing, live coding and experimental music making. Joanne is an internationally recognised live coder and contributes to projects including laptop ensemble, OFFAL and algo-pop duo ALGOBABEZ.

Download the Conference Abstracts Here

THURSDAY 8 SEPTEMBER 2016: SESSIONS 1-4

Maciej Fortuna and Krzysztof Dys (Academy of Music, Poznań)

BIOGRAPHIES

Maciej Fortuna is a Polish trumpeter, composer and music producer. He has a PhD degree in Musical Arts and an MA degree in Law and actively pursues his artistic career. In his work, he strives to create his own language of musical expression and expand the sound palette of his instrument. He enjoys experimenting with combining different art forms. An important element of his creative work consists in the use of live electronics. He creates and directs multimedia concerts and video productions.

Krzysztof Dys is a Polish jazz pianist. He has a PhD degree in Musical Arts. So far he has collaborated with the great Polish vibraphonist Jerzy Milian, with the famous saxophonist Mikołaj Trzaska, and with the clarinettist Wacław Zimpel. Dys has worked on a regular basis with the young, Poznań-based trumpeter Maciej Fortuna. Their album Tropy has been well received by both audiences and critics. Dys also plays in the Maciej Fortuna Quartet, with Jakub Mielcarek, double bass, and Przemysław Jarosz, drums. In 2013 the group toured outside Poland with the project 'Jazz from Poland', which aimed to present the work of unappreciated or forgotten Polish jazz composers. The main inspiration for Krzysztof Dys is the work of the Russian composers Alexander Nikolayevich Scriabin and Sergei Sergeyevich Prokofiev, American artists like Bill Evans and Miles Davis, and, last but not least, the great Polish composer Grażyna Bacewicz.

TITLE

Classical Inspirations in Jazz Compositions Based on Selected Works by Roman Maciejewski

ABSTRACT

A few years prior to commencing a PhD programme, I started my own research on the possibilities of implementing electronic sound modifiers into my jazz repertoire.

Transforming Wikipedia Into a Large-Scale Fine-Grained Entity Type Corpus

Abbas Ghaddar, Philippe Langlais. RALI-DIRO, Montreal, Canada. [email protected], [email protected]

Abstract: This paper presents WiFiNE, an English corpus annotated with fine-grained entity types. We propose simple but effective heuristics we applied to English Wikipedia to build a large, high quality, annotated corpus. We evaluate the impact of our corpus on the fine-grained entity typing system of Shimaoka et al. (2017), with 2 manually annotated benchmarks, FIGER (GOLD) and ONTONOTES. We report state-of-the-art performances, with a gain of 0.8 micro F1 score on the former dataset and a gain of 2.7 macro F1 score on the latter one, despite the fact that we employ the same quantity of training data used in previous works. We make our corpus available as a resource for future works.

Keywords: Annotated Corpus, Fine-Grained Entity Type, Wikipedia

1. Introduction

Entity typing is the task of classifying textual mentions into their respective types. While the standard Named-Entity Recognition (NER) task focuses on a small set of types (e.g. 4 classes defined by the CONLL shared task-2003 (Tjong Kim Sang and De Meulder, 2003)), fine-grained tagging deals with much larger type sets (e.g. [...]

[...] considering coreference mentions of anchored texts as candidate annotations, and by exploiting the out-link structure of Wikipedia. We propose an easy-first annotation pipeline described in Section 3 which happens to reduce noise. Second, we define simple yet efficient heuristics in order to prune the set of candidate types of each entity mention [...]

Farmers Market Starts 8th Season at GV

Grand Valley State University, "Lanthorn, vol. 48, no. 61, June 2, 2014" (2014). Volume 48, July 1, 2013 - June 2, 2014. 60. ScholarWorks@GVSU. https://scholarworks.gvsu.edu/lanthorn_vol48/60

MONDAY, JUNE 2. WWW.LANTHORN.COM

Dilanni accepts coaching position at Big Ten university (SPORTS, A7). Two former Lakers battle for starting position in NFL (SPORTS, A7).

[Graphic: directory of the building's offices: Pew Faculty Teaching & Learning Center; Center for Scholarly and Creative Excellence; covered parking area; Human Resources, Accounting; Student Accounts, Payroll offices; faculty government and conference rooms; Accounting, Institutional Marketing; conference rooms and storage rooms; Office of the President, Provost's Office; various administrative offices and conference rooms.]

BY AUDRA GAMBLE, [email protected]

Over the 2013-2014 academic year, Grand Valley State University stu [...]

The renovations for the building will be completed within the $22 million budget that was originally allotted for the project. [...]

[...] Zumberge Hall." The concentration of offices and resources is beneficial for more than just [...]

Neotrance and the Psychedelic Festival

Graham St John, University of Regina, University of Queensland. Dancecult: Journal of Electronic Dance Music Culture 1(1) 2009, 35-64. ISSN 1947-5403. ©2009 Dancecult. http://www.dancecult.net/. DOI 10.12801/1947-5403.2009.01.01.03

Abstract: This article explores the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of what I call neotrance apparent within the contemporary trance event, the countercultural inheritance of the "tribal" psytrance festival, and the dramatizing of participants' "ultimate concerns" within the festival framework. An exploration of the psychedelic festival offers insights on ecstatic (self-transcendent), performative (self-expressive) and reflexive (conscious alternative) trajectories within psytrance music culture. I address this dynamic with reference to Portugal's Boom Festival.

Keywords: psytrance, neotrance, psychedelic festival, trance states, religion, new spirituality, liminality, neotribe

Figure 1: Main Floor, Boom Festival 2008, Portugal. Photo by jakob kolar, www.jacomedia.net

As electronic dance music cultures (EDMCs) flourish in the global present, their religious and/or spiritual character have become common subjects of exploration for scholars of religion, music and culture. This article addresses the religio-spiritual characteristics of psytrance (psychedelic trance), attending specifically to the characteristics of the contemporary trance event which I call neotrance, the countercultural inheritance of the "tribal" psytrance festival, and the dramatizing of participants' "ultimate concerns" within the framework of the "visionary" music festival.


EF 2017 Curated Event Final[2]

Electric Forest Reveals 2017 Curated Event Series Details. June 22–June 25 and June 29–July 2.

EF2017 will include collaborations with BASSRUSH, THE BIRDHOUSE, Crew Love, Foreign Family Collective, Future Classic, Her Forest, HI-LO presents Heldeep, No Xcuses, Night Bass, and Turntablist. Tickets on sale now at ElectricForestFestival.com

Rothbury, Mich. – Today, Electric Forest (EF) announced details on its highly anticipated 2017 Curated Event Series. The seventh annual music and camping festival in Rothbury, Michigan expands to two distinct weekends in 2017, on June 22–June 25 and June 29–July 2, 2017. Each year Electric Forest collaborates with fellow musical tastemakers from around the globe to take part in curating the lineup and vibe of a distinct stage each night. Creating unique musical experiences within the festival itself, Electric Forest will join forces with noteworthy partners BASSRUSH, THE BIRDHOUSE, Crew Love, Foreign Family Collective, Future Classic, Her Forest, HI-LO presents Heldeep, No Xcuses, Night Bass and Turntablists, delivering even more musical depth and diversity to Electric Forest's already stellar lineup. The complete schedule and details for EF's 2017 Curated Event Series are included below.

The First Weekend of EF2017 is SOLD OUT. GA Weekend Passes and VIP options for the Second Weekend are still available at www.electricforestfestival.com/tickets.

Electric Forest's 2017 Curated Event Series details are as follows:

First Weekend

THE BIRDHOUSE, featuring Claude VonStroke, Maya Jane Coles, Walker & Royce, Machinedrum, Jimmy Edgar, Sonny Fodera, Golf Clap (Thursday, June 22 on Tripolee). In addition to featuring select artists Claude VonStroke has taken under his wing, BIRDHOUSE stages boast contemporaries, friends and no shortage of surprise visitors.