Methods of Monte Carlo Simulation

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Conditional Generalizations of Strong Laws Which Conclude the Partial Sums Converge Almost Surely

The Annals of Probability 1982, Vol. 10, No. 3, 828-830. CONDITIONAL GENERALIZATIONS OF STRONG LAWS WHICH CONCLUDE THE PARTIAL SUMS CONVERGE ALMOST SURELY. By T. P. Hill, Georgia Institute of Technology.

Suppose that for every independent sequence of random variables satisfying some hypothesis condition H, it follows that the partial sums converge almost surely. Then it is shown that for every arbitrarily-dependent sequence of random variables, the partial sums converge almost surely on the event where the conditional distributions (given the past) satisfy precisely the same condition H. Thus many strong laws for independent sequences may be immediately generalized into conditional results for arbitrarily-dependent sequences.

1. Introduction. If every sequence of independent random variables having property A has property B almost surely, does every arbitrarily-dependent sequence of random variables have property B almost surely on the set where the conditional distributions have property A? Not in general, but comparisons of the conditional Borel-Cantelli Lemmas, the conditional three-series theorem, and many martingale results with their independent counterparts suggest that the answer is affirmative in fairly general situations. The purpose of this note is to prove Theorem 1, which states, in part, that if "property B" is "the partial sums converge," then the answer is always affirmative, regardless of "property A." Thus many strong laws for independent sequences (even laws yet undiscovered) may be immediately generalized into conditional results for arbitrarily-dependent sequences.

2. Main Theorem. In this note, $Y = (Y_1, Y_2, \ldots)$ is a sequence of random variables on a probability triple $(\Omega, \mathcal{A}, P)$, $S_n = Y_1 + Y_2 + \cdots$ -

Kalman and Particle Filtering

Abstract: The Kalman and Particle filters are algorithms that recursively update an estimate of the state and find the innovations driving a stochastic process given a sequence of observations. The Kalman filter accomplishes this goal by linear projections, while the Particle filter does so by a sequential Monte Carlo method. With the state estimates, we can forecast and smooth the stochastic process. With the innovations, we can estimate the parameters of the model. The article discusses how to set a dynamic model in a state-space form, derives the Kalman and Particle filters, and explains how to use them for estimation.

Kalman and Particle Filtering. The Kalman and Particle filters are algorithms that recursively update an estimate of the state and find the innovations driving a stochastic process given a sequence of observations. The Kalman filter accomplishes this goal by linear projections, while the Particle filter does so by a sequential Monte Carlo method. Since both filters start with a state-space representation of the stochastic processes of interest, section 1 presents the state-space form of a dynamic model. Then, section 2 introduces the Kalman filter and section 3 develops the Particle filter. For extended expositions of this material, see Doucet, de Freitas, and Gordon (2001), Durbin and Koopman (2001), and Ljungqvist and Sargent (2004).

1. The state-space representation of a dynamic model. A large class of dynamic models can be represented by a state-space form:

$$X_{t+1} = \varphi(X_t, W_{t+1}; \gamma) \qquad (1)$$
$$Y_t = g(X_t, V_t; \gamma) \qquad (2)$$

This representation handles a stochastic process by finding three objects: a vector that describes the position of the system (a state, $X_t \in X \subset \mathbb{R}^l$) and two functions, one mapping the state today into the state tomorrow (the transition equation, (1)) and one mapping the state into observables, $Y_t$ (the measurement equation, (2)). -
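
The state-space form in (1) and (2) can be illustrated by simulating one path of states and observables. The sketch below is only an illustration under stated assumptions: the function name `simulate_state_space`, the standard normal shocks for $W_{t+1}$ and $V_t$, and the linear example functions are hypothetical choices that do not come from the article, and the parameter vector $\gamma$ is simply folded into the two functions.

```python
import random

def simulate_state_space(phi, g, x0, T, seed=0):
    """Simulate T periods of X_{t+1} = phi(X_t, W_{t+1}) and Y_t = g(X_t, V_t).

    Assumption: W and V are independent standard normal shocks.
    """
    rng = random.Random(seed)
    states, observations = [], []
    x = x0
    for _ in range(T):
        v = rng.gauss(0.0, 1.0)          # measurement shock V_t
        states.append(x)
        observations.append(g(x, v))     # measurement equation (2)
        w = rng.gauss(0.0, 1.0)          # state shock W_{t+1}
        x = phi(x, w)                    # transition equation (1)
    return states, observations

# Hypothetical linear-Gaussian instance; the coefficients play the role of gamma.
phi = lambda x, w: 0.9 * x + 0.5 * w
g = lambda x, v: x + 0.2 * v

states, observations = simulate_state_space(phi, g, x0=0.0, T=5)
for x, y in zip(states, observations):
    print(f"state={x:+.3f}  observation={y:+.3f}")
```

A filter would receive only `observations` and try to recover `states`; keeping both in the simulation makes it possible to judge a filter's estimates against the truth.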

A Fourier-Wavelet Monte Carlo Method for Fractal Random Fields

JOURNAL OF COMPUTATIONAL PHYSICS 132, 384-408 (1997), ARTICLE NO. CP965647. A Fourier-Wavelet Monte Carlo Method for Fractal Random Fields. Frank W. Elliott Jr., David J. Horntrop, and Andrew J. Majda, Courant Institute of Mathematical Sciences, 251 Mercer Street, New York, New York 10012. Received August 2, 1996; revised December 23, 1996.

A new hierarchical method for the Monte Carlo simulation of random fields called the Fourier-wavelet method is developed and applied to isotropic Gaussian random fields with power law spectral density functions. This technique is based upon the orthogonal decomposition of the Fourier stochastic integral representation of the field using wavelets. The Meyer wavelet is used here because its rapid decay properties allow for a very compact representation of the field. The Fourier-wavelet method is shown to be straightforward to implement, given the nature of the necessary precomputations and the run-time calculations, and yields comparable results with scaling behavior over as many decades as the physical space multiwavelet methods developed recently by two of the authors.

$$\langle [v(x) - v(y)]^2 \rangle = C_H |x - y|^{2H}, \qquad (1.1)$$

where $0 < H < 1$ is the Hurst exponent and $\langle \cdot \rangle$ denotes the expected value. Here we develop a new Monte Carlo method based upon a wavelet expansion of the Fourier space representation of the fractal random fields in (1.1). This method is capable of generating a velocity field with the Kolmogoroff spectrum ($H = 1/3$ in (1.1)) over many (10 to 15) decades of scaling behavior comparable to the physical space multi- -

Laws of Total Expectation and Total Variance

Laws of Total Expectation and Total Variance

Definition of conditional density. Assume an arbitrary random variable $X$ with density $f_X$. Take an event $A$ with $P(A) > 0$. Then the conditional density $f_{X|A}$ is defined as follows:

$$f_{X|A}(x) = \begin{cases} \dfrac{f_X(x)}{P(A)}, & x \in A \\ 0, & x \notin A \end{cases}$$

Note that $f_{X|A}$ is supported only in $A$.

Definition of conditional expectation conditioned on an event.

$$E(h(X) \mid A) = \int_A h(x)\, f_{X|A}(x)\, dx = \frac{1}{P(A)} \int_A h(x)\, f_X(x)\, dx$$

Example. For the random variable $X$ with density function

$$f(x) = \begin{cases} \frac{1}{64} x^3, & 0 < x < 4 \\ 0, & \text{otherwise} \end{cases}$$

calculate $E(X^2 \mid X \ge 1)$.

Solution. Step 1.

$$P(X \ge 1) = \int_1^4 \frac{1}{64} x^3\, dx = \frac{1}{256} \left[ x^4 \right]_{x=1}^{x=4} = \frac{255}{256}$$

Step 2.

$$E(X^2 \mid X \ge 1) = \frac{1}{P(X \ge 1)} \int_{\{x \ge 1\}} x^2 f(x)\, dx = \frac{256}{255} \int_1^4 \frac{1}{64} x^5\, dx = \frac{256}{255} \cdot \frac{1}{64} \cdot \frac{1}{6} \left[ x^6 \right]_{x=1}^{x=4} = \frac{256}{255} \cdot \frac{4095}{384} = \frac{182}{17}$$

Definition of conditional variance conditioned on an event.

$$\mathrm{Var}(X \mid A) = E(X^2 \mid A) - E(X \mid A)^2$$

Example. For the previous example, calculate the conditional variance $\mathrm{Var}(X \mid X \ge 1)$.

Solution. We already calculated $E(X^2 \mid X \ge 1)$. We only need to calculate $E(X \mid X \ge 1)$.

$$E(X \mid X \ge 1) = \frac{1}{P(X \ge 1)} \int_{\{x \ge 1\}} x f(x)\, dx = \frac{256}{255} \int_1^4 \frac{1}{64} x^4\, dx = \frac{256}{255} \cdot \frac{1}{64} \cdot \frac{1}{5} \left[ x^5 \right]_{x=1}^{x=4} = \frac{256}{255} \cdot \frac{1023}{320} = \frac{1364}{425}$$

Finally:

$$\mathrm{Var}(X \mid X \ge 1) = E(X^2 \mid X \ge 1) - E(X \mid X \ge 1)^2 = \frac{182}{17} - \left( \frac{1364}{425} \right)^2 = \frac{73254}{180625}$$

Definition of conditional expectation conditioned on a random variable. -
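
The conditional moments in this example can be checked with exact rational arithmetic. The sketch below is a verification aid rather than part of the original notes: the helper name `partial_moment` is hypothetical, and it relies on the closed-form antiderivative of $x^k \cdot x^3/64$, evaluated at both limits $x = 4$ and $x = 1$.

```python
from fractions import Fraction

def partial_moment(k, lo=1, hi=4):
    """Exact value of the integral of x**k * (x**3 / 64) over [lo, hi].

    The antiderivative is x**(k + 4) / (64 * (k + 4)).
    """
    p = k + 4
    return Fraction(hi**p - lo**p, 64 * p)

prob_a = partial_moment(0)                 # P(X >= 1)
mean_given_a = partial_moment(1) / prob_a  # E(X | X >= 1)
second_given_a = partial_moment(2) / prob_a  # E(X^2 | X >= 1)
var_given_a = second_given_a - mean_given_a**2  # Var(X | X >= 1)

print("P(X >= 1)        =", prob_a)
print("E(X | X >= 1)    =", mean_given_a)
print("E(X^2 | X >= 1)  =", second_given_a)
print("Var(X | X >= 1)  =", var_given_a, "~", float(var_given_a))
```

Using `Fraction` avoids floating-point rounding, so the printed values can be compared digit for digit with a hand calculation.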

Probability Cheatsheet V2.0 Thinking Conditionally Law of Total Probability (LOTP)

Probability Cheatsheet v2.0. Compiled by William Chen (http://wzchen.com) and Joe Blitzstein, with contributions from Sebastian Chiu, Yuan Jiang, Yuqi Hou, and Jessy Hwang. Material based on Joe Blitzstein's (@stat110) lectures (http://stat110.net) and Blitzstein/Hwang's Introduction to Probability textbook (http://bit.ly/introprobability). Licensed under CC BY-NC-SA 4.0. Please share comments, suggestions, and errors at http://github.com/wzchen/probability_cheatsheet. Last Updated September 4, 2015.

Thinking Conditionally

Independence

Independent Events. A and B are independent if knowing whether A occurred gives no information about whether B occurred. More formally, A and B (which have nonzero probability) are independent if and only if one of the following equivalent statements holds:

$$P(A \cap B) = P(A)P(B)$$
$$P(A \mid B) = P(A)$$
$$P(B \mid A) = P(B)$$

Conditional Independence. A and B are conditionally independent given C if $P(A \cap B \mid C) = P(A \mid C)P(B \mid C)$.

Law of Total Probability (LOTP)

Let $B_1, B_2, B_3, \ldots, B_n$ be a partition of the sample space (i.e., they are disjoint and their union is the entire sample space).

$$P(A) = P(A \mid B_1)P(B_1) + P(A \mid B_2)P(B_2) + \cdots + P(A \mid B_n)P(B_n)$$
$$P(A) = P(A \cap B_1) + P(A \cap B_2) + \cdots + P(A \cap B_n)$$

For LOTP with extra conditioning, just add in another event C!

$$P(A \mid C) = P(A \mid B_1, C)P(B_1 \mid C) + \cdots + P(A \mid B_n, C)P(B_n \mid C)$$
$$P(A \mid C) = P(A \cap B_1 \mid C) + P(A \cap B_2 \mid C) + \cdots + P(A \cap B_n \mid C)$$

Special case of LOTP with $B$ and $B^c$ as partition:

$$P(A) = P(A \mid B)P(B) + P(A \mid B^c)P(B^c)$$ -
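
As a small numerical illustration of the Law of Total Probability, the sketch below sums $P(A \mid B_i)P(B_i)$ over a partition. The function name `total_probability` and all of the probabilities are made-up values chosen for the example; none of them come from the cheatsheet.

```python
from fractions import Fraction

def total_probability(prior, likelihood):
    """Return P(A) = sum_i P(A | B_i) * P(B_i) for a partition B_1, ..., B_n.

    prior[i] is P(B_i) and likelihood[i] is P(A | B_i).
    """
    if len(prior) != len(likelihood):
        raise ValueError("prior and likelihood must have the same length")
    if sum(prior) != 1:
        raise ValueError("P(B_i) must sum to 1 for a partition")
    return sum(l * p for l, p in zip(likelihood, prior))

# Hypothetical three-set partition.
prior = [Fraction(1, 2), Fraction(3, 10), Fraction(1, 5)]        # P(B_i)
likelihood = [Fraction(1, 10), Fraction(1, 2), Fraction(9, 10)]  # P(A | B_i)
p_a = total_probability(prior, likelihood)
print("P(A) =", p_a)

# Special case with B and its complement as the partition.
p_a_two = total_probability([Fraction(1, 4), Fraction(3, 4)],
                            [Fraction(1, 2), Fraction(1, 3)])
print("P(A) with {B, B^c} =", p_a_two)
```

The same function covers the version with extra conditioning on C if every input is replaced by its counterpart conditioned on C.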

Probability Cheatsheet

Probability Cheatsheet v1.1.1. Compiled by William Chen (http://wzchen.com) with contributions from Sebastian Chiu, Yuan Jiang, Yuqi Hou, and Jessy Hwang. Material based off of Joe Blitzstein's (@stat110) lectures (http://stat110.net) and Blitzstein/Hwang's Intro to Probability textbook (http://bit.ly/introprobability). Licensed under CC BY-NC-SA 4.0. Please share comments, suggestions, and errors at http://github.com/wzchen/probability_cheatsheet. Last Updated March 20, 2015.

Simpson's Paradox

$$P(A \mid B, C) < P(A \mid B^c, C) \text{ and } P(A \mid B, C^c) < P(A \mid B^c, C^c)$$

yet still, $P(A \mid B) > P(A \mid B^c)$.

Bayes' Rule and Law of Total Probability

Law of Total Probability with partitioning set $B_1, B_2, B_3, \ldots, B_n$ and with extra conditioning (just add C!)

$$P(A) = P(A \mid B_1)P(B_1) + P(A \mid B_2)P(B_2) + \cdots + P(A \mid B_n)P(B_n)$$
$$P(A) = P(A \cap B_1) + P(A \cap B_2) + \cdots + P(A \cap B_n)$$
$$P(A \mid C) = P(A \mid B_1, C)P(B_1 \mid C) + \cdots + P(A \mid B_n, C)P(B_n \mid C)$$
$$P(A \mid C) = P(A \cap B_1 \mid C) + P(A \cap B_2 \mid C) + \cdots + P(A \cap B_n \mid C)$$

Expected Value, Linearity, and Symmetry

Expected Value (aka mean, expectation, or average) can be thought of as the "weighted average" of the possible outcomes of our random variable. Mathematically, if $x_1, x_2, x_3, \ldots$ are all of the possible values that $X$ can take, the expected value of $X$ can be calculated as follows:

$$E(X) = \sum_i x_i P(X = x_i)$$

Note that for any $X$ and $Y$, with $a$ and $b$ scaling coefficients and $c$ a constant, the following property of Linearity of Expectation holds:

$$E(aX + bY + c) = aE(X) + bE(Y) + c$$

If two Random Variables have the same distribution, even when they are dependent, by the property of Symmetry their expected values are equal.

Counting -

Fourier, Wavelet and Monte Carlo Methods in Computational Finance

Fourier, Wavelet and Monte Carlo Methods in Computational Finance. Kees Oosterlee (CWI, Amsterdam; Delft University of Technology, the Netherlands). AANMPDE-9-16, 7/7/2016. Joint work with Fang Fang, Marjon Ruijter, Luis Ortiz, Shashi Jain, Alvaro Leitao, Fei Cong, Qian Feng.

Agenda
Derivatives pricing, Feynman-Kac Theorem
Fourier methods
Basics of COS method
Basics of SWIFT method
Options with early-exercise features
COS method for Bermudan options
Monte Carlo method
BSDEs, BCOS method (very briefly)

Feynman-Kac Theorem

The linear partial differential equation:

$$\frac{\partial v(t, x)}{\partial t} + \mathcal{L}v(t, x) + g(t, x) = 0, \qquad v(T, x) = h(x),$$

with operator

$$\mathcal{L}v(t, x) = \mu(x) Dv(t, x) + \frac{1}{2} \sigma^2(x) D^2 v(t, x).$$

Feynman-Kac theorem:

$$v(t, x) = E\left[ \int_t^T g(s, X_s)\, ds + h(X_T) \right],$$

where $X_s$ is the solution to the FSDE

$$dX_s = \mu(X_s)\, ds + \sigma(X_s)\, d\omega_s, \qquad X_t = x.$$ -

Efficient Monte Carlo Methods for Estimating Failure Probabilities

Reliability Engineering and System Safety 165 (2017) 376–394. Efficient Monte Carlo methods for estimating failure probabilities. Andres Alban (a), Hardik A. Darji (a, b), Atsuki Imamura (c), Marvin K. Nakayama (c, corresponding author). (a) Mathematical Sciences Dept., New Jersey Institute of Technology, Newark, NJ 07102, USA. (b) Mechanical Engineering Dept., New Jersey Institute of Technology, Newark, NJ 07102, USA. (c) Computer Science Dept., New Jersey Institute of Technology, Newark, NJ 07102, USA.

Keywords: Probabilistic safety assessment; Risk analysis; Structural reliability; Uncertainty; Monte Carlo; Variance reduction; Confidence intervals; Nuclear regulation; Risk-informed safety-margin characterization.

Abstract: We develop efficient Monte Carlo methods for estimating the failure probability of a system. An example of the problem comes from an approach for probabilistic safety assessment of nuclear power plants known as risk-informed safety-margin characterization, but it also arises in other contexts, e.g., structural reliability, catastrophe modeling, and finance. We estimate the failure probability using different combinations of simulation methodologies, including stratified sampling (SS), (replicated) Latin hypercube sampling (LHS), and conditional Monte Carlo (CMC). We prove theorems establishing that the combination SS+LHS (resp., SS+CMC+LHS) has smaller asymptotic variance than SS (resp., SS+LHS). We also devise asymptotically valid (as the overall sample size grows large) upper confidence bounds for the failure probability for the methods considered. The confidence bounds may be employed to perform an asymptotically valid probabilistic safety assessment. We present numerical results demonstrating that the combination SS+CMC+LHS can result in substantial variance reductions compared to stratified sampling alone. -

(Introduction to Probability at an Advanced Level) - All Lecture Notes

Fall 2018 Statistics 201A (Introduction to Probability at an advanced level) - All Lecture Notes. Aditya Guntuboyina. August 15, 2020.

Contents
0.1 Sample spaces, Events, Probability
0.2 Conditional Probability and Independence
0.3 Random Variables
1 Random Variables, Expectation and Variance
1.1 Expectations of Random Variables
1.2 Variance
2 Independence of Random Variables
3 Common Distributions
3.1 Ber(p) Distribution
3.2 Bin(n, p) Distribution
3.3 Poisson Distribution
4 Covariance, Correlation and Regression
5 Correlation and Regression
6 Back to Common Distributions
6.1 Geometric Distribution
6.2 Negative Binomial Distribution
7 Continuous Distributions
7.1 Normal or Gaussian Distribution
7.2 Uniform Distribution
7.3 The Exponential Density
7.4 The Gamma Density
8 Variable Transformations
9 Distribution Functions and the Quantile Transform
10 Joint Densities
11 Joint Densities under Transformations
11.1 Detour to Convolutions -

Basic Statistics and Monte-Carlo Method -2

Applied Statistical Mechanics Lecture Note - 10: Basic Statistics and Monte-Carlo Method -2. Jeong Won Kang, Department of Chemical and Biological Engineering, Korea University.

Table of Contents
1. General Monte Carlo Method
2. Variance Reduction Techniques
3. Metropolis Monte Carlo Simulation

1.1 Introduction
Monte Carlo Method: any method that uses random numbers; random sampling of the population.
Applications: science and engineering; management and finance.
For a given subject, various techniques and error analysis will be presented.
Subject: evaluation of a definite integral

$$I = \int_a^b \rho(x)\, dx$$

The Monte Carlo method can be used to compute integrals of any dimension $d$ ($d$-fold integrals).
Error comparison of $d$-fold integrals:
Simpson's rule, ...: $E \propto N^{-1/d}$
Monte Carlo method: $E \propto N^{-1/2}$ (purely statistical, does not rely on the dimension)
The Monte Carlo method wins when $d \gg 3$.

1.2 Hit-or-Miss Method
Evaluation of a definite integral $I = \int_a^b \rho(x)\, dx$, with a bound $h \ge \rho(x)$ for any $x$.
[Figure: random points in the rectangle $[a, b] \times [0, h]$; points under the curve $\rho(x)$ are marked O, points above it are marked X.]
Probability that a random point resides inside the area:

$$r = \frac{I}{(b - a)h} \approx \frac{N'}{N}$$

$N$: total number of points. $N'$: points that reside inside the region.

$$I \approx (b - a)\, h\, \frac{N'}{N}$$

Algorithm:
Start
Set $N$: large integer; $N' = 0$
Loop $N$ times:
Choose a point $x$ in $[a, b]$: $x = (b - a)u_1 + a$
Choose a point $y$ in $[0, h]$: $y = h u_2$
If $[x, y]$ resides inside the region, then $N' = N' + 1$
$I = (b - a)\, h\, (N'/N)$
End

Error Analysis of the Hit-or-Miss Method
It is important to know how accurate the results of simulations are.
The rule of 3σ's.
Identifying the random variable:

$$X = \frac{1}{N} \sum_{n=1}^{N} X_n$$

From -
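
The hit-or-miss procedure described in section 1.2 can be sketched in a few lines. This is a minimal illustration, not the lecture's own code: the function name `hit_or_miss`, the fixed seed, and the test integrand $\rho(x) = x^2$ on $[0, 1]$ are assumptions made for the example, and the method as written requires $0 \le \rho(x) \le h$ on $[a, b]$.

```python
import random

def hit_or_miss(rho, a, b, h, n, seed=0):
    """Estimate the integral of rho over [a, b] by the hit-or-miss method.

    Assumes 0 <= rho(x) <= h for every x in [a, b].
    """
    rng = random.Random(seed)
    hits = 0                                 # N' in the lecture's notation
    for _ in range(n):
        x = (b - a) * rng.random() + a       # x = (b - a) u1 + a
        y = h * rng.random()                 # y = h u2
        if y <= rho(x):                      # point lies under the curve
            hits += 1
    return (b - a) * h * hits / n            # I ~ (b - a) h N'/N

# Example integrand (an assumption): x^2 on [0, 1], whose exact integral is 1/3.
estimate = hit_or_miss(lambda x: x * x, a=0.0, b=1.0, h=1.0, n=200_000)
print("estimate:", estimate, " exact:", 1 / 3)
```

Each trial is a Bernoulli draw with success probability $r = I / ((b - a)h)$, so the standard error of the estimate is $(b - a)h\sqrt{r(1 - r)/N}$, which is the $N^{-1/2}$ behavior noted in section 1.1. A tighter bound $h$ raises $r$ and wastes fewer points.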

Lecture Notes 4 36-705 1 Reminder: Convergence of Sequences 2

Lecture Notes 4, 36-705. In today's lecture we discuss the convergence of random variables. At a high level, our first few lectures focused on non-asymptotic properties of averages, i.e. the tail bounds we derived applied for any fixed sample size $n$. For the next few lectures we focus on asymptotic properties, i.e. we ask the question: what happens to the average of $n$ i.i.d. random variables as $n \to \infty$? Roughly, from a theoretical perspective the idea is that many expressions will considerably simplify in the asymptotic regime. Rather than have many different tail bounds, we will derive simple "universal results" that hold under extremely weak conditions. From a slightly more practical perspective, asymptotic theory is often useful to obtain approximate confidence intervals.

1 Reminder: convergence of sequences. When we think of convergence of deterministic real numbers the corresponding notions are classical. Formally, we say that a sequence of real numbers $a_1, a_2, \ldots$ converges to a fixed real number $a$ if, for every positive number $\epsilon$, there exists a natural number $N(\epsilon)$ such that for all $n \ge N(\epsilon)$, $|a_n - a| < \epsilon$. We call $a$ the limit of the sequence and write $\lim_{n \to \infty} a_n = a$. Our focus today will be on trying to develop analogues of this notion that apply to sequences of random variables. We will first give some definitions and then try to circle back to relate the definitions and discuss some examples. Throughout, we will focus on the setting where we have a sequence of random variables $X_1, \ldots, X_n$ and another random variable $X$, and would like to define what it means for the sequence to converge to $X$. -

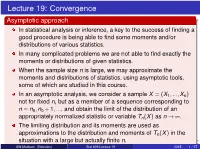

Stat 609: Mathematical Statistics Lecture 19

Lecture 19: Convergence. UW-Madison (Statistics), Stat 609, Lecture 19, 2015.

Asymptotic approach. In statistical analysis or inference, a key to the success of finding a good procedure is being able to find some moments and/or distributions of various statistics. In many complicated problems we are not able to find exactly the moments or distributions of given statistics. When the sample size $n$ is large, we may approximate the moments and distributions of statistics, using asymptotic tools, some of which are studied in this course. In an asymptotic analysis, we consider a sample $X = (X_1, \ldots, X_n)$ not for fixed $n$, but as a member of a sequence corresponding to $n = n_0, n_0 + 1, \ldots$, and obtain the limit of the distribution of an appropriately normalized statistic or variable $T_n(X)$ as $n \to \infty$. The limiting distribution and its moments are used as approximations to the distribution and moments of $T_n(X)$ in the situation with a large but actually finite $n$. This leads to some asymptotic statistical procedures and asymptotic criteria for assessing their performances. The asymptotic approach is not only applied to the situation where no exact method (the approach considering a fixed $n$) is available, but also used to provide a procedure simpler (e.g., in terms of computation) than that produced by the exact approach. In addition to providing more theoretical results and/or simpler procedures, the asymptotic approach requires less stringent mathematical assumptions than does the exact approach.

Definition 5.5.1 (convergence in probability). A sequence of random variables $Z_n$, $n = 1, 2, \ldots$, converges in probability to a random variable $Z$ iff for every $\epsilon > 0$,

$$\lim_{n \to \infty} P(|Z_n - Z| \ge \epsilon) = 0.$$

A sequence of random vectors $Z_n$ converges in probability to a random vector $Z$ iff each component of $Z_n$ converges in probability to the corresponding component of $Z$.