Lecture Notes 4 36-705 1 Reminder: Convergence of Sequences 2

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Conditional Generalizations of Strong Laws Which Conclude the Partial Sums Converge Almost Surely

The Annals of Probability, 1982, Vol. 10, No. 3, 828-830

CONDITIONAL GENERALIZATIONS OF STRONG LAWS WHICH CONCLUDE THE PARTIAL SUMS CONVERGE ALMOST SURELY

By T. P. HILL, Georgia Institute of Technology

Suppose that for every independent sequence of random variables satisfying some hypothesis condition H, it follows that the partial sums converge almost surely. Then it is shown that for every arbitrarily-dependent sequence of random variables, the partial sums converge almost surely on the event where the conditional distributions (given the past) satisfy precisely the same condition H. Thus many strong laws for independent sequences may be immediately generalized into conditional results for arbitrarily-dependent sequences.

1. Introduction. If every sequence of independent random variables having property A has property B almost surely, does every arbitrarily-dependent sequence of random variables have property B almost surely on the set where the conditional distributions have property A? Not in general, but comparisons of the conditional Borel-Cantelli Lemmas, the conditional three-series theorem, and many martingale results with their independent counterparts suggest that the answer is affirmative in fairly general situations. The purpose of this note is to prove Theorem 1, which states, in part, that if "property B" is "the partial sums converge," then the answer is always affirmative, regardless of "property A." Thus many strong laws for independent sequences (even laws yet undiscovered) may be immediately generalized into conditional results for arbitrarily-dependent sequences.

2. Main Theorem. In this note, $\mathbb{Y} = (Y_1, Y_2, \ldots)$ is a sequence of random variables on a probability triple $(\Omega, \mathcal{A}, P)$, $S_n = Y_1 + Y_2 + \cdots$ -

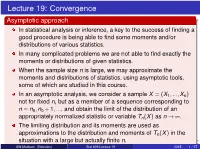

Stat 609: Mathematical Statistics Lecture 19

Lecture 19: Convergence

UW-Madison (Statistics), Stat 609, Lecture 19, 2015

Asymptotic approach

In statistical analysis or inference, a key to the success of finding a good procedure is being able to find some moments and/or distributions of various statistics. In many complicated problems we are not able to find exactly the moments or distributions of given statistics. When the sample size $n$ is large, we may approximate the moments and distributions of statistics, using asymptotic tools, some of which are studied in this course.

In an asymptotic analysis, we consider a sample $X = (X_1, \ldots, X_n)$ not for fixed $n$, but as a member of a sequence corresponding to $n = n_0, n_0 + 1, \ldots$, and obtain the limit of the distribution of an appropriately normalized statistic or variable $T_n(X)$ as $n \to \infty$. The limiting distribution and its moments are used as approximations to the distribution and moments of $T_n(X)$ in the situation with a large but actually finite $n$. This leads to some asymptotic statistical procedures and asymptotic criteria for assessing their performances.

The asymptotic approach is not only applied to the situation where no exact method (the approach considering a fixed $n$) is available, but also used to provide a procedure simpler (e.g., in terms of computation) than that produced by the exact approach. In addition to providing more theoretical results and/or simpler procedures, the asymptotic approach requires less stringent mathematical assumptions than does the exact approach.

Definition 5.5.1 (convergence in probability). A sequence of random variables $Z_n$, $n = 1, 2, \ldots$, converges in probability to a random variable $Z$ iff for every $\epsilon > 0$,
$$\lim_{n \to \infty} P(|Z_n - Z| \ge \epsilon) = 0.$$
A sequence of random vectors $Z_n$ converges in probability to a random vector $Z$ iff each component of $Z_n$ converges in probability to the corresponding component of $Z$. -
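The definition of convergence in probability can be illustrated numerically. The sketch below is not taken from the lecture: it assumes, purely for illustration, that $Z_n$ is the mean of $n$ independent Uniform(0, 1) draws and that the limit is the constant $Z = 0.5$, and it estimates the tail probability $P(|Z_n - Z| \ge \epsilon)$ by Monte Carlo. The function name `tail_probability` and its parameters are hypothetical.

```python
import random


def tail_probability(n, eps, trials=1000, seed=0):
    """Monte Carlo estimate of P(|Z_n - Z| >= eps).

    Assumption for illustration only: Z_n is the mean of n Uniform(0, 1)
    draws and Z = 0.5 is the constant limit.
    """
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    exceed = 0
    for _ in range(trials):
        z_n = sum(rng.random() for _ in range(n)) / n
        if abs(z_n - 0.5) >= eps:
            exceed += 1
    return exceed / trials


if __name__ == "__main__":
    # The estimated tail probability should shrink toward 0 as n grows.
    for n in (10, 100, 1000):
        print(n, tail_probability(n, eps=0.1))
```

For a fixed $\epsilon$, the printed estimates should decrease as $n$ increases, which is the behaviour the definition requires. A simulation of this kind only suggests convergence; it does not prove it.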

1 Probability Spaces

BROWN UNIVERSITY, Math 1610 Probability Notes, Samuel S. Watson. Last updated: December 18, 2015.

Please do not hesitate to notify me about any mistakes you find in these notes. My advice is to refresh your local copy of this document frequently, as I will be updating it throughout the semester.

1 Probability Spaces

We model random phenomena with a probability space, which consists of an arbitrary set $\Omega$, a collection $\mathcal{F}$ of subsets of $\Omega$, and a map $P : \mathcal{F} \to [0, 1]$, where $\mathcal{F}$ and $P$ satisfy certain conditions detailed below. An element $\omega \in \Omega$ is called an outcome, an element $E \in \mathcal{F}$ is called an event, and we interpret $P(E)$ as the probability of the event $E$. To connect this setup to the way you usually think about probability, regard $\omega$ as having been randomly selected from $\Omega$ in such a way that, for each event $E$, the probability that $\omega$ is in $E$ (in other words, that $E$ happens) is equal to $P(E)$.

If $E$ and $F$ are events, then the event "E and F" corresponds to the set $\{\omega : \omega \in E \text{ and } \omega \in F\}$, abbreviated as $E \cap F$. Similarly, $E \cup F$ is the event that $E$ happens or $F$ happens, and $\Omega \setminus E$ is the event that $E$ does not happen. We refer to $\Omega \setminus E$ as the complement of $E$, and sometimes denote it by $E^c$.

To ensure that we can perform these basic operations, we require that $\mathcal{F}$ is closed under them. In other words, $\Omega \setminus E$ must be an event whenever $E$ is an event (that is, $\Omega \setminus E \in \mathcal{F}$ whenever $E \in \mathcal{F}$). -

Probability Theory

Probability Theory

"A random variable is neither random nor variable." Gian-Carlo Rota, M.I.T.

Florian Herzog, 2013

Probability space

A probability space $W$ is a unique triple $W = \{\Omega, \mathcal{F}, P\}$:
• $\Omega$ is its sample space
• $\mathcal{F}$ its σ-algebra of events
• $P$ its probability measure

Remarks:
(1) The sample space $\Omega$ is the set of all possible samples or elementary events $\omega$: $\Omega = \{\omega \mid \omega \in \Omega\}$.
(2) The σ-algebra $\mathcal{F}$ is the set of all of the considered events $A$, i.e., subsets of $\Omega$: $\mathcal{F} = \{A \mid A \subseteq \Omega, A \in \mathcal{F}\}$.
(3) The probability measure $P$ assigns a probability $P(A)$ to every event $A \in \mathcal{F}$: $P : \mathcal{F} \to [0, 1]$.

Stochastic Systems, 2013

Sample space

The sample space $\Omega$ is sometimes called the universe of all samples or possible outcomes $\omega$.

Example 1. Sample space
• Toss of a coin (with head and tail): $\Omega = \{H, T\}$.
• Two tosses of a coin: $\Omega = \{HH, HT, TH, TT\}$.
• A cubic die: $\Omega = \{\omega_1, \omega_2, \omega_3, \omega_4, \omega_5, \omega_6\}$.
• The positive integers: $\Omega = \{1, 2, 3, \ldots\}$.
• The reals: $\Omega = \{\omega \mid \omega \in \mathbb{R}\}$.

Note that the $\omega$s are a mathematical construct and have per se no real or scientific meaning. The $\omega$s in the die example refer to the numbers of dots observed when the die is thrown.

Event

An event $A$ is a subset of $\Omega$. If the outcome $\omega$ of the experiment is in the subset $A$, then the event $A$ is said to have occurred. -
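The triple of sample space, σ-algebra, and probability measure can be made concrete for the two-coin-toss example. The following is a minimal sketch under assumptions that are not in the notes: the σ-algebra is taken to be the full power set of $\Omega$, and the measure is uniform (a fair coin). The names `power_set`, `prob`, and `at_least_one_head` are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def power_set(omega):
    """Return every subset of omega as a frozenset (the largest sigma-algebra)."""
    items = list(omega)
    return [
        frozenset(subset)
        for size in range(len(items) + 1)
        for subset in combinations(items, size)
    ]


# Sample space for two tosses of a coin.
omega = frozenset({"HH", "HT", "TH", "TT"})

# Assumption: every subset of omega is an event.
events = power_set(omega)


def prob(event):
    """Uniform probability measure (assumes a fair coin): |A| / |Omega|."""
    return Fraction(len(event), len(omega))


# The event "at least one head" is the subset of outcomes containing an H.
at_least_one_head = frozenset(w for w in omega if "H" in w)

if __name__ == "__main__":
    print(len(events), prob(at_least_one_head))
```

Using `Fraction` keeps the probabilities exact, so properties such as $P(\Omega) = 1$ can be checked with equality rather than a floating-point tolerance.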

Advanced Probability

Advanced Probability
Alan Sola
Department of Pure Mathematics and Mathematical Statistics, University of Cambridge
[email protected]
Michaelmas 2014

Contents

1 Conditional expectation
1.1 Basic objects: probability measures, σ-algebras, and random variables
1.2 Conditional expectation
1.3 Integration with respect to product measures and Fubini's theorem

2 Martingales in discrete time
2.1 Basic definitions
2.2 Stopping times and the optional stopping theorem
2.3 The martingale convergence theorem
2.4 Doob's inequalities
2.5 Lp-convergence for martingales
2.6 Some applications of martingale techniques

3 Stochastic processes in continuous time
3.1 Basic definitions
3.2 Canonical processes and sample paths
3.3 Martingale regularization theorem
3.4 Convergence and Doob's inequalities in continuous time
3.5 Kolmogorov's continuity criterion
3.6 The Poisson process

4 Weak convergence
4.1 Basic definitions
4.2 Characterizations of weak convergence
4.3 Tightness
4.4 Characteristic functions and Lévy's theorem

5 Brownian motion
5.1 Basic definitions
5.2 Construction of Brownian motion
5.3 Regularity and roughness of Brownian paths
5.4 Blumenthal's 0-1 law and martingales for Brownian motion
5.5 Strong Markov property and reflection principle
5.6 Recurrence, transience, and connections with partial differential equations
5.7 Donsker's invariance principle

6 Poisson random measures and Lévy processes
6.1 Basic definitions -

An Introduction to Stochastic Processes in Continuous Time

An Introduction to Stochastic Processes in Continuous Time
Harry van Zanten
November 8, 2004 (this version), always under construction

Preface

Contents

1 Stochastic processes
1.1 Stochastic processes
1.2 Finite-dimensional distributions
1.3 Kolmogorov's continuity criterion
1.4 Gaussian processes
1.5 Non-differentiability of the Brownian sample paths
1.6 Filtrations and stopping times
1.7 Exercises

2 Martingales
2.1 Definitions and examples
2.2 Discrete-time martingales
2.2.1 Martingale transforms
2.2.2 Inequalities
2.2.3 Doob decomposition
2.2.4 Convergence theorems
2.2.5 Optional stopping theorems
2.3 Continuous-time martingales
2.3.1 Upcrossings in continuous time
2.3.2 Regularization
2.3.3 Convergence theorems
2.3.4 Inequalities
2.3.5 Optional stopping
2.4 Applications to Brownian motion
2.4.1 Quadratic variation
2.4.2 Exponential inequality
2.4.3 The law of the iterated logarithm
2.4.4 Distribution of hitting times
2.5 Exercises

3 Markov processes
3.1 Basic definitions
3.2 Existence of a canonical version
3.3 Feller processes
3.3.1 Feller transition functions and resolvents
3.3.2 Existence of a cadlag version
3.3.3 Existence of a good filtration
3.4 Strong Markov property
3.4.1 Strong Markov property of a Feller process
3.4.2 Applications to Brownian motion
3.5 Generators
3.5.1 Generator of a Feller process
3.5.2 Characteristic operator
3.6 Killed Feller processes
3.6.1 Sub-Markovian processes
3.6.2 -

On Almost Sure Rates of Convergence for Sample Average Approximations

On almost sure rates of convergence for sample average approximations

Dirk Banholzer (1), Jörg Fliege (1), and Ralf Werner (2)
(1) Department of Mathematical Sciences, University of Southampton, Southampton, SO17 1BJ, UK, [email protected]
(2) Institut für Mathematik, Universität Augsburg, 86159 Augsburg, Germany

Abstract. In this paper, we study the rates at which strongly consistent estimators in the sample average approximation approach (SAA) converge to their deterministic counterparts. To be able to quantify these rates at which convergence occurs in the almost sure sense, we consider the law of the iterated logarithm in a Banach space setting and first establish under relatively mild assumptions convergence rates for the approximating objective functions. These rates can then be transferred to the estimators for optimal values and solutions of the approximated problem. Based on these results, we further investigate the asymptotic bias of the SAA approach. Especially, we show that under the same assumptions as before the SAA estimator for the optimal value converges in mean to its deterministic counterpart, at a rate which essentially coincides with the one in the almost sure sense. Further, optimal solutions also converge in mean; for convex problems with the same rates, but with an as of now unknown rate for general problems. Finally, we address the notion of convergence in probability and conclude that in this case (weak) rates for the estimators can also be derived, and that without imposing strong conditions on exponential moments, as it is typically done for obtaining exponential rates. Results on convergence in probability then allow one to build universal confidence sets for the optimal value and optimal solutions under mild assumptions. -

Lecture 5. Stochastic Processes

LECTURE 5: Stochastic Processes

"We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes."

In many problems that involve modeling the behavior of some system, we lack sufficiently detailed information to determine how the system behaves, or the behavior of the system is so complicated that an exact description of it becomes irrelevant or impossible. In that case, a probabilistic model is often useful.

Probability and randomness have many different philosophical interpretations, but, whatever interpretation one adopts, there is a clear mathematical formulation of probability in terms of measure theory, due to Kolmogorov.

Probability is an enormous field with applications in many different areas. Here we simply aim to provide an introduction to some aspects that are useful in applied mathematics. We will do so in the context of stochastic processes of a continuous time variable, which may be thought of as a probabilistic analog of deterministic ODEs. We will focus on Brownian motion and stochastic differential equations, both because of their usefulness and the interest of the concepts they involve. -

Methods of Monte Carlo Simulation

Methods of Monte Carlo Simulation
Ulm University, Institute of Stochastics
Lecture Notes, Dr. Tim Brereton
Winter Term 2015 – 2016, Ulm

Contents

1 Introduction
1.1 Some simple examples
1.1.1 Example 1
1.1.2 Example 2

2 Generating Uniform Random Numbers
2.1 How do we make random numbers?
2.1.1 Physical generation
2.1.2 Pseudo-random numbers
2.2 Pseudo-random numbers
2.2.1 Abstract setting
2.2.2 Linear Congruential Generators
2.2.3 Improvements on LCGs

3 Non-Uniform Random Variable Generation
3.1 The Inverse Transform
3.1.1 The table lookup method
3.2 Integration on Various Domains and Properties of Estimators
3.2.1 Integration over (0, b)
3.2.2 Properties of estimators
3.2.3 Integration over unbounded domains
3.3 The Acceptance-Rejection Method
3.3.1 The continuous case
3.3.2 The discrete case
3.3.3 Multivariate acceptance-rejection
3.3.4 Drawing uniformly from complicated sets
3.3.5 The limitations of high-dimensional acceptance-rejection
3.4 Location-Scale Families
3.5 Generating Normal Random Variables
3.5.1 Box-Muller method
3.6 Big O notation -

Stochastic Processes

Stochastic Processes
David Nualart, The University of Kansas
[email protected]

1 Stochastic Processes

1.1 Probability Spaces and Random Variables

In this section we recall the basic vocabulary and results of probability theory. A probability space associated with a random experiment is a triple $(\Omega, \mathcal{F}, P)$ where:

(i) $\Omega$ is the set of all possible outcomes of the random experiment, and it is called the sample space.

(ii) $\mathcal{F}$ is a family of subsets of $\Omega$ which has the structure of a σ-field:
a) $\emptyset \in \mathcal{F}$
b) If $A \in \mathcal{F}$, then its complement $A^c$ also belongs to $\mathcal{F}$
c) $A_1, A_2, \ldots \in \mathcal{F} \implies \bigcup_{i=1}^{\infty} A_i \in \mathcal{F}$

(iii) $P$ is a function which associates a number $P(A)$ to each set $A \in \mathcal{F}$ with the following properties:
a) $0 \le P(A) \le 1$,
b) $P(\Omega) = 1$
c) For any sequence $A_1, A_2, \ldots$ of disjoint sets in $\mathcal{F}$ (that is, $A_i \cap A_j = \emptyset$ if $i \ne j$),
$$P\Bigl(\bigcup_{i=1}^{\infty} A_i\Bigr) = \sum_{i=1}^{\infty} P(A_i)$$

The elements of the σ-field $\mathcal{F}$ are called events and the mapping $P$ is called a probability measure. In this way we have the following interpretation of this model:

$P(F)$ = "probability that the event $F$ occurs"

The set $\emptyset$ is called the empty event and it has probability zero. Indeed, the additivity property (iii,c) implies
$$P(\emptyset) + P(\emptyset) + \cdots = P(\emptyset).$$
The set $\Omega$ is also called the certain set and by property (iii,b) it has probability one. Usually, there will be other events $A \subset \Omega$ such that $P(A) = 1$. -
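The claim that the empty event has probability zero follows from countable additivity in one short step. The derivation below is a sketch that fills in the reasoning, using only properties (iii,a) and (iii,c); the constant $c$ is introduced here for illustration and does not appear in the notes.

```latex
% Take A_i = \emptyset for every i. These sets are pairwise disjoint and
% their union is \emptyset, so countable additivity (iii,c) applies.
\begin{align*}
P(\emptyset)
  = P\Bigl(\bigcup_{i=1}^{\infty} A_i\Bigr)
  = \sum_{i=1}^{\infty} P(A_i)
  = \sum_{i=1}^{\infty} P(\emptyset).
\end{align*}
% Write c = P(\emptyset). By (iii,a), 0 \le c \le 1.
% If c > 0, the series \sum_{i=1}^{\infty} c diverges, contradicting c \le 1.
% Hence c = 0.
```

The key point is that a series of identical non-negative terms is finite only when every term is zero, so $P(\emptyset) = 0$ is the only value consistent with $P(\emptyset) \le 1$.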

arXiv:2102.06287v2 [math.PR] 19 Feb 2021

URN MODELS WITH RANDOM MULTIPLE DRAWING AND RANDOM ADDITION

Irene Crimaldi (1), Pierre-Yves Louis (2, 3), Ida G. Minelli (4)

Abstract. We consider a two-color urn model with multiple drawing and random time-dependent addition matrix. The model is very general with respect to previous literature: the number of sampled balls at each time-step is random, the addition matrix is not balanced and it has general random entries. For the proportion of balls of a given color, we prove almost sure convergence results. In particular, in the case of equal reinforcement means, we prove fluctuation theorems (through CLTs in the sense of stable convergence and of almost sure conditional convergence, which are stronger than convergence in distribution) and we give asymptotic confidence intervals for the limit proportion, whose distribution is generally unknown.

Keywords. Hypergeometric Urn; Multiple drawing urn; Pólya urn; Random process with reinforcement; Randomly reinforced urn; Central limit theorem; Stable convergence; Opinion dynamics; Epidemic models

MSC2010 Classification. Primary: 60B10; 60F05; 60F15; 60G42. Secondary: 62P25; 91D30; 92C60

Contents
1. Introduction
2. The model
3. Asymptotic results
3.1. Almost sure convergence
3.2. Central limit theorems for the case of equal reinforcement means
3.3. Probability distribution of the limit proportion in the case of equal reinforcement means
3.4. Asymptotic confidence intervals for the limit proportion in the case of equal reinforcement means
4. Examples and numerical illustrations
Appendix A. Technical results
Appendix B. Some auxiliary results
Appendix C. Stable convergence and its variants
References

arXiv:2102.06287v3 [math.PR] 4 Jul 2021

(1) IMT School for Advanced Studies Lucca, Piazza San Ponziano 6, 55100 Lucca, Italy, irene.crimaldi@imtlucca. -

On Almost Sure Convergence, Michel Loève, University of California

ON ALMOST SURE CONVERGENCE

MICHEL LOÈVE, UNIVERSITY OF CALIFORNIA

1. Introduction

Since the discovery by Borel (1907) of the strong law of large numbers in the Bernoulli case, there has been much investigation of the problem of almost sure convergence and almost sure summability of series of random variables. So far most of the results concern series of independent random variables. In the case of dependent random variables, the first general result is the celebrated Birkhoff ergodic theorem [1], or the strong law of large numbers for a stationary sequence with a finite first moment. This theorem contains the Kolmogoroff strong law of large numbers as a particular case. P. Lévy studied series that are the same as those of independent random variables in their properties of second order as described by the first and second conditional moments. The author [8] investigated series which behave asymptotically as those of P. Lévy. There are also properties of martingales, due essentially to Doob [2], P. Lévy and Ville [10].

We shall proceed to a systematic investigation of almost sure convergence of sequences of random variables, emphasizing the methods and assuming as little as possible about the stochastic structure of the sequences. The known results will appear as various particular cases of a few propositions, and the necessary known tools will be established. In that respect, this paper is self contained.

Part I is devoted to definitions, notations, and general criteria. In part II the truncation and centering ideas are expounded. Part III is concerned with the use of "determining" set functions and with two propositions.