1 Probability Space, Events, and Random Variables

Total Page:16

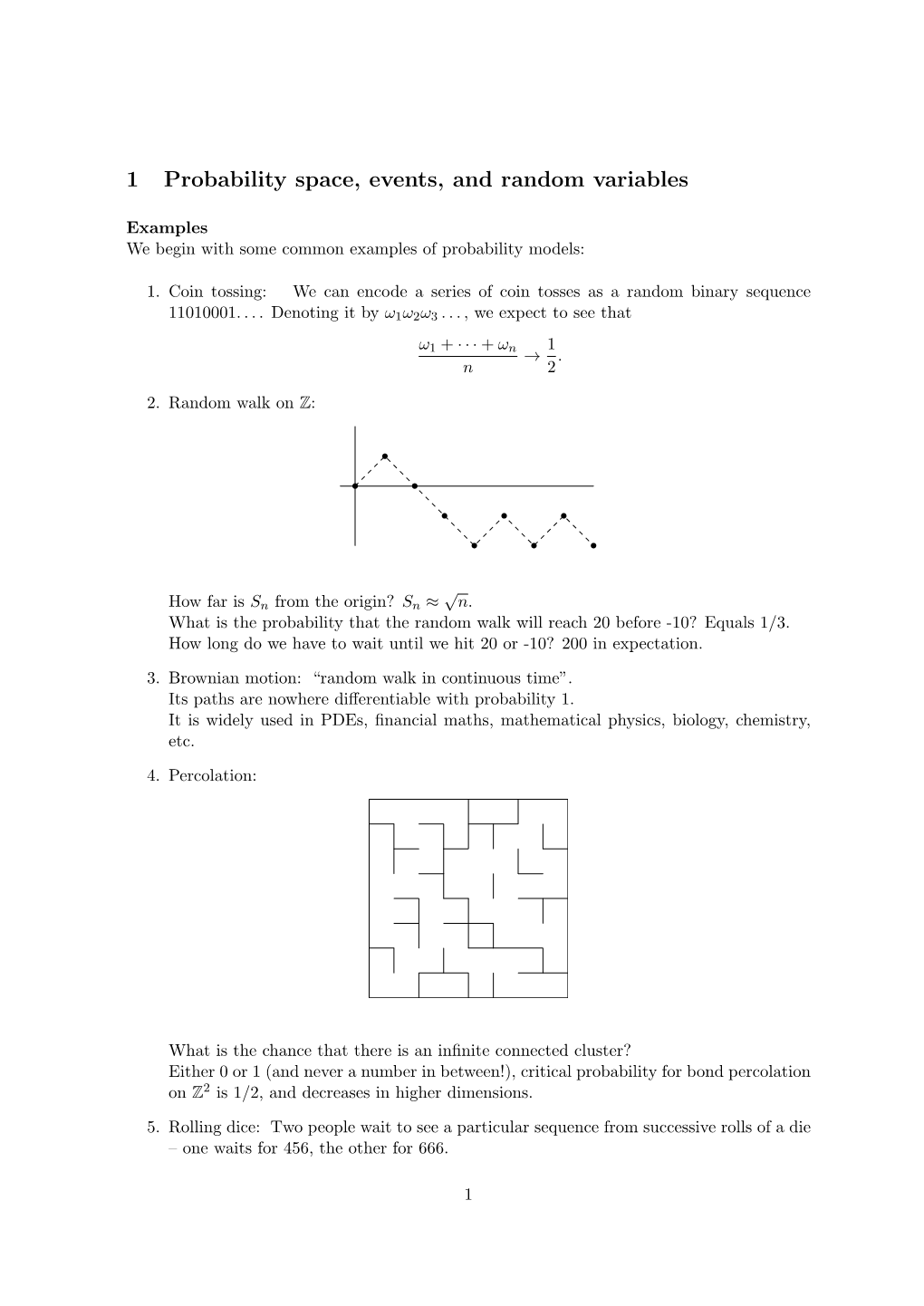

File Type:pdf, Size:1020Kb


Recommended publications


Conditional Generalizations of Strong Laws Which Conclude the Partial Sums Converge Almost Surely

The Annals of Probability 1982, Vol. 10, No. 3, 828-830 CONDITIONAL GENERALIZATIONS OF STRONG LAWS WHICH CONCLUDE THE PARTIAL SUMS CONVERGE ALMOST SURELY By T. P. HILL Georgia Institute of Technology Suppose that for every independent sequence of random variables satisfying some hypothesis condition H, it follows that the partial sums converge almost surely. Then it is shown that for every arbitrarily-dependent sequence of random variables, the partial sums converge almost surely on the event where the conditional distributions (given the past) satisfy precisely the same condition H. Thus many strong laws for independent sequences may be immediately generalized into conditional results for arbitrarily-dependent sequences. 1. Introduction. If every sequence of independent random variables having property A has property B almost surely, does every arbitrarily-dependent sequence of random variables have property B almost surely on the set where the conditional distributions have property A? Not in general, but comparisons of the conditional Borel-Cantelli Lemmas, the conditional three-series theorem, and many martingale results with their independent counterparts suggest that the answer is affirmative in fairly general situations. The purpose of this note is to prove Theorem 1, which states, in part, that if "property B" is "the partial sums converge," then the answer is always affirmative, regardless of "property A." Thus many strong laws for independent sequences (even laws yet undiscovered) may be immediately generalized into conditional results for arbitrarily-dependent sequences. 2. Main Theorem. In this note, Y = (Y1, Y2, ...) is a sequence of random variables on a probability triple (Ω, 𝒜, P), Sn = Y1 + Y2 + .. -

Probability and Statistics Lecture Notes

Probability and Statistics Lecture Notes Antonio Jiménez-Martínez Chapter 1 Probability spaces In this chapter we introduce the theoretical structures that will allow us to assign probabilities in a wide range of probability problems. 1.1. Examples of random phenomena Science attempts to formulate general laws on the basis of observation and experiment. The simplest and most used scheme of such laws is: if a set of conditions B is satisfied ⟹ event A occurs. Examples of such laws are the law of gravity, the law of conservation of mass, and many other instances in chemistry, physics, biology... If event A occurs inevitably whenever the set of conditions B is satisfied, we say that A is certain or sure (under the set of conditions B). If A can never occur whenever B is satisfied, we say that A is impossible (under the set of conditions B). If A may or may not occur whenever B is satisfied, then A is said to be a random phenomenon. Random phenomena are our subject matter. Unlike certain and impossible events, the presence of randomness implies that the set of conditions B does not reflect all the necessary and sufficient conditions for the event A to occur. It might then seem impossible to make any worthwhile statements about random phenomena. However, experience has shown that many random phenomena exhibit a statistical regularity that makes them subject to study. For such random phenomena it is possible to estimate the chance of occurrence of the random event. This estimate can be obtained from laws, called probabilistic or stochastic, with the form: if a set of conditions B is satisfied repeatedly n times ⟹ event A occurs m times out of the n repetitions. -

The Probability Set-Up.Pdf

CHAPTER 2 The probability set-up 2.1. Basic theory of probability We will have a sample space, denoted by S (sometimes Ω) that consists of all possible outcomes. For example, if we roll two dice, the sample space would be all possible pairs made up of the numbers one through six. An event is a subset of S. Another example is to toss a coin 2 times, and let S = {HH, HT, TH, TT}; or to let S be the possible orders in which 5 horses finish in a horse race; or S the possible prices of some stock at closing time today; or S = [0, ∞), the age at which someone dies; or S the points in a circle, the possible places a dart can hit. We should also keep in mind that the same setting can be described using different sample sets. For example, in two solutions in Example 1.30 we used two different sample sets. 2.1.1. Sets. We start by describing elementary operations on sets. By a set we mean a collection of distinct objects called elements of the set, and we consider a set as an object in its own right. Set operations Suppose S is a set. We say that A ⊂ S, that is, A is a subset of S if every element in A is contained in S; A ∪ B is the union of sets A ⊂ S and B ⊂ S and denotes the points of S that are in A or B or both; A ∩ B is the intersection of sets A ⊂ S and B ⊂ S and is the set of points that are in both A and B; ∅ denotes the empty set; A^c is the complement of A, that is, the points in S that are not in A. -

1 Probabilities

1 Probabilities 1.1 Experiments with randomness We will use the term experiment in a very general way to refer to some process that produces a random outcome. Examples: (Ask class for some first) Here are some discrete examples: • roll a die • flip a coin • flip a coin until we get heads And here are some continuous examples: • height of a U of A student • random number in [0, 1] • the time it takes until a radioactive substance undergoes a decay These examples share the following common features: There is a procedure or natural phenomenon called the experiment. It has a set of possible outcomes. There is a way to assign probabilities to sets of possible outcomes. We will call this a probability measure. 1.2 Outcomes and events Definition 1. An experiment is a well defined procedure or sequence of procedures that produces an outcome. The set of possible outcomes is called the sample space. We will typically denote an individual outcome by ω and the sample space by Ω. Definition 2. An event is a subset of the sample space. This definition will be changed when we come to the definition of a σ-field. The next thing to define is a probability measure. Before we can do this properly we need some more structure, so for now we just make an informal definition. A probability measure is a function on the collection of events that assigns a number between 0 and 1 to each event and satisfies certain properties. NB: A probability measure is not a function on Ω. -

Propensities and Probabilities

ARTICLE IN PRESS Studies in History and Philosophy of Modern Physics 38 (2007) 593–625 www.elsevier.com/locate/shpsb Propensities and probabilities Nuel Belnap 1028-A Cathedral of Learning, University of Pittsburgh, Pittsburgh, PA 15260, USA Received 19 May 2006; accepted 6 September 2006 Abstract Popper’s introduction of “propensity” was intended to provide a solid conceptual foundation for objective single-case probabilities. By considering the partly opposed contributions of Humphreys and Miller and Salmon, it is argued that when properly understood, propensities can in fact be understood as objective single-case causal probabilities of transitions between concrete events. The chief claim is that propensities are well-explicated by describing how they fit into the existing formal theory of branching space-times, which is simultaneously indeterministic and causal. Several problematic examples, some commonsense and some quantum-mechanical, are used to make clear the advantages of invoking branching space-times theory in coming to understand propensities. © 2007 Elsevier Ltd. All rights reserved. Keywords: Propensities; Probabilities; Space-times; Originating causes; Indeterminism; Branching histories 1. Introduction You are flipping a fair coin fairly. You ascribe a probability to a single case by asserting The probability that heads will occur on this very next flip is about 50%. (1) The rough idea of a single-case probability seems clear enough when one is told that the contrast is with either generalizations or frequencies attributed to populations asserted while you are flipping a fair coin fairly, such as In the long run, the probability of heads occurring among flips is about 50%. (2) E-mail address: [email protected] 1355-2198/$ - see front matter © 2007 Elsevier Ltd. -

Topic 1: Basic Probability Definition of Sets

Topic 1: Basic probability • Review of sets • Sample space and probability measure • Probability axioms • Basic probability laws • Conditional probability • Bayes' rules • Independence • Counting ES150 – Harvard SEAS Definition of Sets • A set S is a collection of objects, which are the elements of the set. – The number of elements in a set S can be finite S = {x1, x2, ..., xn} or infinite but countable S = {x1, x2, ...} or uncountably infinite. – S can also contain elements with a certain property S = {x | x satisfies P} • S is a subset of T if every element of S also belongs to T: S ⊂ T or T ⊃ S. If S ⊂ T and T ⊂ S then S = T. • The universal set Ω is the set of all objects within a context. We then consider all sets S ⊂ Ω. Set Operations and Properties • Set operations – Complement A^c: set of all elements not in A – Union A ∪ B: set of all elements in A or B or both – Intersection A ∩ B: set of all elements common in both A and B – Difference A − B: set containing all elements in A but not in B. • Properties of set operations – Commutative: A ∩ B = B ∩ A and A ∪ B = B ∪ A. (But A − B ≠ B − A). – Associative: (A ∩ B) ∩ C = A ∩ (B ∩ C) = A ∩ B ∩ C. (also for ∪) – Distributive: A ∩ (B ∪ C) = (A ∩ B) ∪ (A ∩ C), A ∪ (B ∩ C) = (A ∪ B) ∩ (A ∪ C) – DeMorgan's laws: (A ∩ B)^c = A^c ∪ B^c, (A ∪ B)^c = A^c ∩ B^c Elements of probability theory A probabilistic model includes • The sample space of an experiment – set of all possible outcomes – finite or infinite – discrete or continuous – possibly multi-dimensional • An event A is a set of outcomes – a subset of the sample space, A ⊂ Ω. -

Probability Theory Review 1 Basic Notions: Sample Space, Events

Fall 2018 Probability Theory Review Aleksandar Nikolov 1 Basic Notions: Sample Space, Events A probability space (Ω, P) consists of a finite or countable set Ω called the sample space, and the probability function P : Ω → R such that for all ω ∈ Ω, P(ω) ≥ 0 and Σ_{ω∈Ω} P(ω) = 1. We call an element ω ∈ Ω a sample point, or outcome, or simple event. You should think of a sample space as modeling some random “experiment”: Ω contains all possible outcomes of the experiment, and P(ω) gives the probability that we are going to get outcome ω. Note that we never speak of probabilities except in relation to a sample space. At this point we give a few examples: 1. Consider a random experiment in which we toss a single fair coin. The two possible outcomes are that the coin comes up heads (H) or tails (T), and each of these outcomes is equally likely. Then the probability space is (Ω, P), where Ω = {H, T} and P(H) = P(T) = 1/2. 2. Consider a random experiment in which we toss a single coin, but the coin lands heads with probability 2/3. Then, once again the sample space is Ω = {H, T} but the probability function is different: P(H) = 2/3, P(T) = 1/3. 3. Consider a random experiment in which we toss a fair coin three times, and each toss is independent of the others. The coin can come up heads all three times, or come up heads twice and then tails, etc. -

1 Probability Measure and Random Variables

1 Probability measure and random variables 1.1 Probability spaces and measures We will use the term experiment in a very general way to refer to some process that produces a random outcome. Definition 1. The set of possible outcomes is called the sample space. We will typically denote an individual outcome by ω and the sample space by Ω. Set notation: A ⊂ B, A is a subset of B, means that every element of A is also in B. The union A ∪ B of A and B is the set of all elements that are in A or B, including those that are in both. The intersection A ∩ B of A and B is the set of all elements that are in both of A and B. ∪_{j=1}^n Aj is the set of elements that are in at least one of the Aj. ∩_{j=1}^n Aj is the set of elements that are in all of the Aj. ∪_{j=1}^∞ Aj, ∩_{j=1}^∞ Aj are ... Two sets A and B are disjoint if A ∩ B = ∅. ∅ denotes the empty set, the set with no elements. Complements: The complement of an event A, denoted A^c, is the set of outcomes (in Ω) which are not in A. Note that the book writes it as Ω \ A. De Morgan’s laws: (A ∪ B)^c = A^c ∩ B^c, (A ∩ B)^c = A^c ∪ B^c, (∪_j Aj)^c = ∩_j Aj^c, (∩_j Aj)^c = ∪_j Aj^c (1) Definition 2. Let Ω be a sample space. A collection F of subsets of Ω is a σ-field if 1. -

CSE 21 Mathematics for Algorithm and System Analysis

CSE 21 Mathematics for Algorithm and System Analysis Unit 1: Basic Count and List Section 3: Set (cont’d) Section 4: Probability and Basic Counting CSE21: Lecture 4 Quiz Information • The first quiz will be in the first 15 minutes of the next class (Monday) at the same classroom. • You can use the textbook and notes during the quiz. • For all the questions, no final number is necessary; an arithmetic formula is enough. • Write down your analysis, e.g., applicable theorem(s)/rule(s). We will give partial credit if the analysis is correct but the result is wrong. Correction • For set U = {1, 2, 3, 4, 5}, A = {1, 2, 3}, B = {3, 4}, – Set Difference A − B = {1, 2}, B − A = {4} – Symmetric Difference: A ⊕ B = (A − B) ∪ (B − A) = {1, 2} ∪ {4} = {1, 2, 4} Card Hand Illustration • 5 card hand of full house: a pair and a triple • 5 card hand with two pairs Review: Binomial Coefficient • Binomial Coefficient: number of subsets of A of size (or cardinality) k: C(n, k) = (n choose k) = n! / (k! (n − k)!) Review: Deriving Recursions • How to construct the things of a given size by using the same type of things of a smaller size? • Recursion formula of binomial coefficient – C(0, 0) = 1, – C(0, k) = 0 for k ≠ 0 and – C(n, k) = C(n−1, k−1) + C(n−1, k) for n > 0; • It shows how recursion works and gives another way of calculating C(n, k) besides the formula C(n, k) = (n choose k) = n! / (k! (n − k)!) Learning Outcomes • By the end of this lesson, you should be able to – Calculate set partition number by recursion. -

Characterization of Palm Measures Via Bijective Point-Shifts

The Annals of Probability 2005, Vol. 33, No. 5, 1698–1715 DOI 10.1214/009117905000000224 © Institute of Mathematical Statistics, 2005 CHARACTERIZATION OF PALM MEASURES VIA BIJECTIVE POINT-SHIFTS BY MATTHIAS HEVELING AND GÜNTER LAST Universität Karlsruhe The paper considers a stationary point process N in R^d. A point-map picks a point of N in a measurable way. It is called bijective [Thorisson, H. (2000). Coupling, Stationarity, and Regeneration. Springer, New York] if it is generating (by suitable shifts) a bijective mapping on N. Mecke [Math. Nachr. 65 (1975) 335–344] proved that the Palm measure of N is point-stationary in the sense that it is invariant under bijective point-shifts. Our main result identifies this property as being characteristic for Palm measures. This generalizes a fundamental classical result for point processes on the line (see, e.g., Theorem 11.4 in [Kallenberg, O. (2002). Foundations of Modern Probability, 2nd ed. Springer, New York]) and solves a problem posed in [Thorisson, H. (2000). Coupling, Stationarity, and Regeneration. Springer, New York] and [Ferrari, P. A., Landim, C. and Thorisson, H. (2004). Ann. Inst. H. Poincaré Probab. Statist. 40 141–152]. Our second result guarantees the existence of bijective point-maps that have (almost surely with respect to the Palm measure of N) no fixed points. This answers another question asked by Thorisson. Our final result shows that there is a directed graph with vertex set N that is defined in a translation-invariant way and whose components are almost surely doubly infinite paths. This generalizes and complements one of the main results in [Holroyd, A. -

Probability Spaces Lecturer: Dr

EE5110: Probability Foundations for Electrical Engineers July-November 2015 Lecture 4: Probability Spaces Lecturer: Dr. Krishna Jagannathan Scribe: Jainam Doshi, Arjun Nadh and Ajay M 4.1 Introduction Just as a point is not defined in elementary geometry, probability theory begins with two entities that are not defined. These undefined entities are a Random Experiment and its Outcome. These two concepts are to be understood intuitively, as suggested by their respective English meanings. We use these undefined terms to define other entities. Definition 4.1 The Sample Space Ω of a random experiment is the set of all possible outcomes of a random experiment. An outcome (or elementary outcome) of the random experiment is usually denoted by ω. Thus, when a random experiment is performed, the outcome ω ∈ Ω is picked by the Goddess of Chance or Mother Nature or your favourite genie. Note that the sample space Ω can be finite or infinite. Indeed, depending on the cardinality of Ω, it can be classified as follows: 1. Finite sample space 2. Countably infinite sample space 3. Uncountable sample space It is imperative to note that for a given random experiment, its sample space is defined depending on what one is interested in observing as the outcome. We illustrate this using an example. Consider a person tossing a coin. This is a random experiment. Now consider the following three cases: • Suppose one is interested in knowing whether the toss produces a head or a tail, then the sample space is given by, Ω = {H, T}. Here, as there are only two possible outcomes, the sample space is said to be finite. -

Lecture Notes 4 36-705 1 Reminder: Convergence of Sequences 2

Lecture Notes 4 36-705 In today's lecture we discuss the convergence of random variables. At a high-level, our first few lectures focused on non-asymptotic properties of averages, i.e. the tail bounds we derived applied for any fixed sample size n. For the next few lectures we focus on asymptotic properties, i.e. we ask the question: what happens to the average of n i.i.d. random variables as n → ∞. Roughly, from a theoretical perspective the idea is that many expressions will considerably simplify in the asymptotic regime. Rather than have many different tail bounds, we will derive simple “universal results” that hold under extremely weak conditions. From a slightly more practical perspective, asymptotic theory is often useful to obtain approximate confidence intervals. 1 Reminder: convergence of sequences When we think of convergence of deterministic real numbers the corresponding notions are classical. Formally, we say that a sequence of real numbers a1, a2, ... converges to a fixed real number a if, for every positive number ε, there exists a natural number N(ε) such that for all n ≥ N(ε), |an − a| < ε. We call a the limit of the sequence and write lim_{n→∞} an = a. Our focus today will be on trying to develop analogues of this notion that apply to sequences of random variables. We will first give some definitions and then try to circle back to relate the definitions and discuss some examples. Throughout, we will focus on the setting where we have a sequence of random variables X1, ..., Xn and another random variable X, and would like to define what it means for the sequence to converge to X.