On Almost Sure Rates of Convergence for Sample Average Approximations

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Conditional Generalizations of Strong Laws Which Conclude the Partial Sums Converge Almost Surely

The Annals of Probability, 1982, Vol. 10, No. 3, 828-830. CONDITIONAL GENERALIZATIONS OF STRONG LAWS WHICH CONCLUDE THE PARTIAL SUMS CONVERGE ALMOST SURELY. By T. P. HILL, Georgia Institute of Technology. Suppose that for every independent sequence of random variables satisfying some hypothesis condition H, it follows that the partial sums converge almost surely. Then it is shown that for every arbitrarily-dependent sequence of random variables, the partial sums converge almost surely on the event where the conditional distributions (given the past) satisfy precisely the same condition H. Thus many strong laws for independent sequences may be immediately generalized into conditional results for arbitrarily-dependent sequences. 1. Introduction. If every sequence of independent random variables having property A has property B almost surely, does every arbitrarily-dependent sequence of random variables have property B almost surely on the set where the conditional distributions have property A? Not in general, but comparisons of the conditional Borel-Cantelli Lemmas, the conditional three-series theorem, and many martingale results with their independent counterparts suggest that the answer is affirmative in fairly general situations. The purpose of this note is to prove Theorem 1, which states, in part, that if "property B" is "the partial sums converge," then the answer is always affirmative, regardless of "property A." Thus many strong laws for independent sequences (even laws yet undiscovered) may be immediately generalized into conditional results for arbitrarily-dependent sequences. 2. Main Theorem. In this note, $\mathbf{Y} = (Y_1, Y_2, \ldots)$ is a sequence of random variables on a probability triple $(\Omega, \mathcal{A}, P)$, $S_n = Y_1 + Y_2 + \ldots$ -

Numerical Solution of Ordinary Differential Equations

NUMERICAL SOLUTION OF ORDINARY DIFFERENTIAL EQUATIONS. Kendall Atkinson, Weimin Han, David Stewart. University of Iowa, Iowa City, Iowa. A JOHN WILEY & SONS, INC., PUBLICATION. Copyright © 2009 by John Wiley & Sons, Inc. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, scanning, or otherwise, except as permitted under Section 107 or 108 of the 1976 United States Copyright Act, without either the prior written permission of the Publisher, or authorization through payment of the appropriate per-copy fee to the Copyright Clearance Center, Inc., 222 Rosewood Drive, Danvers, MA 01923, (978) 750-8400, fax (978) 646-8600, or on the web at www.copyright.com. Requests to the Publisher for permission should be addressed to the Permissions Department, John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ 07030, (201) 748-6011, fax (201) 748-6008. Limit of Liability/Disclaimer of Warranty: While the publisher and author have used their best efforts in preparing this book, they make no representations or warranties with respect to the accuracy or completeness of the contents of this book and specifically disclaim any implied warranties of merchantability or fitness for a particular purpose. No warranty may be created or extended by sales representatives or written sales materials. The advice and strategies contained herein may not be suitable for your situation. You should consult with a professional where appropriate. Neither the publisher nor author shall be liable for any loss of profit or any other commercial damages, including but not limited to special, incidental, consequential, or other damages. -

Polarization Fields and Phase Space Densities in Storage Rings: Stroboscopic Averaging and the Ergodic Theorem

Physica D 234 (2007) 131–149, www.elsevier.com/locate/physd. Polarization fields and phase space densities in storage rings: Stroboscopic averaging and the ergodic theorem. James A. Ellison, Klaus Heinemann. Department of Mathematics and Statistics, University of New Mexico, Albuquerque, NM 87131, United States. Received 1 May 2007; received in revised form 6 July 2007; accepted 9 July 2007. Available online 14 July 2007. Communicated by C.K.R.T. Jones. Abstract. A class of orbital motions with volume preserving flows and with vector fields periodic in the “time” parameter θ is defined. Spin motion coupled to the orbital dynamics is then defined, resulting in a class of spin–orbit motions which are important for storage rings. Phase space densities and polarization fields are introduced. It is important, in the context of storage rings, to understand the behavior of periodic polarization fields and phase space densities. Due to the 2π time periodicity of the spin–orbit equations of motion the polarization field, taken at a sequence of increasing time values θ, θ + 2π, θ + 4π, ..., gives a sequence of polarization fields, called the stroboscopic sequence. We show, by using the Birkhoff ergodic theorem, that under very general conditions the Cesàro averages of that sequence converge almost everywhere on phase space to a polarization field which is 2π-periodic in time. This fulfills the main aim of this paper in that it demonstrates that the tracking algorithm for stroboscopic averaging, encoded in the program SPRINT and used in the study of spin motion in storage rings, is mathematically well-founded. -

Approximations in Numerical Analysis, Cont'd

Jim Lambers, MAT 460/560, Fall Semester 2009-10, Lecture 7 Notes. These notes correspond to Section 1.3 in the text. Approximations in Numerical Analysis, cont'd. Convergence. Many algorithms in numerical analysis are iterative methods that produce a sequence $\{\alpha_n\}$ of approximate solutions which, ideally, converges to a limit $\alpha$ that is the exact solution as $n$ approaches $\infty$. Because we can only perform a finite number of iterations, we cannot obtain the exact solution, and we have introduced computational error. If our iterative method is properly designed, then this computational error will approach zero as $n$ approaches $\infty$. However, it is important that we obtain a sufficiently accurate approximate solution using as few computations as possible. Therefore, it is not practical to simply perform enough iterations so that the computational error is determined to be sufficiently small, because it is possible that another method may yield comparable accuracy with less computational effort. The total computational effort of an iterative method depends on both the effort per iteration and the number of iterations performed. Therefore, in order to determine the amount of computation that is needed to attain a given accuracy, we must be able to measure the error in $\alpha_n$ as a function of $n$. The more rapidly this function approaches zero as $n$ approaches $\infty$, the more rapidly the sequence of approximations $\{\alpha_n\}$ converges to the exact solution $\alpha$, and as a result, fewer iterations are needed to achieve a desired accuracy. We now introduce some terminology that will aid in the discussion of the convergence behavior of iterative methods. -
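To make "the error in $\alpha_n$ as a function of $n$" concrete, here is a minimal sketch that tabulates that error for one particular iterative method. The choice of method (the fixed-point iteration $x_{n+1} = \cos x_n$), the starting value, and the function name `fixed_point_errors` are illustrative assumptions, not taken from the lecture notes.

```python
import math

def fixed_point_errors(g, x0, alpha, n_iter):
    """Return the errors |alpha_n - alpha| for n = 0..n_iter, where alpha_{n+1} = g(alpha_n)."""
    errors = []
    x = x0
    for _ in range(n_iter + 1):
        errors.append(abs(x - alpha))
        x = g(x)
    return errors

# Assumption for illustration: use the fixed point of cos as the "exact solution".
# A reference value is obtained by iterating far past convergence.
alpha = 1.0
for _ in range(1000):
    alpha = math.cos(alpha)

errors = fixed_point_errors(math.cos, 1.0, alpha, 20)

# Print the error at a few iteration counts, plus the ratio of successive errors.
for n in (1, 5, 10, 15, 20):
    print(n, errors[n], errors[n] / errors[n - 1])
```

In this example the ratio of successive errors settles near $|\sin\alpha|$, so each iteration shrinks the error by a roughly constant factor; a method with a smaller factor would need fewer iterations for the same accuracy.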

On the Three Methods for Bounding the Rate of Convergence for Some Continuous-Time Markov Chains

On the Three Methods for Bounding the Rate of Convergence for some Continuous-time Markov Chains. Alexander Zeifman, Yacov Satin, Anastasia Kryukova, Rostislav Razumchik, Ksenia Kiseleva, Galina Shilova. arXiv:1911.04086v2 [math.PR], 24 Feb 2020. Abstract. Consideration is given to the three different analytical methods for the computation of upper bounds for the rate of convergence to the limiting regime of one specific class of (in)homogeneous continuous-time Markov chains. This class is particularly suited to describe evolutions of the total number of customers in (in)homogeneous M/M/S queueing systems with possibly state-dependent arrival and service intensities, batch arrivals and services. One of the methods is based on the logarithmic norm of a linear operator function; the other two rely on Lyapunov functions and differential inequalities, respectively. Less restrictive conditions (compared to those known from the literature) under which the methods are applicable are formulated. Two numerical examples are given. It is also shown that for homogeneous birth-death Markov processes defined on a finite state space with all transition rates being positive, all methods yield the same sharp upper bound. Affiliations: Alexander Zeifman: Vologda State University, Vologda, Russia; Institute of Informatics Problems, Federal Research Center “Computer Science and Control”, Russian Academy of Sciences, Moscow, Russia; Vologda Research Center of the Russian Academy of Sciences, Vologda, Russia. Yacov Satin: Vologda State University, Vologda, Russia. Anastasia Kryukova: Vologda State -

Convergence Rates of Markov Chains

CONVERGENCE RATES OF MARKOV CHAINS. STEVEN P. LALLEY. CONTENTS: 1. Orientation; 1.1. Example 1: Random-to-Top Card Shuffling; 1.2. Example 2: The Ehrenfest Urn Model of Diffusion; 1.3. Example 3: Metropolis-Hastings Algorithm; 1.4. Reversible Markov Chains; 1.5. Exercises: Reversibility, Symmetries and Stationary Distributions; 2. Coupling; 2.1. Coupling and Total Variation Distance; 2.2. Coupling Constructions and Convergence of Markov Chains; 2.3. Couplings for the Ehrenfest Urn and Random-to-Top Shuffling; 2.4. The Coupon Collector’s Problem; 2.5. Exercises; 2.6. Convergence Rates for the Ehrenfest Urn and Random-to-Top; 2.7. Exercises; 3. Spectral Analysis; 3.1. Transition Kernel of a Reversible Markov Chain; 3.2. Spectrum of the Ehrenfest random walk; 3.3. Rate of convergence of the Ehrenfest random walk. 1. ORIENTATION. Finite-state Markov chains have stationary distributions, and irreducible, aperiodic, finite-state Markov chains have unique stationary distributions. Furthermore, for any such chain the n-step transition probabilities converge to the stationary distribution. In various applications – especially in Markov chain Monte Carlo, where one runs a Markov chain on a computer to simulate a random draw from the stationary distribution – it is desirable to know how many steps it takes for the n-step transition probabilities to become close to the stationary probabilities. These notes will introduce several of the most basic and important techniques for studying this problem, coupling and spectral analysis. We will focus on two Markov chains for which the convergence rate is of particular interest: (1) the random-to-top shuffling model and (2) the Ehrenfest urn model. -
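The question of how many steps the n-step transition probabilities need to get close to the stationary distribution can be explored numerically on a small chain. The sketch below is only an illustration under stated assumptions: it uses a lazy version of the Ehrenfest urn (the chain holds its state with probability 1/2, which makes it aperiodic), a small urn with N = 10 balls, and the total variation distance as the measure of closeness. The laziness, the value of N, and all function names are choices made here, not details from the notes.

```python
from math import comb

def lazy_ehrenfest_matrix(N):
    """Transition matrix on states 0..N (number of balls in the first urn).

    Assumed dynamics: with probability 1/2 nothing happens; otherwise one of
    the N balls is chosen uniformly at random and moved to the other urn.
    """
    P = [[0.0] * (N + 1) for _ in range(N + 1)]
    for k in range(N + 1):
        P[k][k] += 0.5
        if k > 0:
            P[k][k - 1] += 0.5 * k / N
        if k < N:
            P[k][k + 1] += 0.5 * (N - k) / N
    return P

def step(dist, P):
    """Advance a probability row vector by one step of the chain."""
    size = len(dist)
    return [sum(dist[i] * P[i][j] for i in range(size)) for j in range(size)]

def total_variation(p, q):
    """Total variation distance between two distributions on the same finite set."""
    return 0.5 * sum(abs(a - b) for a, b in zip(p, q))

N = 10
P = lazy_ehrenfest_matrix(N)
pi = [comb(N, k) / 2**N for k in range(N + 1)]  # Binomial(N, 1/2) is stationary

dist = [1.0] + [0.0] * N  # start with the first urn empty
tv = []
for n in range(61):
    tv.append(total_variation(dist, pi))
    dist = step(dist, P)

for n in (0, 10, 20, 40, 60):
    print(n, tv[n])
```

The printed distances decrease with n, which is the behavior that coupling and spectral arguments aim to quantify.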

Lecture Notes 4 36-705

Lecture Notes 4, 36-705. In today's lecture we discuss the convergence of random variables. At a high level, our first few lectures focused on non-asymptotic properties of averages, i.e. the tail bounds we derived applied for any fixed sample size $n$. For the next few lectures we focus on asymptotic properties, i.e. we ask the question: what happens to the average of $n$ i.i.d. random variables as $n \to \infty$. Roughly, from a theoretical perspective the idea is that many expressions will considerably simplify in the asymptotic regime. Rather than have many different tail bounds, we will derive simple "universal results" that hold under extremely weak conditions. From a slightly more practical perspective, asymptotic theory is often useful to obtain approximate confidence intervals. 1 Reminder: convergence of sequences. When we think of convergence of deterministic real numbers the corresponding notions are classical. Formally, we say that a sequence of real numbers $a_1, a_2, \ldots$ converges to a fixed real number $a$ if, for every positive number $\epsilon$, there exists a natural number $N(\epsilon)$ such that for all $n \ge N(\epsilon)$, $|a_n - a| < \epsilon$. We call $a$ the limit of the sequence and write $\lim_{n \to \infty} a_n = a$. Our focus today will be on trying to develop analogues of this notion that apply to sequences of random variables. We will first give some definitions and then try to circle back to relate the definitions and discuss some examples. Throughout, we will focus on the setting where we have a sequence of random variables $X_1, \ldots, X_n$ and another random variable $X$, and would like to define what it means for the sequence to converge to $X$. -
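As a small worked instance of the $\epsilon$–$N(\epsilon)$ definition, the sketch below takes the sequence $a_n = 1/n$ with limit $a = 0$ and computes an explicit $N(\epsilon)$. The sequence and the helper name `n_of_eps` are assumptions chosen for illustration; exact rational arithmetic is used so the strict inequality is checked without rounding.

```python
import math
from fractions import Fraction

def n_of_eps(eps):
    """Smallest N such that 1/n < eps for every n >= N.

    Valid for a_n = 1/n because the sequence is decreasing, so it is
    enough to find the first index at which 1/n drops below eps.
    """
    return math.floor(1 / eps) + 1

for eps in (Fraction(1, 10), Fraction(1, 100), Fraction(3, 1000)):
    N = n_of_eps(eps)
    # Check the definition on a window of indices starting at N,
    # and check that N - 1 does not already work.
    assert all(abs(Fraction(1, n) - 0) < eps for n in range(N, N + 1000))
    assert not (Fraction(1, N - 1) < eps)
    print(eps, N)
```

Smaller values of $\epsilon$ force larger $N(\epsilon)$, which is exactly the dependence the definition allows.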

Convergence Rates for the Quantum Central Limit Theorem

Commun. Math. Phys. 383, 223–279 (2021), Communications in Mathematical Physics. Digital Object Identifier (DOI) https://doi.org/10.1007/s00220-021-03988-1. Convergence Rates for the Quantum Central Limit Theorem. Simon Becker (1), Nilanjana Datta (1), Ludovico Lami (2, 3), Cambyse Rouzé (4). (1) Department of Applied Mathematics and Theoretical Physics, Centre for Mathematical Sciences, University of Cambridge, Cambridge CB3 0WA, UK. (2) School of Mathematical Sciences and Centre for the Mathematics and Theoretical Physics of Quantum Non-Equilibrium Systems, University of Nottingham, University Park, Nottingham NG7 2RD, UK. (3) Institute of Theoretical Physics and IQST, Universität Ulm, Albert-Einstein-Allee, 89069 Ulm, Germany. (4) Zentrum Mathematik, Technische Universität München, 85748 Garching, Germany. Received: 20 February 2020 / Accepted: 15 January 2021. Published online: 15 February 2021 – © The Author(s) 2021. Contents: 1. Introduction; 2. Notation and Definitions; 2.1 Mathematical notation; 2.2 Definitions; 2.2.1 Quantum information with continuous variables; 2.2.2 Phase space formalism; 2.3 Moments; 2.4 Quantum convolution; 3. Cushen and Hudson’s Quantum Central Limit Theorem; 4. Main Results; 4.1 Quantitative bounds in the QCLT; 4.2 Optimality of convergence rates and necessity of finite second moments in the QCLT; 4.3 Applications to capacity of cascades of beam splitters with non-Gaussian environment; 4.4 New results on quantum characteristic functions; 5. New Results on Quantum Characteristic Functions: Proofs -

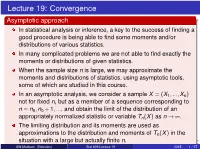

Stat 609: Mathematical Statistics Lecture 19

UW-Madison (Statistics), Stat 609, Lecture 19, 2015. Lecture 19: Convergence. Asymptotic approach. In statistical analysis or inference, a key to the success of finding a good procedure is being able to find some moments and/or distributions of various statistics. In many complicated problems we are not able to find exactly the moments or distributions of given statistics. When the sample size $n$ is large, we may approximate the moments and distributions of statistics, using asymptotic tools, some of which are studied in this course. In an asymptotic analysis, we consider a sample $X = (X_1, \ldots, X_n)$ not for fixed $n$, but as a member of a sequence corresponding to $n = n_0, n_0 + 1, \ldots$, and obtain the limit of the distribution of an appropriately normalized statistic or variable $T_n(X)$ as $n \to \infty$. The limiting distribution and its moments are used as approximations to the distribution and moments of $T_n(X)$ in the situation with a large but actually finite $n$. This leads to some asymptotic statistical procedures and asymptotic criteria for assessing their performances. The asymptotic approach is not only applied to the situation where no exact method (the approach considering a fixed $n$) is available, but also used to provide a procedure simpler (e.g., in terms of computation) than that produced by the exact approach. In addition to providing more theoretical results and/or simpler procedures, the asymptotic approach requires less stringent mathematical assumptions than does the exact approach. Definition 5.5.1 (convergence in probability). A sequence of random variables $Z_n$, $n = 1, 2, \ldots$, converges in probability to a random variable $Z$ iff for every $\epsilon > 0$, $\lim_{n \to \infty} P(|Z_n - Z| \ge \epsilon) = 0$. A sequence of random vectors $Z_n$ converges in probability to a random vector $Z$ iff each component of $Z_n$ converges in probability to the corresponding component of $Z$. -
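Definition 5.5.1 can be probed by simulation. The following sketch estimates $P(|Z_n - Z| \ge \epsilon)$ by Monte Carlo for one assumed example: $Z_n$ is the mean of $n$ independent Uniform(0, 1) draws and $Z$ is the constant 0.5. The example distribution, the value of $\epsilon$, the number of trials, and the function name are illustrative choices, not part of the lecture.

```python
import random

def estimate_deviation_probability(n, eps, trials, rng):
    """Monte Carlo estimate of P(|Z_n - 0.5| >= eps), Z_n = mean of n Uniform(0,1) draws."""
    count = 0
    for _ in range(trials):
        mean = sum(rng.random() for _ in range(n)) / n
        if abs(mean - 0.5) >= eps:
            count += 1
    return count / trials

rng = random.Random(0)  # fixed seed so the run is reproducible
eps = 0.05
probs = {n: estimate_deviation_probability(n, eps, 2000, rng) for n in (10, 100, 1000)}

for n, p in probs.items():
    print(n, p)
```

The estimated probabilities shrink toward zero as $n$ grows, which is what convergence in probability of the sample mean to 0.5 requires for this fixed $\epsilon$. A simulation only suggests the limit; it does not prove it.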

High Order Regularization of Dirac-Delta Sources in Two Space Dimensions

INTERNSHIP REPORT. High order regularization of Dirac-delta sources in two space dimensions. Author: Wouter DE VRIES (s1010239). Supervisors: Prof. Guustaaf JACOBS, Jean-Piero SUAREZ. HOST INSTITUTION: San Diego State University, San Diego (CA), USA, Department of Aerospace Engineering and Engineering Mechanics, Computational Fluid Dynamics laboratory, Prof. G.B. JACOBS. HOME INSTITUTION: University of Twente, Enschede, Netherlands, Faculty of Engineering Technology, Group of Engineering Fluid Dynamics, Prof. H.W.M. HOEIJMAKERS. March 4, 2015. Abstract. In this report the regularization of singular sources appearing in hyperbolic conservation laws is studied. Dirac-delta sources represent small particles which appear as source terms in, for example, particle-laden flow. The Dirac-delta sources are regularized with a high order accurate polynomial based regularization technique. Instead of regularizing a single isolated particle, the aim is to employ the regularization technique for a cloud of particles distributed on a non-uniform grid. By assuming that the resulting force exerted by a cloud of particles can be represented by a continuous function, the influence of the source term can be approximated by the convolution of the continuous function and the regularized Dirac-delta function. It is shown that in one dimension the distribution of the singular sources, represented by the convolution of the continuous function and Dirac-delta function, can be approximated with high accuracy using the regularization technique. The method is extended to two dimensions and high order approximations of the source term are obtained as well. The resulting approximations of the singular sources are interpolated using a polynomial interpolation in order to regain a continuous distribution representing the force exerted by the cloud of particles. -

Strongly Mixed Random Errors in Mann's Iteration Algorithm for a Contractive Real Function

Pré-Publicações do Departamento de Matemática, Universidade de Coimbra, Preprint Number 17–29. STRONGLY MIXED RANDOM ERRORS IN MANN'S ITERATION ALGORITHM FOR A CONTRACTIVE REAL FUNCTION. HASSINA ARROUDJ, IDIR ARAB AND ABDELNASSER DAHMANI. Abstract: This work deals with Mann's stochastic iteration algorithm under α-mixing random errors. We establish Fuk-Nagaev inequalities that enable us to prove almost complete convergence together with its corresponding rate of convergence. Moreover, these inequalities give us the possibility of constructing a confidence interval for the unique fixed point. Finally, to check the feasibility and validity of our theoretical results, we consider some numerical examples, namely a classical example from astronomy. Keywords: Fixed point; Fuk-Nagaev's inequalities; Mann's iteration algorithm; α-mixing; Rate of convergence; confidence domain. 1. Introduction. In many mathematical problems arising from various domains, the existence of a solution is the same as the existence of a fixed point by some appropriate transformation of the problem. The best-known problem in that framework is root existence, which can be tackled easily as the existence of a fixed point and vice versa. Therefore, fixed point theory is of paramount importance in engineering sciences and many areas of mathematics. Fixed point theory provides conditions for the existence and uniqueness of solutions. Over the last decades, that theory has been revealed as one of the most significant tools in the study of nonlinear problems. In particular, in many fields, equilibria or stability are fundamental concepts that can be described in terms of fixed points. -
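For orientation, a Mann-type iteration with noisy function evaluations might look like the sketch below. Everything specific in it is an assumption made for illustration: the update $x_{n+1} = (1 - a_n)x_n + a_n\,(f(x_n) + \xi_n)$ is the textbook Mann scheme with an additive error term, the step sizes are $a_n = 1/(n+1)$, the contraction is $f(x) = 0.5\cos x$, and the errors $\xi_n$ are independent uniform draws rather than the α-mixing errors studied in the preprint. It is not the authors' algorithm or their numerical example.

```python
import math
import random

def mann_iteration_with_noise(f, x0, n_iter, noise_scale, rng):
    """Run x_{n+1} = (1 - a_n) x_n + a_n (f(x_n) + xi_n) with a_n = 1/(n+1).

    xi_n is drawn independently from Uniform(-noise_scale, noise_scale);
    independence is a simplifying assumption of this sketch.
    """
    x = x0
    for n in range(1, n_iter + 1):
        a_n = 1.0 / (n + 1)
        noisy_value = f(x) + rng.uniform(-noise_scale, noise_scale)
        x = (1.0 - a_n) * x + a_n * noisy_value
    return x

def f(x):
    # Hypothetical contractive map: |f'(x)| <= 0.5 < 1 for all real x.
    return 0.5 * math.cos(x)

rng = random.Random(1)  # fixed seed for reproducibility
x_hat = mann_iteration_with_noise(f, 0.0, 20000, 0.1, rng)

# The residual |x - f(x)| measures how close x_hat is to being a fixed point.
print(x_hat, abs(x_hat - f(x_hat)))
```

The shrinking step sizes average out the random errors, so the final iterate has a small fixed-point residual even though each evaluation of `f` is perturbed.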

1 Probability Spaces

BROWN UNIVERSITY. Math 1610 Probability Notes. Samuel S. Watson. Last updated: December 18, 2015. Please do not hesitate to notify me about any mistakes you find in these notes. My advice is to refresh your local copy of this document frequently, as I will be updating it throughout the semester. 1 Probability Spaces. We model random phenomena with a probability space, which consists of an arbitrary set $\Omega$, a collection $\mathcal{F}$ of subsets of $\Omega$, and a map $P : \mathcal{F} \to [0, 1]$, where $\mathcal{F}$ and $P$ satisfy certain conditions detailed below. An element $\omega \in \Omega$ is called an outcome, an element $E \in \mathcal{F}$ is called an event, and we interpret $P(E)$ as the probability of the event $E$. To connect this setup to the way you usually think about probability, regard $\omega$ as having been randomly selected from $\Omega$ in such a way that, for each event $E$, the probability that $\omega$ is in $E$ (in other words, that $E$ happens) is equal to $P(E)$. If $E$ and $F$ are events, then the event “$E$ and $F$” corresponds to the set $\{\omega : \omega \in E \text{ and } \omega \in F\}$, abbreviated as $E \cap F$. Similarly, $E \cup F$ is the event that $E$ happens or $F$ happens, and $\Omega \setminus E$ is the event that $E$ does not happen. We refer to $\Omega \setminus E$ as the complement of $E$, and sometimes denote it by $E^c$. To ensure that we can perform these basic operations, we require that $\mathcal{F}$ is closed under them. In other words, $\Omega \setminus E$ must be an event whenever $E$ is an event (that is, $\Omega \setminus E \in \mathcal{F}$ whenever $E \in \mathcal{F}$).
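A finite example may help fix the vocabulary. The sketch below models one roll of a fair six-sided die, with outcomes as integers, events as sets of outcomes, and probability as the fraction of outcomes in the event. The die, the two sample events, and the names `omega` and `prob` are assumptions introduced here for illustration; taking every subset as an event is only reasonable because the outcome set is finite.

```python
from fractions import Fraction

# Outcome set for one roll of a fair die (illustrative assumption).
omega = frozenset(range(1, 7))

def prob(event):
    """Uniform probability on omega: size of the event divided by size of omega."""
    assert event <= omega, "an event must be a subset of omega"
    return Fraction(len(event), len(omega))

E = frozenset({2, 4, 6})  # "the roll is even"
F = frozenset({4, 5, 6})  # "the roll is at least 4"

E_and_F = E & F           # intersection: both E and F happen
E_or_F = E | F            # union: E happens or F happens
E_complement = omega - E  # complement: E does not happen

print(prob(E_and_F), prob(E_or_F), prob(E_complement))
```

Each operation returns another subset of `omega`, so the results are again events to which `prob` can be applied, which is the closure property the notes require.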