T2.Pdf (7.330Mb)

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Dualcad : Intégrer La Réalité Augmentée Et Les Interfaces D'ordinateurs De Bureau Dans Un Contexte De Conception Assistée Par Ordinateur

ÉCOLE DE TECHNOLOGIE SUPÉRIEURE, UNIVERSITÉ DU QUÉBEC. Thesis presented to the École de technologie supérieure in partial fulfillment of the requirements for the master's degree with thesis in software engineering (M. Sc. A.), by Alexandre MILLETTE. DUALCAD : INTÉGRER LA RÉALITÉ AUGMENTÉE ET LES INTERFACES D'ORDINATEURS DE BUREAU DANS UN CONTEXTE DE CONCEPTION ASSISTÉE PAR ORDINATEUR (DualCAD: Integrating Augmented Reality and Desktop Computer Interfaces in a Computer-Aided Design Context). Montréal, April 27, 2016. Alexandre Millette, 2016. This Creative Commons license means that it is permitted to distribute, print, or save to another medium part or all of this work, provided that the author is credited, that these uses are for non-commercial purposes, and that the content of the work has not been modified. JURY PRESENTATION. This thesis was evaluated by a jury composed of: Mr. Michael J. McGuffin, thesis supervisor, Department of Software and IT Engineering, École de technologie supérieure; Mr. Luc Duong, jury president, Department of Software and IT Engineering, École de technologie supérieure; Mr. David Labbé, jury member, Department of Software and IT Engineering, École de technologie supérieure. It was defended before a jury and the public on April 6, 2016 at the École de technologie supérieure. FOREWORD. When the time came for me to choose whether I wanted to do a master's degree with or without a thesis, Augmented Reality (AR) technologies were still embryonic projects, undertaken by companies known for their innovations, such as Google with its Google Glasses, or by emerging companies, such as META with its Spaceglasses. -

A Review and Selective Analysis of 3D Display Technologies for Anatomical Education

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2018 A Review and Selective Analysis of 3D Display Technologies for Anatomical Education Matthew Hackett University of Central Florida Part of the Anatomy Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Hackett, Matthew, "A Review and Selective Analysis of 3D Display Technologies for Anatomical Education" (2018). Electronic Theses and Dissertations, 2004-2019. 6408. https://stars.library.ucf.edu/etd/6408 A REVIEW AND SELECTIVE ANALYSIS OF 3D DISPLAY TECHNOLOGIES FOR ANATOMICAL EDUCATION by: MATTHEW G. HACKETT BSE University of Central Florida 2007, MSE University of Florida 2009, MS University of Central Florida 2012 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Modeling and Simulation program in the College of Engineering and Computer Science at the University of Central Florida Orlando, Florida Summer Term 2018 Major Professor: Michael Proctor ©2018 Matthew Hackett ii ABSTRACT The study of anatomy is complex and difficult for students in both graduate and undergraduate education. Researchers have attempted to improve anatomical education with the inclusion of three-dimensional visualization, with the prevailing finding that 3D is beneficial to students. However, there is limited research on the relative efficacy of different 3D modalities, including monoscopic, stereoscopic, and autostereoscopic displays. -

Multi-User Display Systems, Compendium of the State of the Art

Multi-user display systems, Compendium of the State of the Art. Juan Sebastian Munoz-Arango; Research Assistant EAC - UA Little Rock; Little Rock, AR, Dirk Reiners; Research Scientist EAC - UA Little Rock; Little Rock, AR Carolina Cruz-Neira; Research Director EAC - UA Little Rock; Little Rock, AR Abstract One of the main shortcomings of most Virtual Reality display systems, be it head-mounted displays or projection based systems like CAVEs, is that they can only provide the correct perspective to a single user. This is a significant limitation that reduces the applicability of Virtual Reality approaches for most kinds of group collaborative work, which is becoming more and more important in many disciplines. Different approaches have been tried to present multiple images to different users at the same time, like optical barriers, optical filtering, optical routing, time multiplex, volumetric displays and lightfield displays among others. This paper describes, discusses and compares different approaches that have been developed and develop an evaluation approach to identify […] categories: Spatial Barriers, Optical Filtering, Optical Routing and Time Multiplexing. In addition to these categories it is worth mentioning volumetric displays and light field displays, which are relevant to the multi user viewing topic even though they achieve multi user perspective through a totally different approach. Spatial barriers Spatial barriers take advantage of the display’s physical configuration and user placement to display users’ specific views. Essentially they form a mechanical barrier that lets each user see a subset of the underlying displays’ pixels. The spatial barriers approach has been around for a while. -

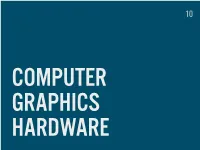

GRAPHICS HARDWARE WHAT’S in STORE GRAPHICS CARDS GRAPHICS CARDS DEDICATED (EXTERNAL) High Performance Power Consumption Heat Emission

10 Computer Graphics Hardware. What's in store. Graphics cards. Dedicated (external): high performance, power consumption, heat emission. Integrated (internal): low power, low heat; mobile devices, on board; integrated with CPU. Architecture: vertex shaders (transform geometry); geometry shaders (create new geometry); pixel shaders (colors, shadows…); unified shaders (one type of processor for all operations; GTX 680 = 1536 unified shader cores); CUDA, GPGPU (general, not only graphics, performed on GPU; parallelism: HW video encoding, numerical computations). Connectors: VGA (analog); DVI (digital + analog); HDMI (digital; miniHDMI, microHDMI); DisplayPort (digital, analog; Mini DisplayPort (Apple)). Manufacturers: NVIDIA (GeForce, Quadro, Tesla); AMD, formerly ATI (Radeon, FirePro); Intel (integrated in Core CPUs); PowerVR; ARM Mali. Displays. Cathode ray tube (CRT): analog TVs, old computer monitors; medium size displays; light emission (black is black); flicker (75-100 Hz for work). http://www.bthompson.net/ Plasma (PDP, Neo-PDP): not suitable for computer displays; large screens (30”+); light emission (black is black); high energy demands; view angle doesn’t matter; image retention (reduced for Neo-PDP). Liquid crystal display (LCD): crystals block light from back source (black is hard to achieve); different technologies (TN, IPS, MVA, …); low energy consumption; large screens; view angles issue; LCD LED confusion. OLED (organic light-emitting diode): light emission; good contrast and colors; expensive production (small screens so far); low energy; potentially flexible; view angle -

The Varrier™ Autostereoscopic Virtual Reality Display

The Varrier™ Autostereoscopic Virtual Reality Display Daniel J. Sandin, Todd Margolis, Jinghua Ge, Javier Girado, Tom Peterka, Thomas A. DeFanti Electronic Visualization Laboratory University of Illinois at Chicago [email protected] Abstract Virtual reality (VR) has long been hampered by the gear needed to make the experience possible; specifically, stereo glasses and tracking devices. Autostereoscopic display devices are gaining popularity by freeing the user from stereo glasses, however few qualify as VR displays. The Electronic Visualization Laboratory (EVL) at the University of Illinois at Chicago (UIC) has designed and produced a large scale, high resolution head-tracked barrier-strip autostereoscopic display system that produces a VR immersive experience without requiring the user to wear any encumbrances. The resulting system, called Varrier, is a passive parallax barrier 35-panel tiled display that produces a wide field of view, head-tracked VR experience. […] In most other lenticular and barrier strip implementations, stereo is achieved by sorting image slices in integer (image) coordinates. Moreover, many autostereo systems compress scene depth in the z direction to improve image quality but Varrier is orthoscopic, which means that all 3 dimensions are displayed in the same scale. Besides head-tracked perspective interaction, the user can further interact with VR applications through hand-held devices such as a 3d wand, for example to control navigation through virtual worlds. There are four main contributions of this paper. New virtual barrier algorithms are presented that enhance image quality and lower color shifts by operating at sub-pixel resolution. Automated camera-based registration of the physical and virtual barrier strip […] -

Photo-Aligned Ferroelectric Liquid Crystal Devices with Novel Electro-Optic Characteristics

crystals Review Photo-Aligned Ferroelectric Liquid Crystal Devices with Novel Electro-Optic Characteristics Vladimir Chigrinov 1,2,* , Qi Guo 3 and Aleksey Kudreyko 4 1 School of Physics and Optoelectronic Engineering, Foshan University, Foshan 528325, China 2 Department of Theoretical Physics, Moscow Region State University, 141014 Mytishi, Russia 3 School of Instrumentation and Optoelectronic Engineering, Beihang University, Beijing 100191, China; [email protected] 4 Department of Medical Physics and Informatics, Bashkir State Medical University, 450008 Ufa, Russia; [email protected] * Correspondence: [email protected] Received: 18 May 2020; Accepted: 19 June 2020; Published: 1 July 2020 Abstract: This paper examines different applications of ferroelectric liquid crystal devices based on photo-alignment. Successful application of the photo-alignment technique is considered to be a critical breakthrough. A variety of display and photonic devices with azo dye aligned ferroelectric liquid crystals is presented: smart glasses, liquid crystal Pancharatnam–Berry phase optical elements, 2D/3D switchable lenses, and laser therapy devices. Comparison of electro-optical behavior of ferroelectric liquid crystals is described considering the performance of devices. This paper facilitates the optimization of device design, and broadens the possible applications in the display and photonic area. Keywords: photo-alignment of ferroelectric liquid crystals; electro-optic modes; new devices; imaging technologies; displays; photonics 1. Introduction The appearance of portable and wearable devices has led to an ever-increasing demand for next-generation displays with ultra-high resolution, light weight, low power consumption, and high efficiency [1,2]. However, the response time of nematic liquid crystals (LCs) is too slow for fast-switching devices. -

Dynallax: Dynamic Parallax Barrier Autostereoscopic Display

Dynallax: Dynamic Parallax Barrier Autostereoscopic Display BY THOMAS PETERKA B.S., University of Illinois at Chicago, 1987 M.S., University of Illinois at Chicago, 2003 PH.D. DISSERTATION Submitted as partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate College of the University of Illinois at Chicago, 2007 Chicago, Illinois This thesis is dedicated to Melinda, Chris, and Amanda, who always believed in me, and whose confidence helped me believe in myself. Graduate school would never have been possible without their love and support, and for this I will always be grateful. ACKNOWLEDGEMENTS I would like to express my sincere gratitude to the following individuals for their assistance and support. • Andrew Johnson of the Electronic Visualization Laboratory (EVL), University of Illinois at Chicago (UIC) for advising me during my Ph.D. studies, for serving on the dissertation committee and for providing valuable feedback and critical reviews of my writing • Dan Sandin of EVL, UIC for introducing me to autostereoscopy, for providing the opportunity to work with him in this exciting field, for serving on the dissertation committee, and for many years of dedication to the advancement of VR as both an art and a science • Jason Leigh, EVL, UIC for serving on the dissertation committee and providing guidance, support, insight, and funding for my work • Tom DeFanti, EVL, UIC, UCSD, for making time to serve on my committee, and for his ongoing support and friendship for many years at EVL • Jurgen Schulze, UCSD, for taking the time and effort to travel from San Diego to Chicago to serve on the committee and for his interest and willingness to participate TP -

Multi-User Display Systems, Compendium of the State of the Art

https://doi.org/10.2352/ISSN.2470-1173.2019.3.SDA-645 © 2019, Society for Imaging Science and Technology Multi-user display systems, Compendium of the State of the Art. Juan Sebastian Munoz-Arango; Research Assistant EAC - UA Little Rock; Little Rock, AR, Dirk Reiners; Research Scientist EAC - UA Little Rock; Little Rock, AR Carolina Cruz-Neira; Research Director EAC - UA Little Rock; Little Rock, AR Abstract One of the main shortcomings of most Virtual Reality display systems, be it head-mounted displays or projection based systems like CAVEs, is that they can only provide the correct perspective to a single user. This is a significant limitation that reduces the applicability of Virtual Reality approaches for most kinds of group collaborative work, which is becoming more and more important in many disciplines. Different approaches have been tried to present multiple images to different users at the same time, like optical barriers, optical filtering, optical routing, time multiplex, volumetric displays and lightfield displays among others. This paper describes, discusses and compares different approaches that have […] categories: Spatial Barriers, Optical Filtering, Optical Routing and Time Multiplexing. In addition to these categories it is worth mentioning volumetric displays and light field displays, which are relevant to the multi user viewing topic even though they achieve multi user perspective through a totally different approach. Spatial barriers Spatial barriers take advantage of the display’s physical configuration and user placement to display users’ specific views. Essentially they form a mechanical barrier that lets each user see a subset of the underlying displays’ pixels. -

Principles and Evaluation of Autostereoscopic Photogrammetric Measurement

Principles and Evaluation of Autostereoscopic Photogrammetric Measurement Jie Shan, Chiung-Shiuan Fu, Bin Li, James Bethel, Jeffrey Kretsch, and Edward Mikhail Abstract Stereoscopic perception is a basic requirement for photogrammetric 3D measurement and accurate geospatial data collection. Ordinary stereoscopic techniques require operators wearing glasses or using eyepieces for interpretation and measurement. However, the recent emerging autostereoscopic technology makes it possible to eliminate this requirement. This paper studies the principles and implementation of autostereoscopic photogrammetric measurement and evaluates its performance. We first describe the principles and properties of the parallax barrier-based autostereoscopic display used in this study. As an important metric property, we quantitatively present the autostereoscopic geometry, including viewing zones and the boundary of a viewer’s movement for autostereoscopic measurement. A toolkit AUTO3D is developed that has common photogrammetric functions. […] display alternating pairs of remote sensing images. Usery (2003) discusses the critical issues such as color, resolution, and refresh rate for autostereoscopic display of geospatial data. Despite these visualization-oriented studies, properties and performance of autostereoscopic measurement are unknown to the photogrammetric community. In this paper, we focus on the metric properties of the autostereoscopic display and evaluate its performance for photogrammetric measurement. The paper starts with a brief introduction to the principles of the autostereoscopic technology. As an important metric property, we quantitatively show the autostereoscopic geometry, including viewing zones and the boundary of operator’s movement for autostereoscopic perception. To carry out autostereoscopic measurement and evaluate its performance, a photogrammetric toolkit, AUTO3D, is developed based on a DTI (Dimension Technologies, Inc., […] -

Rendering and Display for Multi-Viewer Tele-Immersion

Rendering and Display for Multi-Viewer Tele-Immersion Andrew R. Nashel A dissertation submitted to the faculty of the University of North Carolina at Chapel Hill in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Department of Computer Science. Chapel Hill 2010 Approved by: Henry Fuchs, Advisor Ketan Mayer-Patel, Reader Leonard McMillan, Reader Leandra Vicci, Reader Greg Welch, Reader © 2010 Andrew R. Nashel ALL RIGHTS RESERVED Abstract Andrew R. Nashel: Rendering and Display for Multi-Viewer Tele-Immersion. (Under the direction of Henry Fuchs.) Video teleconferencing systems are widely deployed for business, education and personal use to enable face-to-face communication between people at distant sites. Unfortunately, the two-dimensional video of conventional systems does not correctly convey several important non-verbal communication cues such as eye contact and gaze awareness. Tele-immersion refers to technologies aimed at providing distant users with a more compelling sense of remote presence than conventional video teleconferencing. This dissertation is concerned with the particular challenges of interaction between groups of users at remote sites. The problems of video teleconferencing are exacerbated when groups of people communicate. Ideally, a group tele-immersion system would display views of the remote site at the right size and location, from the correct viewpoint for each local user. However, it is not practical to put a camera in every possible eye location, and it is not clear how to provide each viewer with correct and unique imagery. I introduce rendering techniques and multi-view display designs to support eye contact and gaze awareness between groups of viewers at two distant sites. -

psHolix Company Presentation 10.2019

Pseudo-Holographic Display Technology developed & patented by […] Company Presentation. Company overview • psHolix AG was founded in March 2015 in Basel, Switzerland • Dr Naske, the founder of the technology and CTO, has injected 25 patents • psHolix now owns more than 57 patents, 2 are in production • More than 2 million USD have been invested so far • Dr Naske worked 10 years on this project before it was merged into psHolix AG • Demo products are ready (8K display, laptop, tablet, mobile phones, VR headsets) • Based on the cutting-edge technology behind the eyewear-less 3D displays, the company has built the following technologies: Virtual Reality (VR, AR, MR) headset software; autonomous car software. The next AI-driven display technology • 12 years of advanced research in autostereoscopy • 1,000 times more perspectives than the competition and therefore better natural perception • Highest fidelity in spatial viewing experience for everyone everywhere (no transition zones, multi users, no need for head tracking) • 2DTV backwards compatible with highest resolution • No headache and eye strain when watching 3D content over a longer period of time • Compatible with all content (games, TV, DVD, YouTube, etc.) • Compatible with all technologies (HDR, OLED, LCD, Retina, etc.) • Technology can be applied to all resolutions and sizes • 2D and 3D in a window at the same time is possible without any quality loss. Benefits of psHolix display technology 1. Experience high quality 3D without glasses 2. Multiviewer solution, everyone can see the same spatial content in front of the screen 3. -

IDW ’17: The 24th International Display Workshops

IDW ’17 THE 24TH INTERNATIONAL DISPLAY WORKSHOPS Special Topics of Interest on • Oxide-Semiconductor TFT • Lighting and Quantum Dot Technologies • AR/VR and Hyper Reality • Automotive Displays • Wide Color Gamut and Color Reproduction Topical Session on • User Experience and Cognitive Engineering • Haptics Technologies Workshops on • LC Science and Technologies (LCT) • Active Matrix Displays (AMD) • FPD Manufacturing, Materials and Components (FMC) • Inorganic Emissive Display and Phosphors (PH) • OLED Displays and Related Technologies (OLED) • 3D/Hyper-Realistic Displays and Systems (3D) • Applied Vision and Human Factors (VHF) • Projection and Large-Area Displays and Their Components (PRJ) • Electronic Paper (EP) • MEMS and Emerging Technologies for Future Displays and Devices (MEET) • Display Electronic Systems (DES) • Flexible Electronics (FLX) • Touch Panels and Input Technologies (INP) Advance Program Sendai International Center, Sendai, Japan, December 6 – 8, 2017 CONTENTS: Program Highlights; General Information; Travel Information; Plenary Sessions, Wednesday, December 6: IDW ’17 Opening; IDW ’17 Keynote Addresses; Special Topics of Interest on Oxide-Semiconductor