Rendering and Display for Multi-Viewer Tele-Immersion

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

XF Camera System User Manual

XF Camera System User Manual. Table of Contents: Primary parts of the XF Camera System; Lens, XF Camera Body, IQ Digital Back; Dials, Buttons and Touch Screen Interface; Lens, XF Camera Body, IQ Digital Back; Assembling the XF Camera System; Digital Back and camera body modularity; Readying the camera system; General advice for using the battery and charger; Navigating the XF Camera System; XF Camera Body Controls; Customizing buttons and dials; OneTouch User Interface Flow Diagram; XF Camera Menu Overview; IQ Digital Back Menu Overview; OneTouch User Interface Overview; XF Camera Body Navigation; Top Touch Screen Display; XF Tools on the Top Touch Screen; IQ Digital Back Navigation; IQ Digital Back Navigation Shortcuts; IQ Digital Back Viewing images; IQ Digital Back Contextual Menu; Overview of Contextual [...]; XF Camera System Capture Modes; XF Camera System Exposure Modes; Exposure Compensation; Long Exposure; Electronic Shutter (ES); Live View; Live View on the LCD, Capture One or HDMI monitor; XF Custom Presets and System Backup; Flash Photography; Profoto Remote Tool; Triggering a Capture with the Profoto Remote; Flash Analysis Tool; Rear Curtain sync and Trim; Shutter Mechanisms and Flash Synchronization range; XF Camera System Lenses; Capture One Pro; Capture One Tethered Use; Built-In WiFi and Capture Pilot; A-series Camera System

Dualcad : Intégrer La Réalité Augmentée Et Les Interfaces D'ordinateurs De Bureau Dans Un Contexte De Conception Assistée Par Ordinateur

ÉCOLE DE TECHNOLOGIE SUPÉRIEURE, UNIVERSITÉ DU QUÉBEC. Thesis presented to the École de technologie supérieure in partial fulfillment of the requirements for the master's degree with thesis in software engineering (M.Sc.A.), by Alexandre MILLETTE. DUALCAD: Integrating Augmented Reality and Desktop Computer Interfaces in a Computer-Aided Design Context. Montréal, April 27, 2016. Alexandre Millette, 2016. This Creative Commons license means that it is permitted to distribute, print, or save to another medium part or all of this work, provided that the author is credited, that these uses are for non-commercial purposes, and that the content of the work has not been modified. PRESENTATION OF THE JURY. This thesis was evaluated by a jury composed of: Mr. Michael J. McGuffin, thesis director, Department of Software and IT Engineering at the École de technologie supérieure; Mr. Luc Duong, jury president, Department of Software and IT Engineering at the École de technologie supérieure; Mr. David Labbé, jury member, Department of Software and IT Engineering at the École de technologie supérieure. It was defended before a jury and the public on April 6, 2016, at the École de technologie supérieure. FOREWORD. When the time came for me to choose whether I wanted to do a master's degree with or without a thesis, Augmented Reality (AR) technologies were still embryonic projects, undertaken by companies known for their innovations, such as Google with its Google Glasses, or by emerging companies, such as META and its Spaceglasses.

A Review and Selective Analysis of 3D Display Technologies for Anatomical Education

University of Central Florida STARS Electronic Theses and Dissertations, 2004-2019 2018 A Review and Selective Analysis of 3D Display Technologies for Anatomical Education Matthew Hackett University of Central Florida Part of the Anatomy Commons Find similar works at: https://stars.library.ucf.edu/etd University of Central Florida Libraries http://library.ucf.edu This Doctoral Dissertation (Open Access) is brought to you for free and open access by STARS. It has been accepted for inclusion in Electronic Theses and Dissertations, 2004-2019 by an authorized administrator of STARS. For more information, please contact [email protected]. STARS Citation Hackett, Matthew, "A Review and Selective Analysis of 3D Display Technologies for Anatomical Education" (2018). Electronic Theses and Dissertations, 2004-2019. 6408. https://stars.library.ucf.edu/etd/6408 A REVIEW AND SELECTIVE ANALYSIS OF 3D DISPLAY TECHNOLOGIES FOR ANATOMICAL EDUCATION by: MATTHEW G. HACKETT BSE University of Central Florida 2007, MSE University of Florida 2009, MS University of Central Florida 2012 A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Modeling and Simulation program in the College of Engineering and Computer Science at the University of Central Florida Orlando, Florida Summer Term 2018 Major Professor: Michael Proctor ©2018 Matthew Hackett ii ABSTRACT The study of anatomy is complex and difficult for students in both graduate and undergraduate education. Researchers have attempted to improve anatomical education with the inclusion of three-dimensional visualization, with the prevailing finding that 3D is beneficial to students. However, there is limited research on the relative efficacy of different 3D modalities, including monoscopic, stereoscopic, and autostereoscopic displays. -

Underwater Survey for Oil and Gas Industry: a Review of Close Range Optical Methods

remote sensing Review Underwater Survey for Oil and Gas Industry: A Review of Close Range Optical Methods Bertrand Chemisky 1,*, Fabio Menna 2 , Erica Nocerino 1 and Pierre Drap 1 1 LIS UMR 7020, Aix-Marseille Université, CNRS, Université De Toulon, 13397 Marseille, France; [email protected] (E.N.); [email protected] (P.D.) 2 3D Optical Metrology (3DOM) Unit, Bruno Kessler Foundation (FBK), Via Sommarive 18, 38123 Trento, Italy; [email protected] * Correspondence: [email protected] Abstract: In both the industrial and scientific fields, the need for very high-resolution cartographic data is constantly increasing. With the aging of offshore subsea assets, it is very important to plan and maintain the longevity of structures, equipment, and systems. Inspection, maintenance, and repair (IMR) of subsea structures are key components of an overall integrity management system that aims to reduce the risk of failure and extend the life of installations. The acquisition of very detailed data during the inspection phase is a technological challenge, especially since offshore installations are sometimes deployed in extreme conditions (e.g., depth, hydrodynamics, visibility). After a review of high resolution mapping techniques for underwater environment, this article will focus on optical sensors that can satisfy the requirements of the offshore industry by assessing their relevance and degree of maturity. These requirements concern the resolution and accuracy but also cost, ease of implementation, and qualification. With the evolution of embedded computing resources, in-vehicle optical survey solutions are becoming increasingly important in the landscape of large-scale mapping solutions and more and more off-the-shelf systems are now available. -

USING SONY A900 for ASTROPHOTOGRAPHY • TELESCOPE ADAPTORS - PRIME FOCUS - EYEPIECE PROJECTION

USING SONY a900 for ASTROPHOTOGRAPHY • TELESCOPE ADAPTORS - PRIME FOCUS - EYEPIECE PROJECTION • REMOTE RELEASE - SONY CABLE RELEASE RM-S1AM - SONY REMOTE COMMANDER RMT-DSLR1 - GADGET INFINITY RADIO S1 REMOTE - JJC-JR-C IR MODULAR REMOTE - HÄHNEL HW433S80 RF WiFi REMOTE - JJC TM-F LCD DIGITAL TIMER / INTERVALOMETER • DRIVE FUNCTION - MIRROR LOCK-UP • FOCUS - SCREEN L - DIOPTRE CORRECTION - RIGHT ANGLE MAGNIFIER • FINE FOCUS - RACK & PINION FOCUSER - MOTORISED FOCUS - REMOTE DIGITAL FOCUSER - DEPTH OF FOCUS • TRACKING FOCUS • SEEING • UN-DAMPED VIBRATION - ELECTROMAGNETIC SHUTTER RELEASE • CHECKING FOCUS • LAPTOP REMOTE CAPTURE & CONTROL vs LCD VIEW SCREEN • EXPOSURE - MANUAL MODE OPTION - CALCULATING EXPOSURE TIMES - ISO SETTING & RESOLUTION - ISO vs SEEING - BRACKETING vs DR-O USING SONY a900 for ASTROPHOTOGRAPHY The Sony a900 DSLR is capable of taking fine photographs of the Sun in H-alpha and white light, the Moon, and deep sky objects. It has a 35mm Fx format CMOS sensor specifically designed for low light level photography. The twin BIONZ processors are designed to produce high resolution, low noise, high dynamic range images. The 3-inch LCD view screen may enlarge raw images x19, and its resolution is sufficient to judge precise focus without having to resort to a remote laptop monitor. The optical viewfinder affords 100% frame coverage, and has internal dioptre correction and interchangeable focusing screens. • TELESCOPE ADAPTORS - PRIME FOCUS The Sony 'Exmor' CMOS sensor size is 35.9mm x 24.0mm, giving a frame diagonal 43.2mm. The throat clearance I.D. of the prime focus adaptor needs to accommodate an image circle 43.3mm diameter (allowing 0.1mm clearance) otherwise the frame corners will be mechanically vignetted. -

Multi-User Display Systems, Compendium of the State of the Art

Multi-user display systems, Compendium of the State of the Art. Juan Sebastian Munoz-Arango; Research Assistant EAC - UA Little Rock; Little Rock, AR, Dirk Reiners; Research Scientist EAC - UA Little Rock; Little Rock, AR Carolina Cruz-Neira; Research Director EAC - UA Little Rock; Little Rock, AR Abstract: One of the main shortcomings of most Virtual Reality display systems, be it head-mounted displays or projection based systems like CAVEs, is that they can only provide the correct perspective to a single user. This is a significant limitation that reduces the applicability of Virtual Reality approaches for most kinds of group collaborative work, which is becoming more and more important in many disciplines. Different approaches have been tried to present multiple images to different users at the same time, like optical barriers, optical filtering, optical routing, time multiplex, volumetric displays and lightfield displays among others. This paper describes, discusses and compares different approaches that have been developed and develop an evaluation approach to identify [...] categories: Spatial Barriers, Optical Filtering, Optical Routing and Time Multiplexing. In addition to these categories it is worth mentioning volumetric displays and light field displays, which are relevant to the multi user viewing topic even though they achieve multi user perspective through a totally different approach. Spatial barriers: Spatial barriers take advantage of the display’s physical configuration and user placement to display users’ specific views. Essentially they form a mechanical barrier that lets each user see a subset of the underlying displays’ pixels. The spatial barriers approach has been around for a while.

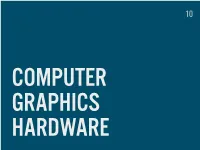

GRAPHICS HARDWARE WHAT’S in STORE GRAPHICS CARDS GRAPHICS CARDS DEDICATED (EXTERNAL) High Performance Power Consumption Heat Emission

10 COMPUTER GRAPHICS HARDWARE WHAT’S IN STORE GRAPHICS CARDS GRAPHICS CARDS DEDICATED (EXTERNAL) High performance Power consumption Heat emission INTEGRATED (INTERNAL) Low power, low heat Mobile devices, on board Integrated with CPU ARCHITECTURE VERTEX SHADERS (TRANSFORM GEOMETRY) GEOMETRY SHADERS (CREATE NEW GEOMETRY) PIXEL SHADERS (COLORS, SHADOWS…) UNIFIED SHADERS One type of processors for all operations GTX 680 = 1536 unified shader cores CUDA, GPGPU General (not only graphics) performed on GPU Parallelism (HW video encoding, numerical computations) CONNECTORS VGA Analog DVI Digital + Analog HDMI Digital miniHDMI, microHDMI DISPLAYPORT Digital, Analog Mini DisplayPort (Apple) MANUFACTURERS NVIDIA GeForce, Quadro, Tesla AMD (FORMERLY ATI) Radeon, FirePro INTEL Integrated in Core CPUs POWERVR ARM Mali DISPLAYS CATHODE RAY TUBE (CRT) CATHODE RAY TUBE (CRT) ANALOG TVS OLD COMPUTER MONITORS MEDIUM SIZE DISPLAYS LIGHT EMISSION Black is black FLICKER 75-100 Hz for work http://www.bthompson.net/ PLASMA (PDP, NEO-PDP) NOT SUITABLE FOR COMPUTER DISPLAYS LARGE SCREENS (30”+) LIGHT EMISSION Black is black HIGH ENERGY DEMANDS VIEW ANGLE DOESN’T MATTER IMAGE RETENTION Reduced for Neo-PDP LIQUID CRYSTAL DISPLAY (LCD) CRYSTALS BLOCK LIGHT FROM BACK SOURCE Black is hard to achieve DIFFERENT TECHNOLOGIES (TN, IPS, MVA, …) LOW ENERGY CONSUMPTION LARGE SCREENS VIEW ANGLES ISSUE LCD LED CONFUSION OLED (ORGANIC LIGHT-EMITTING DIODE) LIGHT EMISSION GOOD CONTRAST AND COLORS EXPENSIVE PRODUCTION Small screens so far LOW ENERGY POTENTIALLY FLEXIBLE VIEW ANGLE -

ILCA-99M2 α99II

Interchangeable Lens Digital Camera ILCA-99M2 α99II Names of parts/Icons and indicators Names of parts Front side [1] Rear side [2] Top side [3] Sides [4] Bottom [5] Basic operations Using the multi-selector [6] Using the front multi-controller [7] Using MENU items [8] Using the Fn (Function) button [9] How to use the Quick Navi screen [10] How to use the keyboard [11] Icons and indicators List of icons on the monitor [12] Indicators on the display panel [13] Switching the screen display (while shooting/during playback) [14] DISP Button (Monitor/Finder) [15] Preparing the camera Checking the camera and the supplied items [16] Charging the battery pack Charging the battery pack using a charger [17] Inserting/removing the battery pack [18] Battery life and number of recordable images [19] Notes on the battery pack [20] Inserting a memory card (sold separately) Inserting/removing a memory card [21] Memory cards that can be used [22] Notes on memory card [23] Recording images on two memory cards Selecting which memory card to record to (Select Rec. Media) [24] Attaching a lens Attaching/removing a lens [25] Attaching the lens hood [26] Attaching accessories Vertical grip [27] Setting language, date and time [28] In-Camera Guide [29] Shooting Shooting still images [30] Focusing Focus Mode [31] Auto focus Auto focus mechanism [32] Focus Area [33] Focus Standard [34] AF/MF control [35] AF w/ shutter (still image) [36] AF On [37] Eye AF [38] AF Range Control [39] AF Rng.Ctrl Assist (still image) [40] Center Lock-on AF [41] Eye-Start AF (still image) [42] AF drive speed (still image) [43] AF Track Sens (still image) [44] Priority Set in AF-S [45] Priority Set in AF-C [46] AF Illuminator (still image) [47] AF Area Auto Clear [48] Wide AF Area Disp. -

The Varrier™ Autostereoscopic Virtual Reality Display

submission id papers_0427 The Varrier™ Autostereoscopic Virtual Reality Display Daniel J. Sandin, Todd Margolis, Jinghua Ge, Javier Girado, Tom Peterka, Thomas A. DeFanti Electronic Visualization Laboratory University of Illinois at Chicago [email protected] Abstract: Virtual reality (VR) has long been hampered by the gear needed to make the experience possible; specifically, stereo glasses and tracking devices. Autostereoscopic display devices are gaining popularity by freeing the user from stereo glasses, however few qualify as VR displays. The Electronic Visualization Laboratory (EVL) at the University of Illinois at Chicago (UIC) has designed and produced a large scale, high resolution head-tracked barrier-strip autostereoscopic display system that produces a VR immersive experience without requiring the user to wear any encumbrances. The resulting system, called Varrier, is a passive parallax barrier 35-panel tiled display that produces a wide field of view, head-tracked VR experience. [...] In most other lenticular and barrier strip implementations, stereo is achieved by sorting image slices in integer (image) coordinates. Moreover, many autostereo systems compress scene depth in the z direction to improve image quality but Varrier is orthoscopic, which means that all 3 dimensions are displayed in the same scale. Besides head-tracked perspective interaction, the user can further interact with VR applications through hand-held devices such as a 3d wand, for example to control navigation through virtual worlds. There are four main contributions of this paper. New virtual barrier algorithms are presented that enhance image quality and lower color shifts by operating at sub-pixel resolution. Automated camera-based registration of the physical and virtual barrier strip [...]

Photo-Aligned Ferroelectric Liquid Crystal Devices with Novel Electro-Optic Characteristics

crystals Review Photo-Aligned Ferroelectric Liquid Crystal Devices with Novel Electro-Optic Characteristics Vladimir Chigrinov 1,2,* , Qi Guo 3 and Aleksey Kudreyko 4 1 School of Physics and Optoelectronic Engineering, Foshan University, Foshan 528325, China 2 Department of Theoretical Physics, Moscow Region State University, 141014 Mytishi, Russia 3 School of Instrumentation and Optoelectronic Engineering, Beihang University, Beijing 100191, China; [email protected] 4 Department of Medical Physics and Informatics, Bashkir State Medical University, 450008 Ufa, Russia; [email protected] * Correspondence: [email protected] Received: 18 May 2020; Accepted: 19 June 2020; Published: 1 July 2020 Abstract: This paper examines different applications of ferroelectric liquid crystal devices based on photo-alignment. Successful application of the photo-alignment technique is considered to be a critical breakthrough. A variety of display and photonic devices with azo dye aligned ferroelectric liquid crystals is presented: smart glasses, liquid crystal Pancharatnam–Berry phase optical elements, 2D/3D switchable lenses, and laser therapy devices. Comparison of electro-optical behavior of ferroelectric liquid crystals is described considering the performance of devices. This paper facilitates the optimization of device design, and broadens the possible applications in the display and photonic area. Keywords: photo-alignment of ferroelectric liquid crystals; electro-optic modes; new devices; imaging technologies; displays; photonics 1. Introduction The appearance of portable and wearable devices has led to an ever-increasing demand for next-generation displays with ultra-high resolution, light weight, low power consumption, and high efficiency [1,2]. However, the response time of nematic liquid crystals (LCs) is too slow for fast-switching devices. -

Peter Read Miller on Sports Photography

PETER READ MILLER ON SPORTS PHOTOGRAPHY: A Sports Illustrated® photographer’s tips, tricks, and tales on shooting football, the Olympics, and portraits of athletes. Peter Read Miller. New Riders, www.newriders.com. New Riders is an imprint of Peachpit, a division of Pearson Education. Find us on the Web at www.newriders.com. To report errors, please send a note to [email protected]. Copyright © 2014 by Peter Read Miller. Acquisitions Editor: Ted Waitt. Project Editor: Valerie Witte. Senior Production Editor: Lisa Brazieal. Developmental and Copy Editor: Anne Marie Walker. Photo Editor: Steve Fine. Proofreader: Erfert Fenton. Composition: Kim Scott/Bumpy Design. Indexer: Valerie Haynes Perry. Cover and Interior Design: Mimi Heft. Cover Images: Peter Read Miller. NOTICE OF RIGHTS: All rights reserved. No part of this book may be reproduced or transmitted in any form by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the publisher. For information on getting permission for reprints and excerpts, contact [email protected]. NOTICE OF LIABILITY: The information in this book is distributed on an “As Is” basis, without warranty. While every precaution has been taken in the preparation of the book, neither the author nor Peachpit shall have any liability to any person or entity with respect to any loss or damage caused or alleged to be caused directly or indirectly by the instructions contained in this book or by the computer software and hardware products described in it.

Fundamentals of Photography Course Guidebook

Topic: Better Living. Subtopic: Arts & Leisure. Fundamentals of Photography Course Guidebook. Joel Sartore, Photographer, National Geographic Fellow. PUBLISHED BY: THE GREAT COURSES, Corporate Headquarters, 4840 Westfields Boulevard, Suite 500, Chantilly, Virginia 20151-2299. Phone: 1-800-832-2412. Fax: 703-378-3819. www.thegreatcourses.com. Copyright © The Teaching Company, 2012. Printed in the United States of America. This book is in copyright. All rights reserved. Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form, or by any means (electronic, mechanical, photocopying, recording, or otherwise), without the prior written permission of The Teaching Company. Joel Sartore, Professional Photographer, National Geographic Magazine. Joel Sartore is a photographer, a speaker, an author, a teacher, and a regular contributor to National Geographic magazine. He holds a bachelor’s degree in Journalism, and his work has been recognized by the National Press Photographers Association and the Pictures of the Year International competition. Mr. Sartore was recently made a Fellow in the National Geographic Society for his work as a conservationist. The hallmarks of his professional style are a sense of humor and a midwestern work ethic. Mr. Sartore’s assignments have taken him to some of the world’s most beautiful and challenging environments, from the Arctic to the Antarctic. He has traveled to all 50 states and all seven continents, photographing everything from Alaskan salmon-fishing bears to Amazonian tree frogs. His most recent focus is on documenting wildlife, endangered species, and landscapes, bringing public attention to what he calls “a world worth saving.” His interest in nature started in childhood, when he learned about the very last passenger pigeon from one of his mother’s TIME LIFE picture books.