OpenUH: An Optimizing, Portable OpenMP Compiler

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result. Copyright 1999-2004, Standard Performance Evaluation Corporation. Sun Microsystems, Sun Java Workstation W1100z. SPECfp2000 = 1787, SPECfp_base2000 = 1637. SPEC license #: 6. Tested by: Sun Microsystems, Santa Clara. Test date: Jul-2004. Hardware Avail: Jul-2004. Software Avail: Jul-2004.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 168.wupwise | 1600 | 92.3 | 1733 | 71.8 | 2229 |
| 171.swim | 3100 | 134 | 2310 | 123 | 2519 |
| 172.mgrid | 1800 | 124 | 1447 | 103 | 1755 |
| 173.applu | 2100 | 150 | 1402 | 133 | 1579 |
| 177.mesa | 1400 | 76.1 | 1839 | 70.1 | 1998 |
| 178.galgel | 2900 | 104 | 2789 | 94.4 | 3073 |
| 179.art | 2600 | 146 | 1786 | 106 | 2464 |
| 183.equake | 1300 | 93.7 | 1387 | 89.1 | 1460 |
| 187.facerec | 1900 | 80.1 | 2373 | 80.1 | 2373 |
| 188.ammp | 2200 | 158 | 1397 | 154 | 1425 |
| 189.lucas | 2000 | 121 | 1649 | 122 | 1637 |
| 191.fma3d | 2100 | 135 | 1552 | 135 | 1552 |
| 200.sixtrack | 1100 | 148 | 742 | 148 | 742 |
| 301.apsi | 2600 | 170 | 1529 | 168 | 1549 |

Hardware: CPU: AMD Opteron (TM) 150. CPU MHz: 2400. FPU: Integrated. CPU(s) enabled: 1 core, 1 chip, 1 core/chip. CPU(s) orderable: 1. Parallel: No. Primary Cache: 64KBI + 64KBD on chip. Secondary Cache: 1024KB (I+D) on chip. L3 Cache: N/A. Other Cache: N/A. Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered. Disk Subsystem: IDE, 80GB, 7200RPM. Other Hardware: None.

Software: Operating System: Red Hat Enterprise Linux WS 3 (AMD64). Compiler: PathScale EKO Compiler Suite, Release 1.1; Red Hat gcc 3.5 ssa (from RHEL WS 3); PGI Fortran 5.2 (build 5.2-0E); AMD Core Math Library (Version 2.0) for AMD64. File System: Linux/ext3. System State: Multi-user, Run level 3.

Notes/Tuning Information: A two-pass compilation method is -
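The relationship between the times and ratios in the CFP2000 entry above can be checked with a little arithmetic. The sketch below assumes the usual SPEC CPU2000 convention (not stated in the excerpt itself) that a benchmark's ratio is 100 times its reference time divided by its measured runtime, and that the overall metric is the geometric mean of the per-benchmark ratios. The function names `spec_ratio` and `geometric_mean` are hypothetical, chosen only for illustration; the numbers are copied from the entry.

```python
import math

# (benchmark, reference time [s], base runtime [s], published base ratio)
# Values copied from the CFP2000 entry above.
BASE_ROWS = [
    ("168.wupwise", 1600, 92.3, 1733),
    ("171.swim", 3100, 134, 2310),
    ("172.mgrid", 1800, 124, 1447),
    ("173.applu", 2100, 150, 1402),
    ("177.mesa", 1400, 76.1, 1839),
    ("178.galgel", 2900, 104, 2789),
    ("179.art", 2600, 146, 1786),
    ("183.equake", 1300, 93.7, 1387),
    ("187.facerec", 1900, 80.1, 2373),
    ("188.ammp", 2200, 158, 1397),
    ("189.lucas", 2000, 121, 1649),
    ("191.fma3d", 2100, 135, 1552),
    ("200.sixtrack", 1100, 148, 742),
    ("301.apsi", 2600, 170, 1529),
]


def spec_ratio(reference_time, runtime):
    """Assumed CPU2000 convention: 100 * reference time / measured runtime."""
    return 100.0 * reference_time / runtime


def geometric_mean(values):
    """Geometric mean computed via logarithms to avoid large products."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


if __name__ == "__main__":
    for name, ref, run, published in BASE_ROWS:
        recomputed = spec_ratio(ref, run)
        print(f"{name:13s} recomputed {recomputed:7.1f}  published {published}")
    overall = geometric_mean(row[3] for row in BASE_ROWS)
    print(f"geometric mean of published base ratios: {overall:.1f}")
```

The recomputed ratios differ slightly from the published ones because the listed runtimes are rounded to three significant digits. The geometric mean of the published base ratios comes out at about 1637, consistent with the SPECfp_base2000 figure in the entry.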

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview What are libraries for? ● Building blocks for writing scientific applications ● Historically – allowed the first forms of code re-use ● Later – became ways of running optimized code ● Today the complexity of the hardware is very high ● The Cray PE insulates users from this complexity • Cray module environment • CCE • Performance tools • Tuned MPI libraries (+PGAS) • Optimized Scientific libraries Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort. Scientific libraries on XC – functional view FFT Sparse Dense Trilinos BLAS FFTW LAPACK PETSc ScaLAPACK CRAFFT CASK IRT What makes Cray libraries special 1. Node performance ● Highly tuned routines at the low-level (ex. BLAS) 2. Network performance ● Optimized for network performance ● Overlap between communication and computation ● Use the best available low-level mechanism ● Use adaptive parallel algorithms 3. Highly adaptive software ● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime 4. Productivity features ● Simple interfaces into complex software LibSci usage ● LibSci ● The drivers should do it all for you – no need to explicitly link ● For threads, set OMP_NUM_THREADS ● Threading is used within LibSci ● If you call within a parallel region, single thread used ● FFTW ● module load fftw (there are also wisdom files available) ● PETSc ● module load petsc (or module load petsc-complex) ● Use as you would your normal PETSc build ● Trilinos ● -

Introduction to Arm for Network Stack Developers

Introduction to Arm for network stack developers. Pavel Shamis/Pasha, Principal Research Engineer. Mvapich User Group 2017, Columbus, OH. © 2017 Arm Limited. Outline • Arm Overview • HPC Software Stack • Porting on Arm • Evaluation. Arm Overview. An introduction to Arm: Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990. Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instruction set architecture (ISA) such as "ARMv8-A" or a specific implementation, such as "Cortex-A72". Partners who license an ISA can create their own implementation, as long as it passes the compliance tests. …and our IP extends beyond the CPU. A partnership business model. A business model that shares success • Everyone in the value chain benefits • Long term sustainability. Design once and reuse is fundamental • Spread the cost amongst many partners • Technology reused across multiple applications • Creates market for ecosystem to target – Re-use is also fundamental to the ecosystem. Upfront license fee • Covers the development cost. Ongoing royalties • Typically based on a percentage of chip price. [Diagram of the licensing flow between Arm, semiconductor partners, and OEM customers: licence fee, royalty.] -

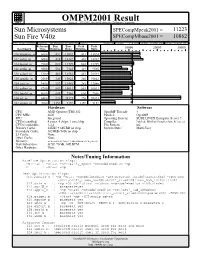

Sun Microsystems

OMPM2001 Result. Copyright 1999-2002, Standard Performance Evaluation Corporation. Sun Microsystems, Sun Fire V40z. SPECompMpeak2001 = 11223, SPECompMbase2001 = 10862. SPEC license #: HPG0010. Tested by: Sun Microsystems, Santa Clara. Test site: Menlo Park. Test date: Feb-2005. Hardware Avail: Apr-2005. Software Avail: Apr-2005.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 310.wupwise_m | 6000 | 432 | 13882 | 431 | 13924 |
| 312.swim_m | 6000 | 424 | 14142 | 401 | 14962 |
| 314.mgrid_m | 7300 | 622 | 11729 | 622 | 11729 |
| 316.applu_m | 4000 | 536 | 7463 | 419 | 9540 |
| 318.galgel_m | 5100 | 483 | 10561 | 481 | 10593 |
| 320.equake_m | 2600 | 240 | 10822 | 240 | 10822 |
| 324.apsi_m | 3400 | 297 | 11442 | 282 | 12046 |
| 326.gafort_m | 8700 | 869 | 10011 | 869 | 10011 |
| 328.fma3d_m | 4600 | 608 | 7566 | 608 | 7566 |
| 330.art_m | 6400 | 226 | 28323 | 226 | 28323 |
| 332.ammp_m | 7000 | 1359 | 5152 | 1359 | 5152 |

Hardware: CPU: AMD Opteron (TM) 852. CPU MHz: 2600. FPU: Integrated. CPU(s) enabled: 4 cores, 4 chips, 1 core/chip. CPU(s) orderable: 1,2,4. Primary Cache: 64KBI + 64KBD on chip. Secondary Cache: 1024KB (I+D) on chip. L3 Cache: None. Other Cache: None. Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered). Disk Subsystem: SCSI, 73GB, 10K RPM. Other Hardware: None.

Software: OpenMP Threads: 4. Parallel: OpenMP. Operating System: SUSE LINUX Enterprise Server 9. Compiler: PathScale EKOPath Compiler Suite, Release 2.1. File System: ufs. System State: Multi-User.

Notes/Tuning Information: Baseline Optimization Flags: Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp; C : -Ofast -mp. Peak Optimization Flags: 310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3 -

Best Practice Guide - Generic x86 (Vegard Eide, NTNU; Nikos Anastopoulos, GRNET; Henrik Nagel, NTNU; 02-05-2013)

Table of Contents

- 1. Introduction
- 2. x86 - Basic Properties
  - 2.1. Basic Properties
  - 2.2. Simultaneous Multithreading
- 3. Programming Environment
  - 3.1. Modules
  - 3.2. Compiling
    - 3.2.1. Compilers
    - 3.2.2. General Compiler Flags
      - 3.2.2.1. GCC
      - 3.2.2.2. Intel -

PathScale ENZO - 2011-04-15 by vincent - StreamComputing

PathScale ENZO, by vincent, Friday, 15 April 2011. http://www.streamcomputing.eu/blog/2011-04-15/pathscale-enzo/ My todo-list gets too large, because there seem to be so many things going on in the GPGPU world. Therefore the following article is not really complete, but I hope it gives you an idea of the product. ENZO was presented as the alternative to CUDA and OpenCL. In that light I compared it to Intel Array Building Blocks a few weeks ago, although the two techniques are not technically comparable. PathScale's CTO mailed me and tried to explain what ENZO really is. This article consists mainly of what he (Mr. C. Bergström) told me. If you have any questions, I will make sure he receives them. ENZO: ENZO is a complete GPGPU solution and ecosystem of tools that provides full support for NVIDIA Tesla (kernel driver, runtime, assembler, code generation, front-end programming model and various other things to make a developer's life easier). Right now it supports the HMPP C/Fortran programming model. HMPP is an open standard jointly developed by CAPS and PathScale. I've mentioned HMPP before, as it can translate Fortran and C code to OpenCL, CUDA and other languages. ENZO's implementation differs from HMPP by using native front-ends and by generating hardware-optimised code. You can learn ENZO in 5 minutes if you've done any OpenMP-like programming in the past. For example, the Fortran code (sorry for the missing indentation): !$hmpp simple codelet, target=TESLA1 -

QLogic PathScale™ Compiler Suite User Guide

QLogic PathScale™ Compiler Suite User Guide Version 3.0 1-02404-15 Information furnished in this manual is believed to be accurate and reliable. However, QLogic Corporation assumes no responsibility for its use, nor for any infringements of patents or other rights of third parties which may result from its use. QLogic Corporation reserves the right to change product specifications at any time without notice. Applications described in this document for any of these products are for illustrative purposes only. QLogic Corporation makes no representation nor warranty that such applications are suitable for the specified use without further testing or modification. QLogic Corporation assumes no responsibility for any errors that may appear in this document. No part of this document may be copied nor reproduced by any means, nor translated nor transmitted to any magnetic medium without the express written consent of QLogic Corporation. In accordance with the terms of their valid PathScale agreements, customers are permitted to make electronic and paper copies of this document for their own exclusive use. Linux is a registered trademark of Linus Torvalds. QLA, QLogic, SANsurfer, the QLogic logo, PathScale, the PathScale logo, and InfiniPath are registered trademarks of QLogic Corporation. Red Hat and all Red Hat-based trademarks are trademarks or registered trademarks of Red Hat, Inc. SuSE is a registered trademark of SuSE Linux AG. All other brand and product names are trademarks or registered trademarks of their respective owners.
Document Revision History. 1.4: New Sections 3.3.3.1, 3.7.4, 10.4, 10.5; added Appendix B: Supported Fortran intrinsics. 2.0: New Sections 2.3, 8.9.7, 11.8; added Chapter 8: Using OpenMP in Fortran; new Appendix B: Implementation dependent behavior for OpenMP Fortran; expanded and updated Appendix C: Supported Fortran intrinsics. 2.1: Added Chapter 9: Using OpenMP in C/C++, Appendix E: eko man page. -

PathScale Fortran and C/C++ Compilers

PathScale™ Compiler Suite User Guide Version 3.2 Information furnished in this manual is believed to be accurate and reliable. However, PathScale LLC assumes no responsibility for its use, nor for any infringements of patents or other rights of third parties which may result from its use. PathScale LLC reserves the right to change product specifications at any time without notice. Applications described in this document for any of these products are for illustrative purposes only. PathScale LLC makes no representation nor warranty that such applications are suitable for the specified use without further testing or modification. PathScale LLC assumes no responsibility for any errors that may appear in this document. No part of this document may be copied nor reproduced by any means, nor translated nor transmitted to any magnetic medium without the express written consent of PathScale LLC. In accordance with the terms of their valid PathScale agreements, customers are permitted to make electronic and paper copies of this document for their own exclusive use. Linux is a registered trademark of Linus Torvalds. PathScale, the PathScale logo, and EKOPath are registered trademarks of PathScale, LLC. Red Hat and all Red Hat-based trademarks are trademarks or registered trademarks of Red Hat, Inc. SuSE is a registered trademark of SuSE Linux AG. All other brand and product names are trademarks or registered trademarks of their respective owners. © 2007, 2008 PathScale, LLC. All rights reserved. © 2006, 2007 QLogic Corporation. All rights reserved worldwide. -

Programming Guide for SiCortex

SiCortex® System Programming Guide for Software Version 3.0 Field Test. Trademarks: Cray is a registered trademark of Cray, Inc. Intel is the registered trademark of Intel Corporation. Linux is the registered trademark of Linus Torvalds in the U.S. and other countries. The registered trademark Linux is used pursuant to a sublicense from the Linux Mark Institute, the exclusive licensee of Linus Torvalds, owner of the mark in the U.S. and other countries. Lustre is the registered trademark of Cluster File Systems, Inc. MIPS and MIPS64 are registered trademarks of MIPS Technologies, Inc. NIST is a registered trademark of the National Institute of Standards and Technology, U.S. Department of Commerce. OpenMP is a trademark of Silicon Graphics, Inc. PCI, PCI Express, and PCIe are registered trademarks, and EXPRESSMODULE is a trademark of PCI-SIG. Perl is the registered trademark of The Perl Foundation. SiCortex is a registered trademark, and the SiCortex logo, SC5832, SC648, and PathScale are trademarks of SiCortex, Incorporated. TAU Performance System is a trademark of the joint developers: University of Oregon Performance Research Lab; Los Alamos National Laboratory Advanced Computing Laboratory; and The Research Centre Jülich, ZAM, Germany. TotalView is a registered trademark of TotalView Technologies, LLC. Vampir is a registered trademark of Wolfgang E. Nagel. All other brand and product names are trademarks or service marks of their respective owners. Copyrights: Copyright © 2007-2008 SiCortex Incorporated. All rights reserved. Disclaimer: The content of this document is furnished for informational use only, is subject to change without notice, and should not be construed as a commitment by SiCortex, Inc. -

QLogic PathScale™ Debugger User Guide

QLogic PathScale™ Debugger User Guide Version 1.1.1 2-092004-05 Information furnished in this manual is believed to be accurate and reliable. However, QLogic Corporation assumes no responsibility for its use, nor for any infringements of patents or other rights of third parties which may result from its use. QLogic Corporation reserves the right to change product specifications at any time without notice. Applications described in this document for any of these products are for illustrative purposes only. QLogic Corporation makes no representation nor warranty that such applications are suitable for the specified use without further testing or modification. QLogic Corporation assumes no responsibility for any errors that may appear in this document. No part of this document may be copied nor reproduced by any means, nor translated nor transmitted to any magnetic medium without the express written consent of QLogic Corporation. In accordance with the terms of their valid PathScale agreements, customers are permitted to make electronic and paper copies of this document for their own exclusive use. Linux is a registered trademark of Linus Torvalds. QLA, QLogic, SANsurfer, the QLogic logo, PathScale, the PathScale logo, InfiniPath and EKOPath are registered trademarks of QLogic Corporation. Red Hat and all Red Hat-based trademarks are trademarks or registered trademarks of Red Hat, Inc. SuSE is a registered trademark of SuSE Linux AG. All other brand and product names are trademarks or registered trademarks of their respective owners. Document Revision History: 1.0 Beta; 1.0; 1.1; 1.1.1 (2-092004-05, December 22, 2006). Changes: This now becomes a QLogic document, with new formatting and product names. Document Sections Affected: All Sections. -

LLVM in the FreeBSD Toolchain

LLVM in the FreeBSD Toolchain, David Chisnall. 1 Introduction: FreeBSD 10 shipped with Clang, based on LLVM [5], as the system compiler for x86 and ARMv6+ platforms. This was the first FreeBSD release not to include the GNU compiler since the project's beginning. Although the replacement of the C compiler is the most obvious user-visible change, the inclusion of LLVM provides opportunities for other improvements. 2 Rationale for migration: The most obvious incentive for the FreeBSD project to switch from GCC to Clang was the decision by the Free Software Foundation to switch the license of GCC to version 3 of the GPL. This license is unacceptable to a number of large FreeBSD consumers. Given this constraint, the project had a choice of either maintaining a fork of GCC 4.2.1 […] vacky and Pawel Worach begin trying to build FreeBSD with Clang and quickly got a working kernel, as long as optimisations were disabled. By May 2011, Clang was able to build the entire base system on both 32-bit and 64-bit x86 and so became a viable migration target. A large number of LLVM Clang bugs were found and fixed as a result of FreeBSD testing the compilation of a large body of code. 3 Rebuilding the C++ stack: The compiler itself was not the only thing that the FreeBSD project adopted from GCC. The entire C++ stack was developed as part of the GCC project and underwent the same license switch. This stack comprised the C++ compiler (g++), the C++ language runtime (libsupc++) and the C++ Standard Template Library (STL) implementation (libstdc++). -

Sun Fire V40z

CINT2000 Result. Copyright 1999-2004, Standard Performance Evaluation Corporation. Sun Microsystems, Sun Fire V40z. SPECint_rate2000 = 17.6, SPECint_rate_base2000 = 16.0. SPEC license #: 6. Tested by: Sun Microsystems, Santa Clara. Test date: Jun-2004. Hardware Avail: Jul-2004. Software Avail: May-2004.

| Benchmark | Base Copies | Base Runtime | Base Ratio | Peak Copies | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|---|
| 164.gzip | 1 | 96.5 | 16.8 | 1 | 96.3 | 16.9 |
| 175.vpr | 1 | 124 | 13.1 | 1 | 121 | 13.4 |
| 176.gcc | 1 | 67.4 | 18.9 | 1 | 67.4 | 18.9 |
| 181.mcf | 1 | 276 | 7.57 | 1 | 180 | 11.6 |
| 186.crafty | 1 | 48.1 | 24.1 | 1 | 48.1 | 24.1 |
| 197.parser | 1 | 155 | 13.5 | 1 | 145 | 14.4 |
| 252.eon | 1 | 75.4 | 20.0 | 1 | 59.5 | 25.3 |
| 253.perlbmk | 1 | 122 | 17.1 | 1 | 110 | 19.0 |
| 254.gap | 1 | 76.0 | 16.8 | 1 | 76.0 | 16.8 |
| 255.vortex | 1 | 99.7 | 22.1 | 1 | 99.7 | 22.1 |
| 256.bzip2 | 1 | 115 | 15.1 | 1 | 115 | 15.1 |
| 300.twolf | 1 | 243 | 14.3 | 1 | 187 | 18.6 |

Hardware: CPU: AMD Opteron (TM) 850. CPU MHz: 2400. FPU: Integrated. CPU(s) enabled: 1 core, 1 chip, 1 core/chip. CPU(s) orderable: 1,2,4. Parallel: No. Primary Cache: 64KBI + 64KBD on chip. Secondary Cache: 1024KB (I+D) on chip. L3 Cache: N/A. Other Cache: N/A. Memory: 4x1GB, PC2700 CL2.5 DDR SDRAM ECC Registered. Disk Subsystem: SCSI, 73GB, 10K RPM. Other Hardware: None.

Software: Operating System: SuSE Linux 8.0 SLES 64 bit (SP3). Compiler: PathScale EKO Compiler Suite, Release 1.1; SuSE optional gcc 3.3 (from SLES8 SP3). File System: Linux/ext3. System State: Multi-user, Run level 3.

Notes/Tuning Information: Feedback-directed optimization is indicated by "+FDO", which means, unless otherwise noted: PASS1: -fb_create fbdata; PASS2: -fb_opt fbdata. Compiler: pathcc (PathScale C) unless otherwise noted.