Pathscale Fortran and C/C++ Compilers

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Tech Tools Tech Tools

NEWS Tech Tools Tech Tools LLVM 3.0 Released Low Level Virtual Machine (LLVM) recently announced ver- replacing the C/ Objective-C compiler in the GCC system with a sion 3.0 of it compiler infrastructure. Originally implemented more easily integrated system and wider support for multithread- for C/ C++, the language-agnostic design of LLVM has ing. Other features include a new register allocator (which can spawned a wide variety of provide substantial perfor- front ends, including Objec- mance improvements in gen- tive-C, Fortran, Ada, Haskell, erated code), full support for Java bytecode, Python, atomic operations and the Ruby, ActionScript, GLSL, new C++ memory model, Clang, and others. and major improvement in The new release of LLVM the MIPS back end. represents six months of de- All LLVM releases are velopment over the previous available for immediate version and includes several download from the LLVM re- major changes, including dis- leases web site at: http:// continued support for llvm- llvm.org/releases/. For more gcc; the developers recom- information about LLVM, mend switching to Clang or visit the main LLVM website DragonEgg. Clang is aimed at at: http://llvm.org/. YaCy Search Engine Online Open64 5.0 Released The YaCy project has released version 1.0 of its Open64, the open source (GPLv2-licensed) peer-to-peer Free Software search engine. YaCy compiler for C/C++ and Fortran that’s does not use a central server; instead, its search re- backed by AMD and has been developed by sults come from a network of independent peers. SGI, HP, and various universities and re- According to the announcement, in this type of dis- search organizations, recently released ver- tributed network, no single entity decides which sion 5.0. -

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result spec Copyright 1999-2004, Standard Performance Evaluation Corporation Sun Microsystems SPECfp2000 = 1787 Sun Java Workstation W1100z SPECfp_base2000 = 1637 SPEC license #: 6 Tested by: Sun Microsystems, Santa Clara Test date: Jul-2004 Hardware Avail: Jul-2004 Software Avail: Jul-2004 Reference Base Base Benchmark Time Runtime Ratio Runtime Ratio 1000 2000 3000 4000 168.wupwise 1600 92.3 1733 71.8 2229 171.swim 3100 134 2310 123 2519 172.mgrid 1800 124 1447 103 1755 173.applu 2100 150 1402 133 1579 177.mesa 1400 76.1 1839 70.1 1998 178.galgel 2900 104 2789 94.4 3073 179.art 2600 146 1786 106 2464 183.equake 1300 93.7 1387 89.1 1460 187.facerec 1900 80.1 2373 80.1 2373 188.ammp 2200 158 1397 154 1425 189.lucas 2000 121 1649 122 1637 191.fma3d 2100 135 1552 135 1552 200.sixtrack 1100 148 742 148 742 301.apsi 2600 170 1529 168 1549 Hardware Software CPU: AMD Opteron (TM) 150 Operating System: Red Hat Enterprise Linux WS 3 (AMD64) CPU MHz: 2400 Compiler: PathScale EKO Compiler Suite, Release 1.1 FPU: Integrated Red Hat gcc 3.5 ssa (from RHEL WS 3) PGI Fortran 5.2 (build 5.2-0E) CPU(s) enabled: 1 core, 1 chip, 1 core/chip AMD Core Math Library (Version 2.0) for AMD64 CPU(s) orderable: 1 File System: Linux/ext3 Parallel: No System State: Multi-user, Run level 3 Primary Cache: 64KBI + 64KBD on chip Secondary Cache: 1024KB (I+D) on chip L3 Cache: N/A Other Cache: N/A Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered Disk Subsystem: IDE, 80GB, 7200RPM Other Hardware: None Notes/Tuning Information A two-pass compilation method is -

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview What are libraries for? ● Building blocks for writing scientific applications ● Historically – allowed the first forms of code re-use ● Later – became ways of running optimized code ● Today the complexity of the hardware is very high ● The Cray PE insulates users from this complexity • Cray module environment • CCE • Performance tools • Tuned MPI libraries (+PGAS) • Optimized Scientific libraries Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort. Scientific libraries on XC – functional view FFT Sparse Dense Trilinos BLAS FFTW LAPACK PETSc ScaLAPACK CRAFFT CASK IRT What makes Cray libraries special 1. Node performance ● Highly tuned routines at the low-level (ex. BLAS) 2. Network performance ● Optimized for network performance ● Overlap between communication and computation ● Use the best available low-level mechanism ● Use adaptive parallel algorithms 3. Highly adaptive software ● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime 4. Productivity features ● Simple interfaces into complex software LibSci usage ● LibSci ● The drivers should do it all for you – no need to explicitly link ● For threads, set OMP_NUM_THREADS ● Threading is used within LibSci ● If you call within a parallel region, single thread used ● FFTW ● module load fftw (there are also wisdom files available) ● PETSc ● module load petsc (or module load petsc-complex) ● Use as you would your normal PETSc build ● Trilinos ● -

Using GNU Fortran 95

Contributed by Steven Bosscher ([email protected]). Using GNU Fortran 95 Steven Bosscher For the 4.0.4 Version* Published by the Free Software Foundation 59 Temple Place - Suite 330 Boston, MA 02111-1307, USA Copyright c 1999-2005 Free Software Foundation, Inc. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; with the Invariant Sections being “GNU General Public License” and “Funding Free Software”, the Front-Cover texts being (a) (see below), and with the Back-Cover Texts being (b) (see below). A copy of the license is included in the section entitled “GNU Free Documentation License”. (a) The FSF’s Front-Cover Text is: A GNU Manual (b) The FSF’s Back-Cover Text is: You have freedom to copy and modify this GNU Manual, like GNU software. Copies published by the Free Software Foundation raise funds for GNU development. i Short Contents Introduction ...................................... 1 GNU GENERAL PUBLIC LICENSE .................... 3 GNU Free Documentation License ....................... 9 Funding Free Software .............................. 17 1 Getting Started................................ 19 2 GFORTRAN and GCC .......................... 21 3 GFORTRAN and G77 ........................... 23 4 GNU Fortran 95 Command Options.................. 25 5 Project Status ................................ 33 6 Extensions ................................... 37 7 Intrinsic Procedures ............................ -

Porting Open64 to the Cygwin Environment

Porting Open64 to the Cygwin Environment Nathan Tallent Mike Fagan ∗ November 2003 Abstract Cygwin is a Linux-like environment for Windows. It is sufficiently com- plete and stable that porting even very large codes to the environment is relatively straightforward. Cygwin is easy enough to download and install that it provides a convenient platform for traveling with code and giving conference demonstra- tions (via laptop) – even potentially expanding a code’s audience and user base (via desktop). However, for various reasons, Cygwin is not 100% compatible with Linux. We describe the major problems we encountered porting Rice University’s Open64/SL code base to Cygwin and present our solutions. 1 Introduction Cygwin is a Linux-like environment for Windows. It provides a Windows DLL that emulates nearly all of the Linux API. Programs written for Linux can link with the Cygwin DLL and thus run on Windows. A reasonably proficient Unix user can easily download and install a relatively complete Linux environment, including shells, de- velopment tools (GCC, make, gdb), editors (emacs, vi) and a graphical environment (XFree86). Moreover, the environment is complete and stable enough that it is rela- tively straightforward to port even very large codes to Cygwin. Because of the preva- lence of Windows-based desktops and laptops, Cygwin provides an attractive means for traveling with code and demonstrating it at conferences – even potentially expand- ing a code’s audience and user base. However, for full-time development or for critical applications, Unix remains superior. More information about Cygwin can be found on its web site, http://www.cygwin.com. -

Introduchon to Arm for Network Stack Developers

Introducon to Arm for network stack developers Pavel Shamis/Pasha Principal Research Engineer Mvapich User Group 2017 © 2017 Arm Limited Columbus, OH Outline • Arm Overview • HPC SoLware Stack • Porng on Arm • Evaluaon 2 © 2017 Arm Limited Arm Overview © 2017 Arm Limited An introduc1on to Arm Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990. Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instrucBon set architecture (ISA) such as “ARMv8-A”) or a specific implementaon, such as “Cortex-A72”. …and our IP extends beyond the CPU Partners who license an ISA can create their own implementaon, as long as it passes the compliance tests. 4 © 2017 Arm Limited A partnership business model A business model that shares success Business Development • Everyone in the value chain benefits Arm Licenses technology to Partner • Long term sustainability SemiCo Design once and reuse is fundamental IP Partner Licence fee • Spread the cost amongst many partners Provider • Technology reused across mulBple applicaons Partners develop • Creates market for ecosystem to target chips – Re-use is also fundamental to the ecosystem Royalty Upfront license fee OEM • Covers the development cost Customer Ongoing royalBes OEM sells • Typically based on a percentage of chip price -

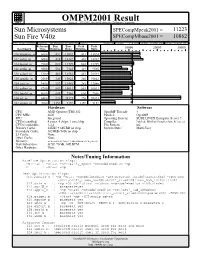

Sun Microsystems

OMPM2001 Result spec Copyright 1999-2002, Standard Performance Evaluation Corporation Sun Microsystems SPECompMpeak2001 = 11223 Sun Fire V40z SPECompMbase2001 = 10862 SPEC license #:HPG0010 Tested by: Sun Microsystems, Santa Clara Test site: Menlo Park Test date: Feb-2005 Hardware Avail:Apr-2005 Software Avail:Apr-2005 Reference Base Base Peak Peak Benchmark Time Runtime Ratio Runtime Ratio 10000 20000 30000 310.wupwise_m 6000 432 13882 431 13924 312.swim_m 6000 424 14142 401 14962 314.mgrid_m 7300 622 11729 622 11729 316.applu_m 4000 536 7463 419 9540 318.galgel_m 5100 483 10561 481 10593 320.equake_m 2600 240 10822 240 10822 324.apsi_m 3400 297 11442 282 12046 326.gafort_m 8700 869 10011 869 10011 328.fma3d_m 4600 608 7566 608 7566 330.art_m 6400 226 28323 226 28323 332.ammp_m 7000 1359 5152 1359 5152 Hardware Software CPU: AMD Opteron (TM) 852 OpenMP Threads: 4 CPU MHz: 2600 Parallel: OpenMP FPU: Integrated Operating System: SUSE LINUX Enterprise Server 9 CPU(s) enabled: 4 cores, 4 chips, 1 core/chip Compiler: PathScale EKOPath Compiler Suite, Release 2.1 CPU(s) orderable: 1,2,4 File System: ufs Primary Cache: 64KBI + 64KBD on chip System State: Multi-User Secondary Cache: 1024KB (I+D) on chip L3 Cache: None Other Cache: None Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered) Disk Subsystem: SCSI, 73GB, 10K RPM Other Hardware: None Notes/Tuning Information Baseline Optimization Flags: Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp C : -Ofast -mp Peak Optimization Flags: 310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3 -

Best Practice Guide - Generic X86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013

Best Practice Guide - Generic x86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013 1 Best Practice Guide - Generic x86 Table of Contents 1. Introduction .............................................................................................................................. 3 2. x86 - Basic Properties ................................................................................................................ 3 2.1. Basic Properties .............................................................................................................. 3 2.2. Simultaneous Multithreading ............................................................................................. 4 3. Programming Environment ......................................................................................................... 5 3.1. Modules ........................................................................................................................ 5 3.2. Compiling ..................................................................................................................... 6 3.2.1. Compilers ........................................................................................................... 6 3.2.2. General Compiler Flags ......................................................................................... 6 3.2.2.1. GCC ........................................................................................................ 6 3.2.2.2. Intel ........................................................................................................ -

Automated Malware Analysis Report for Funny Linux.Elf

ID: 449051 Sample Name: funny_linux.elf Cookbook: defaultlinuxfilecookbook.jbs Time: 05:20:57 Date: 15/07/2021 Version: 33.0.0 White Diamond Table of Contents Table of Contents 2 Linux Analysis Report funny_linux.elf 3 Overview 3 General Information 3 Detection 3 Signatures 3 Classification 3 Analysis Advice 3 General Information 3 Process Tree 3 Yara Overview 3 Jbx Signature Overview 4 Mitre Att&ck Matrix 4 Malware Configuration 4 Behavior Graph 4 Antivirus, Machine Learning and Genetic Malware Detection 5 Initial Sample 5 Dropped Files 5 Domains 5 URLs 5 Domains and IPs 5 Contacted Domains 5 Contacted IPs 5 Runtime Messages 6 Joe Sandbox View / Context 6 IPs 6 Domains 6 ASN 6 JA3 Fingerprints 6 Dropped Files 6 Created / dropped Files 6 Static File Info 6 General 6 Static ELF Info 7 ELF header 7 Sections 7 Program Segments 8 Dynamic Tags 8 Symbols 8 Network Behavior 10 System Behavior 10 Analysis Process: funny_linux.elf PID: 4576 Parent PID: 4498 10 General 10 File Activities 10 File Read 10 Copyright Joe Security LLC 2021 Page 2 of 10 Linux Analysis Report funny_linux.elf Overview General Information Detection Signatures Classification Sample funny_linux.elf Name: SSaampplllee hhaass sstttrrriiippppeedd ssyymbboolll tttaabblllee Analysis ID: 449051 Sample has stripped symbol table MD5: e0ba4089e9b457… Ransomware SHA1: 21b3392a2fdab2a… Miner Spreading SHA256: d2544462756205… mmaallliiiccciiioouusss malicious Evader Phishing Infos: sssuusssppiiiccciiioouusss suspicious cccllleeaann clean Exploiter Banker Spyware Trojan / Bot Adware Score: -

Pathscale ENZO - 2011-04-15 by Vincent - Streamcomputing

PathScale ENZO - 2011-04-15 by vincent - StreamComputing - http://www.streamcomputing.eu PathScale ENZO by vincent – Friday, 15 April 2011 http://www.streamcomputing.eu/blog/2011-04-15/pathscale-enzo/ My todo-list gets too large, because there seem so many things going on in GPGPU-world. Therefore the following article is not really complete, but I hope it gives you an idea of the product. ENZO was presented as the alternative to CUDA and OpenCL. In that light I compared them to Intel Array Building Blocks a few weeks ago, not that their technique is comparable in a technical way. PathScale’s CTO mailed me and tried to explain what ENZO really is. This article consists mainly of what he (Mr. C. Bergström) told me. Any questions you have, I will make sure he receives them. ENZO ENZO is a complete GPGPU solution and ecosystem of tools that provide full support for NVIDIA Tesla (kernel driver, runtime, assembler, code generation, front-end programming model and various other things to make a developer’s life easier). Right now it supports the HMPP C/Fortran programming model. HMPP is an open standard jointly developed by CAPS and PathScale. I’ve mentioned HMPP before, as it can translate Fortran and C-code to OpenCL, CUDA and other languages. ENZO’s implementation differs from HMPP by using native front-ends and does hardware optimised code generation. You can learn ENZO in 5 minutes if you’ve done any OpenMP-like programming in the past. For example the Fortran-code (sorry for the missing indenting): !$hmpp simple codelet, target=TESLA1 -

CPU Function (Defining Location) CPU Time in Seconds

NASA Open|SpeedShop Tutorial Performance Analysis with Open|SpeedShop Contributors: Jim Galarowicz, Bill Hachfeld, Greg Schultz: Argo Navis Technologies Don Maghrak, Patrick Romero: Krell Institute Martin Schulz, Matt Legendre: Lawrence Livermore National Laboratory Jennifer Green, Dave Montoya: Los Alamos National Laboratory Mahesh Rajan, Doug Pase: Sandia National Laboratories Koushik Ghosh, Engility Dyninst group (Bart Miller: UW & Jeff Hollingsworth: UMD) Phil Roth, Mike Brim: ORNL LLNL-PRES-503451 Dec 12, 2016 Outline Introduction to Open|SpeedShop How to run basic timing experiments and what they can do? How to deal with parallelism (MPI and threads)? How to properly use hardware counters? Slightly more advanced targets for analysis How to understand and optimize I/O activity? How to evaluate memory efficiency? How to analyze codes running on GPUs? DIY and Conclusions: DIY and Future trends Hands-on Exercises (after each section) On site cluster available We will provide exercises and test codes Performance Analysis With Open|SpeedShop: NASA Hands-On Tutorial Dec 12, 2016 2 NASA Open|SpeedShop Tutorial Performance Analysis with Open|SpeedShop Section 1 Introduction to Open|SpeedShop Dec 12, 2016 Open|SpeedShop Tool Set Open Source Performance Analysis Tool Framework Most common performance analysis steps all in one tool Combines tracing and sampling techniques Gathers and displays several types of performance information Flexible and Easy to use User access through: GUI, Command Line, Python Scripting, convenience -

2010-2011 Annual Report

Software in the Public Interest, Inc. 2010-2011 Annual Report 1st July 2011 To the membership, board and friends of Software in the Public Interest, Inc: As mandated by Article 8 of the SPI Bylaws, I respectfully submit this annual report on the activities of Software in the Public Interest, Inc. and extend my thanks to all of those who contributed to the mission of SPI in the past year. { Jonathan McDowell, SPI Secretary 1 Contents 1 President's Welcome3 2 Committee Reports4 2.1 Membership Committee.......................4 2.1.1 Statistics...........................4 3 Board Report5 3.1 Board Members............................5 3.2 Board Changes............................6 3.3 Elections................................6 4 Treasurer's Report7 4.1 Income Statement..........................7 4.2 Balance Sheet.............................9 5 Member Project Reports 11 5.1 New Associated Projects....................... 11 5.1.1 LibreOffice.......................... 11 5.1.2 Open64............................ 11 5.1.3 Jenkins............................ 11 5.1.4 ankur.org.in.......................... 12 A About SPI 13 2 Chapter 1 President's Welcome In the time that has passed since I originally agreed to serve as President sev- eral years ago, SPI has made significant and enduring progress resolving long- standing procedural problems that once impeded our ability to meet the basic service expectations of our associated projects. These accomplishments are of course not my own, but the collective result of work contributed by a dedicated core group of volunteers including the rest of our officers and board. A measure of our success is the steady stream of new SPI associated projects in recent years, including those we invited to join us in the last year listed later in this report.