Qlogic Pathscale™ Compiler Suite User Guide

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

CSE 582 – Compilers

CSE P 501 – Compilers Optimizing Transformations Hal Perkins Autumn 2011 11/8/2011 © 2002-11 Hal Perkins & UW CSE S-1 Agenda A sampler of typical optimizing transformations Mostly a teaser for later, particularly once we’ve looked at analyzing loops 11/8/2011 © 2002-11 Hal Perkins & UW CSE S-2 Role of Transformations Data-flow analysis discovers opportunities for code improvement Compiler must rewrite the code (IR) to realize these improvements A transformation may reveal additional opportunities for further analysis & transformation May also block opportunities by obscuring information 11/8/2011 © 2002-11 Hal Perkins & UW CSE S-3 Organizing Transformations in a Compiler Typically middle end consists of many individual transformations that filter the IR and produce rewritten IR No formal theory for order to apply them Some rules of thumb and best practices Some transformations can be profitably applied repeatedly, particularly if others transformations expose more opportunities 11/8/2011 © 2002-11 Hal Perkins & UW CSE S-4 A Taxonomy Machine Independent Transformations Realized profitability may actually depend on machine architecture, but are typically implemented without considering this Machine Dependent Transformations Most of the machine dependent code is in instruction selection & scheduling and register allocation Some machine dependent code belongs in the optimizer 11/8/2011 © 2002-11 Hal Perkins & UW CSE S-5 Machine Independent Transformations Dead code elimination Code motion Specialization Strength reduction -

ICS803 Elective – III Multicore Architecture Teacher Name: Ms

ICS803 Elective – III Multicore Architecture Teacher Name: Ms. Raksha Pandey Course Structure L T P 3 1 0 4 Prerequisite: Course Content: Unit-I: Multi-core Architectures Introduction to multi-core architectures, issues involved into writing code for multi-core architectures, Virtual Memory, VM addressing, VA to PA translation, Page fault, TLB- Parallel computers, Instruction level parallelism (ILP) vs. thread level parallelism (TLP), Performance issues, OpenMP and other message passing libraries, threads, mutex etc. Unit-II: Multi-threaded Architectures Brief introduction to cache hierarchy - Caches: Addressing a Cache, Cache Hierarchy, States of Cache line, Inclusion policy, TLB access, Memory Op latency, MLP, Memory Wall, communication latency, Shared memory multiprocessors, General architectures and the problem of cache coherence, Synchronization primitives: Atomic primitives; locks: TTS, ticket, array; barriers: central and tree; performance implications in shared memory programs; Chip multiprocessors: Why CMP (Moore's law, wire delay); shared L2 vs. tiled CMP; core complexity; power/performance; Snoopy coherence: invalidate vs. update, MSI, MESI, MOESI, MOSI; performance trade-offs; pipelined snoopy bus design; Memory consistency models: SC, PC, TSO, PSO, WO/WC, RC; Chip multiprocessor case studies: Intel Montecito and dual-core, Pentium4, IBM Power4, Sun Niagara Unit-III: Compiler Optimization Issues Code optimizations: Copy Propagation, dead Code elimination , Loop Optimizations-Loop Unrolling, Induction variable Simplification, Loop Jamming, Loop Unswitching, Techniques to improve detection of parallelism: Scalar Processors, Special locality, Temporal locality, Vector machine, Strip mining, Shared memory model, SIMD architecture, Dopar loop, Dosingle loop. Unit-IV: Control Flow analysis Control flow analysis, Flow graph, Loops in Flow graphs, Loop Detection, Approaches to Control Flow Analysis, Reducible Flow Graphs, Node Splitting. -

Fast-Path Loop Unrolling of Non-Counted Loops to Enable Subsequent Compiler Optimizations∗

Fast-Path Loop Unrolling of Non-Counted Loops to Enable Subsequent Compiler Optimizations∗ David Leopoldseder Roland Schatz Lukas Stadler Johannes Kepler University Linz Oracle Labs Oracle Labs Austria Linz, Austria Linz, Austria [email protected] [email protected] [email protected] Manuel Rigger Thomas Würthinger Hanspeter Mössenböck Johannes Kepler University Linz Oracle Labs Johannes Kepler University Linz Austria Zurich, Switzerland Austria [email protected] [email protected] [email protected] ABSTRACT Non-Counted Loops to Enable Subsequent Compiler Optimizations. In 15th Java programs can contain non-counted loops, that is, loops for International Conference on Managed Languages & Runtimes (ManLang’18), September 12–14, 2018, Linz, Austria. ACM, New York, NY, USA, 13 pages. which the iteration count can neither be determined at compile https://doi.org/10.1145/3237009.3237013 time nor at run time. State-of-the-art compilers do not aggressively optimize them, since unrolling non-counted loops often involves 1 INTRODUCTION duplicating also a loop’s exit condition, which thus only improves run-time performance if subsequent compiler optimizations can Generating fast machine code for loops depends on a selective appli- optimize the unrolled code. cation of different loop optimizations on the main optimizable parts This paper presents an unrolling approach for non-counted loops of a loop: the loop’s exit condition(s), the back edges and the loop that uses simulation at run time to determine whether unrolling body. All these parts must be optimized to generate optimal code such loops enables subsequent compiler optimizations. Simulat- for a loop. -

Compiler Optimization for Configurable Accelerators Betul Buyukkurt Zhi Guo Walid A

Compiler Optimization for Configurable Accelerators Betul Buyukkurt Zhi Guo Walid A. Najjar University of California Riverside University of California Riverside University of California Riverside Computer Science Department Electrical Engineering Department Computer Science Department Riverside, CA 92521 Riverside, CA 92521 Riverside, CA 92521 [email protected] [email protected] [email protected] ABSTRACT such as constant propagation, constant folding, dead code ROCCC (Riverside Optimizing Configurable Computing eliminations that enables other optimizations. Then, loop Compiler) is an optimizing C to HDL compiler targeting level optimizations such as loop unrolling, loop invariant FPGA and CSOC (Configurable System On a Chip) code motion, loop fusion and other such transforms are architectures. ROCCC system is built on the SUIF- applied. MACHSUIF compiler infrastructure. Our system first This paper is organized as follows. Next section discusses identifies frequently executed kernel loops inside programs on the related work. Section 3 gives an in depth description and then compiles them to VHDL after optimizing the of the ROCCC system. SUIF2 level optimizations are kernels to make best use of FPGA resources. This paper described in Section 4 followed by the compilation done at presents an overview of the ROCCC project as well as the MACHSUIF level in Section 5. Section 6 mentions our optimizations performed inside the ROCCC compiler. early results. Finally Section 7 concludes the paper. 1. INTRODUCTION 2. RELATED WORK FPGAs (Field Programmable Gate Array) can boost There are several projects and publications on translating C software performance due to the large amount of or other high level languages to different HDLs. Streams- parallelism they offer. -

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result spec Copyright 1999-2004, Standard Performance Evaluation Corporation Sun Microsystems SPECfp2000 = 1787 Sun Java Workstation W1100z SPECfp_base2000 = 1637 SPEC license #: 6 Tested by: Sun Microsystems, Santa Clara Test date: Jul-2004 Hardware Avail: Jul-2004 Software Avail: Jul-2004 Reference Base Base Benchmark Time Runtime Ratio Runtime Ratio 1000 2000 3000 4000 168.wupwise 1600 92.3 1733 71.8 2229 171.swim 3100 134 2310 123 2519 172.mgrid 1800 124 1447 103 1755 173.applu 2100 150 1402 133 1579 177.mesa 1400 76.1 1839 70.1 1998 178.galgel 2900 104 2789 94.4 3073 179.art 2600 146 1786 106 2464 183.equake 1300 93.7 1387 89.1 1460 187.facerec 1900 80.1 2373 80.1 2373 188.ammp 2200 158 1397 154 1425 189.lucas 2000 121 1649 122 1637 191.fma3d 2100 135 1552 135 1552 200.sixtrack 1100 148 742 148 742 301.apsi 2600 170 1529 168 1549 Hardware Software CPU: AMD Opteron (TM) 150 Operating System: Red Hat Enterprise Linux WS 3 (AMD64) CPU MHz: 2400 Compiler: PathScale EKO Compiler Suite, Release 1.1 FPU: Integrated Red Hat gcc 3.5 ssa (from RHEL WS 3) PGI Fortran 5.2 (build 5.2-0E) CPU(s) enabled: 1 core, 1 chip, 1 core/chip AMD Core Math Library (Version 2.0) for AMD64 CPU(s) orderable: 1 File System: Linux/ext3 Parallel: No System State: Multi-user, Run level 3 Primary Cache: 64KBI + 64KBD on chip Secondary Cache: 1024KB (I+D) on chip L3 Cache: N/A Other Cache: N/A Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered Disk Subsystem: IDE, 80GB, 7200RPM Other Hardware: None Notes/Tuning Information A two-pass compilation method is -

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview What are libraries for? ● Building blocks for writing scientific applications ● Historically – allowed the first forms of code re-use ● Later – became ways of running optimized code ● Today the complexity of the hardware is very high ● The Cray PE insulates users from this complexity • Cray module environment • CCE • Performance tools • Tuned MPI libraries (+PGAS) • Optimized Scientific libraries Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort. Scientific libraries on XC – functional view FFT Sparse Dense Trilinos BLAS FFTW LAPACK PETSc ScaLAPACK CRAFFT CASK IRT What makes Cray libraries special 1. Node performance ● Highly tuned routines at the low-level (ex. BLAS) 2. Network performance ● Optimized for network performance ● Overlap between communication and computation ● Use the best available low-level mechanism ● Use adaptive parallel algorithms 3. Highly adaptive software ● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime 4. Productivity features ● Simple interfaces into complex software LibSci usage ● LibSci ● The drivers should do it all for you – no need to explicitly link ● For threads, set OMP_NUM_THREADS ● Threading is used within LibSci ● If you call within a parallel region, single thread used ● FFTW ● module load fftw (there are also wisdom files available) ● PETSc ● module load petsc (or module load petsc-complex) ● Use as you would your normal PETSc build ● Trilinos ● -

Functional Array Programming in Sac

Functional Array Programming in SaC Sven-Bodo Scholz Dept of Computer Science, University of Hertfordshire, United Kingdom [email protected] Abstract. These notes present an introduction into array-based pro- gramming from a functional, i.e., side-effect-free perspective. The first part focuses on promoting arrays as predominant, stateless data structure. This leads to a programming style that favors compo- sitions of generic array operations that manipulate entire arrays over specifications that are made in an element-wise fashion. An algebraicly consistent set of such operations is defined and several examples are given demonstrating the expressiveness of the proposed set of operations. The second part shows how such a set of array operations can be defined within the first-order functional array language SaC.Itdoes not only discuss the language design issues involved but it also tackles implementation issues that are crucial for achieving acceptable runtimes from such genericly specified array operations. 1 Introduction Traditionally, binary lists and algebraic data types are the predominant data structures in functional programming languages. They fit nicely into the frame- work of recursive program specifications, lazy evaluation and demand driven garbage collection. Support for arrays in most languages is confined to a very resricted set of basic functionality similar to that found in imperative languages. Even if some languages do support more advanced specificational means such as array comprehensions, these usualy do not provide the same genericity as can be found in array programming languages such as Apl,J,orNial. Besides these specificational issues, typically, there is also a performance issue. -

Loop Transformations and Parallelization

Loop transformations and parallelization Claude Tadonki LAL/CNRS/IN2P3 University of Paris-Sud [email protected] December 2010 Claude Tadonki Loop transformations and parallelization C. Tadonki – Loop transformations Introduction Most of the time, the most time consuming part of a program is on loops. Thus, loops optimization is critical in high performance computing. Depending on the target architecture, the goal of loops transformations are: improve data reuse and data locality efficient use of memory hierarchy reducing overheads associated with executing loops instructions pipeline maximize parallelism Loop transformations can be performed at different levels by the programmer, the compiler, or specialized tools. At high level, some well known transformations are commonly considered: loop interchange loop (node) splitting loop unswitching loop reversal loop fusion loop inversion loop skewing loop fission loop vectorization loop blocking loop unrolling loop parallelization Claude Tadonki Loop transformations and parallelization C. Tadonki – Loop transformations Dependence analysis Extract and analyze the dependencies of a computation from its polyhedral model is a fundamental step toward loop optimization or scheduling. Definition For a given variable V and given indexes I1, I2, if the computation of X(I1) requires the value of X(I2), then I1 ¡ I2 is called a dependence vector for variable V . Drawing all the dependence vectors within the computation polytope yields the so-called dependencies diagram. Example The dependence vectors are (1; 0); (0; 1); (¡1; 1). Claude Tadonki Loop transformations and parallelization C. Tadonki – Loop transformations Scheduling Definition The computation on the entire domain of a given loop can be performed following any valid schedule.A timing function tV for variable V yields a valid schedule if and only if t(x) > t(x ¡ d); 8d 2 DV ; (1) where DV is the set of all dependence vectors for variable V . -

Introduchon to Arm for Network Stack Developers

Introducon to Arm for network stack developers Pavel Shamis/Pasha Principal Research Engineer Mvapich User Group 2017 © 2017 Arm Limited Columbus, OH Outline • Arm Overview • HPC SoLware Stack • Porng on Arm • Evaluaon 2 © 2017 Arm Limited Arm Overview © 2017 Arm Limited An introduc1on to Arm Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990. Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instrucBon set architecture (ISA) such as “ARMv8-A”) or a specific implementaon, such as “Cortex-A72”. …and our IP extends beyond the CPU Partners who license an ISA can create their own implementaon, as long as it passes the compliance tests. 4 © 2017 Arm Limited A partnership business model A business model that shares success Business Development • Everyone in the value chain benefits Arm Licenses technology to Partner • Long term sustainability SemiCo Design once and reuse is fundamental IP Partner Licence fee • Spread the cost amongst many partners Provider • Technology reused across mulBple applicaons Partners develop • Creates market for ecosystem to target chips – Re-use is also fundamental to the ecosystem Royalty Upfront license fee OEM • Covers the development cost Customer Ongoing royalBes OEM sells • Typically based on a percentage of chip price -

Compiler-Based Code-Improvement Techniques

Compiler-Based Code-Improvement Techniques KEITH D. COOPER, KATHRYN S. MCKINLEY, and LINDA TORCZON Since the earliest days of compilation, code quality has been recognized as an important problem [18]. A rich literature has developed around the issue of improving code quality. This paper surveys one part of that literature: code transformations intended to improve the running time of programs on uniprocessor machines. This paper emphasizes transformations intended to improve code quality rather than analysis methods. We describe analytical techniques and specific data-flow problems to the extent that they are necessary to understand the transformations. Other papers provide excellent summaries of the various sub-fields of program analysis. The paper is structured around a simple taxonomy that classifies transformations based on how they change the code. The taxonomy is populated with example transformations drawn from the literature. Each transformation is described at a depth that facilitates broad understanding; detailed references are provided for deeper study of individual transformations. The taxonomy provides the reader with a framework for thinking about code-improving transformations. It also serves as an organizing principle for the paper. Copyright 1998, all rights reserved. You may copy this article for your personal use in Comp 512. Further reproduction or distribution requires written permission from the authors. 1INTRODUCTION This paper presents an overview of compiler-based methods for improving the run-time behavior of programs — often mislabeled code optimization. These techniques have a long history in the literature. For example, Backus makes it quite clear that code quality was a major concern to the implementors of the first Fortran compilers [18]. -

Survey of Compiler Technology at the IBM Toronto Laboratory

An (incomplete) Survey of Compiler Technology at the IBM Toronto Laboratory Bob Blainey March 26, 2002 Target systems Sovereign (Sun JDK-based) Just-in-Time (JIT) Compiler zSeries (S/390) OS/390, Linux Resettable, shareable pSeries (PowerPC) AIX 32-bit and 64-bit Linux xSeries (x86 or IA-32) Windows, OS/2, Linux, 4690 (POS) IA-64 (Itanium, McKinley) Windows, Linux C and C++ Compilers zSeries OS/390 pSeries AIX Fortran Compiler pSeries AIX Key Optimizing Compiler Components TOBEY (Toronto Optimizing Back End with Yorktown) Highly optimizing code generator for S/390 and PowerPC targets TPO (Toronto Portable Optimizer) Mostly machine-independent optimizer for Wcode intermediate language Interprocedural analysis, loop transformations, parallelization Sun JDK-based JIT (Sovereign) Best of breed JIT compiler for client and server applications Based very loosely on Sun JDK Inside a Batch Compilation C source C++ source Fortran source Other source C++ Front Fortran C Front End Other Front End Front End Ends Wcode Wcode Wcode++ Wcode Wcode TPO Wcode Scalarizer Wcode TOBEY Wcode Back End Object Code TOBEY Optimizing Back End Project started in 1983 targetting S/370 Later retargetted to ROMP (PC-RT), Power, Power2, PowerPC, SPARC, and ESAME/390 (64 bit) Experimental retargets to i386 and PA-RISC Shipped in over 40 compiler products on 3 different platforms with 8 different source languages Primary vehicle for compiler optimization since the creation of the RS/6000 (pSeries) Implemented in a combination of PL.8 ("80% of PL/I") and C++ on an AIX reference -

Sun Microsystems

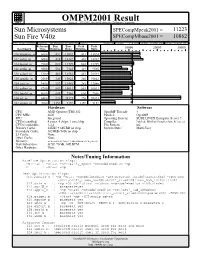

OMPM2001 Result spec Copyright 1999-2002, Standard Performance Evaluation Corporation Sun Microsystems SPECompMpeak2001 = 11223 Sun Fire V40z SPECompMbase2001 = 10862 SPEC license #:HPG0010 Tested by: Sun Microsystems, Santa Clara Test site: Menlo Park Test date: Feb-2005 Hardware Avail:Apr-2005 Software Avail:Apr-2005 Reference Base Base Peak Peak Benchmark Time Runtime Ratio Runtime Ratio 10000 20000 30000 310.wupwise_m 6000 432 13882 431 13924 312.swim_m 6000 424 14142 401 14962 314.mgrid_m 7300 622 11729 622 11729 316.applu_m 4000 536 7463 419 9540 318.galgel_m 5100 483 10561 481 10593 320.equake_m 2600 240 10822 240 10822 324.apsi_m 3400 297 11442 282 12046 326.gafort_m 8700 869 10011 869 10011 328.fma3d_m 4600 608 7566 608 7566 330.art_m 6400 226 28323 226 28323 332.ammp_m 7000 1359 5152 1359 5152 Hardware Software CPU: AMD Opteron (TM) 852 OpenMP Threads: 4 CPU MHz: 2600 Parallel: OpenMP FPU: Integrated Operating System: SUSE LINUX Enterprise Server 9 CPU(s) enabled: 4 cores, 4 chips, 1 core/chip Compiler: PathScale EKOPath Compiler Suite, Release 2.1 CPU(s) orderable: 1,2,4 File System: ufs Primary Cache: 64KBI + 64KBD on chip System State: Multi-User Secondary Cache: 1024KB (I+D) on chip L3 Cache: None Other Cache: None Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered) Disk Subsystem: SCSI, 73GB, 10K RPM Other Hardware: None Notes/Tuning Information Baseline Optimization Flags: Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp C : -Ofast -mp Peak Optimization Flags: 310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3