Introduction to Arm for Network Stack Developers

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

ARM HPC Ecosystem

Darren Cepulis, HPC Segment Manager, ARM Business Segment Group. HPC Forum, Santa Fe, NM, 19th April 2017. © ARM 2017

ARM Collaboration for Exascale Programs
United States: ARM is currently a participant in two Department of Energy funded pre-Exascale projects: Data Movement Dominates and Fast Forward 2.
Japan: Fujitsu and RIKEN announced that the Post-K system targeted at Exascale will be based on ARMv8 with new Scalable Vector Extensions.
European Union: Through FP7 and Horizon 2020, ARM has been involved in several funded pre-Exascale projects, including the Mont Blanc program, which deployed one of the first ARM prototype HPC systems.
China: James Lin, vice director for the Center of HPC at Shanghai Jiao Tong University, claims China will build three pre-Exascale prototypes to select the architecture for their Exascale system. The three prototypes are based on AMD, Sunway TaihuLight, and ARMv8.

ARM HPC deployments starting in 2H2017
Two recent announcements about ARM in HPC in Europe.

Japan Exascale slides from Fujitsu at ISC’16

Foundational SW Ecosystem for HPC (commercial and open-source; predictable baseline)
Linux OSs: RedHat, SUSE, CentOS, Ubuntu, …
Compilers: ARM, GNU, LLVM, …
Libraries: ARM, OpenBLAS, BLIS, ATLAS, FFTW, …
Parallelism: OpenMP, OpenMPI, MVAPICH2, …
Debugging: Allinea, RW Totalview, GDB, …
Analysis: ARM, Allinea, HPCToolkit, TAU, …
Job schedulers: LSF, PBS Pro, SLURM, …
Cluster mgmt: Bright, CMU, Warewulf, …

Now on ARM
OpenHPC defines a baseline. It is a community effort to provide a common, verified set of open source packages for HPC deployments.
Functional areas and supported packages / components:
Base OS: RHEL/CentOS 7.1, SLES 12
Administrative Tools: Conman, Ganglia, Lmod, LosF, ORCM, Nagios, pdsh, prun
Provisioning: Warewulf
Resource Mgmt.: …
ARM’s participation: …

BLIS: A Modern Alternative to the BLAS

FIELD G. VAN ZEE and ROBERT A. VAN DE GEIJN, The University of Texas at Austin. We propose the portable BLAS-like Interface Software (BLIS) framework which addresses a number of shortcomings in both the original BLAS interface and present-day BLAS implementations. The framework allows developers to rapidly instantiate high-performance BLAS-like libraries on existing and new architectures with relatively little effort. The key to this achievement is the observation that virtually all computation within level-2 and level-3 BLAS operations may be expressed in terms of very simple kernels. Higher-level framework code is generalized so that it can be reused and/or re-parameterized for different operations (as well as different architectures) with little to no modification. Inserting high-performance kernels into the framework facilitates the immediate optimization of any and all BLAS-like operations which are cast in terms of these kernels, and thus the framework acts as a productivity multiplier. Users of BLAS-dependent applications are supported through a straightforward compatibility layer, though calling sequences must be updated for those who wish to access new functionality. Experimental performance of level-2 and level-3 operations is observed to be competitive with two mature open source libraries (OpenBLAS and ATLAS) as well as an established commercial product (Intel MKL). Categories and Subject Descriptors: G.4 [Mathematical Software]: Efficiency. General Terms: Algorithms, Performance. Additional Key Words and Phrases: linear algebra, libraries, high-performance, matrix, BLAS. ACM Reference Format: ACM Trans. Math. Softw. 0, 0, Article 0 (0000), 31 pages. …

Restructuring the Tridiagonal and Bidiagonal QR Algorithms for Performance

FIELD G. VAN ZEE and ROBERT A. VAN DE GEIJN, The University of Texas at Austin; GREGORIO QUINTANA-ORTÍ, Universitat Jaume I. We show how both the tridiagonal and bidiagonal QR algorithms can be restructured so that they become rich in operations that can achieve near-peak performance on a modern processor. The key is a novel, cache-friendly algorithm for applying multiple sets of Givens rotations to the eigenvector/singular vector matrix. This algorithm is then implemented with optimizations that: (1) leverage vector instruction units to increase floating-point throughput, and (2) fuse multiple rotations to decrease the total number of memory operations. We demonstrate the merits of these new QR algorithms for computing the Hermitian eigenvalue decomposition (EVD) and singular value decomposition (SVD) of dense matrices when all eigenvectors/singular vectors are computed. The approach yields vastly improved performance relative to traditional QR algorithms for these problems and is competitive with two commonly used alternatives (Cuppen’s Divide-and-Conquer algorithm and the method of Multiple Relatively Robust Representations) while inheriting the more modest O(n) workspace requirements of the original QR algorithms. Since the computations performed by the restructured algorithms remain essentially identical to those performed by the original methods, robust numerical properties are preserved. Categories and Subject Descriptors: G.4 [Mathematical Software]: Efficiency. General Terms: Algorithms, Performance. Additional Key Words and Phrases: Eigenvalues, singular values, tridiagonal, bidiagonal, EVD, SVD, QR algorithm, Givens rotations, linear algebra, libraries, high performance. ACM Reference Format: Van Zee, F. …

BLIS: A Framework for Rapidly Instantiating BLAS Functionality

FIELD G. VAN ZEE and ROBERT A. VAN DE GEIJN, The University of Texas at Austin. The BLAS-like Library Instantiation Software (BLIS) framework is a new infrastructure for rapidly instantiating Basic Linear Algebra Subprograms (BLAS) functionality. Its fundamental innovation is that virtually all computation within level-2 (matrix-vector) and level-3 (matrix-matrix) BLAS operations can be expressed and optimized in terms of very simple kernels. While others have had similar insights, BLIS reduces the necessary kernels to what we believe is the simplest set that still supports the high performance that the computational science community demands. Higher-level framework code is generalized and implemented in ISO C99 so that it can be reused and/or re-parameterized for different operations (and different architectures) with little to no modification. Inserting high-performance kernels into the framework facilitates the immediate optimization of any BLAS-like operations which are cast in terms of these kernels, and thus the framework acts as a productivity multiplier. Users of BLAS-dependent applications are given a choice of using the traditional Fortran-77 BLAS interface, a generalized C interface, or any other higher level interface that builds upon this latter API. Preliminary performance of level-2 and level-3 operations is observed to be competitive with two mature open source libraries (OpenBLAS and ATLAS) as well as an established commercial product (Intel MKL). Categories and Subject Descriptors: G.4 [Mathematical Software]: Efficiency. General Terms: Algorithms, Performance. Additional Key Words and Phrases: linear algebra, libraries, high-performance, matrix, BLAS. ACM Reference Format: ACM Trans. …

DD2358 – Introduction to HPC Linear Algebra Libraries & BLAS

Stefano Markidis, KTH Royal Institute of Technology. 2021-02-22

After this lecture, you will be able to
• Understand the importance of numerical libraries in HPC
• List a series of key numerical libraries including BLAS
• Describe which kind of operations BLAS supports
• Experiment with OpenBLAS and perform a matrix-matrix multiply using BLAS

Numerical Libraries are the Foundation for Application Developments
• While these applications are used in a wide variety of very different disciplines, their underlying computational algorithms are very similar to one another.
• Application developers do not have to waste time redeveloping supercomputing software that has already been developed elsewhere.
• Libraries targeting numerical linear algebra operations are the most common, given the ubiquity of linear algebra in scientific computing algorithms.

Libraries are Tuned for Performance
• Numerical libraries have been highly tuned for performance, often for more than a decade
  – This makes it difficult for the application developer to match a library’s performance using a homemade equivalent.
• Libraries are relatively easy to use and offer highly tuned performance across a wide range of HPC platforms
  – As a result, the use of scientific computing libraries as software dependencies in computational science applications has become widespread.

HPC Community Standards
• Apart from acting as a repository for software reuse, libraries serve the important role of providing a …

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result. Copyright 1999-2004, Standard Performance Evaluation Corporation

Sun Microsystems, Sun Java Workstation W1100z
SPECfp2000 = 1787
SPECfp_base2000 = 1637
SPEC license #: 6. Tested by: Sun Microsystems, Santa Clara. Test date: Jul-2004. Hardware Avail: Jul-2004. Software Avail: Jul-2004

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 168.wupwise | 1600 | 92.3 | 1733 | 71.8 | 2229 |
| 171.swim | 3100 | 134 | 2310 | 123 | 2519 |
| 172.mgrid | 1800 | 124 | 1447 | 103 | 1755 |
| 173.applu | 2100 | 150 | 1402 | 133 | 1579 |
| 177.mesa | 1400 | 76.1 | 1839 | 70.1 | 1998 |
| 178.galgel | 2900 | 104 | 2789 | 94.4 | 3073 |
| 179.art | 2600 | 146 | 1786 | 106 | 2464 |
| 183.equake | 1300 | 93.7 | 1387 | 89.1 | 1460 |
| 187.facerec | 1900 | 80.1 | 2373 | 80.1 | 2373 |
| 188.ammp | 2200 | 158 | 1397 | 154 | 1425 |
| 189.lucas | 2000 | 121 | 1649 | 122 | 1637 |
| 191.fma3d | 2100 | 135 | 1552 | 135 | 1552 |
| 200.sixtrack | 1100 | 148 | 742 | 148 | 742 |
| 301.apsi | 2600 | 170 | 1529 | 168 | 1549 |

Hardware
CPU: AMD Opteron (TM) 150
CPU MHz: 2400
FPU: Integrated
CPU(s) enabled: 1 core, 1 chip, 1 core/chip
CPU(s) orderable: 1
Parallel: No
Primary Cache: 64KBI + 64KBD on chip
Secondary Cache: 1024KB (I+D) on chip
L3 Cache: N/A
Other Cache: N/A
Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered
Disk Subsystem: IDE, 80GB, 7200RPM
Other Hardware: None

Software
Operating System: Red Hat Enterprise Linux WS 3 (AMD64)
Compiler: PathScale EKO Compiler Suite, Release 1.1; Red Hat gcc 3.5 ssa (from RHEL WS 3); PGI Fortran 5.2 (build 5.2-0E); AMD Core Math Library (Version 2.0) for AMD64
File System: Linux/ext3
System State: Multi-user, Run level 3

Notes/Tuning Information
A two-pass compilation method is …

Cray Scientific Libraries Overview

What are libraries for?
● Building blocks for writing scientific applications
● Historically – allowed the first forms of code re-use
● Later – became ways of running optimized code
● Today the complexity of the hardware is very high
● The Cray PE insulates users from this complexity
  • Cray module environment
  • CCE
  • Performance tools
  • Tuned MPI libraries (+PGAS)
  • Optimized Scientific libraries

Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort.

Scientific libraries on XC – functional view
FFT: FFTW, CRAFFT
Sparse: Trilinos, PETSc, CASK
Dense: BLAS, LAPACK, ScaLAPACK, IRT

What makes Cray libraries special
1. Node performance
● Highly tuned routines at the low-level (ex. BLAS)
2. Network performance
● Optimized for network performance
● Overlap between communication and computation
● Use the best available low-level mechanism
● Use adaptive parallel algorithms
3. Highly adaptive software
● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime
4. Productivity features
● Simple interfaces into complex software

LibSci usage
● LibSci
  • The drivers should do it all for you – no need to explicitly link
  • For threads, set OMP_NUM_THREADS
  • Threading is used within LibSci
  • If you call within a parallel region, single thread used
● FFTW
  • module load fftw (there are also wisdom files available)
● PETSc
  • module load petsc (or module load petsc-complex)
  • Use as you would your normal PETSc build
● Trilinos
  • …

The BLAS API of BLASFEO: Optimizing Performance for Small Matrices

Gianluca Frison¹, Tommaso Sartor¹, Andrea Zanelli¹, Moritz Diehl¹,². University of Freiburg, ¹ Department of Microsystems Engineering (IMTEK), ² Department of Mathematics. February 5, 2020. This research was supported by the German Federal Ministry for Economic Affairs and Energy (BMWi) via eco4wind (0324125B) and DyConPV (0324166B), and by DFG via Research Unit FOR 2401. Abstract: BLASFEO is a dense linear algebra library providing high-performance implementations of BLAS- and LAPACK-like routines for use in embedded optimization and other applications targeting relatively small matrices. BLASFEO defines an API which uses a packed matrix format as its native format. This format is analogous to the internal memory buffers of optimized BLAS, but it is exposed to the user and it removes the packing cost from the routine call. For matrices fitting in cache, BLASFEO outperforms optimized BLAS implementations, both open-source and proprietary. This paper investigates the addition of a standard BLAS API to the BLASFEO framework, and proposes an implementation switching between two or more algorithms optimized for different matrix sizes. Thanks to the modular assembly framework in BLASFEO, tailored linear algebra kernels with mixed column- and panel-major arguments are easily developed. This BLAS API has lower performance than the BLASFEO API, but it nonetheless outperforms optimized BLAS and especially LAPACK libraries for matrices fitting in cache. Therefore, it can boost a wide range of applications, where standard BLAS and LAPACK libraries are employed and the matrix size is moderate. In particular, this paper investigates the benefits in scientific programming languages such as Octave, SciPy and Julia. …

Accelerating the “Motifs” in Machine Learning on Modern Processors

Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical and Computer Engineering. Jiyuan Zhang, B.S., Electrical Engineering, Harbin Institute of Technology. Carnegie Mellon University, Pittsburgh, PA. December 2020. © Jiyuan Zhang, 2020. All Rights Reserved. Acknowledgments: First and foremost, I would like to thank my advisor, Franz. Thank you for being a supportive and understanding advisor. You encouraged me to pursue projects I enjoyed and guided me to be the researcher that I am today. When I was stuck on a difficult problem, you were always there to offer help and stand side by side with me to figure out solutions. No matter what the difficulties were, from research to personal life issues, you could always put yourself in our shoes and provide us your unreserved support. Your encouragement and sense of humor have been a great help in guiding me through the difficult times. You were the key enabler for me to accomplish the PhD journey, and you made that journey less painful and more joyful. Next, I would like to thank the members of my thesis committee: Dr. Michael Garland, Dr. Phil Gibbons, and Dr. Tze Meng Low. Thank you for taking the time to be my thesis committee. Your questions and suggestions have greatly helped shape this work, and your insights have helped me understand the limitations that I would have overlooked in the original draft. I am grateful for the advice and suggestions I have received from you to improve this dissertation. …

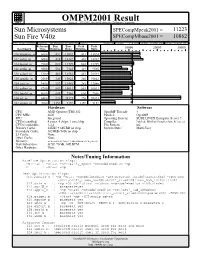

Sun Microsystems

OMPM2001 Result. Copyright 1999-2002, Standard Performance Evaluation Corporation

Sun Microsystems, Sun Fire V40z
SPECompMpeak2001 = 11223
SPECompMbase2001 = 10862
SPEC license #: HPG0010. Tested by: Sun Microsystems, Santa Clara. Test site: Menlo Park. Test date: Feb-2005. Hardware Avail: Apr-2005. Software Avail: Apr-2005

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 310.wupwise_m | 6000 | 432 | 13882 | 431 | 13924 |
| 312.swim_m | 6000 | 424 | 14142 | 401 | 14962 |
| 314.mgrid_m | 7300 | 622 | 11729 | 622 | 11729 |
| 316.applu_m | 4000 | 536 | 7463 | 419 | 9540 |
| 318.galgel_m | 5100 | 483 | 10561 | 481 | 10593 |
| 320.equake_m | 2600 | 240 | 10822 | 240 | 10822 |
| 324.apsi_m | 3400 | 297 | 11442 | 282 | 12046 |
| 326.gafort_m | 8700 | 869 | 10011 | 869 | 10011 |
| 328.fma3d_m | 4600 | 608 | 7566 | 608 | 7566 |
| 330.art_m | 6400 | 226 | 28323 | 226 | 28323 |
| 332.ammp_m | 7000 | 1359 | 5152 | 1359 | 5152 |

Hardware
CPU: AMD Opteron (TM) 852
CPU MHz: 2600
FPU: Integrated
CPU(s) enabled: 4 cores, 4 chips, 1 core/chip
CPU(s) orderable: 1,2,4
Primary Cache: 64KBI + 64KBD on chip
Secondary Cache: 1024KB (I+D) on chip
L3 Cache: None
Other Cache: None
Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered)
Disk Subsystem: SCSI, 73GB, 10K RPM
Other Hardware: None

Software
OpenMP Threads: 4
Parallel: OpenMP
Operating System: SUSE LINUX Enterprise Server 9
Compiler: PathScale EKOPath Compiler Suite, Release 2.1
File System: ufs
System State: Multi-User

Notes/Tuning Information
Baseline Optimization Flags:
Fortran: -Ofast -OPT:early_mp=on -mcmodel=medium -mp
C: -Ofast -mp
Peak Optimization Flags:
310.wupwise_m: -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3 …

Best Practice Guide - Generic x86

Vegard Eide, NTNU; Nikos Anastopoulos, GRNET; Henrik Nagel, NTNU. 02-05-2013

Table of Contents
1. Introduction
2. x86 - Basic Properties
2.1. Basic Properties
2.2. Simultaneous Multithreading
3. Programming Environment
3.1. Modules
3.2. Compiling
3.2.1. Compilers
3.2.2. General Compiler Flags
3.2.2.1. GCC
3.2.2.2. Intel
…

Algorithms and Optimization Techniques for High-Performance Matrix-Matrix Multiplications of Very Small Matrices

I. Masliah (c), A. Abdelfattah (a), A. Haidar (a), S. Tomov (a), M. Baboulin (b), J. Falcou (b), J. Dongarra (a, d). (a) Innovative Computing Laboratory, University of Tennessee, Knoxville, TN, USA; (b) University of Paris-Sud, France; (c) Inria Bordeaux, France; (d) University of Manchester, Manchester, UK. Preprint submitted to Journal of Parallel Computing, September 25, 2018. Abstract: Expressing scientific computations in terms of BLAS, and in particular the general dense matrix-matrix multiplication (GEMM), is of fundamental importance for obtaining high performance portability across architectures. However, GEMMs for small matrices of sizes smaller than 32 are not sufficiently optimized in existing libraries. We consider the computation of many small GEMMs and its performance portability for a wide range of computer architectures, including Intel CPUs, ARM, IBM, Intel Xeon Phi, and GPUs. These computations often occur in applications like big data analytics, machine learning, high-order finite element methods (FEM), and others. The GEMMs are grouped together in a single batched routine. For these cases, we present algorithms and their optimization techniques that are specialized for the matrix sizes and architectures of interest. We derive a performance model and show that the new developments can be tuned to obtain performance that is within 90% of the optimal for any of the architectures of interest. For example, on a V100 GPU for square matrices of size 32, we achieve an execution rate of about 1,600 gigaFLOP/s in double-precision arithmetic, which is 95% of the theoretically derived peak for this computation on a V100 GPU. We also show that these results outperform currently available state-of-the-art implementations such as vendor-tuned math libraries, including Intel MKL and NVIDIA CUBLAS, as well as open-source libraries like OpenBLAS and Eigen.
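To make the idea of grouping many small GEMMs into one batched routine concrete, the following is a minimal reference sketch in plain Python. It only illustrates what such a call computes, namely C_i = alpha * A_i * B_i + beta * C_i for every matrix triple in the batch. It is not the algorithm from the paper above and makes no attempt at performance. The function names `gemm` and `batched_gemm`, and the row-major nested-list layout, are assumptions chosen for illustration.

```python
from typing import List

Matrix = List[List[float]]


def gemm(alpha: float, a: Matrix, b: Matrix, beta: float, c: Matrix) -> Matrix:
    """Reference GEMM: return alpha * (a @ b) + beta * c (hypothetical helper)."""
    m, k, n = len(a), len(b), len(b[0])
    # Check that a is m x k, b is k x n, and c is m x n.
    if any(len(row) != k for row in a) or any(len(row) != n for row in b):
        raise ValueError("inner dimensions of a and b do not match")
    if len(c) != m or any(len(row) != n for row in c):
        raise ValueError("c must have shape m x n")
    return [
        [
            alpha * sum(a[i][p] * b[p][j] for p in range(k)) + beta * c[i][j]
            for j in range(n)
        ]
        for i in range(m)
    ]


def batched_gemm(
    alpha: float,
    a_batch: List[Matrix],
    b_batch: List[Matrix],
    beta: float,
    c_batch: List[Matrix],
) -> List[Matrix]:
    """Apply the same GEMM to every (A_i, B_i, C_i) triple in the batch."""
    if not (len(a_batch) == len(b_batch) == len(c_batch)):
        raise ValueError("all batches must contain the same number of matrices")
    return [gemm(alpha, a, b, beta, c) for a, b, c in zip(a_batch, b_batch, c_batch)]


# Two independent 2 x 2 problems handled by one batched call.
a_batch = [[[1.0, 2.0], [3.0, 4.0]], [[0.0, 1.0], [1.0, 0.0]]]
b_batch = [[[5.0, 6.0], [7.0, 8.0]], [[2.0, 3.0], [4.0, 5.0]]]
c_batch = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]
result = batched_gemm(1.0, a_batch, b_batch, 1.0, c_batch)
print(result)
```

An optimized library would replace the outer list comprehension with a single kernel launch or a vectorized loop over the batch, which is where the per-call overhead for very small matrices is saved.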