MPI Over Infiniband

Recommended publications

Designing an Ultra Low-Overhead Multithreading Runtime for Nim

Designing an ultra low-overhead multithreading runtime for Nim
Mamy Ratsimbazafy
Weave: https://github.com/mratsim/weave, [email protected]

Hello! I am Mamy Ratsimbazafy. During the day, blockchain/Ethereum 2 developer (in Nim). During the night, deep learning and numerical computing developer (in Nim) and data scientist (in Python). You can contact me at [email protected]. GitHub: mratsim. Twitter: m_ratsim.

Where did this talk come from?
◇ 3 years ago: started writing a tensor library in Nim.
◇ 2 threading APIs at the time: OpenMP and simple threadpool
◇ 1 year ago: complete refactoring of the internals

Agenda
◇ Understanding the design space
◇ Hardware and software multithreading: definitions and use-cases
◇ Parallel APIs
◇ Sources of overhead and runtime design
◇ Minimum viable runtime plan in a weekend

1. Understanding the design space
Concurrency vs parallelism, latency vs throughput, cooperative vs preemptive, IO vs CPU

Parallelism is not concurrency

Kernel threading models
1:1 Threading: 1 application thread -> 1 hardware thread
N:1 Threading: N application threads -> 1 hardware thread
M:N Threading: M application threads -> N hardware threads
The same distinctions can be made at the level of a multithreaded language or a multithreading runtime.

The problem
How to schedule M tasks on N hardware threads?

Latency vs Throughput
- Do we want to do all the work in a minimal amount of time? (Numerical computing, machine learning, ...)
- Do we want to be fair? (Client-server, video decoding, ...)

Cooperative vs Preemptive
Cooperative multithreading: -
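The question raised in the slides, how to schedule M tasks on N hardware threads, can be illustrated with a small sketch. It is not taken from the talk and does not use Weave or Nim: it assumes Python's standard `concurrent.futures.ThreadPoolExecutor` as a stand-in runtime, and the names `run_tasks`, `num_tasks`, and `num_workers` are hypothetical. Note that CPython's global interpreter lock prevents CPU-bound tasks from running in parallel, so the sketch only shows the M-to-N mapping, not a speedup.

```python
from concurrent.futures import ThreadPoolExecutor
import threading


def run_tasks(num_tasks: int, num_workers: int):
    """Multiplex num_tasks small tasks over a pool of num_workers threads."""

    def task(i: int):
        # Return the task's result together with the id of the worker
        # thread that happened to execute it.
        return i * i, threading.get_ident()

    # The pool owns at most num_workers threads; tasks are queued and
    # picked up by whichever worker becomes free.
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        outcomes = list(pool.map(task, range(num_tasks)))

    results = [value for value, _ in outcomes]
    workers_used = {ident for _, ident in outcomes}
    return results, workers_used


results, workers_used = run_tasks(num_tasks=100, num_workers=4)
print(len(results), "tasks ran on", len(workers_used), "worker thread(s)")
```

The pool here uses a single shared queue, which favors simplicity; the design space the slides describe (latency vs throughput, cooperative vs preemptive) is about what to replace that queue with.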

Fiber Context As a first-Class Object

Document number: P0876R6
Date: 2019-06-17
Author: Oliver Kowalke ([email protected]), Nat Goodspeed ([email protected])
Audience: SG1

fiber_context - fibers without scheduler

Contents:
- Revision History
- abstract
- control transfer mechanism
- std::fiber_context as a first-class object
- encapsulating the stack
- invalidation at resumption
- problem: avoiding non-const global variables and undefined behaviour
- solution: avoiding non-const global variables and undefined behaviour
- inject function into suspended fiber
- passing data between fibers
- termination
- exceptions
- std::fiber_context as building block for higher-level frameworks
- interaction with STL algorithms
- possible implementation strategies
- fiber switch on architectures with register window
- how fast is a fiber switch
- interaction with accelerators
- multi-threading environment
- acknowledgments
- API
- 33.7 Cooperative User-Mode Threads
- 33.7.1 General
- 33.7.2 Empty vs. Non-Empty
- 33.7.3 Explicit Fiber vs. Implicit Fiber
- 33.7.4 Implicit Top-Level Function
- 33.7.5 Header <experimental/fiber_context> synopsis
- 33.7.6 Class fiber_context
- 33.7.7 Function unwind_fiber()
- references

Revision History
This document supersedes P0876R5. Changes since P0876R5:
• std::unwind_exception removed: stack unwinding must be performed by platform facilities.
• fiber_context::can_resume_from_any_thread() renamed to can_resume_from_this_thread().
• fiber_context::valid() renamed to empty() with inverted sense.
• Material has been added concerning the top-level wrapper logic governing each fiber.

The change to unwinding fiber stacks using an anonymous foreign exception not catchable by C++ try / catch blocks is in response to deep discussions in Kona 2019 of the surprisingly numerous problems surfaced by using an ordinary C++ exception for that purpose. -

Fiber-Based Architecture for NFV Cloud Databases

Fiber-based Architecture for NFV Cloud Databases
Vaidas Gasiunas, David Dominguez-Sal, Ralph Acker, Aharon Avitzur, Ilan Bronshtein, Rushan Chen, Eli Ginot, Norbert Martinez-Bazan, Michael Müller, Alexander Nozdrin, Weijie Ou, Nir Pachter, Dima Sivov, Eliezer Levy
Huawei Technologies, Gauss Database Labs, European Research Institute
fvaidas.gasiunas,david.dominguez1,fi[email protected]

ABSTRACT
The telco industry is gradually shifting from using monolithic software packages deployed on custom hardware to using modular virtualized software functions deployed on cloudified data centers using commodity hardware. This transformation is referred to as Network Function Virtualization (NFV). The scalability of the databases (DBs) underlying the virtual network functions is the cornerstone for reaping the benefits from the NFV transformation. This paper presents an industrial experience of applying shared-nothing techniques in order to achieve the scalability of a DB in an NFV setup. The special combination of requirements in NFV DBs are not easily met with conventional execution models. -

[Excerpt from the introduction:] to a specific workload, as well as to change this allocation on demand. Elasticity is especially attractive to operators who are very conscious about Total Cost of Ownership (TCO). NFV meets databases (DBs) in more than a single aspect [20, 30]. First, the NFV concepts of modular software functions accelerate the separation of function (business logic) and state (data) in the design and implementation of network functions (NFs). This in turn enables independent scalability of the DB component, and underlines its importance in providing elastic state repositories for various NFs. In principle, the NFV-ready DBs that are relevant to our discussion are sophisticated main-memory key-value (KV) stores with stringent high availability (HA)

A Thread Performance Comparison: Windows NT and Solaris on a Symmetric Multiprocessor

The following paper was originally published in the Proceedings of the 2nd USENIX Windows NT Symposium, Seattle, Washington, August 3–4, 1998.

A Thread Performance Comparison: Windows NT and Solaris on A Symmetric Multiprocessor
Fabian Zabatta and Kevin Ying
Logic Based Systems Lab, Brooklyn College and CUNY Graduate School, Computer Science Department, 2900 Bedford Avenue, Brooklyn, New York 11210
{fabian, kevin}@sci.brooklyn.cuny.edu

For more information about USENIX Association contact: 1. Phone: 510 528-8649 2. FAX: 510 548-5738 3. Email: [email protected] 4. WWW URL: http://www.usenix.org/

Abstract
Manufacturers now have the capability to build high performance multiprocessor machines with common PC components. This has created a new market of low cost multiprocessor machines. However, these machines are handicapped unless they have an operating system that can take advantage of their underlying architectures. Presented is a comparison of two such operating systems, Windows NT and Solaris. By focusing on their implementation of threads, we show each system's ability to exploit multiprocessors. We report our results and interpretations of several experiments that were used to compare the performance of each system. What emerges is a discussion on the performance impact of each implementation and its significance on various types of applications.

Figure 1: A basic symmetric multiprocessor architecture (four CPUs, each with its own cache, connected by a bus to shared memory and I/O).

1.1 Symmetric Multiprocessing
Symmetric multiprocessing (SMP) is the primary -

Concurrent Cilk: Lazy Promotion from Tasks to Threads in C/C++

Concurrent Cilk: Lazy Promotion from Tasks to Threads in C/C++
Christopher S. Zakian, Timothy A. K. Zakian, Abhishek Kulkarni, Buddhika Chamith, and Ryan R. Newton
Indiana University - Bloomington, fczakian, tzakian, adkulkar, budkahaw, [email protected]

Abstract. Library and language support for scheduling non-blocking tasks has greatly improved, as have lightweight (user) threading packages. However, there is a significant gap between the two developments. In previous work, and in today's software packages, lightweight thread creation incurs much larger overheads than tasking libraries, even on tasks that end up never blocking. This limitation can be removed. To that end, we describe an extension to the Intel Cilk Plus runtime system, Concurrent Cilk, where tasks are lazily promoted to threads. Concurrent Cilk removes the overhead of thread creation on threads which end up calling no blocking operations, and is the first system to do so for C/C++ with legacy support (standard calling conventions and stack representations). We demonstrate that Concurrent Cilk adds negligible overhead to existing Cilk programs, while its promoted threads remain more efficient than OS threads in terms of context-switch overhead and blocking communication. Further, it enables development of blocking data structures that create non-fork-join dependence graphs, which can expose more parallelism, and better supports data-driven computations waiting on results from remote devices.

1 Introduction
Both task-parallelism [1, 11, 13, 15] and lightweight threading [20] libraries have become popular for different kinds of applications. The key difference between a task and a thread is that threads may block (for example when performing IO) and then resume again. -

The Component Architecture of Open MPI: Enabling Third-Party Collective Algorithms∗

THE COMPONENT ARCHITECTURE OF OPEN MPI: ENABLING THIRD-PARTY COLLECTIVE ALGORITHMS∗
Jeffrey M. Squyres and Andrew Lumsdaine
Open Systems Laboratory, Indiana University, Bloomington, Indiana, USA
[email protected] [email protected]

Abstract
As large-scale clusters become more distributed and heterogeneous, significant research interest has emerged in optimizing MPI collective operations because of the performance gains that can be realized. However, researchers wishing to develop new algorithms for MPI collective operations are typically faced with significant design, implementation, and logistical challenges. To address a number of needs in the MPI research community, Open MPI has been developed, a new MPI-2 implementation centered around a lightweight component architecture that provides a set of component frameworks for realizing collective algorithms, point-to-point communication, and other aspects of MPI implementations. In this paper, we focus on the collective algorithm component framework. The “coll” framework provides tools for researchers to easily design, implement, and experiment with new collective algorithms in the context of a production-quality MPI. Performance results with basic collective operations demonstrate that the component architecture of Open MPI does not introduce any performance penalty.

Keywords: MPI implementation, Parallel computing, Component architecture, Collective algorithms, High performance

1. Introduction
Although the performance of the MPI collective operations [6, 17] can be a large factor in the overall run-time of a parallel application, their optimization has not necessarily been a focus in some MPI implementations until re-

∗This work was supported by a grant from the Lilly Endowment and by National Science Foundation grant 0116050. -

The Impact of Process Placement and Oversubscription on Application Performance: a Case Study for Exascale Computing

Konrad-Zuse-Zentrum für Informationstechnik Berlin, Takustraße 7, D-14195 Berlin-Dahlem, Germany

Florian Wende, Thomas Steinke, Alexander Reinefeld
The Impact of Process Placement and Oversubscription on Application Performance: A Case Study for Exascale Computing
ZIB-Report 15-05 (January 2015)

Published by Konrad-Zuse-Zentrum für Informationstechnik Berlin, Takustraße 7, D-14195 Berlin-Dahlem. Phone: 030-84185-0, Fax: 030-84185-125, e-mail: [email protected], URL: http://www.zib.de
ZIB-Report (Print) ISSN 1438-0064, ZIB-Report (Internet) ISSN 2192-7782

Abstract: With the growing number of hardware components and the increasing software complexity in the upcoming exascale computers, system failures will become the norm rather than an exception for long-running applications. Fault-tolerance can be achieved by the creation of checkpoints during the execution of a parallel program. Checkpoint/Restart (C/R) mechanisms allow for both task migration (even if there were no hardware faults) and restarting of tasks after the occurrence of hardware faults. Affected tasks are then migrated to other nodes which may result in unfortunate process placement and/or oversubscription of compute resources. In this paper we analyze the impact of unfortunate process placement and oversubscription of compute resources on the performance and scalability of two typical HPC application workloads, CP2K and MOM5. Results are given for a Cray XC30/40 with Aries dragonfly topology. Our results indicate that unfortunate process placement has only little negative impact while oversubscription substantially degrades the performance. -

Welcome to Computer Team! and Computer Systems I: Introduction

Welcome to Computer Team! and Computer Systems I: Introduction
Noah Singer
Montgomery Blair High School Computer Team
September 14, 2017

Overview
1 Introduction
2 Units
3 Computer Systems
4 Computer Parts

Section 1: Introduction

Computer Team
Computer Team is...
- A place to hang out and eat lunch every Thursday
- A cool discussion group for computer science
- An award-winning competition programming group
- A place for people to learn from each other
- Open to people of all experience levels
Computer Team isn't...
- A programming club
- A highly competitive environment
- A rigorous course that requires commitment

Structure
- Four units, each with five to ten lectures
- Each lecture comes with notes that you can take home!
- Guided activities to help you understand more difficult/technical concepts
- Special presentations and guest lectures on interesting topics

Conventions
Vocabulary terms are marked in bold.
Definition: Definitions are in boxes (along with theorems, etc.).
Important statements are highlighted in italics.
Formulas are written -

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result
Copyright 1999-2004, Standard Performance Evaluation Corporation

Sun Microsystems, Sun Java Workstation W1100z
SPECfp2000 = 1787
SPECfp_base2000 = 1637
SPEC license #: 6. Tested by: Sun Microsystems, Santa Clara. Test date: Jul-2004. Hardware Avail: Jul-2004. Software Avail: Jul-2004.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Runtime | Ratio |
|---|---|---|---|---|---|
| 168.wupwise | 1600 | 92.3 | 1733 | 71.8 | 2229 |
| 171.swim | 3100 | 134 | 2310 | 123 | 2519 |
| 172.mgrid | 1800 | 124 | 1447 | 103 | 1755 |
| 173.applu | 2100 | 150 | 1402 | 133 | 1579 |
| 177.mesa | 1400 | 76.1 | 1839 | 70.1 | 1998 |
| 178.galgel | 2900 | 104 | 2789 | 94.4 | 3073 |
| 179.art | 2600 | 146 | 1786 | 106 | 2464 |
| 183.equake | 1300 | 93.7 | 1387 | 89.1 | 1460 |
| 187.facerec | 1900 | 80.1 | 2373 | 80.1 | 2373 |
| 188.ammp | 2200 | 158 | 1397 | 154 | 1425 |
| 189.lucas | 2000 | 121 | 1649 | 122 | 1637 |
| 191.fma3d | 2100 | 135 | 1552 | 135 | 1552 |
| 200.sixtrack | 1100 | 148 | 742 | 148 | 742 |
| 301.apsi | 2600 | 170 | 1529 | 168 | 1549 |

Hardware
CPU: AMD Opteron (TM) 150
CPU MHz: 2400
FPU: Integrated
CPU(s) enabled: 1 core, 1 chip, 1 core/chip
CPU(s) orderable: 1
Parallel: No
Primary Cache: 64KBI + 64KBD on chip
Secondary Cache: 1024KB (I+D) on chip
L3 Cache: N/A
Other Cache: N/A
Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered
Disk Subsystem: IDE, 80GB, 7200RPM
Other Hardware: None

Software
Operating System: Red Hat Enterprise Linux WS 3 (AMD64)
Compiler: PathScale EKO Compiler Suite, Release 1.1; Red Hat gcc 3.5 ssa (from RHEL WS 3); PGI Fortran 5.2 (build 5.2-0E); AMD Core Math Library (Version 2.0) for AMD64
File System: Linux/ext3
System State: Multi-user, Run level 3

Notes/Tuning Information
A two-pass compilation method is -
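The figures in this result sheet can be cross-checked with a short calculation. The sketch below assumes the usual SPEC CPU2000 conventions, which the excerpt itself does not state: each ratio is 100 times the reference time divided by the measured runtime, and the overall metric is the geometric mean of the per-benchmark ratios. The function names `spec_ratio` and `geometric_mean` are hypothetical. Because the runtimes in the sheet are printed with limited precision, recomputed ratios match the listed ones only approximately.

```python
import math

# Base ratios copied from the result sheet above (14 benchmarks).
base_ratios = [1733, 2310, 1447, 1402, 1839, 2789, 1786,
               1387, 2373, 1397, 1649, 1552, 742, 1529]


def spec_ratio(reference_time: float, runtime: float) -> float:
    """Assumed SPEC CPU2000 convention: 100 * reference time / runtime."""
    return 100.0 * reference_time / runtime


def geometric_mean(values) -> float:
    """Geometric mean computed via the mean of logarithms."""
    return math.exp(sum(math.log(v) for v in values) / len(values))


# 168.wupwise: reference time 1600 s, base runtime 92.3 s.
wupwise_ratio = spec_ratio(1600, 92.3)
overall_base = geometric_mean(base_ratios)

print(f"168.wupwise base ratio: {wupwise_ratio:.1f} (listed: 1733)")
print(f"geometric mean of base ratios: {overall_base:.1f} (listed: 1637)")
```

The geometric mean is used rather than the arithmetic mean so that a single very high ratio, such as 178.galgel here, does not dominate the overall score.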

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview

What are libraries for?
● Building blocks for writing scientific applications
● Historically – allowed the first forms of code re-use
● Later – became ways of running optimized code
● Today the complexity of the hardware is very high
● The Cray PE insulates users from this complexity
  • Cray module environment
  • CCE
  • Performance tools
  • Tuned MPI libraries (+PGAS)
  • Optimized Scientific libraries
Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort.

Scientific libraries on XC – functional view
FFT: FFTW, CRAFFT
Dense: BLAS, LAPACK, ScaLAPACK, IRT
Sparse: Trilinos, PETSc, CASK

What makes Cray libraries special
1. Node performance
● Highly tuned routines at the low-level (ex. BLAS)
2. Network performance
● Optimized for network performance
● Overlap between communication and computation
● Use the best available low-level mechanism
● Use adaptive parallel algorithms
3. Highly adaptive software
● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime
4. Productivity features
● Simple interfaces into complex software

LibSci usage
● LibSci
  ● The drivers should do it all for you – no need to explicitly link
  ● For threads, set OMP_NUM_THREADS
    ● Threading is used within LibSci
    ● If you call within a parallel region, single thread used
● FFTW
  ● module load fftw (there are also wisdom files available)
● PETSc
  ● module load petsc (or module load petsc-complex)
  ● Use as you would your normal PETSc build
● Trilinos
  ● -

Introduction to Arm for Network Stack Developers

Introduction to Arm for network stack developers
Pavel Shamis/Pasha, Principal Research Engineer
Mvapich User Group 2017, Columbus, OH
© 2017 Arm Limited

Outline
• Arm Overview
• HPC Software Stack
• Porting on Arm
• Evaluation

Arm Overview

An introduction to Arm
Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990.
Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instruction set architecture (ISA), such as “ARMv8-A”, or a specific implementation, such as “Cortex-A72”. Partners who license an ISA can create their own implementation, as long as it passes the compliance tests.
…and our IP extends beyond the CPU

A partnership business model
[Diagram: Business Development. Arm (IP Provider) licenses technology to Partner (SemiCo Partner) for a licence fee; partners develop chips and pay a royalty; OEM Customer; OEM sells]
A business model that shares success
• Everyone in the value chain benefits
• Long term sustainability
Design once and reuse is fundamental
• Spread the cost amongst many partners
• Technology reused across multiple applications
• Creates market for ecosystem to target
  – Re-use is also fundamental to the ecosystem
Upfront license fee
• Covers the development cost
Ongoing royalties
• Typically based on a percentage of chip price -

Sun Microsystems

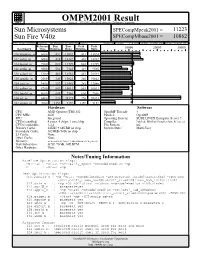

OMPM2001 Result
Copyright 1999-2002, Standard Performance Evaluation Corporation

Sun Microsystems, Sun Fire V40z
SPECompMpeak2001 = 11223
SPECompMbase2001 = 10862
SPEC license #: HPG0010. Tested by: Sun Microsystems, Santa Clara. Test site: Menlo Park. Test date: Feb-2005. Hardware Avail: Apr-2005. Software Avail: Apr-2005.

| Benchmark | Reference Time | Base Runtime | Base Ratio | Peak Runtime | Peak Ratio |
|---|---|---|---|---|---|
| 310.wupwise_m | 6000 | 432 | 13882 | 431 | 13924 |
| 312.swim_m | 6000 | 424 | 14142 | 401 | 14962 |
| 314.mgrid_m | 7300 | 622 | 11729 | 622 | 11729 |
| 316.applu_m | 4000 | 536 | 7463 | 419 | 9540 |
| 318.galgel_m | 5100 | 483 | 10561 | 481 | 10593 |
| 320.equake_m | 2600 | 240 | 10822 | 240 | 10822 |
| 324.apsi_m | 3400 | 297 | 11442 | 282 | 12046 |
| 326.gafort_m | 8700 | 869 | 10011 | 869 | 10011 |
| 328.fma3d_m | 4600 | 608 | 7566 | 608 | 7566 |
| 330.art_m | 6400 | 226 | 28323 | 226 | 28323 |
| 332.ammp_m | 7000 | 1359 | 5152 | 1359 | 5152 |

Hardware
CPU: AMD Opteron (TM) 852
CPU MHz: 2600
FPU: Integrated
CPU(s) enabled: 4 cores, 4 chips, 1 core/chip
CPU(s) orderable: 1,2,4
Primary Cache: 64KBI + 64KBD on chip
Secondary Cache: 1024KB (I+D) on chip
L3 Cache: None
Other Cache: None
Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered)
Disk Subsystem: SCSI, 73GB, 10K RPM
Other Hardware: None

Software
OpenMP Threads: 4
Parallel: OpenMP
Operating System: SUSE LINUX Enterprise Server 9
Compiler: PathScale EKOPath Compiler Suite, Release 2.1
File System: ufs
System State: Multi-User

Notes/Tuning Information
Baseline Optimization Flags:
Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp
C : -Ofast -mp
Peak Optimization Flags:
310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3