HPC Strategy and Direction for Meteorological Modelling

Recommended publications

-

Linpack Evaluation on a Supercomputer with Heterogeneous Accelerators

Linpack Evaluation on a Supercomputer with Heterogeneous Accelerators Toshio Endo Akira Nukada Graduate School of Information Science and Engineering Global Scientific Information and Computing Center Tokyo Institute of Technology Tokyo Institute of Technology Tokyo, Japan Tokyo, Japan [email protected] [email protected] Satoshi Matsuoka Naoya Maruyama Global Scientific Information and Computing Center Global Scientific Information and Computing Center Tokyo Institute of Technology/National Institute of Informatics Tokyo Institute of Technology Tokyo, Japan Tokyo, Japan [email protected] [email protected] Abstract—We report Linpack benchmark results on the Roadrunner or other systems described above, it includes TSUBAME supercomputer, a large scale heterogeneous system two types of accelerators. This is due to incremental upgrade equipped with NVIDIA Tesla GPUs and ClearSpeed SIMD of the system, which has been the case in commodity CPU accelerators. With all of 10,480 Opteron cores, 640 Xeon cores, 648 ClearSpeed accelerators and 624 NVIDIA Tesla GPUs, clusters; they may have processors with different speeds as we have achieved 87.01TFlops, which is the third record as a result of incremental upgrade. In this paper, we present a heterogeneous system in the world. This paper describes a Linpack implementation and evaluation results on TSUB- careful tuning and load balancing method required to achieve AME with 10,480 Opteron cores, 624 Tesla GPUs and 648 this performance. On the other hand, since the peak speed is ClearSpeed accelerators. In the evaluation, we also used a 163 TFlops, the efficiency is 53%, which is lower than other systems. -

Tsubame 2.5 Towards 3.0 and Beyond to Exascale

Being Very Green with Tsubame 2.5 towards 3.0 and beyond to Exascale Satoshi Matsuoka Professor Global Scientific Information and Computing (GSIC) Center Tokyo Institute of Technology ACM Fellow / SC13 Tech Program Chair NVIDIA Theater Presentation 2013/11/19 Denver, Colorado TSUBAME2.0 NEC Confidential TSUBAME2.0 Nov. 1, 2010 “The Greenest Production Supercomputer in the World” TSUBAME 2.0 New Development >600TB/s Mem BW 220Tbps NW >12TB/s Mem BW >400GB/s Mem BW >1.6TB/s Mem BW Bisecion BW 80Gbps NW BW 35KW Max 1.4MW Max 32nm 40nm ~1KW max 3 Performance Comparison of CPU vs. GPU 1750 GPU 200 GPU ] 1500 160 1250 GByte/s 1000 120 750 80 500 CPU CPU 250 40 Peak Performance [GFLOPS] Performance Peak 0 Memory Bandwidth [ Bandwidth Memory 0 x5-6 socket-to-socket advantage in both compute and memory bandwidth, Same power (200W GPU vs. 200W CPU+memory+NW+…) NEC Confidential TSUBAME2.0 Compute Node 1.6 Tflops Thin 400GB/s Productized Node Mem BW as HP 80GBps NW ProLiant Infiniband QDR x2 (80Gbps) ~1KW max SL390s HP SL390G7 (Developed for TSUBAME 2.0) GPU: NVIDIA Fermi M2050 x 3 515GFlops, 3GByte memory /GPU CPU: Intel Westmere-EP 2.93GHz x2 (12cores/node) Multi I/O chips, 72 PCI-e (16 x 4 + 4 x 2) lanes --- 3GPUs + 2 IB QDR Memory: 54, 96 GB DDR3-1333 SSD:60GBx2, 120GBx2 Total Perf 2.4PFlops Mem: ~100TB NEC Confidential SSD: ~200TB 4-1 2010: TSUBAME2.0 as No.1 in Japan > All Other Japanese Centers on the Top500 COMBINED 2.3 PetaFlops Total 2.4 Petaflops #4 Top500, Nov. -

The Component Architecture of Open Mpi: Enabling Third-Party Collective Algorithms∗

THE COMPONENT ARCHITECTURE OF OPEN MPI: ENABLING THIRD-PARTY COLLECTIVE ALGORITHMS∗ Jeffrey M. Squyres and Andrew Lumsdaine Open Systems Laboratory, Indiana University Bloomington, Indiana, USA [email protected] [email protected] Abstract As large-scale clusters become more distributed and heterogeneous, significant research interest has emerged in optimizing MPI collective operations because of the performance gains that can be realized. However, researchers wishing to develop new algorithms for MPI collective operations are typically faced with sig- nificant design, implementation, and logistical challenges. To address a number of needs in the MPI research community, Open MPI has been developed, a new MPI-2 implementation centered around a lightweight component architecture that provides a set of component frameworks for realizing collective algorithms, point-to-point communication, and other aspects of MPI implementations. In this paper, we focus on the collective algorithm component framework. The “coll” framework provides tools for researchers to easily design, implement, and exper- iment with new collective algorithms in the context of a production-quality MPI. Performance results with basic collective operations demonstrate that the com- ponent architecture of Open MPI does not introduce any performance penalty. Keywords: MPI implementation, Parallel computing, Component architecture, Collective algorithms, High performance 1. Introduction Although the performance of the MPI collective operations [6, 17] can be a large factor in the overall run-time of a parallel application, their optimiza- tion has not necessarily been a focus in some MPI implementations until re- ∗This work was supported by a grant from the Lilly Endowment and by National Science Foundation grant 0116050. -

(Intel® OPA) for Tsubame 3

CASE STUDY High Performance Computing (HPC) with Intel® Omni-Path Architecture Tokyo Institute of Technology Chooses Intel® Omni-Path Architecture for Tsubame 3 Price/performance, thermal stability, and adaptive routing are key features for enabling #1 on Green 500 list Challenge How do you make a good thing better? Professor Satoshi Matsuoka of the Tokyo Institute of Technology (Tokyo Tech) has been designing and building high- performance computing (HPC) clusters for 20 years. Among the systems he and his team at Tokyo Tech have architected, Tsubame 1 (2006) and Tsubame 2 (2010) have shown him the importance of heterogeneous HPC systems for scientific research, analytics, and artificial intelligence (AI). Tsubame 2, built on Intel® Xeon® Tsubame at a glance processors and Nvidia* GPUs with InfiniBand* QDR, was Japan’s first peta-scale • Tsubame 3, the second- HPC production system that achieved #4 on the Top500, was the #1 Green 500 generation large, production production supercomputer, and was the fastest supercomputer in Japan at the time. cluster based on heterogeneous computing at Tokyo Institute of Technology (Tokyo Tech); #61 on June 2017 Top 500 list and #1 on June 2017 Green 500 list For Matsuoka, the next-generation machine needed to take all the goodness of Tsubame 2, enhance it with new technologies to not only advance all the current • The system based upon HPE and latest generations of simulation codes, but also drive the latest application Apollo* 8600 blades, which targets—which included deep learning/machine learning, AI, and very big data are smaller than a 1U server, analytics—and make it more efficient that its predecessor. -

Sun SPARC Enterprise T5440 Servers

Sun SPARC Enterprise® T5440 Server Just the Facts SunWIN token 526118 December 16, 2009 Version 2.3 Distribution restricted to Sun Internal and Authorized Partners Only. Not for distribution otherwise, in whole or in part T5440 Server Just the Facts Dec. 16, 2009 Sun Internal and Authorized Partner Use Only Page 1 of 133 Copyrights ©2008, 2009 Sun Microsystems, Inc. All Rights Reserved. Sun, Sun Microsystems, the Sun logo, Sun Fire, Sun SPARC Enterprise, Solaris, Java, J2EE, Sun Java, SunSpectrum, iForce, VIS, SunVTS, Sun N1, CoolThreads, Sun StorEdge, Sun Enterprise, Netra, SunSpectrum Platinum, SunSpectrum Gold, SunSpectrum Silver, and SunSpectrum Bronze are trademarks or registered trademarks of Sun Microsystems, Inc. in the United States and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the United States and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. UNIX is a registered trademark in the United States and other countries, exclusively licensed through X/Open Company, Ltd. T5440 Server Just the Facts Dec. 16, 2009 Sun Internal and Authorized Partner Use Only Page 2 of 133 Revision History Version Date Comments 1.0 Oct. 13, 2008 - Initial version 1.1 Oct. 16, 2008 - Enhanced I/O Expansion Module section - Notes on release tabs of XSR-1242/XSR-1242E rack - Updated IBM 560 and HP DL580 G5 competitive information - Updates to external storage products 1.2 Nov. 18, 2008 - Number -

Sun Microsystems: Sun Java Workstation W1100z

CFP2000 Result spec Copyright 1999-2004, Standard Performance Evaluation Corporation Sun Microsystems SPECfp2000 = 1787 Sun Java Workstation W1100z SPECfp_base2000 = 1637 SPEC license #: 6 Tested by: Sun Microsystems, Santa Clara Test date: Jul-2004 Hardware Avail: Jul-2004 Software Avail: Jul-2004 Reference Base Base Benchmark Time Runtime Ratio Runtime Ratio 1000 2000 3000 4000 168.wupwise 1600 92.3 1733 71.8 2229 171.swim 3100 134 2310 123 2519 172.mgrid 1800 124 1447 103 1755 173.applu 2100 150 1402 133 1579 177.mesa 1400 76.1 1839 70.1 1998 178.galgel 2900 104 2789 94.4 3073 179.art 2600 146 1786 106 2464 183.equake 1300 93.7 1387 89.1 1460 187.facerec 1900 80.1 2373 80.1 2373 188.ammp 2200 158 1397 154 1425 189.lucas 2000 121 1649 122 1637 191.fma3d 2100 135 1552 135 1552 200.sixtrack 1100 148 742 148 742 301.apsi 2600 170 1529 168 1549 Hardware Software CPU: AMD Opteron (TM) 150 Operating System: Red Hat Enterprise Linux WS 3 (AMD64) CPU MHz: 2400 Compiler: PathScale EKO Compiler Suite, Release 1.1 FPU: Integrated Red Hat gcc 3.5 ssa (from RHEL WS 3) PGI Fortran 5.2 (build 5.2-0E) CPU(s) enabled: 1 core, 1 chip, 1 core/chip AMD Core Math Library (Version 2.0) for AMD64 CPU(s) orderable: 1 File System: Linux/ext3 Parallel: No System State: Multi-user, Run level 3 Primary Cache: 64KBI + 64KBD on chip Secondary Cache: 1024KB (I+D) on chip L3 Cache: N/A Other Cache: N/A Memory: 4x1GB, PC3200 CL3 DDR SDRAM ECC Registered Disk Subsystem: IDE, 80GB, 7200RPM Other Hardware: None Notes/Tuning Information A two-pass compilation method is -

Cray Scientific Libraries Overview

Cray Scientific Libraries Overview What are libraries for? ● Building blocks for writing scientific applications ● Historically – allowed the first forms of code re-use ● Later – became ways of running optimized code ● Today the complexity of the hardware is very high ● The Cray PE insulates users from this complexity • Cray module environment • CCE • Performance tools • Tuned MPI libraries (+PGAS) • Optimized Scientific libraries Cray Scientific Libraries are designed to provide the maximum possible performance from Cray systems with minimum effort. Scientific libraries on XC – functional view FFT Sparse Dense Trilinos BLAS FFTW LAPACK PETSc ScaLAPACK CRAFFT CASK IRT What makes Cray libraries special 1. Node performance ● Highly tuned routines at the low-level (ex. BLAS) 2. Network performance ● Optimized for network performance ● Overlap between communication and computation ● Use the best available low-level mechanism ● Use adaptive parallel algorithms 3. Highly adaptive software ● Use auto-tuning and adaptation to give the user the known best (or very good) codes at runtime 4. Productivity features ● Simple interfaces into complex software LibSci usage ● LibSci ● The drivers should do it all for you – no need to explicitly link ● For threads, set OMP_NUM_THREADS ● Threading is used within LibSci ● If you call within a parallel region, single thread used ● FFTW ● module load fftw (there are also wisdom files available) ● PETSc ● module load petsc (or module load petsc-complex) ● Use as you would your normal PETSc build ● Trilinos ● -

Introduchon to Arm for Network Stack Developers

Introducon to Arm for network stack developers Pavel Shamis/Pasha Principal Research Engineer Mvapich User Group 2017 © 2017 Arm Limited Columbus, OH Outline • Arm Overview • HPC SoLware Stack • Porng on Arm • Evaluaon 2 © 2017 Arm Limited Arm Overview © 2017 Arm Limited An introduc1on to Arm Arm is the world's leading semiconductor intellectual property supplier. We license to over 350 partners, are present in 95% of smart phones, 80% of digital cameras, 35% of all electronic devices, and a total of 60 billion Arm cores have been shipped since 1990. Our CPU business model: License technology to partners, who use it to create their own system-on-chip (SoC) products. We may license an instrucBon set architecture (ISA) such as “ARMv8-A”) or a specific implementaon, such as “Cortex-A72”. …and our IP extends beyond the CPU Partners who license an ISA can create their own implementaon, as long as it passes the compliance tests. 4 © 2017 Arm Limited A partnership business model A business model that shares success Business Development • Everyone in the value chain benefits Arm Licenses technology to Partner • Long term sustainability SemiCo Design once and reuse is fundamental IP Partner Licence fee • Spread the cost amongst many partners Provider • Technology reused across mulBple applicaons Partners develop • Creates market for ecosystem to target chips – Re-use is also fundamental to the ecosystem Royalty Upfront license fee OEM • Covers the development cost Customer Ongoing royalBes OEM sells • Typically based on a percentage of chip price -

Sun Fire X4500 Server Linux and Solaris OS Installation Guide

Sun Fire X4500 Server Linux and Solaris OS Installation Guide Sun Microsystems, Inc. www.sun.com Part No. 819-4362-17 March 2009, Revision A Submit comments about this document at: http://www.sun.com/hwdocs/feedback Copyright © 2009 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, U.S.A. All rights reserved. This distribution may include materials developed by third parties. Sun, Sun Microsystems, the Sun logo, Java, Netra, Solaris, Sun Ray and Sun Fire X4500 Backup Server are trademarks or registered trademarks of Sun Microsystems, Inc., and its subsidiaries, in the U.S. and other countries. This product is covered and controlled by U.S. Export Control laws and may be subject to the export or import laws in other countries. Nuclear, missile, chemical biological weapons or nuclear maritime end uses or end users, whether direct or indirect, are strictly prohibited. Export or reexport to countries subject to U.S. embargo or to entities identified on U.S. export exclusion lists, including, but not limited to, the denied persons and specially designated nationals lists is strictly prohibited. Use of any spare or replacement CPUs is limited to repair or one-for-one replacement of CPUs in products exported in compliance with U.S. export laws. Use of CPUs as product upgrades unless authorized by the U.S. Government is strictly prohibited. Copyright © 2009 Sun Microsystems, Inc., 4150 Network Circle, Santa Clara, California 95054, Etats-Unis. Tous droits réservés. Cette distribution peut incluire des élements développés par des tiers. Sun, Sun Microsystems, le logo Sun, Java, Netra, Solaris, Sun Ray et Sun Fire X4500 Backup Server sont des marques de fabrique ou des marques déposées de Sun Microsystems, Inc., et ses filiales, aux Etats-Unis et dans d'autres pays. -

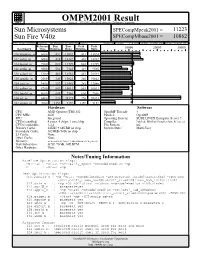

Sun Microsystems

OMPM2001 Result spec Copyright 1999-2002, Standard Performance Evaluation Corporation Sun Microsystems SPECompMpeak2001 = 11223 Sun Fire V40z SPECompMbase2001 = 10862 SPEC license #:HPG0010 Tested by: Sun Microsystems, Santa Clara Test site: Menlo Park Test date: Feb-2005 Hardware Avail:Apr-2005 Software Avail:Apr-2005 Reference Base Base Peak Peak Benchmark Time Runtime Ratio Runtime Ratio 10000 20000 30000 310.wupwise_m 6000 432 13882 431 13924 312.swim_m 6000 424 14142 401 14962 314.mgrid_m 7300 622 11729 622 11729 316.applu_m 4000 536 7463 419 9540 318.galgel_m 5100 483 10561 481 10593 320.equake_m 2600 240 10822 240 10822 324.apsi_m 3400 297 11442 282 12046 326.gafort_m 8700 869 10011 869 10011 328.fma3d_m 4600 608 7566 608 7566 330.art_m 6400 226 28323 226 28323 332.ammp_m 7000 1359 5152 1359 5152 Hardware Software CPU: AMD Opteron (TM) 852 OpenMP Threads: 4 CPU MHz: 2600 Parallel: OpenMP FPU: Integrated Operating System: SUSE LINUX Enterprise Server 9 CPU(s) enabled: 4 cores, 4 chips, 1 core/chip Compiler: PathScale EKOPath Compiler Suite, Release 2.1 CPU(s) orderable: 1,2,4 File System: ufs Primary Cache: 64KBI + 64KBD on chip System State: Multi-User Secondary Cache: 1024KB (I+D) on chip L3 Cache: None Other Cache: None Memory: 16GB (8x2GB, PC3200 CL3 DDR SDRAM ECC Registered) Disk Subsystem: SCSI, 73GB, 10K RPM Other Hardware: None Notes/Tuning Information Baseline Optimization Flags: Fortran : -Ofast -OPT:early_mp=on -mcmodel=medium -mp C : -Ofast -mp Peak Optimization Flags: 310.wupwise_m : -mp -Ofast -mcmodel=medium -LNO:prefetch_ahead=5:prefetch=3 -

Repository Design Report with Attached Metadata Plan

National Digital Information Infrastructure and Preservation Program Preserving Digital Public Television Project REPOSITORY DESIGN REPORT WITH ATTACHED METADATA PLAN Prepared by Joseph Pawletko, Software Systems Architect, New York University With contributions by Nan Rubin, Project Director, Thirteen/WNET-TV Kara van Malssen, Senior Research Scholar, New York University 2010-03-19 Table of Contents Executive Summary ………………………………………………………………………………………… 2 1. Background ……………………………………………………………………………………………..... 3 2. OAIS Reference Model and Terms …………………………………………………………………… 3 3. Preservation Repository Design ……………………………………………………………………….. 3 4. Core Archival Information Package Structure …………………………………………………….. 11 5. PDPTV Archival Information Package Structure …………………………………………………... 14 6. PDPTV Submission Information Packages …………………………………………………………... 17 7. PDPTV Archival Information Package Generation and Metadata Plan ……………………... 18 8. Content Transfer to the Library of Congress .……………………………………………………….. 22 9. Summary …………………………………………………………………………………………………… 23 10. Remaining Work …………………………………………………………………………………………. 30 11. Acknowledgements ……………………………………………………………………………………. 31 Appendix 1 Glossary and OAIS Operation ……………………………………………...…………… 32 Appendix 2 WNET NAAT 002405 METS File ……………………………………………………………. 37 Appendix 3 WNET NAAT 002405 PBCore File …………………………………………………………. 41 Appendix 4 WNET NAAT 002405 SD Broadcast Master PREMIS File ...……………………………. 46 Appendix 5 WNET NAAT 002405 SD Broadcast Master METSRights File …………………………. 48 Appendix 6 WGBH -

Best Practice Guide - Generic X86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013

Best Practice Guide - Generic x86 Vegard Eide, NTNU Nikos Anastopoulos, GRNET Henrik Nagel, NTNU 02-05-2013 1 Best Practice Guide - Generic x86 Table of Contents 1. Introduction .............................................................................................................................. 3 2. x86 - Basic Properties ................................................................................................................ 3 2.1. Basic Properties .............................................................................................................. 3 2.2. Simultaneous Multithreading ............................................................................................. 4 3. Programming Environment ......................................................................................................... 5 3.1. Modules ........................................................................................................................ 5 3.2. Compiling ..................................................................................................................... 6 3.2.1. Compilers ........................................................................................................... 6 3.2.2. General Compiler Flags ......................................................................................... 6 3.2.2.1. GCC ........................................................................................................ 6 3.2.2.2. Intel ........................................................................................................