Optimizing CPU Simulator Parameters with Learned Differentiable

Recommended publications

-

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference 319433-037 MAY 2019 Intel technologies features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifica- tions. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1- 800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S. -

Horizontal PDF Slides

1 2 Speed, speed, speed $1000 TCR hashing competition D. J. Bernstein Crowley: “I have a problem where I need to make some University of Illinois at Chicago; cryptography faster, and I’m Ruhr University Bochum setting up a $1000 competition funded from my own pocket for Reporting some recent work towards the solution.” symmetric-speed discussions, Not fast enough: Signing H(M), especially from RWC 2020. where M is a long message. Not included in this talk: “[On a] 900MHz Cortex-A7 NISTLWC. • [SHA-256] takes 28.86 cpb ::: Short inputs. • BLAKE2b is nearly twice as FHE/MPC ciphers. • fast ::: However, this is still a lot slower than I’m happy with.” 1 2 3 Speed, speed, speed $1000 TCR hashing competition Instead choose random R and sign (R; H(R; M)). D. J. Bernstein Crowley: “I have a problem where I need to make some Note that H needs only “TCR”, University of Illinois at Chicago; cryptography faster, and I’m not full collision resistance. Ruhr University Bochum setting up a $1000 competition Does this allow faster H design? funded from my own pocket for TCR breaks how many rounds? Reporting some recent work towards the solution.” symmetric-speed discussions, Not fast enough: Signing H(M), especially from RWC 2020. where M is a long message. Not included in this talk: “[On a] 900MHz Cortex-A7 NISTLWC. • [SHA-256] takes 28.86 cpb ::: Short inputs. • BLAKE2b is nearly twice as FHE/MPC ciphers. • fast ::: However, this is still a lot slower than I’m happy with.” 1 2 3 Speed, speed, speed $1000 TCR hashing competition Instead choose random R and sign (R; H(R; M)). -

Broadwell Skylake Next Gen* NEW Intel NEW Intel NEW Intel Microarchitecture Microarchitecture Microarchitecture

15 лет доступности IOTG is extending the product availability for IOTG roadmap products from a minimum of 7 years to a minimum of 15 years when both processor and chipset are on 22nm and newer process technologies. - Xeon Scalable (w/ chipsets) - E3-12xx/15xx v5 and later (w/ chipsets) - 6th gen Core and later (w/ chipsets) - Bay Trail (E3800) and later products (Braswell, N3xxx) - Atom C2xxx (Rangeley) and later - Не включает в себя Xeon-D (7 лет) и E5-26xx v4 (7 лет) 2 IOTG Product Availability Life-Cycle 15 year product availability will start with the following products: Product Discontinuance • Intel® Xeon® Processor Scalable Family codenamed Skylake-SP and later with associated chipsets Notification (PDN)† • Intel® Xeon® E3-12xx/15xx v5 series (Skylake) and later with associated chipsets • 6th Gen Intel® Core™ processor family (Skylake) and later (includes Intel® Pentium® and Celeron® processors) with PDNs will typically be issued no later associated chipsets than 13.5 years after component • Intel Pentium processor N3700 (Braswell) and later and Intel Celeron processors N3xxx (Braswell) and J1900/N2xxx family introduction date. PDNs are (Bay Trail) and later published at https://qdms.intel.com/ • Intel® Atom® processor C2xxx (Rangeley) and E3800 family (Bay Trail) and late Last 7 year product availability Time Last Last Order Ship Last 15 year product availability Time Last Last Order Ship L-1 L L+1 L+2 L+3 L+4 L+5 L+6 L+7 L+8 L+9 L+10 L+11 L+12 L+13 L+14 L+15 Years Introduction of component family † Intel may support this extended manufacturing using reasonably Last Time Order/Ship Periods Component family introduction dates are feasible means deemed by Intel to be appropriate. -

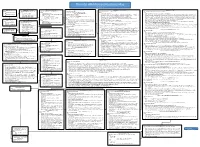

The Intel X86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

Validation Report

National Information Assurance Partnership Common Criteria Evaluation and Validation Scheme Validation Report Cisco Network Convergence System 1000 Series Report Number: CCEVS-VR--11093 Dated: 07/07/2020 Version: 1.0 National Institute of Standards and Technology National Security Agency Information Technology Laboratory Information Assurance Directorate 100 Bureau Drive 9800 Savage Road STE 6940 Gaithersburg, MD 20899 Fort George G. Meade, MD 20755-6940 Cisco Network Convergence System 1000 SeriesValidation Report Version 1.0, 07/06/2020 ACKNOWLEDGEMENTS Validation Team Paul Bicknell: Senior Validator Randy Heimann Linda Morrison: Lead Validator Clare Olin Common Criteria Testing Laboratory Chris Keenan Katie Sykes Gossamer Security Solutions, Inc. Catonsville, MD ii Cisco Network Convergence System 1000 SeriesValidation Report Version 1.0, 07/06/2020 Table of Contents Contents 1 Executive Summary .................................................................................................... 1 2 Identification ............................................................................................................... 2 3 Architectural Information ........................................................................................... 3 3.1 TOE Evaluated Configuration ............................................................................ 3 3.2 TOE Architecture ................................................................................................ 3 3.3 Physical Boundaries ........................................................................................... -

Sales Opportunity Guide

Intel® Pentium® Silver and Celeron® Processors Sales Opportunity Better Performance Faster Security And battery life Enabled Browsing better web browsing 3 UP TO UP 79% experience better Windows* browsing and sharing application hours of Security- with password 1 2 ** APPROX. UP TO UP 58% performance 10 battery life enabled managers Flexible connectivity Enhanced media and choices graphics experience graphics Gigabit Fast networking performance performance with the first Gigabit Wi-Fi PC 4 UP TO UP 2.7X improvement Wi-fi* capability on entry level systems – faster than wired Dynamically boost your display visibility outdoors in sunlight with Gigabit Ethernet connection5,6 Local Adaptive Contrast Enhancement (LACE) technology 1. As projected by SYSmark* 2014 v1.5 on Intel® Pentium® Silver Processor N5000 PL1=6W TDP, 4C/4T, up to 2.7GHz, Memory: 2x2GB DDR4 2400, Storage: Intel SSD, OS: Windows* 10 RS2 vs. Intel® Pentium® Processor N3540, PL1=7.5W TDP, 4C/4T, up to 2.66GHz, Memory: 2x2GB DDR3L-1333, Storage: Intel SSD, OS: Windows* 10 RS2 > 2. As projected by 1080p Video Playback on Intel® Pentium® Silver Processor N5000 , PL1=6W TDP, 4C/4T, up to 2.7GHz, Memory: 2x2GB DDR4 2400, Storage: Intel SSD, OS: Windows* 10 RS2 Battery: 35WHr, 12.5", 1920x1080 > 3. As projected by WebXPRT* 2015 on Intel® Pentium® Silver Processor N5000 PL1=6W TDP, 4C/4T, up to 2.7GHz, Memory: 2x2GB DDR4 2400, Storage: Intel SSD, OS: Windows* 10 RS2 vs. Intel® Pentium® Processor N3540, PL1=7.5W TDP, 4C/4T, up to 2.66GHz, Memory: 2x2GB DDR3L-1333, Storage: Intel SSD, OS: Windows* 10 RS2 > 4. -

The Intel X86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • New instructions: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

Intel® Architecture Instruction Set Extensions and Future Features

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference May 2021 319433-044 Intel technologies may require enabled hardware, software or service activation. No product or component can be absolutely secure. Your costs and results may vary. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. All product plans and roadmaps are subject to change without notice. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel disclaims all express and implied warranties, including without limitation, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement, as well as any warranty arising from course of performance, course of dealing, or usage in trade. Code names are used by Intel to identify products, technologies, or services that are in development and not publicly available. These are not “commercial” names and not intended to function as trademarks. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be ob- tained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Copyright © 2021, Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. -

Undocumented X86 Instructions to Control the CPU at the Microarchitecture Level

UNDOCUMENTED X86 INSTRUCTIONS TO CONTROL THE CPU AT THE MICROARCHITECTURE LEVEL IN MODERN INTEL PROCESSORS Mark Ermolov Dmitry Sklyarov Positive Technologies Positive Technologies [email protected] [email protected] Maxim Goryachy independent researcher [email protected] July 7, 2021 ABSTRACT At the beginning of 2020, we discovered the Red Unlock technique that allows extracting microcode (ucode) and targeting Intel Atom CPUs. Using the technique we were able to research the internal structure of the microcode and then x86 instructions implementation. We found two undocumented x86 instructions which are intendent to control the microarhitecture for debug purposes. In this paper we are going to introduce these instructions and explain the conditions under which they can be used on public-available platforms. We believe, this is a unique opportunity for third-party researchers to better understand the x86 architecture. Disclamer. All information is provided for educational purposes only. Follow these instructions at your own risk. Neither the authors nor their employer are responsible for any direct or consequential damage or loss arising from any person or organization acting or failing to act on the basis of information contained in this paper. Keywords Intel · Microcode · Undocumented · x86 1 Introduction The existence of undocumented mechanisms in the internals of modern CPUs has always been a concern for information security researchers and ordinary users. Assuming that such mechanisms do exist, the main worry is that -

Intel's Core 2 Family

Intel’s Core 2 family - TOCK lines II Nehalem to Haswell Dezső Sima Vers. 3.11 August 2018 Contents • 1. Introduction • 2. The Core 2 line • 3. The Nehalem line • 4. The Sandy Bridge line • 5. The Haswell line • 6. The Skylake line • 7. The Kaby Lake line • 8. The Kaby Lake Refresh line • 9. The Coffee Lake line • 10. The Cannon Lake line 3. The Nehalem line 3.1 Introduction to the 1. generation Nehalem line • (Bloomfield) • 3.2 Major innovations of the 1. gen. Nehalem line 3.3 Major innovations of the 2. gen. Nehalem line • (Lynnfield) 3.1 Introduction to the 1. generation Nehalem line (Bloomfield) 3.1 Introduction to the 1. generation Nehalem line (Bloomfield) (1) 3.1 Introduction to the 1. generation Nehalem line (Bloomfield) Developed at Hillsboro, Oregon, at the site where the Pentium 4 was designed. Experiences with HT Nehalem became a multithreaded design. The design effort took about five years and required thousands of engineers (Ronak Singhal, lead architect of Nehalem) [37]. The 1. gen. Nehalem line targets DP servers, yet its first implementation appeared in the desktop segment (Core i7-9xx (Bloomfield)) 4C in 11/2008 1. gen. 2. gen. 3. gen. 4. gen. 5. gen. West- Core 2 Penryn Nehalem Sandy Ivy Haswell Broad- mere Bridge Bridge well New New New New New New New New Microarch. Process Microarchi. Microarch. Process Microarch. Process Process 45 nm 65 nm 45 nm 32 nm 32 nm 22 nm 22 nm 14 nm TOCK TICK TOCK TICK TOCK TICK TOCK TICK (2006) (2007) (2008) (2010) (2011) (2012) (2013) (2014) Figure : Intel’s Tick-Tock development model (Based on [1]) * 3.1 Introduction to the 1. -

Introducing Intel® Tremont Microarchitecture

Introducing Intel® Tremont Microarchitecture Stephen Robinson | Senior Principal Engineer | Intel® Linley Fall Processor Conference 2019 – October 24, 2019 Tremont: Top-level Design Targets Single thread performance Networking . Performance/mW . Performance/mm2 . New instructions Battery life . Performance/mW 2 Design Target: Single Thread Performance Intel® Core™ class branch prediction 6 wide out of order instruction decode 4 wide allocation 10 execution ports Dual load/store pipelines Quad-core module L2 cache up to 4.5MB . Size is product dependent 3 Front End 44 Front End: Fetch, Predict Core™ class branch prediction . Long history . 32 byte based . L1 predictor (no penalty) . Large L2 predictor Out of order fetch . 32KB instruction cache . 32 bytes / cycle . Up to 8 outstanding misses 5 Front End: Decode 6-wide x86 instruction decode . Dual 3-wide clusters . Out of order . Wide decode without the area of a uop cache . Optional single cluster mode based on product targets 6 Integer Execution Large entry out of order window (>200) Parallel reservation stations (6) Wide execution (7) . 3 ALU . 2 AGU . 1 jump . 1 store data 7 Vector Execution Crypto acceleration . Dual 128b AES units, 4 cycle . Single instruction SHA256, 4 cycle . Galois Field new instructions Parallel reservation stations (2) Execution ports (3) . SIMD/AES/FMUL . SIMD/AES/FADD . Store data 8 Memory Execution Dual load/store pipeline 32KB data cache . Three cycle load to use 1024 entry second level TLB . Shared between code and data 9 Memory Subsystem L2 shared among 1-4 cores . Configurable from 1.5MB to 4.5MB Last level cache support . Inclusive . Non-inclusive Intel® Resource Directory Technology . -

Code That Performs

PRODUCT BRIEF High-Performance Computing Intel® Parallel Studio XE 2020 Software Code that Performs Intel® Parallel Studio XE helps developers take their HPC, enterprise, AI, and cloud applications to the max—with fast, scalable, and portable parallel code Intel® Parallel Studio XE is a comprehensive suite of development tools that make it fast and easy to build modern code that gets every last ounce of performance out of the newest Intel® processors. This tool-packed suite simplifies creating code with the latest techniques in vectorization, multi-threading, multi-node, and memory optimization. Get powerful, consistent programming with Intel® Advanced Vector Extensions 512 (Intel® AVX-512) instructions for Intel® Xeon® Scalable processors, plus support for the latest standards and integrated development environments (IDEs). Who Needs It? • C, C++, Fortran, and Python* software developers and architects building HPC, enterprise, AI, and cloud solutions • Developers looking to maximize their software’s performance on current and future Intel® platforms What it Does • Creates faster code1. Boost application performance that scales on current and future Intel® platforms with industry-leading compilers, numerical libraries, performance profilers, and code analyzers. • Builds code faster. Simplify the process of creating fast, scalable, and reliable parallel code. • Delivers Priority Support. Connect directly to Intel’s engineers for confidential answers to technical questions, access older versions of the products, and receive free updates for a year. Paid license required. What’s New • Speed artificial intelligence inferencing. Intel® Compilers, Intel® Performance Libraries and analysis tools support Intel® Deep Learning Boost, which includes Vector Neural Network Instructions (VNNI) in 2nd generation Intel® Xeon® Scalable processors (codenamed Cascade Lake/AP platforms) • Develop for large memories of up to 512GB DIMMs with Persistence.