The Growing Inaccessibility of Science

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Relative Value of Alcohol May Be Encoded by Discrete Regions of the Brain, According to a Study of 24 Men Published This Week in Neuropsychopharmacology

PRESS RELEASE FROM NEUROPSYCHOPHARMACOLOGY (http://www.nature.com/npp)

This press release is copyrighted to the journal Neuropsychopharmacology. Its use is granted only for journalists and news media receiving it directly from the Nature Publishing Group.

*** PLEASE DO NOT REDISTRIBUTE THIS DOCUMENT ***

EMBARGO: 1500 London time (GMT) / 1000 US Eastern Time, Monday 03 March 2014; 0000 Japanese time / 0200 Australian Eastern Time, Tuesday 04 March 2014

Wire services’ stories must always carry the embargo time at the head of each item, and may not be sent out more than 24 hours before that time. Solely for the purpose of soliciting informed comment on this paper, you may show it to independent specialists, but you must ensure in advance that they understand and accept the embargo conditions.

This press release contains:

• Summaries of newsworthy papers: Brain changes in young smokers *PODCAST*; To drink or not to drink? *PODCAST*
• Geographical listing of authors

PDFs of the papers mentioned on this release can be found in the Academic Journals section of the press site: http://press.nature.com

Warning: This document, and the papers to which it refers, may contain sensitive or confidential information not yet disclosed to the public. Using, sharing or disclosing this information to others in connection with securities dealing or trading may be a violation of insider trading under the laws of several countries, including, but not limited to, the UK Financial Services and Markets Act 2000 and the US Securities Exchange Act of 1934, each of which may be subject to penalties and imprisonment.

PICTURES: While we are happy for images from Neuropsychopharmacology to be reproduced for the purposes of contemporaneous news reporting, you must also seek permission from the copyright holder (if named) or author of the research paper in question (if not).

Antidepressants During Pregnancy and Fetal Development

PRESS RELEASE FROM NEUROPSYCHOPHARMACOLOGY (http://www.nature.com/npp)

This press release is copyrighted to the journal Neuropsychopharmacology. Its use is granted only for journalists and news media receiving it directly from the Nature Publishing Group.

*** PLEASE DO NOT REDISTRIBUTE THIS DOCUMENT ***

EMBARGO: 1500 London time (BST) / 1000 US Eastern Time / 2300 Japanese time, Monday 19 May 2014; 0000 Australian Eastern Time, Tuesday 20 May 2014

Wire services’ stories must always carry the embargo time at the head of each item, and may not be sent out more than 24 hours before that time. Solely for the purpose of soliciting informed comment on this paper, you may show it to independent specialists, but you must ensure in advance that they understand and accept the embargo conditions.

A PDF of the paper mentioned on this release can be found in the Academic Journals section of the press site: http://press.nature.com

Warning: This document, and the papers to which it refers, may contain sensitive or confidential information not yet disclosed to the public. Using, sharing or disclosing this information to others in connection with securities dealing or trading may be a violation of insider trading under the laws of several countries, including, but not limited to, the UK Financial Services and Markets Act 2000 and the US Securities Exchange Act of 1934, each of which may be subject to penalties and imprisonment.

PICTURES: While we are happy for images from Neuropsychopharmacology to be reproduced for the purposes of contemporaneous news reporting, you must also seek permission from the copyright holder (if named) or author of the research paper in question (if not).

Advertising (PDF)

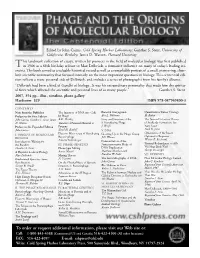

Edited by John Cairns, Cold Spring Harbor Laboratory; Gunther S. Stent, University of California, Berkeley; James D. Watson, Harvard University

This landmark collection of essays, written by pioneers in the field of molecular biology, was first published in 1966 as a 60th birthday tribute to Max Delbrück, a formative influence on many of today’s leading scientists. The book served as a valuable historical record as well as a remarkable portrait of a small, pioneering, close-knit scientific community that focused intensely on the most important questions in biology. This centennial edition reflects a more personal side of Delbrück, and includes a series of photographs from his family’s albums.

“Delbrück had been a kind of Gandhi of biology... It was his extraordinary personality that made him the spiritual force which affected the scientific and personal lives of so many people.” (Gunther S. Stent)

2007, 394 pp., illus., timeline, photo gallery. Hardcover $29. ISBN 978-087969800-3

CONTENTS: Note from the Publisher; Preface to the First Edition (John Cairns, Gunther S. Stent, James D. Watson); Preface to the Expanded Edition (John Cairns); I. ORIGINS OF MOLECULAR BIOLOGY; The Injection of DNA into Cells by Phage (A.D. Hershey); Transfer of Parental Material to Progeny (Lloyd M. Kozloff); Electron Microscopy of Developing Bacteriophage; Bacterial Conjugation (Elie L. Wollman); Story and Structure of the λ Transducing Phage (J. Weigle); V. DNA; Growing Up in the Phage Group (J.D.; Quantitative Tumor Virology (H. Rubin); The Natural Selection Theory of Antibody Formation; Ten Years Later (Niels K. Jerne); Cybernetics of the Insect Optomotor Response

Journal Holdings 2019-2020

PONCE HEALTH SCIENCES UNIVERSITY LIBRARY

Databases with Full Text Online: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

JOURNAL HOLDINGS 2019-2020

| TITLE | VOLUME | YEAR | ONLINE ACCESS |
| --- | --- | --- | --- |
| AADE in Practice | V.1- | 2013- | Sage |
| AAP News | | 1985- | |
| ACP Journal Club | V.136- | 2002- | Medline complete |
| AJIC American Journal of Infection Control | V.23- | 1995- | OVID |
| AJN American Journal of Nursing | V. 23- | 1996- | OVID |
| AJR: American Journal of Roentgenology | V. 95- | 1965- | Free Access (12 mo. delay) |
| ACIMED | V.1- | 1993- | SciELO |
| Academia de Médicos de Familia | V.1-21. | 1988-2009. | |
| Academic Emergency Medicine | V.1- | 1994- | Ebsco |
| Academic Medicine | V.36- | 1961- | OVID |
| Academic Pediatrics | V. 9- | 2009- | ClinicalKey |
| Academic Psychiatry | V.21- | 1997- | Ebsco |
| Acta Bioethica | V.6- | 2000- | SciELO |
| Acta Medica Colombiana | V.30- | 2005- | SciELO |
| Acta Medica Costarricense | V.37- | 1995- | Free access |
| Acta Medica Peruana | V.23- | 2006- | Free access |
| Acta Pharmacologica Sinica | V. 26- | 2005- | Nature.com |
| Actas Españolas de Psiquiatría | V. 29- | 2001- | Medline complete |
| Action Research | V. 1- | 2003- | Sage |
| Adicciones | V.11- | 1999- | Free access |
| Addiction | V.88- | 1993- | Medline complete (12 mo. delay) |
| Addictive Behaviors | V. 32- | 2007- | ClinicalKey |
| Advances in Anatomic Pathology | V.8- | 2001- | Ovid |
| Advances in Immunology | V.72-128. | 1999- | Science Direct |
| Advances in Nutrition | V.1- | 2010-2015. | Free access (12 mo. delay) |
| Advances in Physiology Education | V.256#6- | 1989- | |
| Advances in Surgery | V.41- | 2007- | Clinical Key |
| The Aesculapian | V.1. | | |

Springer Nature: Products, Services & Solutions

SPRINGER NATURE Products, Services & Solutions (springernature.com). Illustration inspired by the work of Marie Curie.

About Springer Nature: Springer Nature advances discovery by publishing robust and insightful research, supporting the development of new areas of knowledge, making ideas and information accessible around the world, and leading the way on open access. Our journals, eBooks, databases and solutions make sure that researchers, students, teachers and professionals have access to important research.

Springer: Established in 1842, Springer is a leading global scientific, technical, medical, humanities and social sciences publisher. Providing researchers with quality content via innovative products and services, Springer has one of the most significant science eBooks and archives collections, as well as a comprehensive range of hybrid and open access journals.

Nature Research: Publishing some of the most significant discoveries since 1869. Nature Research publishes the world’s leading weekly science journal, Nature, in addition to Nature-branded research and review subscription journals. The portfolio also includes Nature Communications, the leading open access journal across all sciences, plus a variety of Nature Partner Journals, developed with institutions and societies.

Academic journals on nature.com: Prestigious titles in the clinical, life and physical sciences for communities and established medical and scientific societies, many of which are published in partnership with a society.

Adis: A leading international publisher of drug-focused content and solutions. Adis supports work in the pharmaceutical and biotech industry, medical research, practice and teaching, drug regulation and reimbursement as well as related finance and consulting markets.

Apress: A technical publisher of high-quality, practical content including over 3000 titles for IT professionals, software developers, programmers and business leaders around the world.

How a Mathematician Started Making Movies

Statements: Pioneers and Pathbreakers

How a Mathematician Started Making Movies

Michele Emmer

ABSTRACT: The author’s father, Luciano Emmer, was an Italian filmmaker who made feature movies and documentaries on art from the 1930s through 2008, one year before his death. Although the author’s interest in films inspired him to write many books and articles on cinema, he knew he would be a mathematician from a young age. After graduating in 1970 and fortuitously working on minimal surfaces (soap bubbles), he had the idea of making a film. It was the start of a film series on art and mathematics, produced by his father and Italian state television. This article tells of the author’s professional life as a mathematician and a filmmaker.

My father, Luciano Emmer, was a famous Italian filmmaker. He made not only movies but also many documentaries on art, for example, a documentary about Picasso in 1954 [1] and one about Leonardo da Vinci [2] that won a Silver Lion ...

... essentially two, possibly three, reasons. The first: In 1976 I was at the University of Trento in northern Italy. I was working in an area called the calculus of variations, in particular, minimal surfaces and capillarity problems [4]. I had graduated from the University of Rome in 1970 and started my career at the University of Ferrara, where I was very lucky to start working with Mario Miranda, the favorite student of Ennio De Giorgi. At that time, I also met Enrico Giusti and Enrico Bombieri. It was the period of the investigations of partial differential equations, the calculus of variations and the perimeter theory (which Renato Caccioppoli first introduced in the 1950s and De Giorgi and Miranda then developed [5–7]) at the Italian school Scuola Normale Superiore of Pisa.

Scientific American

ScientificAmerican.com, AUGUST 2014. © 2014 Scientific American

On the cover: The Black Hole at the Beginning of Time. Do we live in a holographic mirage from another dimension? CYBERSECURITY: 3-Part Plan for Big Data. NEUROSCIENCE: Accidental Genius. EDUCATION: The Science of Learning.

EDUCATION

The Science of Learning

Researchers are using tools borrowed from medicine and economics to figure out what works best in the classroom. But the results aren’t making it into schools

By Barbara Kantrowitz. Photograph by Fredrik Broden

IN BRIEF

Researchers are conducting hundreds of experiments in an effort to bring more rigorous science to U.S. schools.

The movement started with former president George W. Bush’s No Child Left Behind Act and has continued under President Barack Obama.

Using emerging technology and new methods of data analysis, researchers are undertaking studies that would have been impossible even 10 years ago.

The new research is challenging widely held beliefs, such as that teachers should be judged primarily on the basis of their academic credentials, that classroom size is paramount, and that students need detailed instructions to learn.

Anna Fisher was leading an undergraduate seminar on the subject of attention and distractibility in young children when she noticed that the walls of her classroom were bare. That got her thinking about kindergarten classrooms, which are typically decorated with cheerful posters, multicolored maps, charts and artwork. What effect, she wondered, does all that visual stimulation have on children, who are far more susceptible to distraction than her students at Carnegie Mellon University? Do the decorations affect youngsters’ ability to learn?

To find out, Fisher’s graduate student Karrie Godwin designed an experiment involving kindergartners at Carnegie Mellon’s Children’s School, a campus laboratory school.

Barbara Kantrowitz is a senior editor at the Hechinger Report, a nonprofit news organization

Oral and Whole Body Health

• Mouth - Body CONNECTIONS
• The Facts and Fictions of INFLAMMATION
• PREGNANCY and PERIODONTAL DISEASE
• Linking DIABETES, OBESITY and INFECTION
• Reflections from a SURGEON GENERAL
• HEALTH POLICY of the Future
• Blurring the DOCTOR-DENTIST Barrier

A custom publication production in collaboration with the Procter & Gamble Company

The mouth speaks for the body. We speak for the mouth.

Crest and Oral-B. Together for the first time. Dedicated to uncovering all the links between good oral health and whole-body wellness.

Today, the combined company of Crest and Oral-B exceeds more than 100 years of innovation, information, and expertise. This combined heritage, coupled with a shared dedication to improving outcomes for dental professionals and patients alike, puts the new company of Crest and Oral-B at the forefront of breakthrough science and oral health solutions. Crest and Oral-B are passionately committed to evolving the field of oral health and its relationship to whole-body wellness. Do we have all the answers today? No. Are we collecting evidence to suggest that there are some important links between oral and systemic health? Absolutely. Join us on the journey as we work to uncover the most critical information regarding the important role good oral health can play in achieving whole-body wellness.

Dedicated to healthy lifesmiles. © 2006 P&G

SCIENTIFIC AMERICAN PRESENTS: ORAL AND WHOLE BODY HEALTH

INTRODUCTION » OUR MOUTHS, OURSELVES. As the relationship between the mouth and the rest of the body becomes clearer, it is changing the way dentists, doctors and patients view oral health. BY SHARON GUYNUP

INVADERS AND THE BODY’S DEFENSES. Gum disease illustrates how local infections may have systemic consequences.

Scientific American, April 2019

ScientificAmerican.com, APRIL 2019, VOLUME 320, NUMBER 4. © 2019 Scientific American

On the cover: MIND READER. A new brain-machine interface detects what the user wants. ORGAN REPAIRS: The forgotten compound that can repair damaged tissue. DENGUE DEBACLE: A vaccination program gone wrong. HOW EELS GET ELECTRIC: Insights into their shocking attack mechanisms. PLUS QUANTUM GRAVITY IN A LAB: Could new experiments pull it off?

NEUROTECH: The Intention Machine. A new generation of brain-machine interface can deduce what a person wants. By Richard Andersen

INFRASTRUCTURE: Beyond Seawalls. Fortified wetlands and oyster reefs can protect shorelines better than hard structures. By Rowan Jacobsen

PUBLIC HEALTH: The Dengue Debacle. In April 2016 children in the Philippines began receiving the world’s first dengue vaccine. Almost two years later new research showed that the vaccine was risky for many kids. The campaign ground to a halt, and the public exploded in outrage.

PHYSICS: Quantum Gravity in the Lab. Novel experiments could test the quantum nature of gravity on a tabletop. By Tim Folger

MEDICINE: A Shot at Regeneration. A once forgotten drug compound could rebuild damaged organs. By Kevin Strange and Viravuth Yin

ANIMAL PHYSIOLOGY: Shock and Awe. Understanding the electric eel’s unusual anatomical power. By Kenneth C. Catania

MATHEMATICS: Outsmarting a Virus with Math

ON THE COVER: Tapping into the brain’s neural circuits lets people with spinal cord injuries manipulate computer cursors and robotic limbs. Early studies underline the need for technical advances that make

Scientific American, October, 1988

SCIENTIFIC AMERICAN, OCTOBER 1988, $2.95. A SINGLE-TOPIC ISSUE. © 1988 SCIENTIFIC AMERICAN, INC

In The Best Theaters, The Sound Is As Big As The Picture. From the people who brought you big screen television comes the sound to go with it. Introducing Mitsubishi Home Theater Systems. For the Mitsubishi dealer nearest you, call (800) 556-1234, ext. 145. In California, (800) 441-2345, ext. 145. © 1988 Mitsubishi Electric Sales America, Inc. MITSUBISHI

SCIENTIFIC AMERICAN, October 1988, Volume 259, Number 4

AIDS in 1988, by Robert C. Gallo and Luc Montagnier. Where do we stand? What are the key areas of current research? The prospects for therapy or a vaccine? In their first collaborative article the two investigators who established the cause of AIDS answer these questions and tell how HIV was isolated and linked to AIDS.

The Molecular Biology of the AIDS Virus, by William A. Haseltine and Flossie Wong-Staal. Just three viral genes can direct the machinery of an infected cell to make a new HIV particle, provided that at least three other viral genes give the go-ahead. These regulatory genes give the virus its protean behavioral repertoire: they spur viral replication, hold it in check or bring it to a halt.

The Origins of the AIDS Virus, by Max Essex and Phyllis J. Kanki. The AIDS virus has a past and it has relatives. An inquiry into its family history can reveal how the related viruses interact with human beings and monkeys. The inquiry may also uncover vulnerabilities: some forms of the virus have evolved toward disease-free coexistence with their hosts.

Genetic Vaccines Against COVID-19: Hope or Risk?

TRIBUNE: COVID-19

Genetic vaccines against COVID-19: hope or risk?

Clemens Arvay, Dipl.-Ing., biologist, freelance author, Graz

It is often assumed that global social life will return to normal only once a vaccine against SARS-CoV-2 is available. Almost half of the vaccine candidates are genetic vaccines, which carry a number of health risks.

The biology of SARS-CoV-2

SARS-CoV-2 belongs to the coronavirus family (Coronaviridae). In these viruses the genome is present not as DNA (deoxyribonucleic acid) but as RNA (ribonucleic acid). The virions of the pathogen, about 120 nm in diameter, consist of a lipid bilayer envelope with membrane and spike proteins, inside which the genomic RNA (vRNA, for "viral RNA") is arranged in the nucleocapsid, ring-shaped in cross-section [1].

After entry into the host cell, messenger RNA (mRNA) is expressed there; it consists of single-stranded transcripts of sections of the viral RNA. Through interventions in peripheral genetic processes in the host cell, the messenger RNA is translated at the ribosomes, where protein biosynthesis takes place. As a result, viral proteins are synthesized [2].

Gene-based vaccines

Many experts assume that our everyday life will return to normal only once an effective vaccine against SARS-CoV-2 is available [3]. Among the candidates is a significant proportion of gene-based vaccines, that is, vaccines built on the transduction of nucleic acids into the human target cell [4]. Pharmaceutical companies have registered 18 RNA-based and 11 DNA-based vaccine studies with the World Health Organization (WHO) for immunization against COVID-19 (out of 132) [5].

[Figure label: RNA vaccines (18), 13.64%]

Spektrum der Wissenschaft, November 2008

READERS' VOICES

"Spektrum der Wissenschaft", "Sterne und Weltraum", "Gehirn&Geist", "epoc" and, of course, the online newspaper "spektrumdirekt": at present about 65 staff members produce, promote and distribute the publisher's magazines. Most of them are gathered in this photo.

Upper gallery, from left to right: Reinhard Breuer, Martin Neumann, Uwe Reichert, Joachim Schüring, Robin Gerst, Andreas Jahn, Carsten Könneker, Ursula Wessels, Christiane Gelitz, Dominik Wigger, Klaus-Dieter Linsmeier, Erika Eschwei, Holger Schmidt, Rabea Rentschler, Markus Schlierfstein, Christina Meyberg, Daniel Lingenhöhl, Anke Lingg, Christian Wolf, Sandra Czaja, Steve Ayan, Antje Findeklee, Alice Krüssmann, Annette Baumbusch, Eva Kahlmann, Claus Schäfer, Anke Heinzelmann, Axel Quetz, Thilo Körkel, Karsten Kramarczik (with editorial office dog Cooper), Marc Grove, Oliver Gabriel.

Staircase, from left to right: Hartwig Hanser, Adelheid Stahnke, Sabine Häusser, Christoph Pöppe, Vera Spillner, Markus Bossle, Anja Blänsdorf, Richard Zinken, Frank Olheide, Karin Schmidt, Elisabeth Stachura, Anja Albat-Nollau, Christa Winter-Fedke, Sibylle Franz, Ronald Berger, Thomas Bleck, Inge Hoefer, Katja Gaschler, Jan Hattenbach, Gerhard Trageser, Michael Springer.

Not pictured: Tilmann Althaus, Süleyman Ciçek, Ann-Kristin Ebert, Britta Feuerstein, Martin Huhn, Andrea Jamerson, Ilona Keith, Barbara Kuhn, Jan Osterkamp, Michaela Pyrlik, Gabriela Rabe, Marianne Reger, Elke Reinecke, Christoph Roloff, Natalie Schäfer, Günther Schultz, Sigrid Spies, Stefan Taube, Anke Walter, Bärbel Wehner, Katharina Werle

Photo: Spektrum der Wissenschaft / Manfred Zentsch

Thank you!

SPEKTRUM DER WISSENSCHAFT · NOVEMBER 2008

The extra page, for subscribers only: At www.spektrum-plus.de we have many benefits exclusively for our subscribers ...

Dear readers, we are celebrating: the 30th anniversary of "Spektrum der Wissen ...

"Spektrum" has changed over these three decades, and not only in its design.