Dispatch/Execute Unit, Retire Unit, Instruction Pool, Fetch/Decode Unit

Recommended publications

AMD Athlon™ Processor X86 Code Optimization Guide

AMD Athlon™ Processor x86 Code Optimization Guide © 2000 Advanced Micro Devices, Inc. All rights reserved. The contents of this document are provided in connection with Advanced Micro Devices, Inc. (“AMD”) products. AMD makes no representations or warranties with respect to the accuracy or completeness of the contents of this publication and reserves the right to make changes to specifications and product descriptions at any time without notice. No license, whether express, implied, arising by estoppel or otherwise, to any intellectual property rights is granted by this publication. Except as set forth in AMD’s Standard Terms and Conditions of Sale, AMD assumes no liability whatsoever, and disclaims any express or implied warranty, relating to its products including, but not limited to, the implied warranty of merchantability, fitness for a particular purpose, or infringement of any intellectual property right. AMD’s products are not designed, intended, authorized or warranted for use as components in systems intended for surgical implant into the body, or in other applications intended to support or sustain life, or in any other application in which the failure of AMD’s product could create a situation where personal injury, death, or severe property or environmental damage may occur. AMD reserves the right to discontinue or make changes to its products at any time without notice.

Trademarks: AMD, the AMD logo, AMD Athlon, K6, 3DNow!, and combinations thereof, AMD-751, K86, and Super7 are trademarks, and AMD-K6 is a registered trademark of Advanced Micro Devices, Inc. Microsoft, Windows, and Windows NT are registered trademarks of Microsoft Corporation.

IEEE Paper Template in A4

Vasantha.N.S. et al, International Journal of Computer Science and Mobile Computing (IJCSMC), Vol. 6, Issue 6, June 2017, pg. 302-306. Available online at www.ijcsmc.com. A Monthly Journal of Computer Science and Information Technology, ISSN 2320–088X, Impact Factor: 6.017.

Enhancing Performance in Multiple Execution Unit Architecture using Tomasulo Algorithm. Vasantha.N.S. (Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India), Meghana Kulkarni (Associate Professor, Department of VLSI and Embedded Systems, Centre for PG Studies, VTU Belagavi, India).

Abstract: Tomasulo’s algorithm is a computer architecture hardware algorithm for dynamic scheduling of instructions that allows out-of-order execution, designed to efficiently utilize multiple execution units. It was developed by Robert Tomasulo at IBM. The major innovations of Tomasulo’s algorithm include register renaming in hardware. It also uses reservation stations for all execution units, and a common data bus (CDB) on which computed values are broadcast to all reservation stations that may need them. The algorithm allows for improved parallel execution of instructions that would otherwise stall under earlier algorithms such as scoreboarding.

Keywords: Reservation Station, Register renaming, common data bus, multiple execution unit, register file

I. INTRODUCTION The instructions in a program may be executed in either of two ways: sequential order or data-flow order. In sequential order the instructions are executed one after the other, but in reality this flow is very rare in programs.

Computer Science 246 Computer Architecture Spring 2010 Harvard University

Computer Science 246 Computer Architecture, Spring 2010, Harvard University. Instructor: Prof. David Brooks. Dynamic Branch Prediction, Speculation, and Multiple Issue.

Lecture Outline
• Tomasulo’s Algorithm Review (3.1-3.3)
• Pointer-Based Renaming (MIPS R10000)
• Dynamic Branch Prediction (3.4)
• Other Front-end Optimizations (3.5)
  – Branch Target Buffers/Return Address Stack

Tomasulo Review
• Reservation Stations
  – Distribute RAW hazard detection
  – Renaming eliminates WAW hazards
  – Buffering values in Reservation Stations removes WARs
  – Tag match in CDB requires many associative compares
• Common Data Bus
  – Achilles heel of Tomasulo
  – Multiple writebacks (multiple CDBs) expensive
• Load/Store reordering
  – Load address compared with store address in store buffer

[Figure: Tomasulo Organization. Labels: From Mem, FP Op Queue, FP Registers, Load Buffers (Load1 to Load6), Store Buffers, Reservation Stations (Add1, Add2, Add3, Mult1, Mult2), To Mem, FP adders, FP multipliers, Common Data Bus (CDB)]

Tomasulo Review (pipeline stages over cycles 1 to 20; the column alignment of the original chart is not preserved)
LD F0, 0(R1): Iss M1 M2 M3 M4 M5 M6 M7 M8 Wb
MUL F4, F0, F2: Iss Iss Iss Iss Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb
SD 0(R1), F0: Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2 M3 Wb
SUBI R1, R1, 8: Iss Ex Wb
BNEZ R1, Loop: Iss Ex Wb
LD F0, 0(R1): Iss Iss Iss Iss M Wb
MUL F4, F0, F2: Iss Iss Iss Iss Iss Ex Ex Ex Ex Wb
SD 0(R1), F0: Iss Iss Iss Iss Iss Iss Iss Iss Iss M1 M2

Dynamic Scheduling

Dynamically-Scheduled Machines

• In a statically-scheduled machine, the compiler schedules all instructions to avoid data hazards: the ID unit may require that instructions can issue together without hazards, otherwise the ID unit inserts stalls until the hazards clear.
• This section deals with dynamically-scheduled machines, where hardware-based techniques are used to detect and remove avoidable data hazards automatically, to allow "out-of-order" execution and improve performance.
• Dynamic scheduling is used in the Pentium III and 4, the AMD Athlon, the MIPS R10000, the SUN Ultra-SPARC III, the IBM Power chips, the IBM/Motorola PowerPC, the HP Alpha 21264, and the Intel Dual-Core and Quad-Core processors.
• In contrast, static multiple-issue with compiler-based scheduling is used in the Intel IA-64 Itanium architectures.
• In 2007, the dual-core and quad-core Intel processors use the Pentium 5 family of dynamically-scheduled processors. The Itanium has had a hard time gaining market share.

(Ch. 6, Advanced Pipelining-DYNAMIC, slide 1, © Ted Szymanski)

Dynamic Scheduling - the Idea (class text - pg 168, 171, 5th ed.)

    DIVD  F0, F2, F4
    ADDD  F10, F0, F8     - data hazard, stall issue for 23 cc
    SUBD  F12, F8, F14    - SUBD inherits 23 cc of stalls

• ADDD depends on DIVD; in a statically-scheduled machine, the ID unit detects the hazard and causes the basic pipeline to stall for 23 cc.
• The SUBD instruction cannot execute because the pipeline has stalled, even though SUBD does not logically depend upon either previous instruction.
• Suppose the machine architecture were re-organized to let the SUBD and subsequent instructions "bypass" the previous stalled instruction (the ADDD) and proceed with execution. We would then allow "out-of-order" execution.
• However, out-of-order execution would allow out-of-order completion, which may allow RW (Read-Write) and WW (Write-Write) data hazards.
• RW and WW hazards occur when the reads/writes complete in the wrong order, destroying the data.
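The DIVD/ADDD/SUBD example above can be sketched as a toy timing model. This is only an illustration under stated assumptions, not the slide author's model: the `Instr` type, the `start_cycles` function, the one-instruction-per-cycle issue rate, and the latencies (24 cycles for DIVD, chosen so that the stall comes out at the 23 cc quoted above; 2 cycles for the adds) are all hypothetical. RW and WW hazards are deliberately ignored.

```python
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    dest: str
    srcs: tuple
    latency: int  # assumed execution latency in cycles (hypothetical values)


PROGRAM = [
    Instr("DIVD", "F0", ("F2", "F4"), 24),
    Instr("ADDD", "F10", ("F0", "F8"), 2),
    Instr("SUBD", "F12", ("F8", "F14"), 2),
]


def start_cycles(program, in_order):
    """Return {instruction name: cycle in which it starts executing}.

    Only true (read-after-write) dependences are modelled.
    """
    ready_at = {}   # register -> first cycle in which its new value can be read
    starts = {}
    prev_start = 0
    for position, ins in enumerate(program):
        earliest = position + 1  # assume one instruction is fetched per cycle
        operands_ready = max((ready_at.get(r, 0) for r in ins.srcs), default=0)
        start = max(earliest, operands_ready)
        if in_order:
            # A younger instruction may not pass an older, stalled one.
            start = max(start, prev_start + 1)
        starts[ins.name] = start
        ready_at[ins.dest] = start + ins.latency
        prev_start = start
    return starts


if __name__ == "__main__":
    print("in-order:    ", start_cycles(PROGRAM, in_order=True))
    print("out-of-order:", start_cycles(PROGRAM, in_order=False))
```

Under these assumptions ADDD waits 23 cycles for F0 in both modes, while SUBD inherits that wait only in the in-order case; with out-of-order start it proceeds as soon as it is fetched.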

United States Patent 5,680,565 (Glew et al.)

United States Patent. Patent Number: 5,680,565. Glew et al. Date of Patent: Oct. 21, 1997.

Title: METHOD AND APPARATUS FOR PERFORMING PAGE TABLE WALKS IN A MICROPROCESSOR CAPABLE OF PROCESSING SPECULATIVE INSTRUCTIONS

Inventors: Andy Glew, Hillsboro; Glenn Hinton; Haitham Akkary, both of Portland, all of Oreg.
Assignee: Intel Corporation, Santa Clara, Calif.
Appl. No.: 176,363
Filed: Dec. 30, 1993
Int. Cl.: G06F 12/12
U.S. Cl.: 395/415; 395/800; 395/383
Field of Search: 395/400, 375, 395/800, 414, 415, 421.03
Primary Examiner: David K. Moore
Assistant Examiner: Kevin Verbrugge
Attorney, Agent, or Firm: Blakely, Sokoloff, Taylor & Zafman

References Cited, U.S. Patent Documents:
5,136,697 8/1992 Johnson, 395/586
5,226,126 7/1993 McFarland et al., 395/394
5,230,068 7/1993 Van Dyke et al.

Other publications:
Diefendorff, "Organization of the Motorola 88110 Superscalar RISC Microprocessor," IEEE Micro, Apr. 1996, pp. 40-63.
Yeager, Kenneth C., "The MIPS R10000 Superscalar Microprocessor," IEEE Micro, Apr. 1996, pp. 28-40.
Smotherman et al., "Instruction Scheduling for the Motorola 88110," Microarchitecture 1993 International Symposium, pp. 257-262.
Circello et al., "The Motorola 68060 Microprocessor," COMPCON IEEE Comp. Soc. Int'l Conf., Spring 1993, pp. 73-78.
"Superscalar Microprocessor Design" by Mike Johnson, Advanced Micro Devices, Prentice Hall, 1991.
Popescu, et al., "The Metaflow Architecture," IEEE Micro, pp. 10-13 and 63-73, Jun. 1991.

ABSTRACT: A page table walk is performed in response to a data translation lookaside buffer miss based on a speculative

Identifying Bottlenecks in a Multithreaded Superscalar

Identifying Bottlenecks in a Multithreaded Superscalar Microprocessor. Ulrich Sigmund and Theo Ungerer. VIONA Development GmbH, Karlstr, D Karlsruhe, Germany; University of Karlsruhe, Dept. of Computer Design and Fault Tolerance, D Karlsruhe, Germany.

Abstract. This paper presents a multithreaded superscalar processor that permits several threads to issue instructions to the execution units of a wide superscalar processor in a single cycle. Instructions can simultaneously be issued from up to threads with a total issue bandwidth of instructions per cycle. Our results show that the threaded issue processor reaches a throughput of instructions per cycle.

Introduction. Current microprocessors utilize instruction-level parallelism by a deep processor pipeline and by the superscalar technique that issues up to four instructions per cycle from a single thread. VLSI technology will allow future generations of microprocessors to exploit instruction-level parallelism up to instructions per cycle or more. However, the instruction-level parallelism found in a conventional instruction stream is limited. The solution is the additional utilization of more coarse-grained parallelism. The main approaches are the multiprocessor chip and the multithreaded processor. The multiprocessor chip integrates two or more complete processors on a single chip. Therefore every unit of a processor is duplicated and used independently of its copies on the chip.

Benchmark Evaluations of Modern Multi-Processor VLSI DSPµPs

Session 1220. Benchmark Evaluations of Modern Multi-Processor VLSI DSPµPs. Aaron L. Robinson and Fred O. Simons, Jr., High-Performance Computing and Simulation (HCS) Research Laboratory, Electrical Engineering Department, Florida A&M University and Florida State University, Tallahassee, FL 32316-2175.

Abstract - The authors continue their tradition of presenting technical reviews and performance comparisons of the newest multi-processor VLSI DSPµPs, with the intention of providing concise, focused analyses designed to help established or aspiring DSP analysts evaluate the applicability of new DSP technology to their specific applications. As in the past, the Analog Devices SHARC™ and Texas Instruments TMS320C80 families of DSPµPs will be the focus of our presentation because these manufacturers continue to push the envelope of new DSPµP (Digital Signal Processing microProcessor) development. However, in addition to the standard performance analyses and benchmark evaluations, the authors will present a new image-processing benchmarking technique designed specifically for evaluating new DSPµP image processing capabilities.

1. Introduction. The combination of constantly evolving DSP algorithm development and continually advancing DSPµP hardware has formed the basis for an exponential growth in DSP applications. The increased market demand for these applications and their required hardware has resulted in the production of DSPµPs with very powerful components capable of performing a wide range of complicated operations. Due to the complicated architectures of these new processors, it would be time consuming for even experienced DSP analysts to review and evaluate these new DSPµPs. Furthermore, inexperienced DSP analysts would find it even more time consuming, and possibly very difficult, to appreciate the significance of and opportunities for these new components.

Soft Machines Targets IPC Bottleneck

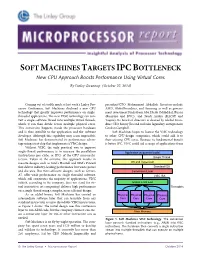

SOFT MACHINES TARGETS IPC BOTTLENECK. New CPU Approach Boosts Performance Using Virtual Cores. By Linley Gwennap (October 27, 2014)

Coming out of stealth mode at last week’s Linley Processor Conference, Soft Machines disclosed a new CPU technology that greatly improves performance on single-threaded applications. The new VISC technology can convert a single software thread into multiple virtual threads, which it can then divide across multiple physical cores. This conversion happens inside the processor hardware and is thus invisible to the application and the software developer. Although this capability may seem impossible, Soft Machines has demonstrated its performance advantage using a test chip that implements a VISC design.

Without VISC, the only practical way to improve single-thread performance is to increase the parallelism (instructions per cycle, or IPC) of the CPU microarchitecture. Taken to the extreme, this approach results in massive designs such as Intel’s Haswell and IBM’s Power8 that deliver industry-leading performance but waste power and die area.

… president/CTO Mohammad Abdallah. Investors include AMD, GlobalFoundries, and Samsung as well as government investment funds from Abu Dhabi (Mubadala), Russia (Rusnano and RVC), and Saudi Arabia (KACST and Taqnia). Its board of directors is chaired by GlobalFoundries CEO Sanjay Jha and includes legendary entrepreneur Gordon Campbell.

Soft Machines hopes to license the VISC technology to other CPU-design companies, which could add it to their existing CPU cores. Because its fundamental benefit is better IPC, VISC could aid a range of applications from

[Figure labels: Application (sequential code), Single Thread, OS and Hypervisor, Standard ISA]

Micro-Circuits for High Energy Physics*

MICRO-CIRCUITS FOR HIGH ENERGY PHYSICS* Paul F. Kunz, Stanford Linear Accelerator Center, Stanford University, Stanford, California, U.S.A.

ABSTRACT. Microprogramming is an inherently elegant method for implementing many digital systems. It is a mixture of hardware and software techniques with the logic subsystems controlled by "instructions" stored in a memory. In the past, designing microprogrammed systems was difficult, tedious, and expensive because the available components were capable of only a limited number of functions. Today, however, large blocks of microprogrammed systems have been incorporated into a single I.C., thus microprogramming has become a simple, practical method.

1. INTRODUCTION

1.1 BRIEF HISTORY OF MICROCIRCUITS

The first question which arises when one talks about microcircuits is: What is a microcircuit? The answer is simple: a complete circuit within a single integrated-circuit (I.C.) package or chip. The next question one might ask is: What circuits are available? The answer to this question is also simple: it depends. It depends on the economics of the circuit for the semiconductor manufacturer, which depends on the technology he uses, which in turn changes as a function of time. Thus to understand what microcircuits are available today and what makes them different from those of yesterday it is interesting to look into the economics of producing microcircuits. The basic element in a logic circuit is a gate, which is a circuit with a number of inputs and one output and it performs a basic logical function such as AND, OR, or NOT.

Figure 1: Basic TTL Gate

| A input | B input | C output |
|---------|---------|----------|
| false   | false   | true     |
| false   | true    | true     |
| true    | false   | true     |
| true    | true    | false    |

Figure 2: Truth Table for NAND Gate.

Figure 3: Logical NOT Circuit.

Patent Application Publication US 2011/0231717 A1 (HUR et al.)

US 20110231717A1
(19) United States
(12) Patent Application Publication, HUR et al.
(10) Pub. No.: US 2011/0231717 A1
(43) Pub. Date: Sep. 22, 2011
(54) SEMICONDUCTOR MEMORY DEVICE
(76) Inventors: Hwang HUR, Kyoungki-do (KR); Chang-Ho Do, Kyoungki-do (KR); Jae-Bum Ko, Kyoungki-do (KR); Jin-Il Chung, Kyoungki-do (KR)
(21) Appl. No.: 13/149,683
(22) Filed: May 31, 2011
Related U.S. Application Data
(62) Division of application No. 12/154,870, filed on May 28, 2008, now Pat. No. 7,979,758.
(30) Foreign Application Priority Data: Feb. 29, 2008 (KR) 2008-0018761
Publication Classification
(52) U.S. Cl.: 714/718; 714/E11.145
(57) ABSTRACT: Semiconductor memory device includes a cell array including a plurality of unit cells; and a test circuit configured to perform a built-in self-stress (BISS) test for detecting a defect by performing a plurality of internal operations including a write operation through an access to the unit cells using a plurality of patterns during a test procedure carried out at a wafer-level.

Computer Architecture Out-Of-Order Execution

Computer Architecture: Out-of-order Execution. By Yoav Etsion, with acknowledgement to Dan Tsafrir, Avi Mendelson, Lihu Rappoport, and Adi Yoaz. Computer Architecture 2013 – Out-of-Order Execution

The need for speed: Superscalar
• Remember our goal: minimize CPU Time
  CPU Time = duration of clock cycle × CPI × IC
• So far we have learned that in order to
  – Minimize clock cycle ⇒ add more pipe stages
  – Minimize CPI ⇒ utilize pipeline
  – Minimize IC ⇒ change/improve the architecture
• Why not make the pipeline deeper and deeper?
  – Beyond some point, adding more pipe stages doesn’t help, because control/data hazards increase and become costlier
  – (Recall that in a pipelined CPU, CPI = 1 only without hazards)
• So what can we do next?
  – Reduce the CPI by utilizing ILP (instruction-level parallelism)
  – We will need to duplicate HW for this purpose…

A simple superscalar CPU
• Duplicates the pipeline to accommodate ILP (IPC > 1)
• Note that duplicating HW in just one pipe stage doesn’t help; e.g., when having 2 ALUs, the bottleneck moves to other stages (IF ID EXE MEM WB)
• Conclusion: getting IPC > 1 requires fetching, decoding, executing, and retiring more than one instruction per clock (IF ID EXE MEM WB)

Example: Pentium Processor
• Pentium fetches & decodes 2 instructions per cycle
• Before register file read, decide on pairing: can the two instructions be executed in parallel? (yes/no) [Figure labels: u-pipe, v-pipe, IF, ID]
• Pairing decision is based… On data

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design, Fall 2013. Performance of a Computer (Chapter 4). Vishwani D. Agrawal & Victor P. Nelson, Department of Electrical and Computer Engineering, Auburn University, Auburn, AL 36849.

What is Performance?
Response time: the time between the start and completion of a task.
Throughput: the total amount of work done in a given time.
Some performance measures:
MIPS (million instructions per second).
MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS (10¹²), etc.
SPEC (Standard Performance Evaluation Corporation) benchmarks.
LINPACK benchmarks, floating point computing, used for supercomputers.
Synthetic benchmarks.

Small and Large Numbers

| Small | Prefix | Symbol | Large | Prefix | Symbol |
|-------|--------|--------|-------|--------|--------|
| 10⁻³  | milli  | m      | 10³   | kilo   | k      |
| 10⁻⁶  | micro  | μ      | 10⁶   | mega   | M      |
| 10⁻⁹  | nano   | n      | 10⁹   | giga   | G      |
| 10⁻¹² | pico   | p      | 10¹²  | tera   | T      |
| 10⁻¹⁵ | femto  | f      | 10¹⁵  | peta   | P      |
| 10⁻¹⁸ | atto   |        | 10¹⁸  | exa    |        |
| 10⁻²¹ | zepto  |        | 10²¹  | zetta  |        |
| 10⁻²⁴ | yocto  |        | 10²⁴  | yotta  |        |

Computer Memory Size

| Power | Number            | Prefix | bits | bytes |
|-------|-------------------|--------|------|-------|
| 2¹⁰   | 1,024             | K      | Kb   | KB    |
| 2²⁰   | 1,048,576         | M      | Mb   | MB    |
| 2³⁰   | 1,073,741,824     | G      | Gb   | GB    |
| 2⁴⁰   | 1,099,511,627,776 | T      | Tb   | TB    |

Units for Measuring Performance
Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps).
Clock cycle: the period of the hardware clock. Example: one clock cycle lasts 1 nanosecond at a 1 GHz clock frequency (or 1 GHz clock rate).
CPU time = (CPU clock cycles)/(clock rate)
Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction.
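The two relations above (CPU time as clock cycles divided by clock rate, and CPI as the average number of cycles per instruction) can be tried out with a small sketch. The function names and the sample numbers (2,000,000 cycles, 1,000,000 instructions, a 1 GHz clock) are illustrative assumptions, not figures from the lecture.

```python
def cpu_time_seconds(clock_cycles: int, clock_rate_hz: float) -> float:
    """CPU time = (CPU clock cycles) / (clock rate)."""
    return clock_cycles / clock_rate_hz


def cycles_per_instruction(clock_cycles: int, instruction_count: int) -> float:
    """CPI = average number of clock cycles per executed instruction."""
    return clock_cycles / instruction_count


if __name__ == "__main__":
    # Hypothetical workload: values chosen only to make the arithmetic easy.
    cycles = 2_000_000
    instructions = 1_000_000
    clock_rate = 1e9  # 1 GHz, so one cycle lasts 1 ns

    elapsed = cpu_time_seconds(cycles, clock_rate)
    cpi = cycles_per_instruction(cycles, instructions)
    print(f"CPU time: {elapsed * 1e3:.3f} ms, CPI: {cpi:.2f}")
```

With these assumed inputs the program spends 2 ms of CPU time at an average of 2 cycles per instruction; a single cycle at 1 GHz works out to 1 ns, matching the example in the text.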